Head-gaze and eye-gaze input in DirectX

Note

This article relates to the legacy WinRT native APIs. For new native app projects, we recommend using the OpenXR API.

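In Windows Mixed Reality, eye-gaze and head-gaze input is used to determine what the user is looking at. You can use this data to drive primary input models such as head-gaze and commit, and to provide context for different interaction types. The API provides two types of gaze vectors: head-gaze and eye-gaze. Both are provided as a three-dimensional ray with an origin and a direction. Applications can then raycast into their scene, or the real world, and determine what the user is targeting.

Head-gaze represents the direction in which the user's head is pointed. Think of head-gaze as the position and forward direction of the device itself, with the position at the center point between the two displays. Head-gaze is available on all Mixed Reality devices.

Eye-gaze represents the direction in which the user's eyes are looking. The origin is located between the user's eyes. It's available on Mixed Reality devices that include an eye tracking system.

Both head-gaze and eye-gaze rays are accessible through the SpatialPointerPose API. Call SpatialPointerPose::TryGetAtTimestamp to receive a new SpatialPointerPose object at the specified timestamp and coordinate system. This SpatialPointerPose contains a head-gaze origin and direction. If eye tracking is available, it also contains an eye-gaze origin and direction.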
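Whichever ray you use, "raycasting into the scene" comes down to intersecting the gaze origin and direction with your content. The following is a minimal sketch, assuming content is approximated by bounding spheres. The Float3 type and the RaycastSphere function are hypothetical stand-ins written for this example; they aren't part of the Windows API, and a real app would use winrt::Windows::Foundation::Numerics::float3 and its own scene representation.

```cpp
#include <cmath>
#include <optional>

// Hypothetical minimal vector type standing in for Numerics::float3.
struct Float3 { float x, y, z; };

inline Float3 operator-(Float3 a, Float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline float Dot(Float3 a, Float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Returns the distance along a gaze ray to the first hit on a sphere,
// or std::nullopt if the ray misses. 'direction' must be normalized.
std::optional<float> RaycastSphere(Float3 origin, Float3 direction,
                                   Float3 center, float radius)
{
    // Solve |origin + t * direction - center|^2 = radius^2 for t.
    Float3 toOrigin = origin - center;
    float b = Dot(toOrigin, direction);
    float c = Dot(toOrigin, toOrigin) - radius * radius;
    float discriminant = b * b - c;
    if (discriminant < 0.0f)
        return std::nullopt;        // The ray misses the sphere.

    float sqrtD = std::sqrt(discriminant);
    float t = -b - sqrtD;           // Nearer intersection.
    if (t < 0.0f)
        t = -b + sqrtD;             // The origin is inside the sphere.
    if (t < 0.0f)
        return std::nullopt;        // The sphere is behind the user.
    return t;
}
```

To find the targeted object, you would run this test against each candidate and keep the smallest hit distance.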

Device support

| Feature | HoloLens (1st gen) | HoloLens 2 | Immersive headsets |
| --- | --- | --- | --- |
| Head-gaze | ✔️ | ✔️ | ✔️ |
| Eye-gaze | ❌ | ✔️ | ❌ |

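Using head-gaze

To access the head-gaze, start by calling SpatialPointerPose::TryGetAtTimestamp to receive a new SpatialPointerPose object. Pass the following parameters:

- A SpatialCoordinateSystem that represents the coordinate system you want to use for the head-gaze. This is represented by the coordinateSystem variable in the following code. For more information, visit our coordinate systems developer guide.

- A timestamp that represents the exact time of the requested head pose. Typically, you'll use a timestamp that corresponds to the time when the current frame will be displayed. You can get this predicted display timestamp from a HolographicFramePrediction object, which is accessible through the current HolographicFrame. This HolographicFramePrediction object is represented by the prediction variable in the following code.

Once you have a valid SpatialPointerPose, the head position and forward direction are accessible as properties. The following code shows how to access them.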

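```cpp
#include <winrt/Windows.Foundation.Numerics.h>
#include <winrt/Windows.Perception.People.h>
#include <winrt/Windows.UI.Input.Spatial.h>

using namespace winrt::Windows::UI::Input::Spatial;
using namespace winrt::Windows::Foundation::Numerics;

// coordinateSystem and prediction come from your app's per-frame update.
SpatialPointerPose pointerPose = SpatialPointerPose::TryGetAtTimestamp(coordinateSystem, prediction.Timestamp());
if (pointerPose)
{
    float3 headPosition = pointerPose.Head().Position();
    float3 headForwardDirection = pointerPose.Head().ForwardDirection();
    // Do something with the head-gaze
}
```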
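A common use of the head position and forward direction is to place a gaze cursor a fixed distance in front of the user. The sketch below shows only the arithmetic. Float3, Normalize, and CursorPosition are hypothetical helpers, and the 2-meter default distance is an arbitrary assumption rather than a platform value.

```cpp
#include <cmath>

// Hypothetical minimal vector type standing in for Numerics::float3.
struct Float3 { float x, y, z; };

// Returns v scaled to unit length; v is assumed to be non-zero.
inline Float3 Normalize(Float3 v)
{
    float length = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return {v.x / length, v.y / length, v.z / length};
}

// Returns the point 'distance' meters along the head-gaze ray.
inline Float3 CursorPosition(Float3 headPosition, Float3 headForward, float distance = 2.0f)
{
    Float3 direction = Normalize(headForward);
    return {headPosition.x + direction.x * distance,
            headPosition.y + direction.y * distance,
            headPosition.z + direction.z * distance};
}
```

Normalizing the forward vector is defensive; it keeps the cursor at the requested distance even if the input direction isn't unit length.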

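Using eye-gaze

For your users to use eye-gaze input, each user has to go through an eye tracking user calibration the first time they use the device. The eye-gaze API is similar to the head-gaze API. It uses the same SpatialPointerPose API, which provides a ray origin and direction that you can raycast against your scene. The only difference is that you need to explicitly enable eye tracking before using it:

- Request the user's permission to use eye tracking in your app.

- Enable the "Gaze Input" capability in your package manifest.

Requesting access to eye-gaze input

When your app is starting up, call EyesPose::RequestAccessAsync to request access to eye tracking. The system prompts the user if needed and returns GazeInputAccessStatus::Allowed once access has been granted. This is an asynchronous call, so it requires a bit of extra management. The following example spins up a detached std::thread to wait for the result, which it stores in a member variable called m_isEyeTrackingEnabled.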

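```cpp
#include <thread>
#include <winrt/Windows.Perception.People.h>
#include <winrt/Windows.UI.Input.h>

using namespace winrt::Windows::Perception::People;
using namespace winrt::Windows::UI::Input;

// m_isEyeTrackingEnabled is written on another thread, so declare it as
// std::atomic<bool> (or otherwise synchronize access to it).
std::thread requestAccessThread([this]()
{
    // Blocking on .get() is acceptable here because this is a background thread.
    auto status = EyesPose::RequestAccessAsync().get();
    m_isEyeTrackingEnabled = (status == GazeInputAccessStatus::Allowed);
});

requestAccessThread.detach();
```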
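Because the result is written on a background thread while the render loop reads it, the flag needs to be thread-safe. Here's a portable sketch of the same pattern using std::atomic<bool>. The AccessStatus enum is a local mirror of GazeInputAccessStatus's four values, and the request callback is a hypothetical stand-in for EyesPose::RequestAccessAsync().get(), so the logic compiles without the Windows SDK.

```cpp
#include <atomic>
#include <functional>
#include <thread>

// Local mirror of Windows::UI::Input::GazeInputAccessStatus (hypothetical copy).
enum class AccessStatus { Unspecified, Allowed, DeniedByUser, DeniedBySystem };

class EyeTrackingState
{
public:
    // 'request' stands in for EyesPose::RequestAccessAsync().get() and may block.
    // The caller decides whether to join or detach the returned thread.
    std::thread StartRequest(std::function<AccessStatus()> request)
    {
        return std::thread([this, request]()
        {
            AccessStatus status = request();
            m_isEyeTrackingEnabled.store(status == AccessStatus::Allowed);
            m_requestCompleted.store(true);
        });
    }

    // Safe to call from the render loop while the request is in progress.
    bool IsEyeTrackingEnabled() const { return m_isEyeTrackingEnabled.load(); }
    bool IsRequestCompleted() const { return m_requestCompleted.load(); }

private:
    std::atomic<bool> m_isEyeTrackingEnabled{false};
    std::atomic<bool> m_requestCompleted{false};
};
```

Returning the std::thread instead of detaching it inside the class makes the lifetime explicit: if you detach it, as the example above does, the object captured as `this` must outlive the request.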

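Spinning up a detached thread is just one option for handling async calls. You could also use the co_await support in C++/WinRT. Here's another example of asking for user permission, which uses a PPL task continuation (Concurrency::create_task) instead:

- EyesPose::IsSupported() lets the app trigger the permission dialog only if an eye tracker is present.

- The cached GazeInputAccessStatus m_gazeInputAccessStatus prevents the permission prompt from popping up over and over again.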

```cpp
// Member variable. Initialize it so that the first check below asks for access;
// afterward, it prevents popping up the permission prompt over and over again.
GazeInputAccessStatus m_gazeInputAccessStatus = GazeInputAccessStatus::Unspecified;

// This triggers the permission prompt for the user.
// Ask for access only if there is a corresponding device and a registry flag didn't disable it.
if (Windows::Perception::People::EyesPose::IsSupported() &&
    (m_gazeInputAccessStatus == GazeInputAccessStatus::Unspecified))
{
    Concurrency::create_task(Windows::Perception::People::EyesPose::RequestAccessAsync()).then(
        [this](GazeInputAccessStatus status)
    {
        // GazeInputAccessStatus::{Allowed, DeniedBySystem, DeniedByUser, Unspecified}
        m_gazeInputAccessStatus = status;

        // Make sure not to ask again.
        if (status == GazeInputAccessStatus::Unspecified)
        {
            m_gazeInputAccessStatus = GazeInputAccessStatus::DeniedBySystem;
        }
    });
}
```
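The gating logic above can be isolated into a small, testable helper. This is a hypothetical sketch: AccessStatus mirrors GazeInputAccessStatus, and the requestInFlight flag is an addition that isn't in the original snippet. It covers the case where the check runs every frame, since the cached status stays Unspecified until the first request completes and the check would otherwise start a second request.

```cpp
// Local mirror of Windows::UI::Input::GazeInputAccessStatus (hypothetical copy).
enum class AccessStatus { Unspecified, Allowed, DeniedByUser, DeniedBySystem };

struct GazeAccessCache
{
    AccessStatus status = AccessStatus::Unspecified;
    bool requestInFlight = false;   // Hypothetical addition: a request is pending.

    // True only if an eye tracker exists, no request is pending,
    // and the user hasn't been asked yet.
    bool ShouldRequestAccess(bool isEyeTrackingSupported) const
    {
        return isEyeTrackingSupported && !requestInFlight &&
               status == AccessStatus::Unspecified;
    }

    void OnRequestStarted() { requestInFlight = true; }

    // Store the result; treat Unspecified as DeniedBySystem so we never ask again.
    void OnRequestCompleted(AccessStatus result)
    {
        requestInFlight = false;
        status = (result == AccessStatus::Unspecified) ? AccessStatus::DeniedBySystem : result;
    }
};
```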

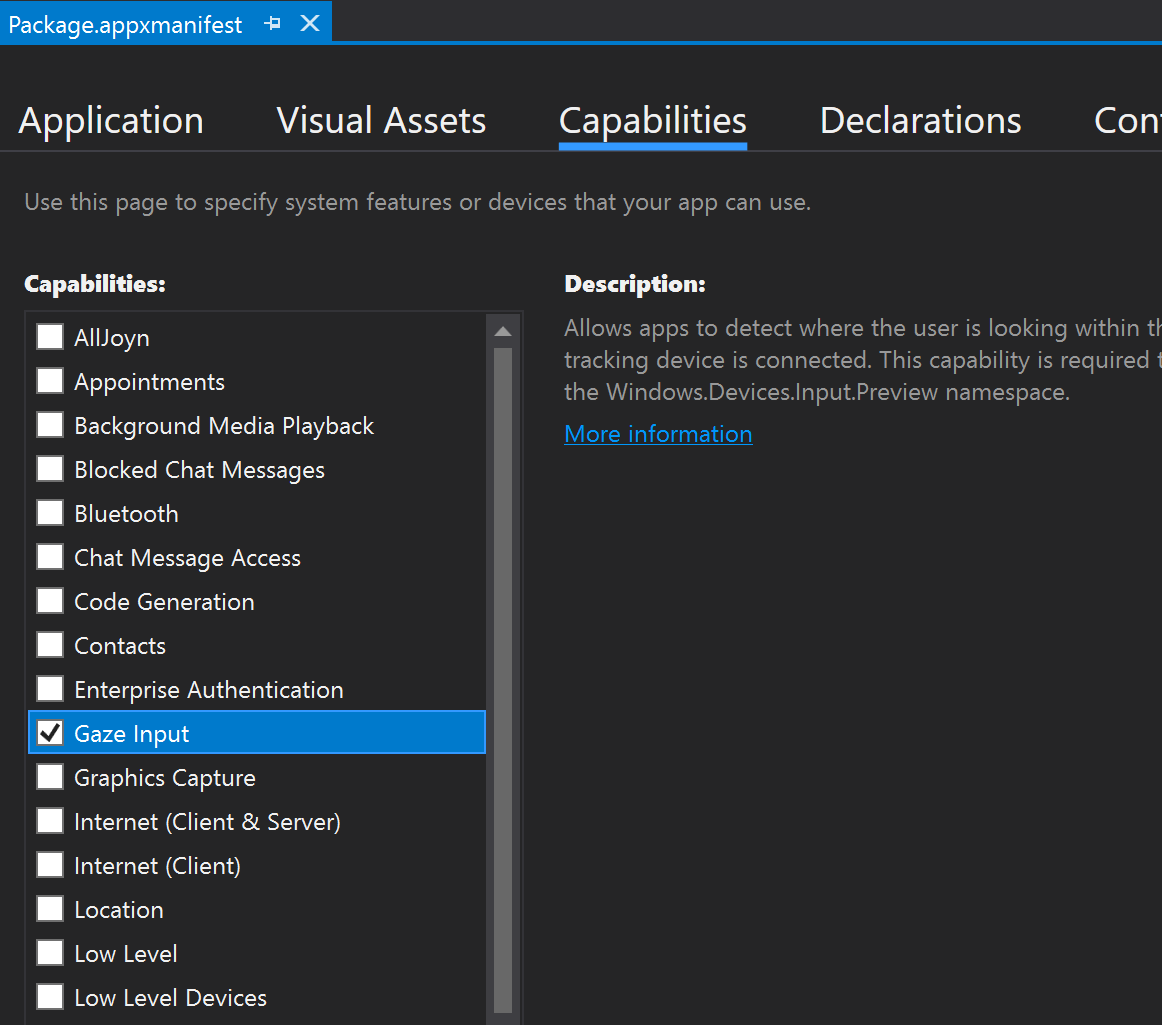

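Declaring the Gaze Input capability

Double-click the appxmanifest file in Solution Explorer. Then navigate to the Capabilities section and check the Gaze Input capability.

This adds the following lines to the Package section of the appxmanifest file: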

```xml
<Capabilities>
  <DeviceCapability Name="gazeInput" />
</Capabilities>
```

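Getting the eye-gaze ray

Once you have received access to eye tracking, you're free to grab the eye-gaze ray every frame. Just as with head-gaze, get the SpatialPointerPose by calling SpatialPointerPose::TryGetAtTimestamp with the desired timestamp and coordinate system. The SpatialPointerPose contains an EyesPose object through the Eyes property, which is non-null only if eye tracking is enabled. From there, you can check whether the user in the device has a valid eye tracking calibration by checking EyesPose::IsCalibrationValid. Next, use the Gaze property to get the SpatialRay that contains the eye-gaze position and direction. The Gaze property can sometimes be null, so be sure to check for this; it can happen, for example, when a calibrated user temporarily closes their eyes.

The following code shows how to access the eye-gaze ray.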

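```cpp
#include <winrt/Windows.Foundation.Numerics.h>
#include <winrt/Windows.Perception.People.h>
#include <winrt/Windows.UI.Input.Spatial.h>

using namespace winrt::Windows::UI::Input::Spatial;
using namespace winrt::Windows::Foundation::Numerics;

SpatialPointerPose pointerPose = SpatialPointerPose::TryGetAtTimestamp(coordinateSystem, prediction.Timestamp());
if (pointerPose)
{
    // Eyes() is null unless eye tracking is enabled for this app.
    auto eyes = pointerPose.Eyes();
    if (eyes && eyes.IsCalibrationValid())
    {
        // Gaze() can be null, for example while a calibrated user briefly closes their eyes.
        if (auto gaze = eyes.Gaze())
        {
            auto spatialRay = gaze.Value();
            float3 eyeGazeOrigin = spatialRay.Origin;
            float3 eyeGazeDirection = spatialRay.Direction;
            // Do something with the eye-gaze
        }
    }
}
```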
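The three nested checks can be collapsed into one helper that returns an optional ray, which also makes the logic testable away from the device. In this sketch, Float3, Ray, and EyesSample are hypothetical mirrors of float3, SpatialRay, and EyesPose; they aren't the real WinRT types.

```cpp
#include <optional>

// Hypothetical mirrors of Numerics::float3 and SpatialRay.
struct Float3 { float x, y, z; };
struct Ray { Float3 origin; Float3 direction; };

// Hypothetical mirror of EyesPose: 'gaze' is empty, for example, while the eyes are closed.
struct EyesSample
{
    bool isCalibrationValid = false;
    std::optional<Ray> gaze;
};

// Returns the eye-gaze ray only if eye data exists (eye tracking is enabled),
// the user is calibrated, and a gaze ray is present for this frame.
std::optional<Ray> TryGetEyeGazeRay(const std::optional<EyesSample>& eyes)
{
    if (!eyes || !eyes->isCalibrationValid)
        return std::nullopt;
    return eyes->gaze;
}
```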

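Fallback when eye tracking isn't available

As mentioned in our eye tracking design docs, both designers and developers should be aware of cases where eye tracking data may not be available.

There are various reasons for data being unavailable:

- The user isn't calibrated.

- The user has denied the app access to their eye tracking data.

- Temporary interference, such as smudges on the HoloLens visor or something occluding the user's eyes.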

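While some of the APIs have already been mentioned in this document, here's a quick-reference summary of how to detect whether eye tracking is available:

- Check that the system supports eye tracking at all by calling the following method: Windows.Perception.People.EyesPose.IsSupported()

- Check that the user is calibrated by reading the following property: Windows.Perception.People.EyesPose.IsCalibrationValid

- Check that the user has given your app permission to use their eye tracking data by retrieving the current GazeInputAccessStatus. For an example of how to do this, see Requesting access to eye-gaze input.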
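The checks above can be folded into one helper that also reports why eye-gaze is unavailable, which can be handy when choosing a fallback or a hint for the user. This is a hypothetical sketch: the three inputs stand for the results of EyesPose::IsSupported(), your cached GazeInputAccessStatus, and EyesPose::IsCalibrationValid, and AccessStatus is a local mirror of GazeInputAccessStatus so the logic compiles without the Windows SDK.

```cpp
// Local mirror of Windows::UI::Input::GazeInputAccessStatus (hypothetical copy).
enum class AccessStatus { Unspecified, Allowed, DeniedByUser, DeniedBySystem };

// Hypothetical result type describing why eye-gaze can or can't be used.
enum class EyeTrackingAvailability { Available, NotSupported, AccessNotGranted, NotCalibrated };

// Checks are ordered from most to least fundamental.
EyeTrackingAvailability CheckEyeTracking(bool isSupported, AccessStatus access, bool isCalibrationValid)
{
    if (!isSupported)
        return EyeTrackingAvailability::NotSupported;
    if (access != AccessStatus::Allowed)
        return EyeTrackingAvailability::AccessNotGranted;
    if (!isCalibrationValid)
        return EyeTrackingAvailability::NotCalibrated;
    return EyeTrackingAvailability::Available;
}
```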

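You may also want to check that your eye tracking data isn't stale by adding a timeout between received eye tracking data updates, and otherwise fall back to head-gaze. For more information, visit our fallback design considerations.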
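A staleness timeout with head-gaze fallback can be sketched as follows. GazeRaySelector, Float3, and Ray are hypothetical names; arrival times use std::chrono::steady_clock, and the timeout value is the caller's choice (the test uses an arbitrary 200 ms). How a real app timestamps eye-gaze updates is left open here.

```cpp
#include <chrono>
#include <optional>

// Hypothetical mirrors of Numerics::float3 and SpatialRay.
struct Float3 { float x, y, z; };
struct Ray { Float3 origin; Float3 direction; };

// Remembers the last eye-gaze ray and when it arrived.
class GazeRaySelector
{
public:
    using Clock = std::chrono::steady_clock;

    explicit GazeRaySelector(std::chrono::milliseconds timeout) : m_timeout(timeout) {}

    // Call whenever a fresh eye-gaze ray is received.
    void OnEyeGaze(const Ray& ray, Clock::time_point now)
    {
        m_lastEyeGaze = ray;
        m_lastUpdate = now;
    }

    // Returns the eye-gaze ray if it's recent enough, otherwise the head-gaze ray.
    Ray Select(const Ray& headGaze, Clock::time_point now) const
    {
        if (m_lastEyeGaze && now - m_lastUpdate <= m_timeout)
            return *m_lastEyeGaze;
        return headGaze;
    }

private:
    std::chrono::milliseconds m_timeout;
    std::optional<Ray> m_lastEyeGaze;
    Clock::time_point m_lastUpdate{};
};
```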

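Correlating gaze with other inputs

Sometimes you may find that you need a SpatialPointerPose that corresponds to an event in the past. For example, if the user performs an Air Tap, your app might want to know what they were looking at. For this purpose, simply using SpatialPointerPose::TryGetAtTimestamp with the predicted frame time would be inaccurate because of the latency between system input processing and display time. Also, when eye-gaze is used for targeting, our eyes tend to move on even before the commit action is finished. This matters less for a simple Air Tap, but becomes more critical when combining long voice commands with fast eye movements. One way to handle this scenario is to make an additional call to SpatialPointerPose::TryGetAtTimestamp, using a historical timestamp that corresponds to the input event.

However, for input that is routed through the SpatialInteractionManager, there's an easier method. SpatialInteractionSourceState has its own TryGetPointerPose function. Calling it provides a perfectly correlated SpatialPointerPose without any guesswork. For more information on working with SpatialInteractionSourceState, see the Hands and motion controllers in DirectX documentation.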
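For input that doesn't go through the SpatialInteractionManager, another option, which is an app-side technique rather than something the WinRT API provides, is to keep a short history of gaze rays and look up the sample closest to the input event's time. In this sketch, GazeHistory, Float3, and Ray are hypothetical names, timestamps use std::chrono::steady_clock, and the capacity is whatever covers your expected input latency.

```cpp
#include <chrono>
#include <cstddef>
#include <deque>
#include <optional>

// Hypothetical mirrors of Numerics::float3 and SpatialRay.
struct Float3 { float x, y, z; };
struct Ray { Float3 origin; Float3 direction; };

// Fixed-size history of timestamped gaze rays.
class GazeHistory
{
public:
    using TimePoint = std::chrono::steady_clock::time_point;

    explicit GazeHistory(std::size_t capacity) : m_capacity(capacity) {}

    // Record samples in increasing time order, for example once per frame.
    void Add(TimePoint time, const Ray& ray)
    {
        m_samples.push_back({time, ray});
        if (m_samples.size() > m_capacity)
            m_samples.pop_front();      // Drop the oldest sample.
    }

    // Returns the recorded ray whose timestamp is closest to 'eventTime'.
    std::optional<Ray> Closest(TimePoint eventTime) const
    {
        std::optional<Ray> best;
        auto bestDelta = std::chrono::steady_clock::duration::max();
        for (const auto& sample : m_samples)
        {
            auto delta = sample.time > eventTime ? sample.time - eventTime
                                                 : eventTime - sample.time;
            if (delta < bestDelta)
            {
                bestDelta = delta;
                best = sample.ray;
            }
        }
        return best;
    }

private:
    struct Sample { TimePoint time; Ray ray; };
    std::size_t m_capacity;
    std::deque<Sample> m_samples;
};
```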

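Calibration

For eye tracking to work accurately, each user must go through an eye tracking user calibration. This allows the device to adjust the system for a more comfortable, higher-quality viewing experience for the user, and to ensure accurate eye tracking at the same time. Developers don't need to do anything on their end to manage user calibration. The system makes sure that the user is prompted to calibrate the device in the following circumstances:

- The user is using the device for the first time.

- The user previously opted out of the calibration process.

- The calibration process didn't succeed the last time the user used the device.

Developers should make sure to provide adequate support for users for whom eye tracking data may not be available. Learn more about considerations for fallback solutions in Eye tracking on HoloLens 2.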