Gaze Input

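Gaze input in mixed reality apps is all about finding out what your users are looking at. When the eye tracking cameras on the device are matched with rays in Unreal's world space, the user's line-of-sight data becomes available. Gaze can be used from both Blueprints and C++, and it is a core feature for mechanics such as object interaction, wayfinding, and camera controls.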

Enabling eye tracking

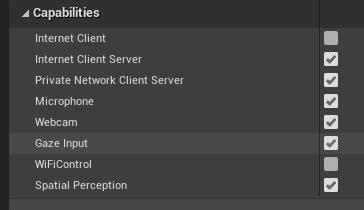

- In Project Settings > HoloLens, enable the Gaze Input capability.

- Create a new actor and add it to your scene.

Note

HoloLens eye tracking in Unreal provides only a single gaze ray for both eyes. Stereoscopic tracking, which requires two rays, isn't supported.

Using eye tracking

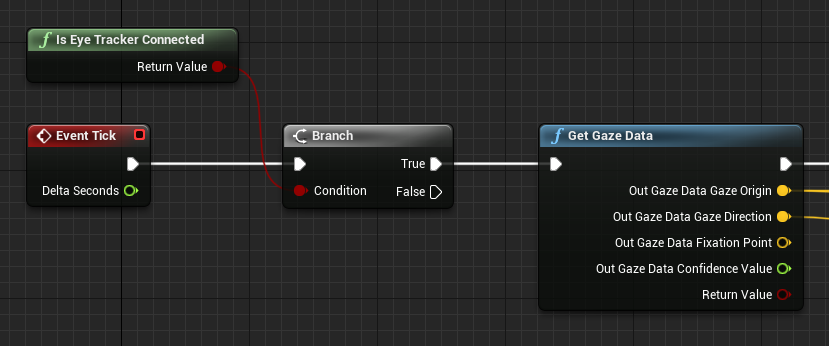

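First, check that your device supports eye tracking with the IsEyeTrackerConnected function. If the function returns true, call GetGazeData to find where the user's eyes are looking in the current frame.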

Note

The fixation point and the confidence value aren't available on HoloLens.

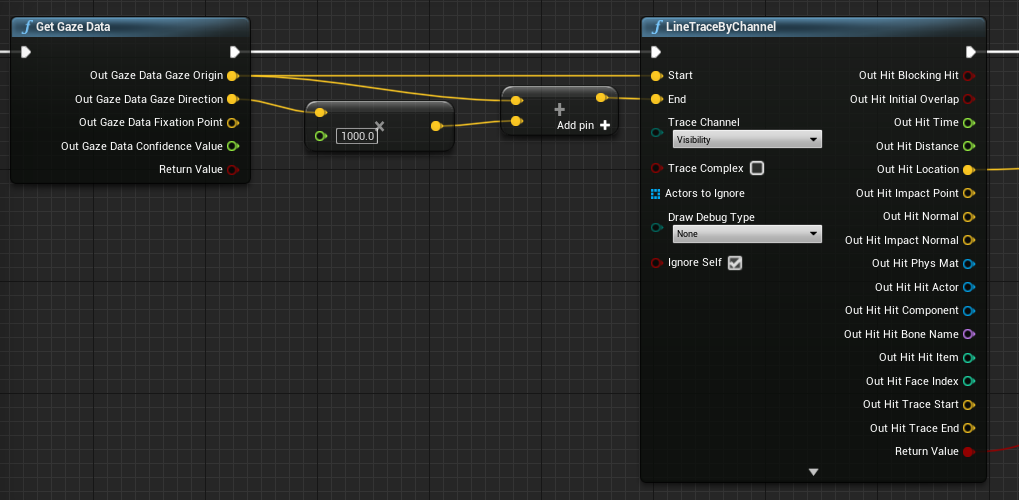

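Use the gaze origin and direction in a line trace to find out exactly where your users are looking. The gaze value is a vector that starts at the gaze origin and ends at the origin plus the gaze direction multiplied by the line trace distance.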
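As a worked example of the end-point formula above, here is a minimal standalone C++ sketch. `Vec3` is only a stand-in for Unreal's `FVector`, and `GazeTraceEnd` is a hypothetical helper name; neither comes from the engine. The arithmetic is the same: origin plus direction times trace distance.

```cpp
#include <cassert>

// Minimal stand-in for Unreal's FVector so this sketch compiles on its own.
struct Vec3 {
    float X, Y, Z;
};

Vec3 operator+(const Vec3& A, const Vec3& B) {
    return {A.X + B.X, A.Y + B.Y, A.Z + B.Z};
}

Vec3 operator*(const Vec3& V, float S) {
    return {V.X * S, V.Y * S, V.Z * S};
}

// Hypothetical helper: end point of a gaze ray for a line trace.
// GazeDirection is assumed to be a unit vector; TraceDistance is in world units.
Vec3 GazeTraceEnd(const Vec3& GazeOrigin, const Vec3& GazeDirection, float TraceDistance) {
    assert(TraceDistance >= 0.0f);
    return GazeOrigin + GazeDirection * TraceDistance;
}
```

For a gaze origin of (10, 0, 160) and a direction of (1, 0, 0), a trace distance of 100 gives an end point of (110, 0, 160).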

Getting head orientation

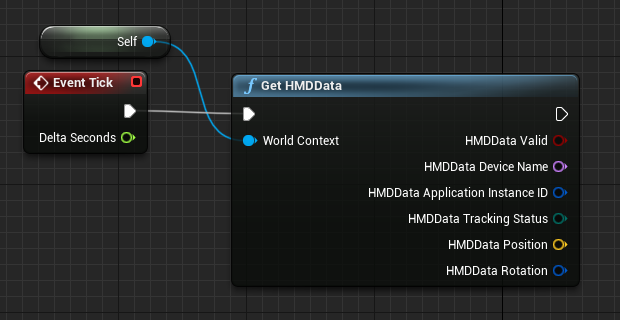

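You can also use the rotation of the head-mounted display (HMD) to represent the direction of the user's head. You can get the user's head direction without enabling the Gaze Input capability, but you won't get any eye tracking information. Add a reference to the Blueprint as the world context to get the correct output data.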

Note

Getting HMD data is only available in Unreal 4.26 and later.
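To illustrate how a head rotation becomes a direction, the following standalone sketch converts pitch and yaw angles into a forward vector using Unreal's X-forward, Z-up convention. `Vec3` and `HeadForward` are hypothetical names used only for illustration. Inside the engine you would normally take the forward vector from the HMD rotation itself rather than compute it by hand.

```cpp
#include <cmath>

// Minimal stand-in for Unreal's FVector so this sketch compiles on its own.
struct Vec3 {
    float X, Y, Z;
};

// Hypothetical helper: unit forward vector for a head rotation given as
// pitch and yaw in degrees. Roll does not change the forward direction.
Vec3 HeadForward(float PitchDegrees, float YawDegrees) {
    const float DegToRad = 3.14159265f / 180.0f;
    const float Pitch = PitchDegrees * DegToRad;
    const float Yaw = YawDegrees * DegToRad;
    return {
        std::cos(Pitch) * std::cos(Yaw),  // X: forward
        std::cos(Pitch) * std::sin(Yaw),  // Y: sideways (+Y at 90 degrees yaw)
        std::sin(Pitch)                   // Z: up
    };
}
```

With zero pitch and yaw the result is approximately (1, 0, 0); a yaw of 90 degrees turns it toward +Y, and a pitch of 90 degrees points it along +Z.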

Using C++

- In your game's build.cs file, add EyeTracker to the PublicDependencyModuleNames list:

      PublicDependencyModuleNames.AddRange(
          new string[] {
              "Core",
              "CoreUObject",
              "Engine",
              "InputCore",
              "EyeTracker"
          });

- In File > New C++ Class, create a new C++ actor called EyeTracker.

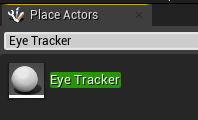

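- A Visual Studio solution opens with the new EyeTracker class. Build and run to open the Unreal game with the new EyeTracker actor. Search for "EyeTracker" in the Place Actors window, then drag and drop the class into the game window to add it to the project.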

- In EyeTracker.cpp, add includes for EyeTrackerFunctionLibrary and DrawDebugHelpers:

      #include "EyeTrackerFunctionLibrary.h"
      #include "DrawDebugHelpers.h"

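Check that your device supports eye tracking with UEyeTrackerFunctionLibrary::IsEyeTrackerConnected before trying to get any gaze data. If eye tracking is supported, find the start and end of a ray for a line trace from UEyeTrackerFunctionLibrary::GetGazeData. From there, you can construct a gaze vector and pass its contents to LineTraceSingleByChannel to debug any ray hit results:

    void AEyeTracker::Tick(float DeltaTime)
    {
        Super::Tick(DeltaTime);

        // Only query gaze data when the device reports a connected eye tracker.
        if (UEyeTrackerFunctionLibrary::IsEyeTrackerConnected())
        {
            FEyeTrackerGazeData GazeData;
            if (UEyeTrackerFunctionLibrary::GetGazeData(GazeData))
            {
                // Trace from the gaze origin along the gaze direction
                // for 100 Unreal units (1 m at the default scale).
                const FVector Start = GazeData.GazeOrigin;
                const FVector End = GazeData.GazeOrigin + GazeData.GazeDirection * 100;

                FHitResult HitResult;
                if (GWorld->LineTraceSingleByChannel(HitResult, Start, End, ECollisionChannel::ECC_Visibility))
                {
                    // Mark the hit location with a small debug coordinate system.
                    DrawDebugCoordinateSystem(GWorld, HitResult.Location, FQuat::Identity.Rotator(), 10);
                }
            }
        }
    }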

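Next Development Checkpoint

If you're following the Unreal development journey we've laid out, you're in the midst of exploring the MRTK core building blocks. From here, you can continue to the next building block, or jump to the Mixed Reality platform capabilities and APIs.

You can go back to the Unreal development checkpoints at any time.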