The only reason your query is fast is related to the fact that you used temporary table and has nothing to do with your solution (your function).

In such table with such amount of data it is simply better to use two temporary table and then JOIN

I executed your query using your data but since my laptop is poor, in the PipeData table I only filled 10k rows using your query with top 10000

CREATE DATABASE [ErlandTest]

CONTAINMENT = NONE

ON PRIMARY ( NAME = N'ErlandTest', FILENAME = N'E:\SQL_Files\ErlandTest.mdf' , SIZE = 8192KB , FILEGROWTH = 65536KB )

LOG ON ( NAME = N'ErlandTest_log', FILENAME = N'E:\SQL_Files\ErlandTest_log.ldf' , SIZE = 8192KB , FILEGROWTH = 65536KB )

GO

USE [ErlandTest]

go

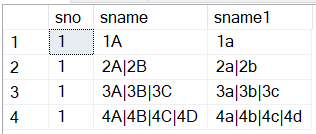

CREATE TABLE PipeData (id int NOT NULL,

ShipName nvarchar(MAX) NOT NULL,

ShipAddress nvarchar(MAX) NOT NULL,

CONSTRAINT pk_PipeData PRIMARY KEY (id)

)

go

-- THE ONLY DIFFERENT IS THAT I ADDED "top 10000"

INSERT PipeData(id, ShipName, ShipAddress)

SELECT top 10000 CustomerID, string_agg(convert(nvarchar(MAX), ShipName), '|') WITHIN GROUP (ORDER BY OrderID),

string_agg(convert(nvarchar(MAX), ShipAddress), '|') WITHIN GROUP (ORDER BY OrderID)

FROM BigDB..BigOrders

GROUP BY CustomerID

go

----------

Next, I tested your solution on these 10k rows and it took more than 5 minutes.

I tested my solution (meaning using OPENJSON) using temporary tables, and guess what ?!?

As I expected my solution finished in 20 seconds!

Assuming I did not made a mistake in my test (and this is the expected result I had) the different is 20 seconds vs 5 minuets

So here is my query using temp table

DROP TABLE IF EXISTS #t1

DROP TABLE IF EXISTS #t2

DROP TABLE IF EXISTS #temp4

go

DECLARE @d datetime2 = sysdatetime()

CREATE TABLE #t1 (id int NOT NULL,

pos int NOT NULL,

ShipName nvarchar(60) NOT NULL,

PRIMARY KEY (id, pos))

CREATE TABLE #t2 (id int NOT NULL,

pos int NOT NULL,

ShipAddress nvarchar(60) NOT NULL,

PRIMARY KEY (id, pos))

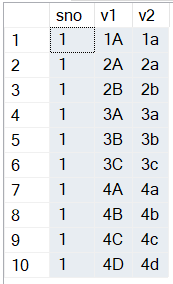

INSERT #t1(id, pos, ShipName)

SELECT t.id,[key] listpos ,[value] nstr

from PipeData t

CROSS APPLY OPENJSON ('["' + REPLACE(t.ShipName,'|','","') + '"]') AS s

INSERT #t2(id, pos, ShipAddress)

SELECT t.id,[key] listpos ,[value] nstr

from PipeData t

CROSS APPLY OPENJSON ('["' + REPLACE(t.ShipAddress,'|','","') + '"]') AS s

SELECT t1.id, t1.ShipName, t2.ShipAddress as str

INTO #temp4

FROM #t1 t1

JOIN #t2 t2 ON t1.id = t2.id

AND t1.pos = t2.pos

SELECT datediff(ms, @d, sysdatetime())

go -- finished in 20410