Project schedule API performance

Applies To: Project Operations for resource/non-stocked based scenarios, Lite deployment - deal to proforma invoicing, Project for the web

This article provides information about the performance benchmarks of the Project schedule application programming interfaces (APIs) and identifies the best practices for optimizing usage.

Project Scheduling Service

The Project Scheduling Service is a multi-tenant service that runs in Microsoft Azure. It's designed to provide a fast, fluid experience when users work on projects. It accepts change requests, processes them, and immediately returns the result. The service then persists the changes to Dataverse asynchronously, so users aren't blocked from performing other operations.

The Project schedule APIs rely on the Project Scheduling Service to run requests that are described in more detail in later sections of this article.

The Project schedule APIs are designed to work with the following work breakdown structure (WBS) entities:

- Project

- Project Task

- Project Task Dependency

- Project Team Member

- Resource Assignment

Both out-of-box fields and custom fields are supported. Unless otherwise noted, all common operations, such as create, update, and delete, are supported. For more information, see Use Project schedule APIs to perform operations with Scheduling entities.

As part of the Project schedule APIs, a unit-of-work pattern has been added. This pattern is known as an OperationSet, and it can be used when several requests must be processed in a single transaction.

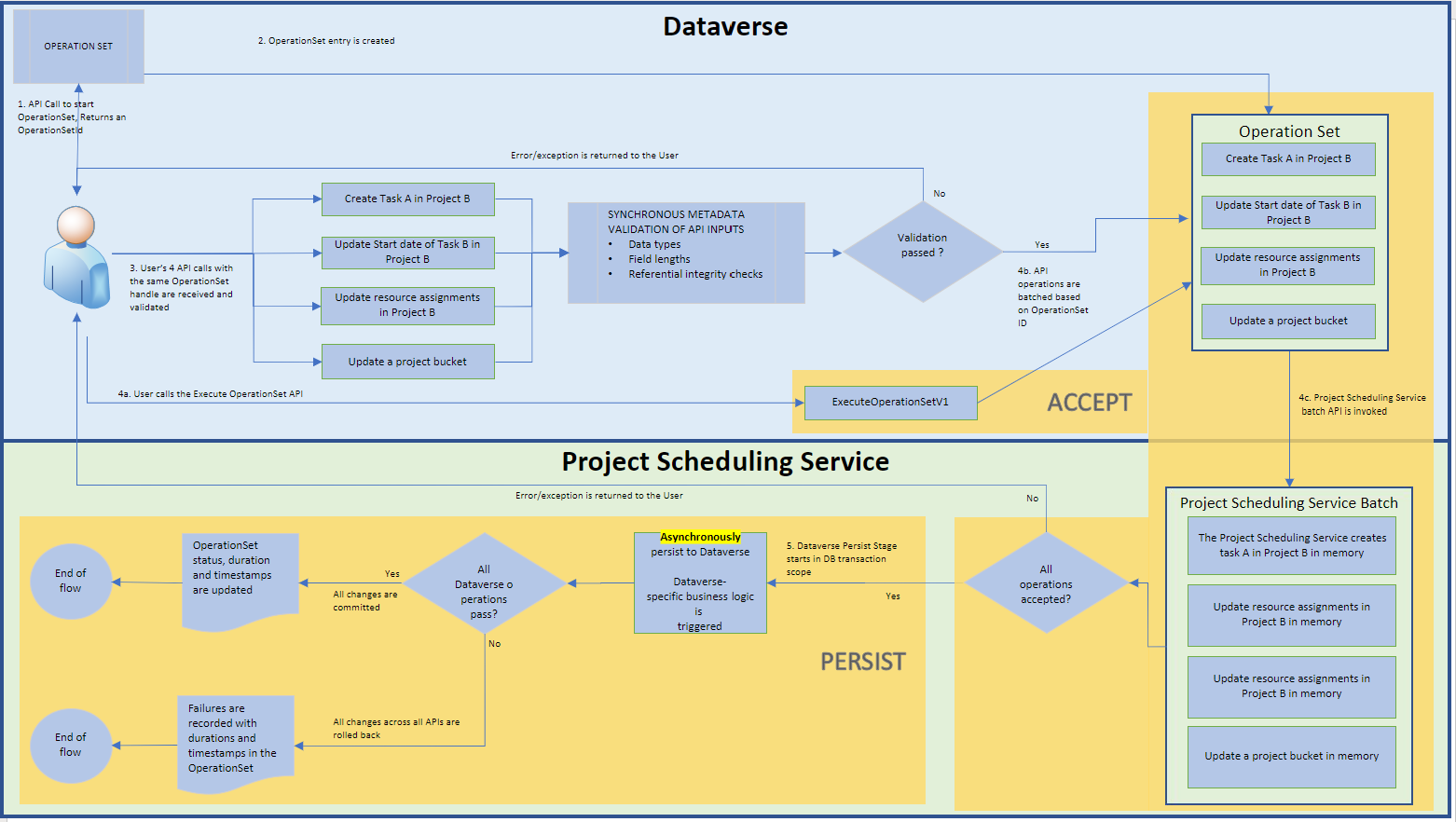

The following illustration shows the flow that a partner will experience when this feature is used.

Step 1: A client makes an API call to an Open Data Protocol (OData) endpoint in Dataverse to create an OperationSet.

Step 2: After the new OperationSet is created, an OperationSetId value is returned.

Step 3: The client uses the OperationSetId value to make another Project schedule API request. This request creates, updates, or deletes a scheduling entity. Metadata validation is done when the request is made. If the validation fails, the request is terminated, and an error is returned.

Steps 4a–4c: These steps represent the ACCEPT phase. The client calls the ExecuteOperationSetV1 API, which sends all the changes to the Project Scheduling Service in one batch. The Project Scheduling Service runs its own validations on the requests in the batch. Any validation failure undoes the batch and returns an exception to the caller. If the Project Scheduling Service accepts the batch, the OperationSet status is updated to indicate that the OperationSet is being processed.

Step 5: This step represents the PERSIST phase. The Project Scheduling Service asynchronously writes the batch to Dataverse in a transaction. If the write succeeds, the OperationSet is marked as Completed. Any error rolls back the transaction and the batch, and the OperationSet is marked as Failed.
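The lifecycle in steps 1 through 5 can be modeled as a small state machine. The following Python sketch is a local simulation only, not a client for the real APIs: the `OperationSet`, `Request`, and `Status` names, the status values Open and Processing, and the `accept` and `write_transaction` callbacks are all hypothetical stand-ins for the Project Scheduling Service and Dataverse. It shows where each kind of validation happens and how the status changes.

```python
import uuid
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    # Hypothetical status names; the article names only Completed and Failed
    # and says the OperationSet is marked as being processed after ACCEPT.
    OPEN = "Open"
    PROCESSING = "Processing"
    COMPLETED = "Completed"
    FAILED = "Failed"


# The WBS entities that the Project schedule APIs work with.
SCHEDULING_ENTITIES = {
    "Project", "Project Task", "Project Task Dependency",
    "Project Team Member", "Resource Assignment",
}
ACTIONS = {"create", "update", "delete"}


class ValidationError(Exception):
    """Raised when a request fails metadata validation (step 3)."""


@dataclass
class Request:
    action: str  # "create", "update", or "delete"
    entity: str  # one of SCHEDULING_ENTITIES
    fields: Dict[str, object] = field(default_factory=dict)


@dataclass
class OperationSet:
    # Steps 1-2: creating an OperationSet yields an identifier for later calls.
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    status: Status = Status.OPEN
    requests: List[Request] = field(default_factory=list)

    def add(self, request: Request) -> None:
        # Step 3: each request is validated as soon as it is made.
        if self.status is not Status.OPEN:
            raise RuntimeError("OperationSet is no longer open")
        if request.action not in ACTIONS or request.entity not in SCHEDULING_ENTITIES:
            raise ValidationError(f"invalid request: {request.action} {request.entity}")
        self.requests.append(request)

    def execute(self, accept: Callable[[List[Request]], None]) -> None:
        # Steps 4a-4c (ACCEPT): the whole batch is validated at once. In this
        # sketch, if `accept` raises, the exception reaches the caller and the
        # status stays OPEN.
        accept(self.requests)
        self.status = Status.PROCESSING

    def persist(self, write_transaction: Callable[[List[Request]], None]) -> None:
        # Step 5 (PERSIST): asynchronous in the real service, synchronous here.
        # `write_transaction` is assumed to be all-or-nothing.
        if self.status is not Status.PROCESSING:
            raise RuntimeError("OperationSet has not been accepted")
        try:
            write_transaction(self.requests)
            self.status = Status.COMPLETED
        except Exception:
            self.status = Status.FAILED


if __name__ == "__main__":
    ops = OperationSet()
    ops.add(Request("create", "Project Task", {"name": "Design"}))  # hypothetical field
    ops.execute(accept=lambda batch: None)  # stand-in: service accepts the batch
    ops.persist(write_transaction=lambda batch: None)
    print(ops.status)  # Status.COMPLETED
```

Separating `execute` from `persist` mirrors the split between the ACCEPT and PERSIST phases: the caller learns about batch-level validation failures immediately, while write failures surface later through the OperationSet status.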

Performance methodology

Execution time is defined as the time from the call to the ExecuteOperationSetV1 API until the Project Scheduling Service has finished writing to Dataverse. Across all operations, 2,200 runs were performed in total, and the 99th percentile (P99) execution times are reported. Both single-record and bulk operations were measured.

For a single-record operation, the OperationSet contains one request. For bulk operations, it contains 20, 50, or 100 requests. Each bulk size is reported separately.

These operations were run on a UR 15 Project Operations Lite deployment in North America.
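The article reports P99 values but doesn't say which percentile definition was used. The following sketch assumes the nearest-rank method, one common definition. Many statistics libraries interpolate between samples instead, which can give slightly different values. The function name and its `pct` parameter are illustrative and aren't part of any Project API.

```python
from typing import Sequence


def nearest_rank_percentile(samples: Sequence[float], pct: int = 99) -> float:
    """Return the pct-th percentile of samples by using the nearest-rank method."""
    if not samples:
        raise ValueError("at least one sample is required")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    # Nearest rank = ceil(pct / 100 * n), computed with integers to avoid
    # floating-point rounding at the boundary.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]
```

With 2,200 measured execution times, the nearest rank is 2,178, so the reported P99 is the 2,178th-smallest measurement: 99 percent of the runs finished at or below that time.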

Results

Create operations

Single-record create operations

The following table shows the execution times for the creation of a single record. The times are in seconds.

| Record type | Required fields | All supported fields |
|---|---|---|
| Project | 2.5 | 3.78 |
| Task | 8.82 | 9.34 |
| Assignment | 9.19 | 9.19 |
| Team member | 0.84 | 4.2 |
| Dependency | 8.84 | 8.84 |

Bulk create operations

The following table shows the execution times for the creation of many records. Specifically, Microsoft measured the execution times for the creation of 20, 50, and 100 records in a single OperationSet. The times are in seconds.

| Record type | 20 records, required fields | 20 records, all supported fields | 50 records, required fields | 50 records, all supported fields | 100 records, required fields | 100 records, all supported fields |
|---|---|---|---|---|---|---|
| Task | 19.92 | 38.35 | 36.67 | 99.13 | 116.77 | 174.06 |
| Assignment | 13.94 | 13.94 | 43.95 | 43.95 | 69.38 | 69.38 |
| Dependency | 30.04 | 30.04 | 77.82 | 77.82 | 176.89 | 176.89 |

Note

Bulk create operations on the Project and Team Member entities aren't included in this table. These APIs run immediately in Dataverse, so their runtime is similar to the runtime of calling the single-record create API multiple times.

The following illustration shows a plot of the execution times for the Task, Assignment, and Dependency entities when 20, 50, and 100 records are created and all the supported fields are used.

Update operations

Single-record update operations

The following table shows the execution times for updates of a single record. The times are in seconds.

| Record type | Required fields | All supported fields |
|---|---|---|
| Project | 9.53 | 13.91 |
| Task | 8.82 | 9.91 |
| Team member | 9 | 8.96 |

Note

Update operations on the Resource Assignments and Project Task Dependency entities aren't supported.

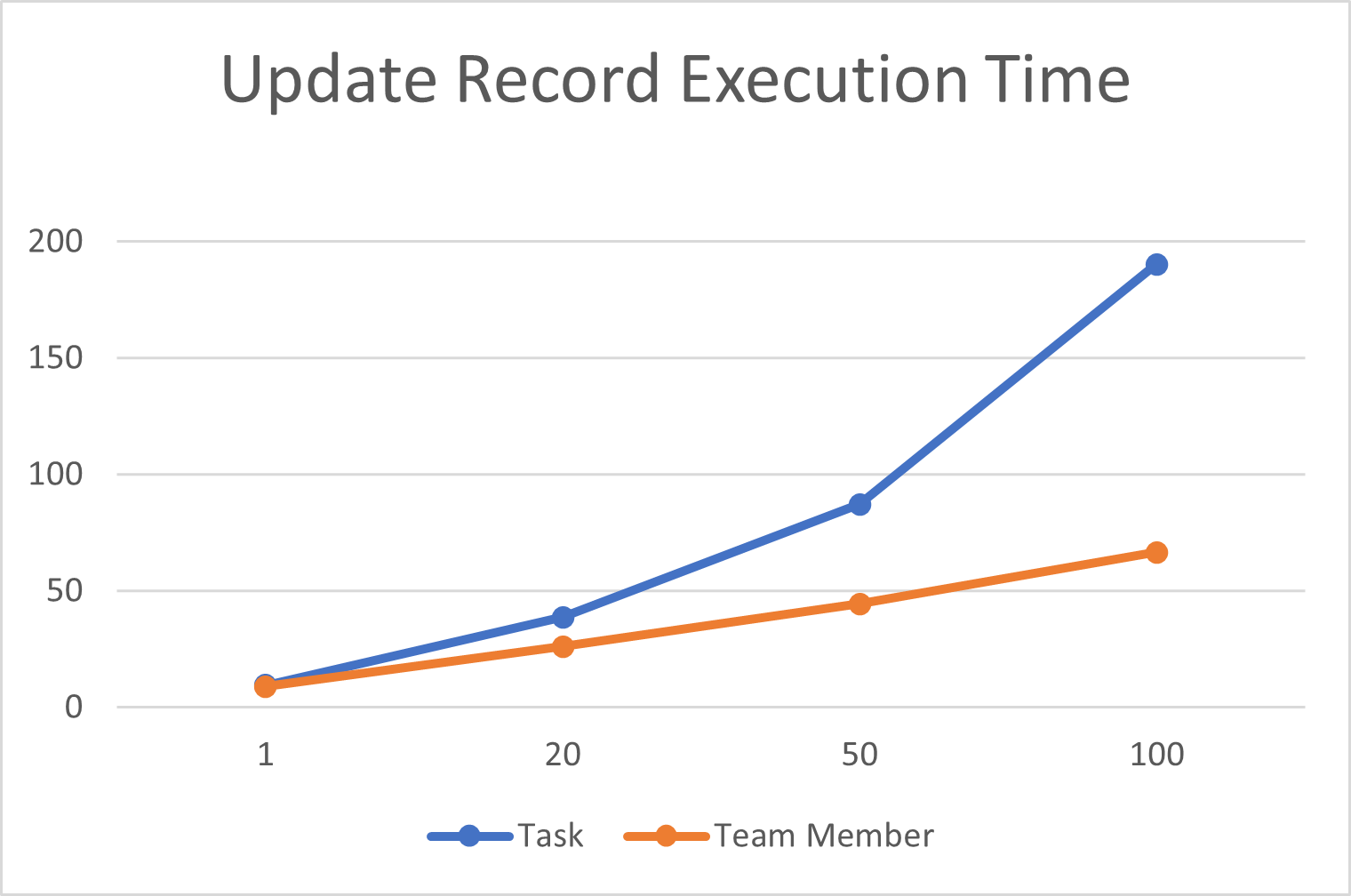

Bulk update operations

The following table shows the execution times for updates of many records. Specifically, Microsoft measured the execution times for updates of 20, 50, and 100 records in a single OperationSet. The times are in seconds.

| Record type | 20 records, required fields | 20 records, all supported fields | 50 records, required fields | 50 records, all supported fields | 100 records, required fields | 100 records, all supported fields |
|---|---|---|---|---|---|---|
| Task | 8.91 | 38.71 | 20.92 | 87.13 | 36.68 | 190.34 |
| Team member | 20.52 | 26.06 | 41.93 | 44.51 | 38.63 | 66.53 |

Note

Update operations on the Resource Assignments and Project Task Dependency entities aren't supported.

The following illustration shows a plot of the execution times for the Task and Team Member entities when 20, 50, and 100 records are updated and all the supported fields are used.

Delete operations

Single-record delete operations

The following table shows the execution times for the deletion of a single record. The times are in seconds.

| Record type | Time |
|---|---|
| Task | 20.12 |
| Assignment | 10.86 |
| Team member | 12.52 |
| Dependency | 20.89 |

Note

Delete operations on the Project entity aren't supported.

Bulk delete operations

The following table shows the execution times for the deletion of many records. Specifically, Microsoft measured the execution times for the deletion of 20, 50, and 100 records in a single OperationSet. The times are in seconds.

| Record type | 20 records | 50 records | 100 records |
|---|---|---|---|
| Task | 20.91 | 67.43 | 71.96 |
| Assignment | 11.75 | 25.79 | 47.66 |
| Team member | 9.78 | 39.73 | 24.33 |
| Dependency | 24.61 | 54.9 | 109.16 |

Note

Delete operations on the Project entity aren't supported.

The following illustration shows a plot of the execution times for the Task, Assignment, Team Member, and Dependency entities when 20, 50, and 100 records are deleted.

Observations

For a single-record operation, the ExecuteOperationSetV1 API takes about 800 milliseconds to send the request to the Project Scheduling Service. The Project Scheduling Service then takes about five seconds to process the payload and call Dataverse. The rest of the execution time is spent running business logic and writing data to the database in Dataverse.

When 100 records are created, updated, or deleted, the ExecuteOperationSetV1 API takes about three seconds to send the request to the Project Scheduling Service. The Project Scheduling Service then takes about five seconds to process the requests and call Dataverse. A bulk operation pays this Project Scheduling Service overhead only once for all the records in the OperationSet. Therefore, bulk operations have a significantly lower average execution time per record than single-record operations.
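The effect of paying the overhead once per OperationSet can be checked against the numbers in this article. The following sketch divides the P99 times for creating Task records with all supported fields by the number of records in each OperationSet, and it spreads the approximate send and processing overhead quoted above across the records. Dividing a P99 total by a record count gives only a rough per-record figure, not a measured average. The function and constant names are illustrative.

```python
from typing import Dict

# P99 times in seconds for creating Task records with all supported fields,
# taken from the single-record and bulk create tables in this article.
TASK_CREATE_ALL_FIELDS: Dict[int, float] = {1: 9.34, 20: 38.35, 50: 99.13, 100: 174.06}


def seconds_per_record(p99_by_batch_size: Dict[int, float]) -> Dict[int, float]:
    """Divide each OperationSet's P99 time by the number of records it contained."""
    return {size: total / size for size, total in p99_by_batch_size.items()}


def scheduling_overhead_per_record(send_s: float, processing_s: float, records: int) -> float:
    """Spread the one-time send and processing overhead across an OperationSet's records."""
    return (send_s + processing_s) / records


if __name__ == "__main__":
    for size, secs in seconds_per_record(TASK_CREATE_ALL_FIELDS).items():
        print(f"{size:>3} record(s): {secs:.2f} s per record")
    # Approximate overheads quoted in the Observations section.
    print(scheduling_overhead_per_record(0.8, 5, 1))  # single-record OperationSet
    print(scheduling_overhead_per_record(3, 5, 100))  # 100-record OperationSet
```

For Task creation, the rough per-record time falls from 9.34 seconds for a single record to about 1.74 seconds in a 100-record OperationSet, and the scheduling overhead per record falls from about 5.8 seconds to about 0.08 seconds.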

Scenarios

The following table shows the execution times when the Project schedule APIs are used to accomplish specific scenarios. The times are in seconds.

| Scenario | Time |
|---|---|
| Create a project that has 40 tasks. | 36.01 |
| Create a project that has 40 tasks and 20 dependencies. | 38.11 |
| Create a project that has 40 tasks and 30 assignments. | 60.17 |
| Create a project that has 40 tasks, 20 dependencies, and 30 assignments. | 60.27 |

Best practices

Based on the preceding scenario results, the APIs perform better in the following conditions:

- Group as many operations together as possible. The average runtime per record is lower for bulk operations than for single-record operations, so the fewer OperationSets you use, the lower the average execution time.

- Set only the minimum attributes that your scenario requires. Be selective about which non-required fields you include in an OperationSet request. Foreign-key fields and rollup fields negatively affect performance.
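Both practices can be applied on the client before any API call is made. The following Python sketch shows two hypothetical helpers: `batches` splits a list of pending requests into as few groups as possible, one group per OperationSet, and `minimal_payload` drops every field that isn't required or explicitly wanted. The `max_per_set` parameter is an assumption, because this article doesn't state a per-OperationSet limit; check the Project schedule API documentation for the actual limit. The field names in the usage example are also illustrative.

```python
from typing import Dict, Iterable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batches(requests: Sequence[T], max_per_set: int) -> Iterator[List[T]]:
    """Yield consecutive groups of at most max_per_set requests, one per OperationSet."""
    if max_per_set < 1:
        raise ValueError("max_per_set must be positive")
    for start in range(0, len(requests), max_per_set):
        yield list(requests[start:start + max_per_set])


def minimal_payload(fields: Dict[str, object], required: Iterable[str],
                    wanted: Iterable[str] = ()) -> Dict[str, object]:
    """Keep only the required fields plus the optional fields the scenario needs."""
    keep = set(required) | set(wanted)
    return {name: value for name, value in fields.items() if name in keep}


if __name__ == "__main__":
    sizes = [len(group) for group in batches(list(range(250)), max_per_set=100)]
    print(sizes)  # [100, 100, 50]
    task = {"name": "Design", "description": "Draft specs", "rollup_effort": 12}
    print(minimal_payload(task, required=["name"]))  # {'name': 'Design'}
```

Filling each OperationSet up to the limit, rather than splitting the work evenly into smaller groups, keeps the number of OperationSets, and therefore the number of times the scheduling overhead is paid, as low as possible.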
