Scaling with Event Hubs

There are two factors that influence scaling with Event Hubs.

- Throughput units (standard tier) or processing units (premium tier)

- Partitions

Throughput units

The throughput capacity of event hubs is controlled by throughput units. Throughput units are prepurchased units of capacity. A single throughput unit lets you:

- Ingress: Up to 1 MB per second or 1,000 events per second (whichever comes first).

- Egress: Up to 2 MB per second or 4,096 events per second.

Beyond the capacity of the purchased throughput units, ingress is throttled and Event Hubs throws a ServerBusyException. Egress doesn't produce throttling exceptions, but is still limited to the capacity of the purchased throughput units. If you receive publishing rate exceptions or expect to see higher egress, check how many throughput units you have purchased for the namespace. You can manage throughput units on the Scale page of the namespace in the Azure portal. You can also manage throughput units programmatically by using the Event Hubs APIs.

Throughput units are prepurchased and are billed per hour. Once purchased, throughput units are billed for a minimum of one hour. Up to 40 throughput units can be purchased for an Event Hubs namespace and are shared across all event hubs in that namespace.
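The per-unit limits above lend themselves to a simple sizing calculation. The following is a minimal sketch rather than an official sizing tool: the helper name `required_throughput_units` and its parameters are hypothetical, and it assumes the standard-tier figures quoted in this section (1 MB/s or 1,000 events/s ingress, 2 MB/s or 4,096 events/s egress, at most 40 throughput units per namespace).

```python
import math

# Per-throughput-unit limits quoted in this section.
INGRESS_MB_PER_TU = 1.0
INGRESS_EVENTS_PER_TU = 1000
EGRESS_MB_PER_TU = 2.0
EGRESS_EVENTS_PER_TU = 4096
MAX_TUS_PER_NAMESPACE = 40


def required_throughput_units(ingress_mb_s, ingress_events_s,
                              egress_mb_s=0.0, egress_events_s=0):
    """Return the smallest TU count that covers all four rates.

    Hypothetical helper for illustration; not part of any Event Hubs SDK.
    """
    needed = max(
        math.ceil(ingress_mb_s / INGRESS_MB_PER_TU),
        math.ceil(ingress_events_s / INGRESS_EVENTS_PER_TU),
        math.ceil(egress_mb_s / EGRESS_MB_PER_TU),
        math.ceil(egress_events_s / EGRESS_EVENTS_PER_TU),
        1,  # assumption: size for at least one TU
    )
    if needed > MAX_TUS_PER_NAMESPACE:
        raise ValueError(
            f"{needed} TUs needed, but a namespace allows at most "
            f"{MAX_TUS_PER_NAMESPACE}"
        )
    return needed


# 3.5 MB/s of ingress dominates 2,000 events/s, so four TUs are needed.
print(required_throughput_units(ingress_mb_s=3.5, ingress_events_s=2000))
```

Because the limit that is reached first determines throttling, the sketch takes the maximum over the byte-rate and event-rate requirements instead of adding them.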

The Auto-inflate feature of Event Hubs automatically scales up by increasing the number of throughput units, to meet usage needs. Increasing throughput units prevents throttling scenarios, in which:

- Data ingress rates exceed set throughput units.

- Data egress request rates exceed set throughput units.

The Event Hubs service increases the throughput when load increases beyond the minimum threshold, without any requests failing with ServerBusy errors.

For more information about the Auto-inflate feature, see Automatically scale throughput units.

Processing units

Event Hubs Premium provides superior performance and better isolation within a managed multitenant PaaS environment. The resources in a premium tier are isolated at the CPU and memory level so that each tenant workload runs in isolation. This resource container is called a processing unit (PU). You can purchase 1, 2, 4, 8, or 16 processing units for each Event Hubs Premium namespace.

How much you can ingest and stream with a processing unit depends on various factors such as your producers, consumers, the rate at which you're ingesting and processing, and much more.

For example, an Event Hubs Premium namespace with one PU and one event hub (100 partitions) can offer a core capacity of approximately 5-10 MB/s ingress and 10-20 MB/s egress for both AMQP and Kafka workloads.

To learn about configuring PUs for a premium tier namespace, see Configure processing units.

Note

To learn more about quotas and limits, see Azure Event Hubs - quotas and limits.

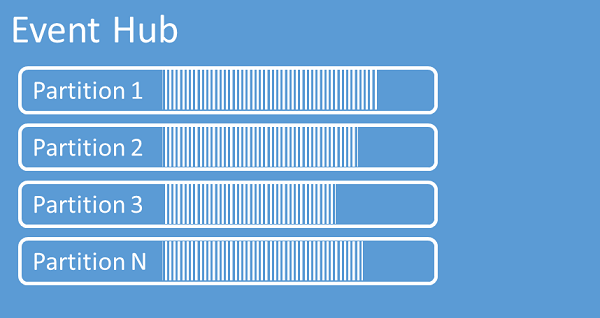

Partitions

Event Hubs organizes sequences of events sent to an event hub into one or more partitions. As newer events arrive, they're added to the end of this sequence.

A partition can be thought of as a commit log. Partitions hold event data that contains the following information:

- Body of the event

- User-defined property bag describing the event

- Metadata, such as its offset in the partition and its number in the stream sequence

- Service-side timestamp at which it was accepted
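The commit-log view of a partition can be modeled in a few lines. This is an illustrative sketch only: `StoredEvent` and `PartitionLog` are hypothetical names, and treating the offset as a running byte position is an assumption made for the example, not a statement about how the service stores data.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class StoredEvent:
    body: bytes                # body of the event
    properties: dict           # user-defined property bag
    offset: int                # position in the partition (assumed: byte position)
    sequence_number: int       # number in the stream sequence
    enqueued_time: datetime    # service-side timestamp at acceptance


@dataclass
class PartitionLog:
    """Append-only log: new events always go at the end."""
    events: list = field(default_factory=list)
    next_offset: int = 0

    def append(self, body, properties=None):
        event = StoredEvent(
            body=body,
            properties=dict(properties or {}),
            offset=self.next_offset,
            sequence_number=len(self.events),
            enqueued_time=datetime.now(timezone.utc),
        )
        self.events.append(event)
        self.next_offset += len(body)
        return event


log = PartitionLog()
first = log.append(b"hello", {"device": "d1"})
second = log.append(b"world!")
print(first.sequence_number, second.sequence_number, second.offset)
```

The metadata is assigned by the log at append time, not by the sender, which mirrors the service-side nature of the offset, sequence number, and timestamp.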

Advantages of using partitions

Event Hubs is designed to help with processing of large volumes of events, and partitioning helps with that in two ways:

- Even though Event Hubs is a PaaS service, there's a physical reality underneath. Maintaining a log that preserves the order of events requires that these events are kept together in the underlying storage and its replicas, which results in a throughput ceiling for such a log. Partitioning allows multiple parallel logs to be used for the same event hub, which multiplies the available raw input-output (IO) throughput capacity.

- Your own applications must be able to keep up with processing the volume of events that are sent into an event hub. Doing so might be complex and require substantial, scaled-out, parallel processing capacity. The capacity of a single process to handle events is limited, so you need several processes. Partitions are how your solution feeds those processes and yet ensures that each event has a clear processing owner.
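The idea that every event has a clear processing owner can be sketched as a static assignment of partitions to worker processes. The helper `assign_partitions` and the worker names are hypothetical. This is a simplified illustration of the ownership idea, not how any Event Hubs client library balances load.

```python
def assign_partitions(partition_ids, worker_names):
    """Give every partition exactly one owner, spreading them evenly.

    Hypothetical helper for illustration only.
    """
    if not worker_names:
        raise ValueError("at least one worker is required")
    ownership = {name: [] for name in worker_names}
    for index, partition_id in enumerate(partition_ids):
        owner = worker_names[index % len(worker_names)]
        ownership[owner].append(partition_id)
    return ownership


partitions = [str(i) for i in range(8)]
ownership = assign_partitions(partitions, ["worker-a", "worker-b", "worker-c"])
for worker, owned in ownership.items():
    print(worker, owned)
```

Because each partition appears under exactly one worker, every event in that partition is handled by a single process, and adding partitions lets more workers run in parallel.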

Number of partitions

The number of partitions is specified at the time of creating an event hub. It must be between one and the maximum partition count allowed for each pricing tier. For the partition count limit for each tier, see this article.

We recommend that you choose at least as many partitions as you expect to need during the peak load of your application for that particular event hub. For tiers other than the premium and dedicated tiers, you can't change the partition count for an event hub after its creation. For an event hub in a premium or dedicated tier, you can increase the partition count after its creation, but you can't decrease it. When the partition count changes, the mapping of partition keys to partitions changes, and so does the distribution of streams across partitions. Avoid such changes if the relative order of events matters in your application.
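To see why a change in partition count disturbs ordering, consider a simplified model in which a key's partition is a hash of the key modulo the partition count. The hash below is a stand-in chosen for the example (SHA-256), not the hashing function that Event Hubs uses, and `partition_for` is a hypothetical helper.

```python
import hashlib


def partition_for(key, partition_count):
    """Map a key to a partition with a stand-in hash (NOT the Event Hubs hash)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


keys = [f"device-{n}" for n in range(1000)]

# Compare each key's partition before and after growing from 4 to 8 partitions.
moved = [k for k in keys if partition_for(k, 4) != partition_for(k, 8)]
moved_fraction = len(moved) / len(keys)
print(f"{moved_fraction:.0%} of keys now map to a different partition")
```

In this model, a key whose events were previously written to one partition can start landing in another after the increase, so a consumer can no longer rely on a single partition to see that key's events in order.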

Setting the number of partitions to the maximum permitted value is tempting, but keep in mind that your event streams need to be structured so that you can actually take advantage of multiple partitions. If you need absolute order preservation across all events, or if you have only a handful of substreams, you might not be able to take advantage of many partitions. Also, many partitions make the processing side more complex.

The number of partitions in an event hub doesn't affect pricing. Pricing depends on the number of pricing units for the namespace or the dedicated cluster: throughput units (TUs) for the standard tier, processing units (PUs) for the premium tier, and capacity units (CUs) for the dedicated tier. For example, a standard-tier event hub with 32 partitions and one with a single partition incur exactly the same cost when the namespace is set to one TU of capacity. Also, you can scale the TUs or PUs of your namespace, or the CUs of your dedicated cluster, independently of the partition count.

A partition is a data organization mechanism that allows you to publish and consume data in parallel. We recommend that you balance scaling units (throughput units for the standard tier, processing units for the premium tier, or capacity units for the dedicated tier) and partitions to achieve optimal scale. In general, we recommend a maximum throughput of 1 MB/s per partition. Therefore, a rule of thumb for calculating the number of partitions is to divide the maximum expected throughput by 1 MB/s. For example, if your use case requires 20 MB/s, we recommend that you choose at least 20 partitions to achieve the optimal throughput.
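The rule of thumb can be written as a short calculation. This is a minimal sketch: `recommended_partition_count` and its optional `tier_max_partitions` parameter are hypothetical names, and the per-tier maximum must be looked up separately because it isn't stated in this article.

```python
import math

# Recommended maximum throughput per partition, from the rule of thumb above.
RECOMMENDED_MB_S_PER_PARTITION = 1.0


def recommended_partition_count(peak_throughput_mb_s, tier_max_partitions=None):
    """Divide the peak expected throughput by 1 MB/s and round up.

    Hypothetical helper; tier_max_partitions is supplied by the caller.
    """
    if peak_throughput_mb_s <= 0:
        raise ValueError("peak throughput must be positive")
    count = math.ceil(peak_throughput_mb_s / RECOMMENDED_MB_S_PER_PARTITION)
    if tier_max_partitions is not None and count > tier_max_partitions:
        raise ValueError(
            f"{count} partitions exceed the tier limit of {tier_max_partitions}"
        )
    return count


# The example from the text: 20 MB/s calls for at least 20 partitions.
print(recommended_partition_count(20))
```

Rounding up keeps the per-partition rate at or below 1 MB/s when the peak isn't a whole number of megabytes per second.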

However, if you have a model in which your application has an affinity to a particular partition, increasing the number of partitions isn't beneficial. For more information, see availability and consistency.

Mapping of events to partitions

You can use a partition key to map incoming event data into specific partitions for the purpose of data organization. The partition key is a sender-supplied value passed into an event hub. It's processed through a static hashing function, which creates the partition assignment. If you don't specify a partition key when publishing an event, a round-robin assignment is used.

The event publisher is only aware of its partition key, not the partition to which the events are published. This decoupling of key and partition insulates the sender from needing to know too much about the downstream processing. A unique per-device or per-user identity makes a good partition key, but other attributes such as geography can also be used to group related events into a single partition.

Specifying a partition key keeps related events together in the same partition and in the exact order in which they arrived. The partition key is a string that is derived from your application context and identifies the interrelationship of the events. A sequence of events identified by a partition key is a stream. A partition is a multiplexed log store for many such streams.
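The two assignment modes described above, hashing when a partition key is supplied and round-robin when it isn't, can be sketched as follows. `SketchPartitioner` is a hypothetical class, and SHA-256 is a stand-in for the service's static hashing function, which this article doesn't specify.

```python
import hashlib
from itertools import count


class SketchPartitioner:
    """Illustrative model of key-based and round-robin partition assignment."""

    def __init__(self, partition_count):
        self.partition_count = partition_count
        self._round_robin = count()  # yields 0, 1, 2, ...

    def partition_for(self, partition_key=None):
        if partition_key is None:
            # No key: spread events across partitions in turn.
            return next(self._round_robin) % self.partition_count
        # Key supplied: a static hash always yields the same partition.
        # Stand-in hash; NOT the function Event Hubs uses.
        digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % self.partition_count


partitioner = SketchPartitioner(partition_count=4)

# Events without a key rotate through the partitions.
unkeyed = [partitioner.partition_for() for _ in range(5)]
print(unkeyed)

# Events sharing a key form one stream and stay in one partition.
keyed = {partitioner.partition_for("device-42") for _ in range(5)}
print(len(keyed))
```

The sender in this model only ever passes a key or nothing at all, which reflects the decoupling of key and partition: the partition index is decided on the receiving side.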

Note

While you can send events directly to partitions, we don't recommend it, especially when high availability is important to you. Doing so downgrades the availability of an event hub to the partition level. For more information, see Availability and Consistency.

