Hello Aurimas,

Here is a concrete example of an "unnecessary" retransmission:

15:28:51.097963 server.50076 > client.60345: . 62673456:62674916(1460) ack 81 win 8192 (DF)

15:28:51.101547 client.60345 > server.50076: . ack 62673456 win 32768 (DF)

15:28:51.101548 client.60345 > server.50076: . ack 62673456 win 32768 <nop,nop,sack 62676376:62677836> (DF)

15:28:51.101549 client.60345 > server.50076: . ack 62673456 win 32768 <nop,nop,sack 62679296:62682216 62676376:62677836> (DF)

15:28:51.101632 client.60345 > server.50076: . ack 62673456 win 32768 <nop,nop,sack 62685136:62686596 62679296:62682216 62676376:62677836> (DF)

15:28:51.101633 client.60345 > server.50076: . ack 62674916 win 32768 <nop,nop,sack 62685136:62686596 62679296:62682216 62676376:62677836> (DF)

15:28:51.101645 server.50076 > client.60345: . 62673456:62674916(1460) ack 81 win 8192 (DF)

15:28:51.105159 client.60345 > server.50076: . ack 62709956 win 32768 <nop,nop,sack 62673456:62674916> (DF)
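To work with lines like these programmatically, a small parser can help. It pulls out the data range, cumulative ACK and SACK blocks from each line. This is a minimal sketch. The regular expression assumes exactly the tcpdump output format quoted above, and other tcpdump versions or options may print fields differently. `TraceLine` and `parse_line` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Assumption: matches only the tcpdump line format quoted above.
LINE_RE = re.compile(
    r"(?P<time>\d\d:\d\d:\d\d\.\d+) (?P<src>\S+) > (?P<dst>\S+): \. "
    r"(?:(?P<seq>\d+):(?P<end>\d+)\(\d+\) )?"   # data range, only on data segments
    r"ack (?P<ack>\d+) win \d+"
    r"(?: <nop,nop,sack (?P<sack>[\d: ]+)>)?"   # optional SACK blocks
)

@dataclass
class TraceLine:  # hypothetical record for one captured packet
    time: str
    src: str
    dst: str
    data: Optional[Tuple[int, int]]  # payload bytes [start, end), if any
    ack: int
    sack: List[Tuple[int, int]]      # SACK blocks [left, right), in the order printed

def parse_line(line: str) -> TraceLine:
    m = LINE_RE.match(line)
    if m is None:
        raise ValueError(f"unrecognised line: {line!r}")
    data = (int(m["seq"]), int(m["end"])) if m["seq"] else None
    sack = [
        (int(a), int(b))
        for a, b in (blk.split(":") for blk in (m["sack"] or "").split())
    ]
    return TraceLine(m["time"], m["src"], m["dst"], data, int(m["ack"]), sack)

pkt = parse_line("15:28:51.105159 client.60345 > server.50076: . ack 62709956 "
                 "win 32768 <nop,nop,sack 62673456:62674916> (DF)")
print(pkt.ack, pkt.sack)  # 62709956 [(62673456, 62674916)]
```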

These are just "selected" packets from the trace, all of which refer to sequence number 62673456. This is how I interpret them:

1. The packet is first sent.

2. All preceding data is cumulatively acknowledged, up to (but not including) the packet.

3. One packet, sent later, is selectively acknowledged.

4. Later packets in two separate ranges are selectively acknowledged.

5. Later packets in three separate ranges are selectively acknowledged.

6. The packet is acknowledged, along with some selective acknowledgements.

7. The packet is retransmitted.

8. A duplicate selective acknowledgement (DSACK) for the packet is received.
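The ACK steps above can be checked mechanically. Each client ACK is classified relative to the segment [62673456, 62674916). This is a sketch under stated assumptions. D-SACK detection covers only one of the two cases in RFC 2883, where the first SACK block lies at or below the cumulative ACK. The other case, where the first block is contained in the second, is not needed for this trace. `classify` and `classify_all` are hypothetical helpers.

```python
from typing import List, Optional, Tuple

Block = Tuple[int, int]  # SACK block [left, right)

# The segment that was retransmitted: bytes [SEG_START, SEG_END).
SEG_START, SEG_END = 62673456, 62674916

# (cumulative ACK, SACK blocks) of each client ACK quoted above, in capture order.
ACKS: List[Tuple[int, List[Block]]] = [
    (62673456, []),
    (62673456, [(62676376, 62677836)]),
    (62673456, [(62679296, 62682216), (62676376, 62677836)]),
    (62673456, [(62685136, 62686596), (62679296, 62682216), (62676376, 62677836)]),
    (62674916, [(62685136, 62686596), (62679296, 62682216), (62676376, 62677836)]),
    (62709956, [(62673456, 62674916)]),
]

def classify(ack: int, sack: List[Block], prev_ack: Optional[int]) -> str:
    # RFC 2883: a first SACK block at or below the cumulative ACK reports
    # data that the receiver got twice (a D-SACK).
    if sack and sack[0][1] <= ack:
        return "D-SACK"
    if ack >= SEG_END:
        return "segment acknowledged"
    if ack == SEG_START:
        # Repeating the previous cumulative ACK means the segment is still missing.
        return "duplicate ACK" if ack == prev_ack else "acknowledged up to segment"
    return "other"

def classify_all(acks: List[Tuple[int, List[Block]]]) -> List[str]:
    labels: List[str] = []
    prev: Optional[int] = None
    for ack, sack in acks:
        labels.append(classify(ack, sack, prev))
        prev = ack
    return labels

labels = classify_all(ACKS)
print(labels.count("duplicate ACK"))  # 3
```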

The ordering of steps 6 and 7 may seem a bit odd, but it is just due to asynchrony between packet processing and logging. After step 5 (the third report that the packet is missing), a retransmission is initiated (queued). Before the retransmission is actually sent, the acknowledgement for the "missing" packet arrives, but by then it is too late to stop the queued retransmission.
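The race can be modelled as a duplicate-ACK counter with a transmit queue. This is a minimal sketch, not any particular stack's code. It assumes the usual fast-retransmit threshold of three duplicate ACKs (RFC 5681). Following the description above, it also assumes that a queued retransmission is sent without re-checking whether the segment has since been acknowledged. `retransmissions` and the event tuples are hypothetical.

```python
from collections import deque
from typing import List

DUPTHRESH = 3  # fast-retransmit threshold (RFC 5681)

def retransmissions(events, snd_una: int) -> List[int]:
    """Return the sequence numbers actually retransmitted.

    ("ack", n): an ACK carrying cumulative ack n arrives.
    ("tx",):    the transmit path runs and sends whatever is queued.
    snd_una is the oldest unacknowledged sequence number at the start.
    """
    dupacks = 0
    queue: deque = deque()
    sent: List[int] = []
    for ev in events:
        if ev[0] == "ack":
            ack = ev[1]
            if ack == snd_una:
                dupacks += 1
                if dupacks == DUPTHRESH:
                    queue.append(snd_una)  # retransmission queued, not yet on the wire
            elif ack > snd_una:
                snd_una, dupacks = ack, 0  # new data acknowledged
        else:
            # Assumption: the queued retransmission is sent without re-checking snd_una.
            while queue:
                sent.append(queue.popleft())
    return sent

# Order in the trace (after step 2): three duplicate ACKs, then the ACK for the segment.
seen = [("ack", 62673456)] * 3 + [("ack", 62674916), ("tx",)]
# The same model with the segment's ACK arriving before the third duplicate ACK.
earlier = [("ack", 62673456)] * 2 + [("ack", 62674916), ("tx",)]
print(retransmissions(seen, 62673456))     # [62673456]
print(retransmissions(earlier, 62673456))  # []
```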

If the acknowledgement for the packet had arrived 1 microsecond earlier (before the packet was reported "missing" for the third time), there would have been no problem. Intervals this small probably indicate that the "reordering" happens as the packets pass through some intermediate network device that processes packets in parallel.
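The intervals can be computed directly from the trace timestamps. This sketch assumes the `HH:MM:SS.ffffff` format used in the trace and uses only the standard library. `usec_between` is a hypothetical helper.

```python
from datetime import datetime, timedelta

FMT = "%H:%M:%S.%f"  # timestamp format used in the trace above

def usec_between(t0: str, t1: str) -> int:
    """Whole microseconds from timestamp t0 to timestamp t1."""
    return (datetime.strptime(t1, FMT) - datetime.strptime(t0, FMT)) // timedelta(microseconds=1)

EVENTS = [
    ("first ACK up to the segment", "15:28:51.101547"),
    ("third duplicate ACK", "15:28:51.101632"),
    ("ACK covering the segment", "15:28:51.101633"),
    ("retransmission sent", "15:28:51.101645"),
]

for (name0, t0), (name1, t1) in zip(EVENTS, EVENTS[1:]):
    print(f"{name0} -> {name1}: {usec_between(t0, t1)} us")
# first ACK up to the segment -> third duplicate ACK: 85 us
# third duplicate ACK -> ACK covering the segment: 1 us
# ACK covering the segment -> retransmission sent: 12 us
```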

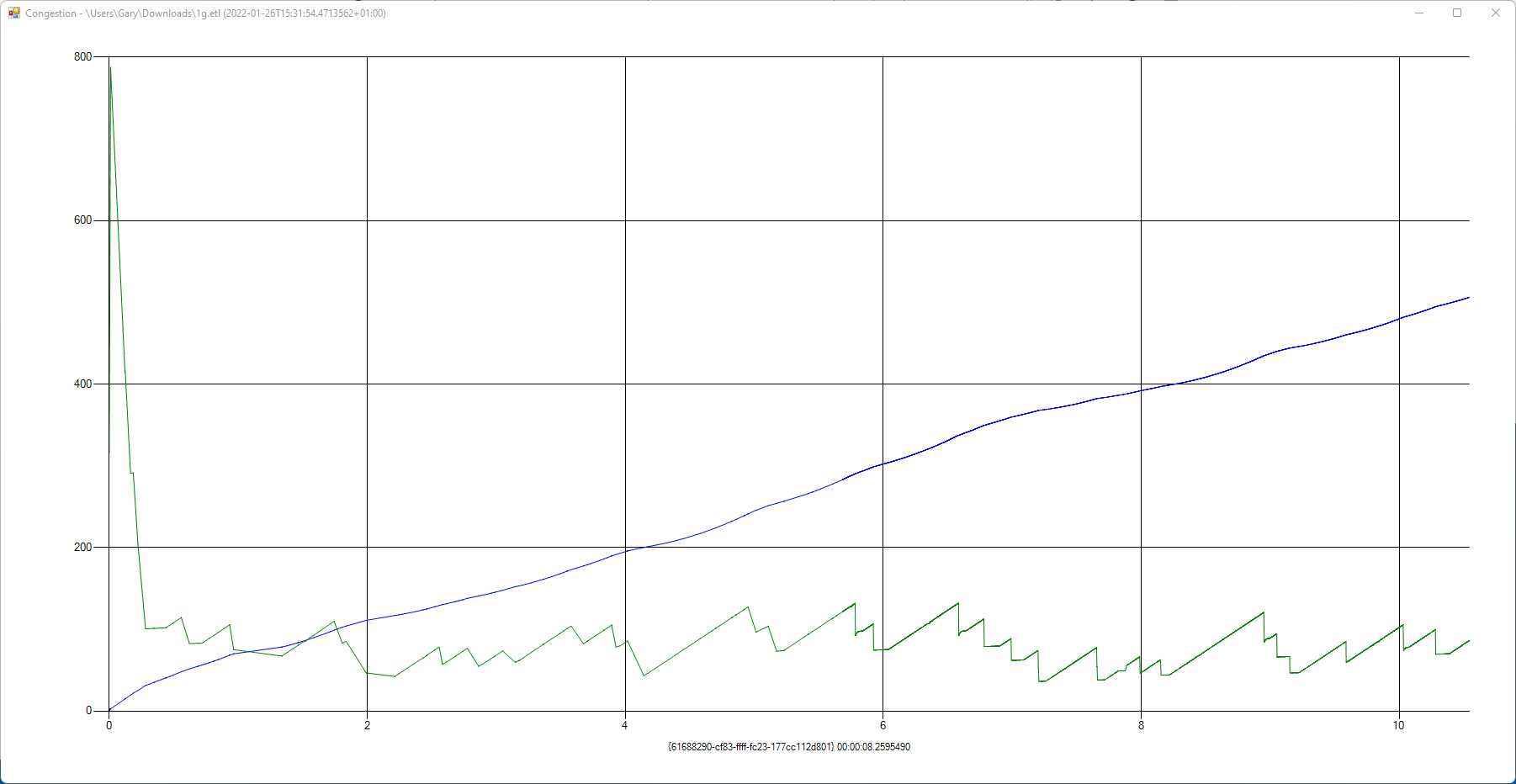

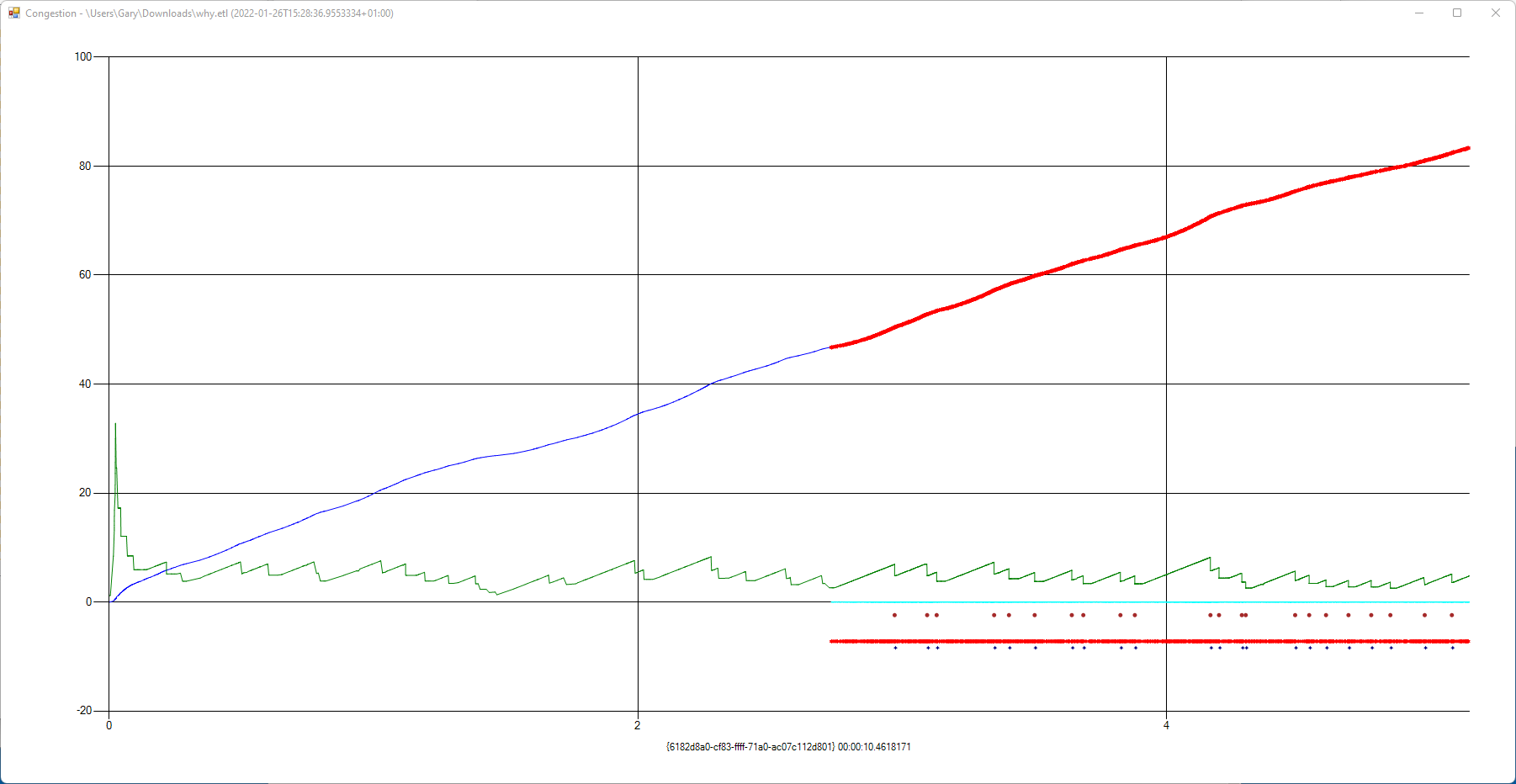

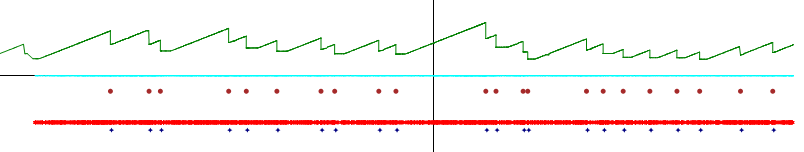

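The "big" effect of these small glitches is on the size of the sender's congestion window. Each unnecessary fast retransmission is treated as a loss, so the window is reduced (halved, with standard Reno-style congestion control). Eliminating this small amount of reordering won't be practical, so the congestion control mechanism needs to be cleverer.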
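One way to be cleverer is to treat a DSACK for a retransmitted segment as evidence that the retransmission was spurious. The sender can then undo the window reduction. The sketch below is loosely in the spirit of the DSACK-based detection described in RFC 3708. It is not the exact algorithm of any particular stack.

It assumes a Reno-style reduction (`ssthresh = max(FlightSize/2, 2*SMSS)`, RFC 5681). For simplicity it sets `cwnd` directly to `ssthresh` and omits the rest of fast recovery and the end-of-episode cleanup. `Sender`, `on_fast_retransmit` and `on_dsack` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional, Set, Tuple

MSS = 1460  # segment size seen in the trace

@dataclass
class Sender:  # hypothetical, heavily simplified sender state
    cwnd: int                   # congestion window in bytes
    ssthresh: int = 1 << 30
    retransmitted: Set[int] = field(default_factory=set)  # segments resent in this episode
    saved: Optional[Tuple[int, int]] = None                # (cwnd, ssthresh) before reduction

    def on_fast_retransmit(self, seq: int, flight_size: int) -> None:
        if self.saved is None:
            self.saved = (self.cwnd, self.ssthresh)
        self.retransmitted.add(seq)
        # Reno-style reduction (RFC 5681); fast-recovery inflation omitted.
        self.ssthresh = max(flight_size // 2, 2 * MSS)
        self.cwnd = self.ssthresh

    def on_dsack(self, seq: int) -> None:
        # A DSACK means the receiver got this segment twice,
        # so the retransmission was unnecessary.
        self.retransmitted.discard(seq)
        # Undo only when every retransmission in the episode proved spurious.
        if not self.retransmitted and self.saved is not None:
            self.cwnd, self.ssthresh = self.saved
            self.saved = None

sender = Sender(cwnd=64 * MSS)
sender.on_fast_retransmit(62673456, flight_size=64 * MSS)
print(sender.cwnd // MSS)  # 32
sender.on_dsack(62673456)
print(sender.cwnd // MSS)  # 64
```

Only undoing once every retransmission in the episode has been DSACKed means that a genuine loss mixed in with spurious ones still leaves the window reduced.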

Gary