Hello @Egoist ,

Sorry, I was too quick to cast aspersions on the "Large Send Offload" functionality. On closer inspection, all of the problems seem to arise outside of Windows.

Let's look at some of the interesting behaviour.

11:39:17.343617 10.132.84.7.58616 > 24.220.62.52.8080: P 63:124(61) ack 76 win 1026 (DF)

11:39:17.343635 truncated-ip 0

11:39:17.343654 truncated-ip 0

11:39:17.343662 truncated-ip 0

11:39:17.343670 10.132.84.7.58616 > 24.220.62.52.8080: . 12412:13872(1460) ack 76 win 1026 (DF)

11:39:17.348628 24.220.62.52.8080 > 10.132.84.7.58616: . ack 63 win 251 <nop,nop,sack 4220:5680> (DF)

11:39:17.349441 24.220.62.52.8080 > 10.132.84.7.58616: . ack 63 win 274 <nop,nop,sack 7140:8316 4220:5680> (DF)

11:39:17.349442 24.220.62.52.8080 > 10.132.84.7.58616: . ack 63 win 297 <nop,nop,sack 9776:11236 7140:8316 4220:5680> (DF)

11:39:17.349442 24.220.62.52.8080 > 10.132.84.7.58616: . ack 63 win 320 <nop,nop,sack 9776:12412 7140:8316 4220:5680> (DF)

11:39:17.349442 24.220.62.52.8080 > 10.132.84.7.58616: . ack 63 win 343 <nop,nop,sack 9776:13872 7140:8316 4220:5680> (DF)

11:39:17.349604 24.220.62.52.8080 > 10.132.84.7.58616: . ack 124 win 343 <nop,nop,sack 9776:13872 7140:8316 4220:5680> (DF)

First, 61 bytes are sent, then three large segments (the three "truncated-ip 0" lines, each carrying 4096 bytes, which the network adapter sends as two 1460-byte packets and one 1176-byte packet) and then a single 1460-byte packet.
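To make the arithmetic concrete, here is a small sketch of how three 4096-byte large sends map onto packets on the wire. The function names are my own, the MSS of 1460 is taken from the packet sizes in the trace, and the starting relative sequence number 124 is simply where the 61-byte send ends.

```python
MSS = 1460  # assumed from the 1460-byte packets seen in the trace


def split_large_send(start_seq, length, mss=MSS):
    """Return the (start, end) sequence ranges of the packets for one large send."""
    ranges = []
    seq = start_seq
    end = start_seq + length
    while seq < end:
        nxt = min(seq + mss, end)
        ranges.append((seq, nxt))
        seq = nxt
    return ranges


def wire_packets(first_seq, sends):
    """Split a series of back-to-back large sends into individual packets."""
    packets = []
    seq = first_seq
    for length in sends:
        packets.extend(split_large_send(seq, length))
        seq += length
    return packets


# Three 4096-byte large sends following the 61-byte send (63:124).
packets = wire_packets(124, [4096, 4096, 4096])
for start, end in packets:
    print(f"{start}:{end}({end - start})")
```

The nine ranges end at 12412, which is where the following 12412:13872 packet starts, and they include 4220:5680, 7140:8316 and 9776:11236, the blocks that appear in the server's sacks.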

Those first 61 bytes are not acknowledged until the sixth ack, long after later packets have been acknowledged with a "sack" (selective acknowledgement). The intervening acks also show that the server is receiving packets "out of order".

"Out of order" reception typically results from packet loss followed by retransmission, but no retransmission is taking place at this point; instead, some packets seem to be "overtaking" others as they cross the network.

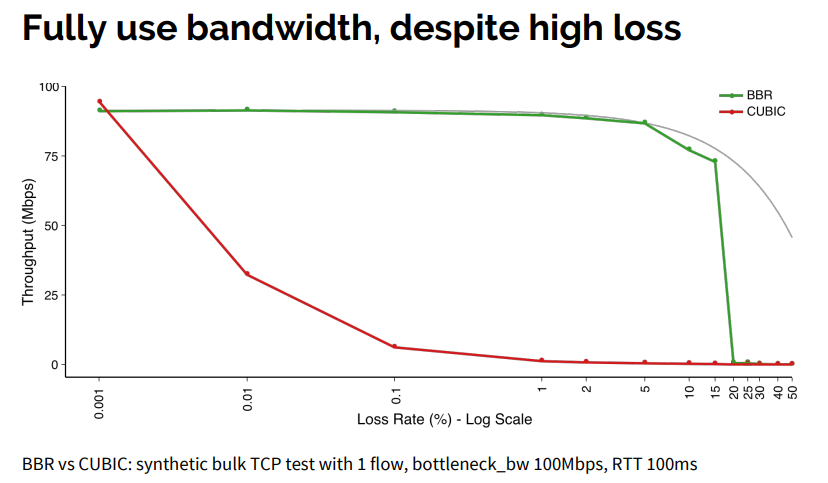
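For anyone who wants to check the gaps for themselves, the following is a rough sketch that reads one of the ack lines from the trace above and lists the byte ranges the server has not yet reported. The regular expression and the function name are my own and only cover the tcpdump text format shown in this post.

```python
import re

# Matches the cumulative ack and the optional sack blocks of a trace line.
ACK_RE = re.compile(r"ack (\d+) win \d+(?: <nop,nop,sack ([\d: ]+)>)?")


def holes(line):
    """Return (ack, gaps): the cumulative ack and the unreported byte ranges."""
    m = ACK_RE.search(line)
    if m is None:
        return None
    ack = int(m.group(1))
    blocks = sorted(
        tuple(int(n) for n in block.split(":"))
        for block in (m.group(2) or "").split()
    )
    gaps = []
    edge = ack  # highest byte known to be contiguous so far
    for start, end in blocks:
        if start > edge:
            gaps.append((edge, start))
        edge = max(edge, end)
    return ack, gaps


sixth_ack = (
    "11:39:17.349604 24.220.62.52.8080 > 10.132.84.7.58616: . ack 124 win 343 "
    "<nop,nop,sack 9776:13872 7140:8316 4220:5680> (DF)"
)
ack, gaps = holes(sixth_ack)
print(ack, gaps)
```

For the sixth ack this reports three gaps (124:4220, 5680:7140 and 8316:9776) below data that the server has already received.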

The frequent packet loss quickly shrinks the "congestion window", which starts at 10 * MSS (14600 bytes) and soon falls to just one MSS (1460 bytes):

SeqNo = 648939448, BytesSent = 0, CWnd = 14600

SeqNo = 648939449, BytesSent = 0, CWnd = 14600

SeqNo = 648939449, BytesSent = 62, CWnd = 14600

SeqNo = 648939511, BytesSent = 61, CWnd = 14662

SeqNo = 648939572, BytesSent = 4096, CWnd = 14662

SeqNo = 648943668, BytesSent = 4096, CWnd = 14662

SeqNo = 648947764, BytesSent = 4096, CWnd = 14662

SeqNo = 648951860, BytesSent = 2313, CWnd = 14662

SeqNo = 648953320, BytesSent = 6815, CWnd = 17643

SeqNo = 648942492, BytesSent = 1460, CWnd = 1460

SeqNo = 648942492, BytesSent = 1460, CWnd = 1460

SeqNo = 648960135, BytesSent = 2920, CWnd = 2920

SeqNo = 648945128, BytesSent = 2920, CWnd = 2920

SeqNo = 648948048, BytesSent = 1460, CWnd = 4380

SeqNo = 648949508, BytesSent = 2922, CWnd = 4382

SeqNo = 648953320, BytesSent = 4383, CWnd = 4383

SeqNo = 648953320, BytesSent = 1460, CWnd = 1460

SeqNo = 648953320, BytesSent = 1460, CWnd = 1460

SeqNo = 648953320, BytesSent = 1460, CWnd = 1460

SeqNo = 648963055, BytesSent = 4380, CWnd = 4380

SeqNo = 648963055, BytesSent = 1460, CWnd = 1460

SeqNo = 648967435, BytesSent = 2920, CWnd = 2920

SeqNo = 648964515, BytesSent = 2920, CWnd = 2920

SeqNo = 648967435, BytesSent = 1460, CWnd = 4380

SeqNo = 648964515, BytesSent = 1460, CWnd = 1460

SeqNo = 648970355, BytesSent = 2920, CWnd = 3066

SeqNo = 648968895, BytesSent = 3066, CWnd = 3066

SeqNo = 648971815, BytesSent = 1460, CWnd = 4380

SeqNo = 648968895, BytesSent = 1460, CWnd = 1460

SeqNo = 648973275, BytesSent = 4526, CWnd = 4526

SeqNo = 648977655, BytesSent = 1607, CWnd = 4527

SeqNo = 648974735, BytesSent = 1460, CWnd = 1460

SeqNo = 648974735, BytesSent = 1460, CWnd = 1460

SeqNo = 648974735, BytesSent = 1460, CWnd = 1460

SeqNo = 648979115, BytesSent = 4380, CWnd = 4380

SeqNo = 648983495, BytesSent = 4383, CWnd = 4383

SeqNo = 648987875, BytesSent = 4386, CWnd = 4386

SeqNo = 648992255, BytesSent = 1467, CWnd = 4387

SeqNo = 648993715, BytesSent = 1861, CWnd = 4781

SeqNo = 648990795, BytesSent = 1460, CWnd = 1460

SeqNo = 648995175, BytesSent = 2920, CWnd = 2920

SeqNo = 648992255, BytesSent = 4380, CWnd = 4380

SeqNo = 648998095, BytesSent = 4381, CWnd = 4381

SeqNo = 649002475, BytesSent = 4384, CWnd = 4384

SeqNo = 649006855, BytesSent = 1977, CWnd = 4897

SeqNo = 649003935, BytesSent = 1460, CWnd = 1460

SeqNo = 649008315, BytesSent = 3427, CWnd = 3427

SeqNo = 649011235, BytesSent = 3429, CWnd = 3429

SeqNo = 649014155, BytesSent = 4041, CWnd = 4041

SeqNo = 649017075, BytesSent = 4954, CWnd = 4954

SeqNo = 649021455, BytesSent = 5867, CWnd = 5867

SeqNo = 649027295, BytesSent = 2215, CWnd = 6595

SeqNo = 649029510, BytesSent = 1460, CWnd = 6595

SeqNo = 649030970, BytesSent = 1460, CWnd = 6595

SeqNo = 649022915, BytesSent = 1460, CWnd = 5840

SeqNo = 649032430, BytesSent = 1460, CWnd = 5840

SeqNo = 649033890, BytesSent = 1460, CWnd = 5840

SeqNo = 649035350, BytesSent = 1460, CWnd = 5840

SeqNo = 649036810, BytesSent = 1460, CWnd = 5840

SeqNo = 649038270, BytesSent = 1460, CWnd = 5840

SeqNo = 649039730, BytesSent = 1460, CWnd = 5840

SeqNo = 649022915, BytesSent = 1460, CWnd = 1460

SeqNo = 649041190, BytesSent = 948, CWnd = 5548

SeqNo = 649042139, BytesSent = 0, CWnd = 5549

The impact that this has on performance can be seen clearly here:

11:39:18.271541 24.220.62.52.8080 > 10.132.84.7.58616: . ack 8600 win 594 <nop,nop,sack 7140:8316 15332:23607 9776:13872> (DF)

11:39:18.275841 24.220.62.52.8080 > 10.132.84.7.58616: . ack 13872 win 616 <nop,nop,sack 9776:10060 15332:23607> (DF)

11:39:18.275916 10.132.84.7.58616 > 24.220.62.52.8080: . 13872:15332(1460) ack 76 win 1026 (DF)

11:39:18.587669 10.132.84.7.58616 > 24.220.62.52.8080: . 13872:15332(1460) ack 76 win 1026 (DF)

11:39:19.196868 10.132.84.7.58616 > 24.220.62.52.8080: . 13872:15332(1460) ack 76 win 1026 (DF)

11:39:20.399855 10.132.84.7.58616 > 24.220.62.52.8080: . 13872:15332(1460) ack 76 win 1026 (DF)

11:39:20.406807 24.220.62.52.8080 > 10.132.84.7.58616: . ack 23607 win 639 (DF)

Windows is trying to fill the gap at 13872:15332 and can only send one MSS at a time because the congestion window has shrunk to that size (i.e. Windows has to wait until this data is acked before it can send any more). In fact, the trace shows this packet being sent four times at ever-increasing intervals (roughly 0.3, 0.6 and 1.2 seconds) until, just over two seconds after the first attempt, it is finally acknowledged.
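The back-off is easy to verify from the timestamps. Here is a short sketch that computes the intervals between the four sends of 13872:15332 and the time until the final ack; the timestamps are copied from the trace above and the helper names are my own.

```python
from datetime import datetime, timedelta

# Send times of 13872:15332 and the time of the ack that finally covers it.
SENDS = [
    "11:39:18.275916",
    "11:39:18.587669",
    "11:39:19.196868",
    "11:39:20.399855",
]
ACKED = "11:39:20.406807"

ONE_US = timedelta(microseconds=1)


def parse(ts):
    """Parse a tcpdump-style HH:MM:SS.ffffff timestamp."""
    return datetime.strptime(ts, "%H:%M:%S.%f")


def gaps_us(stamps):
    """Intervals between consecutive timestamps, in whole microseconds."""
    times = [parse(s) for s in stamps]
    return [(b - a) // ONE_US for a, b in zip(times, times[1:])]


gaps = gaps_us(SENDS)
ratios = [later / earlier for earlier, later in zip(gaps, gaps[1:])]
total_us = (parse(ACKED) - parse(SENDS[0])) // ONE_US

print("intervals (s):", [g / 1_000_000 for g in gaps])
print("ratios:", [round(r, 2) for r in ratios])
print("first send to ack (s):", total_us / 1_000_000)
```

Each interval is close to double the previous one, which is what you would expect from a retransmission timer that backs off exponentially.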

The Windows system always seems to be the victim of the network behaviour without itself having put a foot wrong - there appears to be nothing that can be done from the Windows side to improve the situation.

Gary