Performance optimizations on queries using SQL Change Tracking features.

Problem: Performance optimizations on queries using SQL Change Tracking features.

In this post I will shed some light on what to do when a query that uses SQL Server Change Tracking has performance problems.

a) First, a word on what change tracking is. Change tracking is a feature that was introduced in SQL Server 2008. It plays an important role when you want to do real-time synchronization between two databases. Triggers are not the best way to find out which rows have been modified, because they are tightly bound to the INSERT, UPDATE, or DELETE event itself, and change tracking can be used as an alternative to them. I am not going to deep dive into the design and implementation of change tracking here. You can find some of that information at these links:

https://msdn.microsoft.com/en-us/library/cc280462(v=sql.105).aspx

https://msdn.microsoft.com/en-us/library/bb933875.aspx

Instead, I am going to touch on what you can do when, in a real-time system, a lot of data has been modified on the source database. This may or may not affect the performance of change tracking queries, depending on how they have been designed. Change tracking queries usually involve built-in T-SQL functions that help an application detect what changes have occurred since the last synchronization. For more information, please see this article:

https://msdn.microsoft.com/en-us/library/cc280358(v=sql.105).aspx
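As a rough illustration of those built-in functions, the sketch below reads the current version and checks whether a previously stored version is still usable. It assumes change tracking is already enabled on the database and on SalesLT.Product (the table used in the example later in this post). The stored value 0 is only a placeholder; a real application would load the version it saved after its previous synchronization.

```sql
-- Version to save once this synchronization completes.
DECLARE @current_version bigint = CHANGE_TRACKING_CURRENT_VERSION();

-- Placeholder (assumption): the version saved by the previous synchronization.
DECLARE @last_synchronization_version bigint = 0;

-- Oldest version for which change information is still retained for the table.
DECLARE @min_valid_version bigint =
    CHANGE_TRACKING_MIN_VALID_VERSION(OBJECT_ID('SalesLT.Product'));

IF @last_synchronization_version < @min_valid_version
    PRINT 'Stored version is too old; the client needs to be reinitialized.';
ELSE
    PRINT 'Stored version is usable; incremental changes can be requested.';
```

If change tracking is not enabled, both functions return NULL, so the comparison falls through to the ELSE branch; a production check would handle that case explicitly.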

Change tracking usually involves queries of the type below, in which a join is performed with the result set returned by the CHANGETABLE function. For example:

    -- Obtain incremental changes by using the synchronization version obtained the last time the data was synchronized.
    SELECT
        CT.ProductID, P.Name, P.ListPrice,
        CT.SYS_CHANGE_OPERATION, CT.SYS_CHANGE_COLUMNS,
        CT.SYS_CHANGE_CONTEXT
    FROM
        SalesLT.Product AS P
    RIGHT OUTER JOIN
        CHANGETABLE(CHANGES SalesLT.Product, @last_synchronization_version) AS CT
    ON
        P.ProductID = CT.ProductID

b) More often than not, query designs such as the one above perform absolutely fine when the amount of data is small, but there can be a problem if the source server has a lot of inserts or updates happening.

When change tracking is enabled on a table, SQL Server creates an internal table for it. The naming convention used is: “change_tracking_<object_id>”. SQL Server stores the DML changes for the tracked table in this internal table. Since it is an internal table, it cannot be queried directly, so we have to use the change tracking functions.
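Although the internal table itself cannot be queried directly, its name and approximate size can be looked up through catalog views. The following is a minimal sketch, assuming the login has VIEW DATABASE STATE permission; the column aliases are my own.

```sql
-- List change tracking internal tables with their parent table and row count.
SELECT
    it.name                                  AS internal_table_name,
    OBJECT_SCHEMA_NAME(it.parent_object_id)  AS parent_schema,
    OBJECT_NAME(it.parent_object_id)         AS parent_table,
    SUM(ps.row_count)                        AS internal_row_count
FROM sys.internal_tables AS it
INNER JOIN sys.dm_db_partition_stats AS ps
    ON ps.object_id = it.object_id
   AND ps.index_id IN (0, 1)          -- heap or clustered index only
WHERE it.internal_type = 209          -- 209 = CHANGE_TRACKING
GROUP BY it.name, it.parent_object_id;
```

A large row count here relative to the parent table is a hint that many changes are being retained.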

When querying with the CHANGETABLE function, take care to apply the appropriate business rule in the WHERE clause, such as SYS_CHANGE_OPERATION = 'I' or SYS_CHANGE_OPERATION = 'U', depending on whether you want only new inserts, only new updates, or both to be returned.
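For instance, a query that returns only inserted and updated rows might look like the sketch below. It reuses SalesLT.Product from the earlier example. The version value 0 is a placeholder assumption, and the inner join is my own choice, since deleted rows are filtered out anyway.

```sql
-- Placeholder (assumption): the version saved by the previous synchronization.
DECLARE @last_synchronization_version bigint = 0;

SELECT
    CT.ProductID, P.Name, P.ListPrice,
    CT.SYS_CHANGE_OPERATION
FROM
    CHANGETABLE(CHANGES SalesLT.Product, @last_synchronization_version) AS CT
INNER JOIN
    SalesLT.Product AS P
    ON P.ProductID = CT.ProductID
WHERE
    CT.SYS_CHANGE_OPERATION IN ('I', 'U');  -- skip deletes ('D')
```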

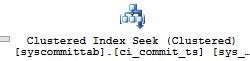

For a slow query, all the general query tuning guidelines still apply. This post will not go into general query tuning techniques for the database engine. Once you have captured the SQL Server execution plan for a query involving a change tracking function such as CHANGETABLE, you will see that the plan also includes the cost associated with the internal tables, as the plan screenshot shows.

As the plan shows, a clustered index seek was performed on an internal table during query execution. Now consider a slightly more complicated scenario, in which the query has multiple joins and CHANGETABLE is one of the joined tables. In that case we may well see clustered index seeks not only on the internal change tracking table but on many user-defined tables as well.
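One way to capture the actual plan and per-table I/O for such a query is sketched below. The query itself is just a stand-in, and the version value 0 is a placeholder assumption.

```sql
SET STATISTICS XML ON;   -- return the actual execution plan as XML with the results
SET STATISTICS IO ON;    -- report reads per table in the messages output

-- Placeholder (assumption): the version saved by the previous synchronization.
DECLARE @last_synchronization_version bigint = 0;

SELECT CT.ProductID, CT.SYS_CHANGE_OPERATION
FROM CHANGETABLE(CHANGES SalesLT.Product, @last_synchronization_version) AS CT;

SET STATISTICS IO OFF;
SET STATISTICS XML OFF;
```

In SQL Server Management Studio, the "Include Actual Execution Plan" option gives the same plan in graphical form.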

Probably the first thing you will check in the execution plan is whether there is any place where a clustered index seek is not happening, which would pinpoint something wrong easily. Also check whether all the tables involved have up-to-date statistics, and whether any blocking is occurring.
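The statistics and blocking checks can be sketched with catalog views and dynamic management views, as below. SalesLT.Product stands in for whichever user tables your query touches, and the DMV query assumes VIEW SERVER STATE permission.

```sql
-- When were the statistics on the user table last updated?
SELECT
    s.name                               AS stats_name,
    STATS_DATE(s.object_id, s.stats_id)  AS last_updated
FROM sys.stats AS s
WHERE s.object_id = OBJECT_ID('SalesLT.Product');

-- Which requests are currently blocked, and by which session?
SELECT
    r.session_id,
    r.blocking_session_id,
    r.wait_type,
    r.wait_time AS wait_time_ms
FROM sys.dm_exec_requests AS r
WHERE r.blocking_session_id <> 0;
```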

If the execution plan seems to indicate nothing wrong, take a second look at the cost associated with the change tracking internal table, whose name begins with change_tracking_<object_id>. There is one more important table used in change tracking, a system table named syscommittab. Please note the cost associated with it as well:

It is imperative that we check the cost associated with these two tables: change_tracking_<object_id> and syscommittab.

1) If the ratio of the total cost of these tables (change_tracking_<object_id>, syscommittab) to the total cost of the entire query is on the higher side, there is a very good chance that the query is performing fast and within the expected SLA.

2) But if the ratio of the total cost of these tables (change_tracking_<object_id>, syscommittab) to the total cost of the entire query is on the lower side, the query may be very slow, and query design issues cannot be ruled out and may need a closer look. A low ratio also implies that the cost associated with the user-defined tables is correspondingly higher. For example, user-defined tables whose cost adds up to 90% of the plan leave a ratio of only 10%, and that usually means a longer wait for the query response.
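To make the ratio concrete, the arithmetic can be worked through with made-up numbers. The operator costs below are hypothetical percentages of the kind you would read off a graphical plan; they are not taken from a real plan, and SalesLT.SalesOrderDetail is only an example of a second user table.

```sql
-- Hypothetical operator costs (percent of total plan cost) read off a plan.
DECLARE @plan_costs TABLE (plan_object sysname, cost_percent decimal(5, 2));

INSERT INTO @plan_costs (plan_object, cost_percent)
VALUES
    (N'change_tracking_<object_id>',  6.0),
    (N'syscommittab',                 4.0),
    (N'SalesLT.Product',             55.0),
    (N'SalesLT.SalesOrderDetail',    35.0);

-- Share of the plan cost that belongs to the change tracking tables.
SELECT
    SUM(CASE WHEN plan_object LIKE N'change[_]tracking[_]%'
               OR plan_object = N'syscommittab'
             THEN cost_percent ELSE 0 END)  AS change_tracking_cost_percent,
    SUM(cost_percent)                       AS total_cost_percent
FROM @plan_costs;
```

With these numbers the change tracking tables account for 10 percent of the plan and the user tables for 90 percent, which is the situation described in point 2).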

In case 2), check whether your query uses any subquery. If it does, see whether you can avoid it, as a subquery can lead to a bad execution plan. Try using a normal join condition instead of the subquery and test the performance. Alternatively, see whether you can express the same logic with a WITH clause, that is, a common table expression:

https://msdn.microsoft.com/en-us/library/ms175972(v=sql.100).aspx
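As a sketch of that kind of rewrite, the two queries below return changed products that appear on at least one order line, first with a subquery and then with a common table expression and a join. SalesLT.SalesOrderDetail and the CTE name OrderedProducts are assumptions for illustration. The optimizer may well produce the same plan for both forms, so compare the actual plans before settling on one.

```sql
-- Placeholder (assumption): the version saved by the previous synchronization.
DECLARE @last_synchronization_version bigint = 0;

-- Subquery form.
SELECT CT.ProductID, CT.SYS_CHANGE_OPERATION
FROM CHANGETABLE(CHANGES SalesLT.Product, @last_synchronization_version) AS CT
WHERE CT.ProductID IN (SELECT D.ProductID FROM SalesLT.SalesOrderDetail AS D);

-- Same logic with a common table expression and a plain join.
WITH OrderedProducts AS
(
    SELECT DISTINCT D.ProductID
    FROM SalesLT.SalesOrderDetail AS D
)
SELECT CT.ProductID, CT.SYS_CHANGE_OPERATION
FROM CHANGETABLE(CHANGES SalesLT.Product, @last_synchronization_version) AS CT
INNER JOIN OrderedProducts AS OP
    ON OP.ProductID = CT.ProductID;
```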

Conclusion

c) This post is just a pointer to where to start looking for the problem if you fall under point 2) above. The only rule I can recommend is to aim for the ratio of the total cost of the change tracking tables (change_tracking_<object_id>, syscommittab) to the total cost of the entire query to be on the higher side, for example 60-80%. This should help ensure that a query involving change tracking performs within expectations and returns results quickly enough. The reason I say this is that the change tracking tables are relatively small compared to the source tables, which hold all the data, since they keep only recently modified rows. If most of the cost is associated with these tables, then the query should return results quickly, considering that a clustered index seek on an internal change tracking table is bound to return results faster than a clustered index seek on its parent table.

Thx

Ashutosh Sharma

SQL Consultant

Disclaimer

The views expressed on this website/blog are mine alone and do not reflect the views of my company. All postings on this blog are provided “AS IS” with no warranties, and confers no rights.