The Storage option in Azure

- by Einar Ingebrigtsen

The storage option in Azure is very powerful. It stores your data redundantly, so you don’t have to worry about losing it to a hardware failure. At least three copies of your data are always kept, and some of the options keep copies across multiple facilities. You can read more in depth about how the redundancy works here.

A brilliant thing that comes with Azure Storage is the CDN (Content Delivery Network). It enables you to take content and spread it across a network, making the content available closer to the user. This improves not only performance but also availability, since the content is served from different facilities around the world.

Everything inside Azure Storage has been optimized for scalability, performance and redundancy. It has also been made easily accessible through REST APIs and wrappers for the most commonly used environments. There are also quite a few open source contributions out there, so you should be able to use Azure Storage in most cases.

This post focuses on Azure Storage from a developer perspective: how you can make use of the different aspects of Azure Storage in your application.

Emulators

If you want to test things out without having to work directly with Azure, there is an emulator for Azure Storage that emulates it all locally. You will need to download the SDK to get it. This post won’t go into depth with how that all works, but you can read more about it here.

Storage Account

Before we get started with using the storage account, we’re going to need a storage account in Azure. If you already have one, you can skip this part.

Click the button at the bottom of the screen and select Data Services -> Storage -> Quick Create.

This should slide out a form to the right where you fill in the details. First is the URL: it must be unique across all of Azure, and the UI will continuously validate whether it is available. Second is the location or affinity group. If you already have an affinity group you can reuse it here, or simply choose the location in which you want the account to be. Then click the “CREATE STORAGE ACCOUNT” button at the bottom.

Provisioning probably takes a minute or so. When it’s done, the account should sit under the Storage tab in the navigation menu to the left, with its status shown as online.

Working with the Storage Account

Coming at it from .NET, the different services in Azure Storage share a couple of things. The first is the NuGet package: WindowsAzure.Storage. Add the NuGet reference to your .NET project either through the UI or the Package Manager Console. This will pull down a few references.

The second thing they share is how we access the account programmatically. It works the same way across the different services.

First we need a connection string. You can keep it in your AppSettings if you’d like, but I’ll leave that up to you to decide later. For now we’re just going to hardcode it. Keeping it in your App.config is probably something you’d want, especially if you’re going to switch between the emulator when running locally (DEBUG) and the real deal when running in production.

The format of the connection string looks like this:

DefaultEndpointsProtocol=https;AccountName=[INSERT ACCOUNTNAME];AccountKey=[INSERT ACCOUNTKEY]

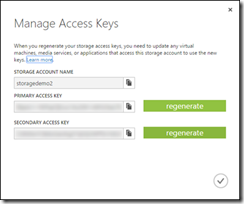

In order to create this, we’re going to have to get the key for the storage account. It is accessible by selecting the storage account (without navigating into it) and then clicking the button in the toolbar at the bottom.

From the popup we can get the account key. The account name is the name of the storage account.

var connectionString = "DefaultEndpointsProtocol=https;AccountName=storagedemo2;AccountKey=[SECRET]";

var account = CloudStorageAccount.Parse(connectionString);

With this we now have the account ready that we can use for all of the remaining code in this post.
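The switch between the emulator and the real account mentioned above could be sketched roughly like this. This is only one way of doing it: the `StorageAccountFactory` class and the `StorageConnectionString` appSettings key are names made up for this example, and the project is assumed to reference System.Configuration.

```csharp
using System;
using System.Configuration;
using Microsoft.WindowsAzure.Storage;

public static class StorageAccountFactory
{
    // Hypothetical appSettings key, chosen for this sketch only.
    private const string SettingName = "StorageConnectionString";

    // Debug builds use the local emulator, all other builds use the configured account.
    public static CloudStorageAccount Create()
    {
#if DEBUG
        return Create(true);
#else
        return Create(false);
#endif
    }

    public static CloudStorageAccount Create(bool useEmulator)
    {
        if (useEmulator)
        {
            // Built-in account that points at the local storage emulator.
            return CloudStorageAccount.DevelopmentStorageAccount;
        }

        var connectionString = ConfigurationManager.AppSettings[SettingName];
        if (string.IsNullOrWhiteSpace(connectionString))
        {
            throw new InvalidOperationException("Missing appSettings entry: " + SettingName);
        }

        return CloudStorageAccount.Parse(connectionString);
    }
}
```

With something like this in place, the rest of the code can call `StorageAccountFactory.Create()` instead of parsing a hardcoded string.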

Blobs

If you’re looking for a place to store unstructured data, Blob Storage could be the place. It is perfect for storing large amounts of text or binary data. It is also an excellent candidate for storing smaller static data that you want to make available through the CDN that Azure offers. Good candidates for this could be images, video or audio files for streaming, or files that you want distributed access to. It is also a great candidate for storing backups and disaster recovery data.

Within your storage account you can create containers, and within these you can put blobs. There is no nested folder structure or anything; it’s just that one level, the container.

The first thing we need is a CloudBlobClient instance. We get it from our account:

var blobClient = account.CreateCloudBlobClient();

Once we have that, we need a reference to a container. Containers can be created using the Visual Studio plugin through the Server Explorer, or any other tool. But we can also create the container programmatically if it does not exist:

var container = blobClient.GetContainerReference("mycontainer");

container.CreateIfNotExists();

By default, a container and its contents are private. We can change this behavior by setting the permissions, making the blobs in it publicly readable if we want to:

    container.SetPermissions(
        new BlobContainerPermissions
        {
            PublicAccess = BlobContainerPublicAccessType.Blob
        });

Once we have the container we can start putting things into it. There are two blob types we can work with: block and page. There are some fundamental differences between the two, which you can read more in-depth about here. In short, block blobs are the most common, but at the time of writing they are limited to 200 GB in size, while page blobs can be up to 1 TB and are typically used to hold VHD images because they handle random reads and writes better. We’re sticking with block blobs for this sample:

var blockBlob = container.GetBlockBlobReference("blockblob");

    using (var stream = File.OpenRead(@"c:\myFile.txt"))
    {
        blockBlob.UploadFromStream(stream);
    }

You can upload from bytes directly if you’d like, or you could use a MemoryStream or any other stream for that matter. If we want to download a blob, we simply do the following:

var blockBlob = container.GetBlockBlobReference("blockblob");

    using (var stream = File.OpenWrite(@"c:\myOtherFile.txt"))
    {
        blockBlob.DownloadToStream(stream);
    }

As with uploading, you can download to memory or to any other stream as well.
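As a rough illustration of working without files on disk, the sketch below uploads text and a byte array and reads both back in memory. The `BlobRoundTrip` class and the blob names are placeholders for this example, and `container` is assumed to be the container reference from earlier.

```csharp
using System.IO;
using System.Text;
using Microsoft.WindowsAzure.Storage.Blob;

public static class BlobRoundTrip
{
    public static string Run(CloudBlobContainer container)
    {
        // Text goes up and comes back down without touching the file system.
        var textBlob = container.GetBlockBlobReference("notes.txt");
        textBlob.UploadText("Hello from Blob Storage");
        string text = textBlob.DownloadText();

        // Raw bytes: upload the whole array, then download into a MemoryStream.
        byte[] payload = Encoding.UTF8.GetBytes("binary payload");
        var binaryBlob = container.GetBlockBlobReference("payload.bin");
        binaryBlob.UploadFromByteArray(payload, 0, payload.Length);

        using (var memory = new MemoryStream())
        {
            binaryBlob.DownloadToStream(memory);
            byte[] downloaded = memory.ToArray();
            return text + " / " + downloaded.Length + " bytes";
        }
    }
}
```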

Blobs can be deleted:

var blockBlob = container.GetBlockBlobReference("blockblob");

blockBlob.Delete();

If you’d like you can list blobs in a container by calling the .ListBlobs() method on the container.
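Listing could look something like the following sketch. The `BlobListing` class is a made-up name for this example. The listing returns items of different kinds, so the code checks for block blobs before reading blob-specific properties.

```csharp
using System;
using Microsoft.WindowsAzure.Storage.Blob;

public static class BlobListing
{
    public static void Print(CloudBlobContainer container)
    {
        // Second argument (useFlatBlobListing) set to true: return every blob
        // in the container as a flat list.
        foreach (IListBlobItem item in container.ListBlobs(null, true))
        {
            var blockBlob = item as CloudBlockBlob;
            if (blockBlob != null)
            {
                Console.WriteLine("{0} ({1} bytes)", blockBlob.Name, blockBlob.Properties.Length);
            }
            else
            {
                // Page blobs and other item types: just show where they live.
                Console.WriteLine(item.Uri);
            }
        }
    }
}
```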

Tables

Unlike SQL, Table Storage is schemaless and is structured a bit like a document database. Instead of having a fixed schema with columns for each table, you can store pretty much whatever you want in a table. Every entity can be different if you want it to be. It is a very different model from SQL and relational thinking, and in some cases it proves to be a better model as well.

It seems that the idea behind NoSQL has been lost in many cases. The “No” does not mean no as in “do not use”; it means “Not Only”. This is a very good idea and something you should really be applying to other things as well. One size fits all is not really a scalable idea; it is more convenient thinking than a realistic way to go. This is where Table Storage can provide an alternative. In fact, you might find that you want to store the exact same data in both SQL and Table Storage, with the copy in Table Storage structured a bit differently and optimized for read throughput, while the SQL copy might prove a better fit for querying and generating reports.

Tables are different in nature from SQL tables. Being without a schema means you can’t define things like a primary key or a composite key, or even choose what will be indexed. Every entity has two fixed keys: PartitionKey and RowKey. Together they uniquely identify the entity. The implication is that Azure keeps data with the same PartitionKey close together and easily retrievable.

Before we start using a table, we’re going to need to create the table in Azure. You can either do this through the tool that gets added to your Server Explorer when installing the Azure SDK, or you can download a separate tool for doing so. I’ve been using Azure Storage Explorer since the beginning of my Azure dev days and find it very useful. It can be downloaded here.

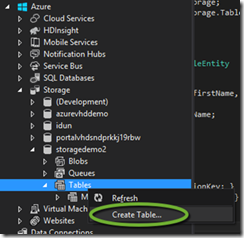

From Visual Studio you can simply navigate to the Server Explorer, connect to your account and go to Storage -> Tables. Right-click it and select “Create Table”.

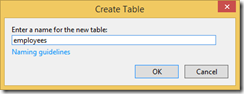

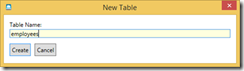

Give it a name, in our case employees. Names are case-insensitive; you can read more about the naming guidelines here.

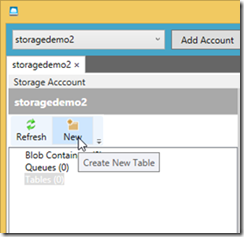

If you want to use the Azure Storage Explorer, just set up your account and then select Tables and then click the New button.

Give it a name; employees.

Now that we have our table we can start using it in our code. The first thing you need is an instance of something called CloudTableClient. We get it from the CloudStorageAccount instance we got earlier:

var tableClient = account.CreateCloudTableClient();

With this we can get a reference to the table:

var table = tableClient.GetTableReference("employees");

With the table reference, we could programmatically create the table in Azure if it does not exist so we don’t have to manually go and create it as we did. The CloudTable type has a method called CreateIfNotExists and an async version of it as well.
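A minimal sketch of that programmatic creation, assuming the `account` instance from earlier, might look like this. The `TableSetup` class is just a name used for this example.

```csharp
using System;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Table;

public static class TableSetup
{
    public static CloudTable EnsureEmployeesTable(CloudStorageAccount account)
    {
        var tableClient = account.CreateCloudTableClient();
        var table = tableClient.GetTableReference("employees");

        // Returns true only when this call actually created the table.
        bool created = table.CreateIfNotExists();
        Console.WriteLine(created ? "Created table" : "Table already existed");

        return table;
    }
}
```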

Everything that we want to do to the table is now about TableOperations. Whether you’re inserting an entity, updating it, deleting it or finding it, you have to go through a TableOperation. Before we get into that in detail, let’s introduce an entity called Employee:

    public class Employee : TableEntity
    {
        public Employee() { }

        public Employee(string firstName, string lastName)
        {
            PartitionKey = lastName;
            RowKey = firstName;
        }

        // The last name is stored as the PartitionKey.
        public string LastName
        {
            get { return PartitionKey; }
            set { PartitionKey = value; }
        }

        // The first name is stored as the RowKey.
        public string FirstName
        {
            get { return RowKey; }
            set { RowKey = value; }
        }

        public string PhoneNumber { get; set; }
    }

A very simple entity, but one point to emphasize: we’re saying that uniqueness is the combination of the first and last name of the employee. Obviously, in the real world this is not true. Typically the PartitionKey could be something like the department and the RowKey the unique employee number. But in the interest of simplicity, let’s stick with this. To create an instance and insert it into the table you do the following:

var employee = new Employee("John", "Smith");

var operation = TableOperation.Insert(employee);

table.Execute(operation);
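For comparison, the more realistic key design mentioned above (department as PartitionKey, employee number as RowKey) might be sketched like this. The `DepartmentEmployee` class is hypothetical and not used elsewhere in this post; the zero-padding is my own addition so that RowKey values, which are strings, sort in numeric order.

```csharp
using Microsoft.WindowsAzure.Storage.Table;

// Hypothetical entity for this sketch only.
public class DepartmentEmployee : TableEntity
{
    public DepartmentEmployee() { }

    public DepartmentEmployee(string department, int employeeNumber)
    {
        // All employees in a department share a partition.
        PartitionKey = department;

        // Zero-padded to eight digits so string ordering matches numeric ordering.
        RowKey = employeeNumber.ToString("D8");
    }

    public string FirstName { get; set; }

    public string LastName { get; set; }

    public string PhoneNumber { get; set; }
}
```

Inserting works exactly as before, for example `table.Execute(TableOperation.Insert(new DepartmentEmployee("Engineering", 42)))`.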

To retrieve an existing entity we can do as follows:

var retrieveOperation = TableOperation.Retrieve<Employee>("Smith", "John");

var result = table.Execute(retrieveOperation);

var employee = result.Result as Employee;

Then we can do some changes to it and replace it in the table:

employee.PhoneNumber = "212-555-1234";

var replaceOperation = TableOperation.Replace(employee);

table.Execute(replaceOperation);

These are the most common ones. And of course you can delete entities as well.

There is even an InsertOrReplace operation that inserts the entity or replaces the existing one, so you don’t have to know whether it already exists.
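Both operations could be wrapped roughly as in the sketch below. The `EmployeeOperations` class is a made-up name. Note that a delete requires an ETag on the entity; setting it to `"*"` here is a choice made for this example and means the delete goes ahead regardless of which version is stored.

```csharp
using Microsoft.WindowsAzure.Storage.Table;

public static class EmployeeOperations
{
    // Inserts the entity, or replaces the stored one if the keys already exist.
    public static void Upsert(CloudTable table, ITableEntity entity)
    {
        table.Execute(TableOperation.InsertOrReplace(entity));
    }

    public static void Delete(CloudTable table, ITableEntity entity)
    {
        // "*" matches any stored version, making the delete unconditional.
        entity.ETag = "*";
        table.Execute(TableOperation.Delete(entity));
    }
}
```

If the entity was retrieved first, its ETag is already populated and can be left as is, in which case the delete only succeeds if nobody changed the entity in the meantime.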

We can even query the table:

    var query = new TableQuery<Employee>().Where(
        TableQuery.GenerateFilterCondition("PhoneNumber", QueryComparisons.Equal, "212-555-1234"));

var queryResult = table.ExecuteQuery(query).ToArray();

Conditions can be combined using TableQuery.CombineFilters(), letting you chain together whatever filters you want to query with. Another aspect of querying is that sometimes you simply don’t want the entire object, just one of its properties. This can save some bandwidth as well. To accomplish this you have to use something called DynamicTableEntity and an EntityResolver. To read more about how projections like this work, you should read this blog post.

var query = new TableQuery<DynamicTableEntity>().Select(new string[] { "PhoneNumber" });

EntityResolver<string> resolver = (pk, rk, ts, props, etag) => props.ContainsKey("PhoneNumber") ? props["PhoneNumber"].StringValue : null;

var queryResult = table.ExecuteQuery(query, resolver, null, null).ToArray();
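Going back to combining conditions, a small sketch of TableQuery.CombineFilters() could look like this. The `EmployeeFilters` class and the choice of filters are assumptions made for the example; the PartitionKey filter relies on the last name being the PartitionKey, as in the Employee entity above.

```csharp
using Microsoft.WindowsAzure.Storage.Table;

public static class EmployeeFilters
{
    // Builds a filter string matching employees named Smith with the given phone number.
    public static string SmithsWithPhone(string phoneNumber)
    {
        string byLastName = TableQuery.GenerateFilterCondition(
            "PartitionKey", QueryComparisons.Equal, "Smith");

        string byPhone = TableQuery.GenerateFilterCondition(
            "PhoneNumber", QueryComparisons.Equal, phoneNumber);

        // Both conditions must hold.
        return TableQuery.CombineFilters(byLastName, TableOperators.And, byPhone);
    }
}
```

The resulting string is passed to Where() just like a single condition: `new TableQuery<Employee>().Where(EmployeeFilters.SmithsWithPhone("212-555-1234"))`. Including the PartitionKey in the filter also lets the query stay within one partition instead of scanning the whole table.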

Queues

The Queues in Azure Storage are not to be mistaken for MSMQ, RabbitMQ or anything similar. They are very capable and, again, built for scale, performance and redundancy. Queues are designed to store large numbers of messages that can be accessed from anywhere in the world via authenticated calls. A single message can be up to 64 KB in size and there can be millions of these per queue, basically limited by the capacity limit of the storage account. A storage account can contain a total of 500 TB of blob, queue and table data.

Queues are typically used for scheduling work to be processed asynchronously, or for communication between decoupled parts of your system running in different app services. That said, I would recommend having a look at Azure Service Bus for other ways of letting decoupled parts of your system talk to each other.

From the account we can get the CloudQueueClient.

var queueClient = account.CreateCloudQueueClient();

From this we can get a queue reference and we can make sure it exists programmatically:

var queue = queueClient.GetQueueReference("myqueue");

queue.CreateIfNotExists();

Inserting a message is simple. We create an instance of CloudQueueMessage and add it to the queue:

var message = new CloudQueueMessage("This is a message");

queue.AddMessage(message);

You can add messages that aren’t strings as well. There are overloads of the constructor that take a byte array and more.

Typically, another process or another part of your application will run a loop that waits for messages. You have two options: peeking at the message at the front of the queue, or getting it. Peeking only gives you a look at the message without changing its visibility, while getting it gives you the message and temporarily hides it from everyone else.

To peek at the tip of the queue, do the following:

message = queue.PeekMessage();

Then, if you’re getting it:

message = queue.GetMessage();

If your system has a multi-part workflow related to the message and you want to keep the message on the queue and visible but change its status, you can simply change its content and update it on the queue:

message.SetMessageContent("Updated");

    queue.UpdateMessage(message,
        TimeSpan.FromSeconds(0.0),
        MessageUpdateFields.Content | MessageUpdateFields.Visibility);

Completely deleting a message:

message = queue.GetMessage();

queue.DeleteMessage(message);

By using the GetMessages() method on the queue you can get a batch of messages, up to 32 at a time, along with a visibility timeout.
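Putting the pieces together, the waiting loop described earlier could be sketched as below. This is only an outline: the `QueueWorker` class, the 30 second visibility timeout and the 5 second back-off are all choices made for this example, and `handle` stands in for whatever your application does with a message.

```csharp
using System;
using System.Threading;
using Microsoft.WindowsAzure.Storage.Queue;

public static class QueueWorker
{
    // Polls the queue until cancellation is requested.
    public static void Run(CloudQueue queue, Action<string> handle, CancellationToken token)
    {
        while (!token.IsCancellationRequested)
        {
            // Hide the message from other consumers for 30 seconds while we work on it.
            CloudQueueMessage message = queue.GetMessage(TimeSpan.FromSeconds(30));

            if (message == null)
            {
                // Nothing on the queue right now; wait a bit before asking again.
                Thread.Sleep(TimeSpan.FromSeconds(5));
                continue;
            }

            handle(message.AsString);

            // Delete only after the handler has finished. If the handler throws,
            // the exception leaves this method and the message becomes visible
            // again once the timeout expires.
            queue.DeleteMessage(message);
        }
    }
}
```

Deleting after processing rather than before means a crashed worker does not lose the message, at the cost of a message occasionally being handled more than once.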

Files

An option that Azure provides in addition to Blob Storage is something called Files. It is all about exposing storage as network shares to your applications or virtual machines running in Azure. It supports the SMB 2.1 protocol, enabling you to map a share as a drive directly. This is a great feature for legacy apps that rely on this type of storage for storing files. It is also accessible programmatically. This post won’t be covering it, but you can find more information here.

Conclusion

Working with storage is pretty simple. The APIs are focused and all the nitty-gritty details are hidden, so you can focus on delivering business value. Having things scale for you without having to think about it is a great relief when making software. Azure has come a long way since its initial release, with a constant focus on making things simpler, more accessible and more robust.
