Recovering from Log File Corruption

I was with a customer this week how had experienced an issue with their server, and it left them with a corrupt transaction log. Obviously I can't and won't go into detail about who it was and the specifics of how it happened, but this customer experienced a hardware issue and some faulty memory, which lead to an unclean shutdown of the server and a corruption in the transaction log. When they system eventually came online they had a database that could not be used.

Now after trying to bring the database online and seeing the error messages that told us that it was the transaction log that was the issue, we needed to determine what our options were and how best to deal with this. Obviously we don't want to lose any data, but since this is a production system, the clock is certainly ticking. The first question for me was " what is the SLA for this system and how much data can you afford to lose ??" I got the answer that I was expecting which was "the system needs to be up A.S.A.P and we can't lose any data". A stock response, but at least we all know what we are working towards.

So the next step was to find out what backups we have, and are they an option. The customer had the database in full recovery mode and did have a full backup and transaction log backup schedule, however the transaction log was only being backed up a few times a day, and so if we went back to those we would be losing around 4 hours worth of data. The customer didn't exactly like the sound of that, so I said lets keep that in our back pocket and see what else we can explore.

Next we tried to get CheckDB to rebuild the log for us, which is something that it is able to do under the right conditions. We put the database into EMERGANCY MODE using the following command:

ALTER DATABASE <dbname> SET EMERGENCY, SINGLE_USER

This put the database into a bypass recovery mode - basically bringing the database up but without the transaction log file. Now we can run a few commands to try and fix the issue. In this case we used the REPAIR_ALLOW_DATA_LOSS feature of CheckDB to try and fix the issue:

DBCC CHECKDB('<dbname>', REPAIR_ALLOW_DATA_LOSS)

When the database is in EMERGENCY MODE and you specify repair allow data loss, CheckDB will try and rebuild the log file if it is the log file that is causing the issue, and recovery can't be completed. We executed this command but straight away we hit another problem. The hardware fault had not only corrupted the log, but it had also caused the server to just turn off, which is a non-clean shutdown of the system. One thing that this repair option requires is a clean shutdown of SQL Server, and so all we got from CheckDB was an error message saying that the log file could not be rebuilt because the database was not shut down cleanly. So that rules out that as an option.

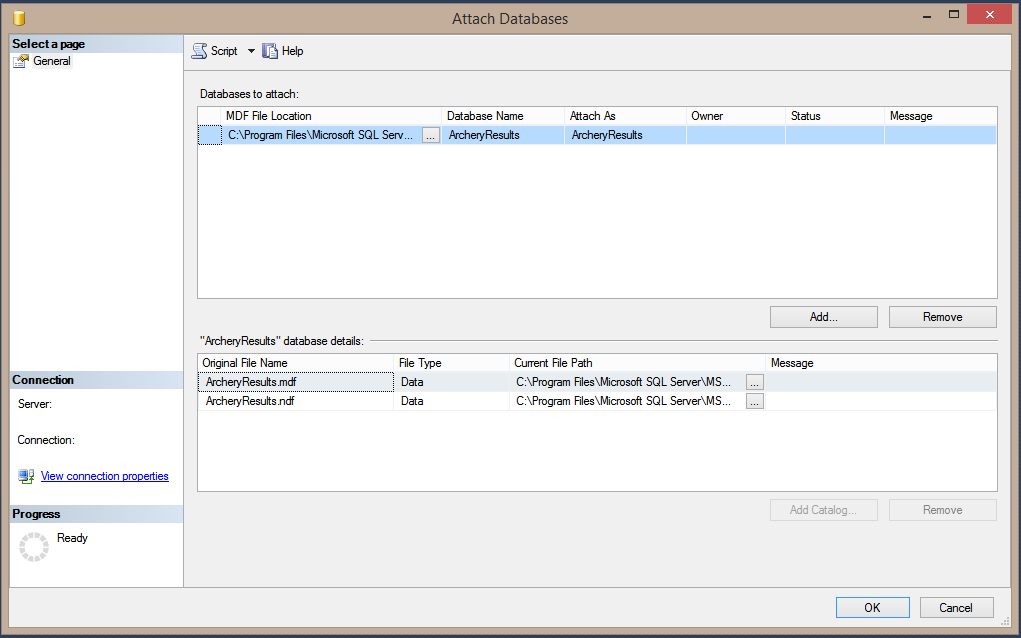

So next we tried something a little different but could have worked. We detached the database, and then tried to re-attached the database, but we removed the reference to the log file in the hopes that the attach process would create a new log file for us:

Now this didn't work either and basically for the same reason as CheckDB, the database was not cleanly shut down. When attaching a database, the UI doesn't use sp_attach_db as you might expect since that is the old syntax. What actually happens is that it uses CREATE DATABASE ..... FOR ATTACH and this command has the addition option ATTACH_REBUILD_LOG. This however requires the database to have been cleanly shutdown and since we didn't have that, the command failed in the same way as CheckDB did.

For completeness, we also tried sp_attach_single_file_db to see if that would also work, but as I am sure you have already guessed, this didn't work either fro the same reason as before - no clean shutdown.

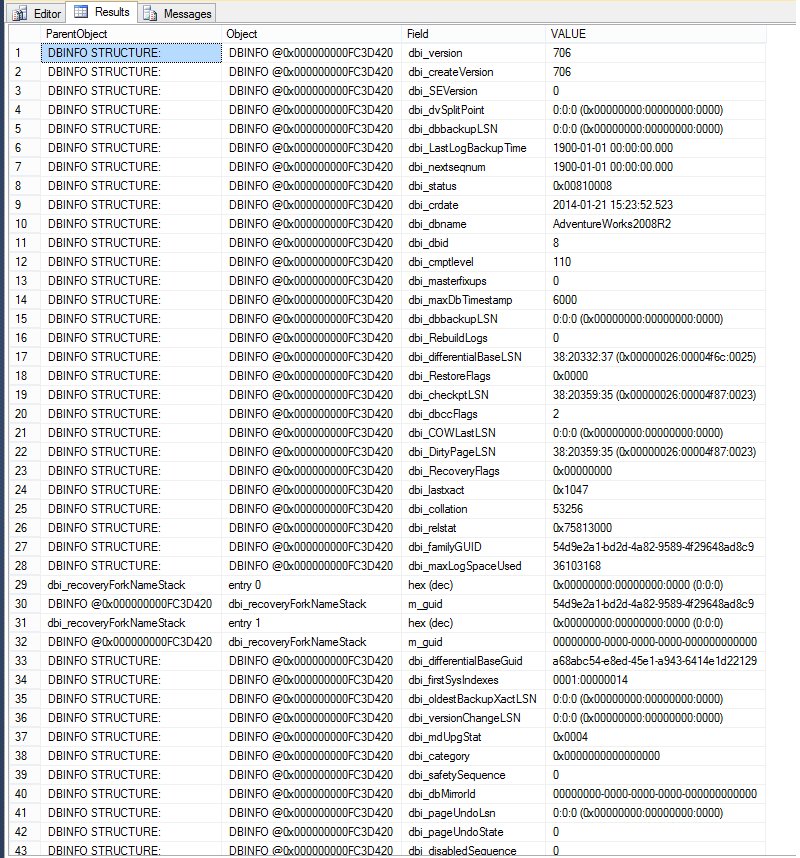

So now we tried something to see if it would work, and that was to try and trick SQL into thinking a new log file was for this actual database. What we did was we detached the broken database, and moved the data files out to a new location. We then created a blank database with the same name, same files, in their original locations, and then we shutdown the SQL Service. We then copied just the old data files over the top of the new ones, leaving the new log file in place, and we re-started the SQL Service. The hope being that it would see that new file and away we go. Alas this didn't work because SQL could tell that this log file was not for this database. The header information for the database included a number of LSN's so it knows where the last checkpoint was, last backup etc ..... and obviously these would not be present in this new log file and it wouldn't have been too hard for SQL to determine that this was not the log file for this database and it would not have been able to run crash recovery on the database during start-up.

So now we have a database that still doesn't work, and we have a log file that can't be used, but it does mean we can run the next command, which is to force SQL Server to create new log file for this database. We can do this using the ALTER DATABSE command and big thanks goes out to my colleagues who pointed the syntax for this to me. We put the database back into EMERGENCY MODE and then told SQL to REBUILD the log:

ALTER DATABASE <dbname> SET EMERGENCY, SINGLE_USER

GO

ALTER DATABASE <dbname> REBUILD LOG ON (NAME=<logical file name>, FILENAME='<full path to new file>')

GO

We ran this command and a few seconds later we had a brand new transaction log for this database. Now we put the database back into MULTI_USER ONLINE mode, and now all we had to do was run a full DBCC CHECKDB to see if we had any other issues.

The big point here to remember is that we "may" have lost some data, and we will not know what we have lost. Any transaction that were in the transaction log, that data that was not hardened to disk from a CHECKPOINT will be lost for good. For the customer they said they could live with that, since a CHECKPOINT will happen a lot more often than their backup routine did. Therefore by default they can say that have lost minimal data.

I don't advocate this as a method for all log recovery situations, and nothing can replace a good backup strategy. If you can't afford to lose more than a few minutes worth data, then backup your transaction logs every few minutes so that you can recover the data. Set-up mirroring or Always-On so that you have another copy of the database to hand so that you can get back online faster. Nothing in this post replaces a good HA/DR strategy and I want to make sure that is clear. What we did here is show that it is possible to get a database back up and running after a transaction log corruption, at the potential cost of some unknown data loss.