"Memory Camping"

On occasion, Windows may report a failure to start a new process due to insufficient virtual memory, physical memory, "storage" or system resources.

While there are many possible (genuine) reasons for this shortage (most often visible through Task Manager, Resource Monitor or PerfMon), sometimes there is no obvious resource that is in short supply, or exhausted - so why would the OS report this?

One possible reason is "memory camping" - first a bit of background on processes, virtual memory and working sets...

Windows works on the principle of "virtual" memory - address space that is backed by physical RAM and, on disk, by page files.

Basic tenets:

- Physical RAM that is not allocated is wasted

- Physical RAM which is allocated but does not contain data that has been recently accessed is wasted

In the case of the first scenario, Vista now uses more physical RAM for the system cache - if a process or the kernel needs RAM and there is not enough "free" then the cache can be trimmed in the same way that processes are.

Trimming is what occurs in the second scenario - we have pages of memory allocated that have aged (i.e. not been accessed in some time) and will in time be cleaned up and eventually paged out to disk.

The amount of virtual memory belonging to a process that is currently resident in physical memory is called the working set.

From Performance Monitor on Windows Server 2008 SP1:

"Working Set is the current size, in bytes, of the Working Set of this process. The Working Set is the set of memory pages touched recently by the threads in the process. If free memory in the computer is above a threshold, pages are left in the Working Set of a process even if they are not in use. When free memory falls below a threshold, pages are trimmed from Working Sets. If they are needed they will then be soft-faulted back into the Working Set before leaving main memory."

Fire up Process Explorer and you can add the columns to display Working Set and Virtual Size for each process on the system.

Note that the Working Set contains both "shared" and "private" values.

Processes that load the same DLLs will have the size of these DLLs attributed to both of them, however in reality they are only loaded once - this is "shared".

Conversely, processes can have private memory allocations that, as the name implies, are only visible to themselves.

(To make it slightly more complicated, "shared" is actually a subset of "shareable" - think of this as "potentially shared".)

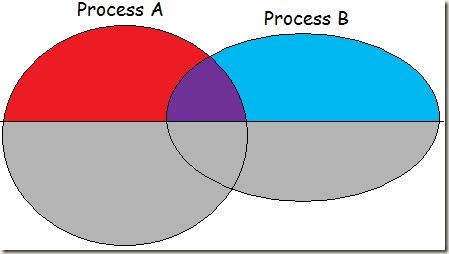

See the following MS Paint masterpiece:

The 2 overlapping ovals illustrate the virtual sizes of 2 processes running on a system that have shared DLLs.

The line indicates the portions of the virtual sizes which are resident in physical RAM (above the line) and faulted out (below the line).

RED: Process A's private working set

RED + PURPLE: Process A's working set

BLUE: Process B's private working set

BLUE + PURPLE: Process B's working set

PURPLE: Process A's and Process B's shared working set

(I am not concerned with going into the distinction of virtual memory that is on the Modified List or paged to disk, so these are simply all grey.)

So this is why you can't base memory sizing requirements purely off virtual sizes, or even off working sets - in the first case the process might not be using physical memory at all*, and in the second case there can be a huge overlap between processes and the "whole is less than the sum of the parts" (for a change).

(* Except the bare minimum used by the kernel required to reference every running process on the system.)
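
To make the overlap concrete, here is a minimal sketch in Python that models each process's resident pages as a set of page numbers. The page numbers, the 4 KB page size and the variable names are all made up for illustration - real figures would come from the Working Set columns in Process Explorer.

```python
PAGE_SIZE = 4096  # assumed page size in bytes, for illustration only

# Hypothetical resident pages (by physical page number) for two processes.
# Pages 100-103 hold a DLL that both processes have loaded.
shared_dll_pages = {100, 101, 102, 103}   # "purple" in the diagram
process_a_private = {1, 2, 3}             # "red" in the diagram
process_b_private = {10, 11}              # "blue" in the diagram

working_set_a = process_a_private | shared_dll_pages   # red + purple
working_set_b = process_b_private | shared_dll_pages   # blue + purple

# Adding the per-process working sets counts the shared pages twice.
sum_of_working_sets = (len(working_set_a) + len(working_set_b)) * PAGE_SIZE

# The physical RAM actually occupied is the union of the resident pages.
actual_resident = len(working_set_a | working_set_b) * PAGE_SIZE

# The difference is exactly the shared pages that were counted a second time.
overcount = sum_of_working_sets - actual_resident
print(sum_of_working_sets, actual_resident, overcount)
```

With only two processes the overcount is one copy of the shared pages; with dozens of processes loading the same system DLLs it grows accordingly.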

Back in Process Explorer you can add the "WS Private", "WS Shareable" and "WS Shared" counters - all subsets of the Working Set.

(In fact, Working Set = WS Private + WS Shareable.)

So how does Windows determine how much physical RAM is actually in use at any given time?

The Memory Manager keeps track of this through the Page Frame Number (PFN) database, which has to be large enough to describe all the pages in physical memory.

In the situation where the "not yet allocated" physical pages counter is less than is needed to fulfil a memory request, what happens?

In the event where the Free and Zeroed lists in the kernel are not big enough, the Memory Manager has to start trimming pages - reducing the working sets of processes and aged pages used by the cache or paged pool.

However, before this is attempted Windows can take a shortcut to determine if it would ever have enough memory to satisfy the request.

It does this through "hints" in each process' Process Environment Block (PEB) - specifically the Minimum Working Set.

This value is an indication to the OS as to how small the process' working set can possibly get, if trimmed to the bone (it is not impossible for the minimum working set to be larger than the current working set!).

With the hints from each process, now the Memory Manager can do a quick calculation to see if it can even start to trim in order to satisfy a request for more memory:

1. Add all the Minimum Working Set values together, plus the nonpaged pool size and non-pageable parts of the kernel

2. Ensure that this is less than available page file space, as this is where the memory would have to get hard faulted to

3. Subtract the value from the physical RAM installed, and ensure this is greater than or equal to the memory allocation request

If step 2 fails, we have insufficient virtual memory.

If step 3 fails, we have insufficient physical memory... maybe.
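
The three steps above can be sketched as a small Python function. This is only a model of the calculation as described here, not the Memory Manager's actual code - the function name, parameter names and the sample figures are all hypothetical.

```python
MB = 1024 * 1024


def quick_memory_check(min_working_sets, nonpageable_bytes,
                       free_page_file_bytes, physical_ram_bytes,
                       request_bytes):
    """Model of the quick check: returns a verdict string."""
    # Step 1: the floor below which trimming can never take physical usage.
    floor = sum(min_working_sets) + nonpageable_bytes

    # Step 2: the floor must be less than the available page file space.
    if floor >= free_page_file_bytes:
        return "insufficient virtual memory"

    # Step 3: whatever RAM is left above the floor must cover the request.
    if physical_ram_bytes - floor < request_bytes:
        return "insufficient physical memory"

    return "ok"


# Made-up figures: three processes with sensible hints on a 2GB machine.
verdict = quick_memory_check(
    min_working_sets=[50 * MB, 20 * MB, 30 * MB],
    nonpageable_bytes=200 * MB,
    free_page_file_bytes=4096 * MB,
    physical_ram_bytes=2048 * MB,
    request_bytes=512 * MB,
)
print(verdict)
```

Note that the check never looks at how much memory is actually in use, only at the hints - which is why a single bad hint can sink it.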

The Minimum Working Set hint is set through the second parameter in a call to SetProcessWorkingSetSize() - so if this number is wildly inaccurate then it will throw off the quick calculation made by the Memory Manager and lead to strange errors.

This is referred to as "memory camping" - when you look in Task Manager you may see plenty of available memory (Free + System Cache), but trying to start a new process reports "out of memory" (or similar).

The calculation in step 1 above returns a figure way bigger than it should be (or really is), which leads to step 2 or 3 failing the test.

A classic example is where a 32-bit application uses SetProcessWorkingSetSize() to specify dwMinimumWorkingSetSize and dwMaximumWorkingSetSize as 0xFFFFFFFF and the source is simply compiled as 64-bit without any changes.

As per https://msdn.microsoft.com/en-us/library/ms686234(VS.85).aspx:

"If both dwMinimumWorkingSetSize and dwMaximumWorkingSetSize have the value (SIZE_T)-1, the function removes as many pages as possible from the working set of the specified process."

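When compiled into a 32-bit executable or DLL, SIZE_T is 32 bits wide, so 0xFFFFFFFF == (SIZE_T)-1.

However, when compiled into a 64-bit binary, SIZE_T is 64 bits wide and (SIZE_T)-1 is 0xFFFFFFFFFFFFFFFF, so the hard-coded literal becomes 0x00000000FFFFFFFF == 4294967295, which is basically 4GB (minus 1).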

Now this process may report its "smallest possible physical memory footprint" as 4GB, leading to an error of almost 4GB - at best causing unnecessary page trimming and at worst refusing to start any new process.
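
The width mismatch is easy to reproduce without a compiler. The following Python sketch uses ctypes' fixed-width unsigned integers to stand in for SIZE_T in 32-bit and 64-bit builds; the variable names are illustrative only.

```python
import ctypes

LITERAL = 0xFFFFFFFF  # the constant hard-coded in the 32-bit source

# (SIZE_T)-1 when SIZE_T is 32 bits wide (32-bit build)
size_t_minus_one_32 = ctypes.c_uint32(-1).value
# (SIZE_T)-1 when SIZE_T is 64 bits wide (64-bit build)
size_t_minus_one_64 = ctypes.c_uint64(-1).value

# In a 32-bit build the literal is the special "trim everything" value...
matches_32 = (LITERAL == size_t_minus_one_32)
# ...but in a 64-bit build it is just a very large byte count.
matches_64 = (LITERAL == size_t_minus_one_64)

print(hex(size_t_minus_one_32), hex(size_t_minus_one_64))
print(matches_32, matches_64)
print(LITERAL / 2**30, "GB requested as the minimum working set")
```

Writing the argument as (SIZE_T)-1 in the original source, as the documentation quoted above does, gives the intended value in both builds.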

If you suspect this to be the case for a problem you are troubleshooting, the latest Process Explorer (at time of writing this is 11.31) can display the "Min Working Set" for each process for you - if you see a large figure in here then check that the owner is accurately reporting it (a minimum that is far greater than the current Working Set value is a strong hint that it is not).

The more complex method would be to do a kernel debug and view the output from the command "!process 0 1", looking at the "Working Set Sizes (now, min, max)" output for each process.
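
If you own the suspect code, a third option is to have the process report its own limits. The sketch below is an assumption on my part rather than anything from the tools above: it calls the Win32 GetProcessWorkingSetSize() function through Python's ctypes for the current process only, and simply returns None on anything that isn't Windows. The function name current_working_set_limits is made up for illustration.

```python
import ctypes
import sys


def current_working_set_limits():
    """Return (min_bytes, max_bytes) for this process, or None off Windows."""
    if sys.platform != "win32":
        return None

    kernel32 = ctypes.WinDLL("kernel32", use_last_error=True)
    # GetCurrentProcess returns a pseudo-handle; keep it pointer-sized.
    kernel32.GetCurrentProcess.restype = ctypes.c_void_p
    kernel32.GetProcessWorkingSetSize.argtypes = [
        ctypes.c_void_p,
        ctypes.POINTER(ctypes.c_size_t),
        ctypes.POINTER(ctypes.c_size_t),
    ]
    kernel32.GetProcessWorkingSetSize.restype = ctypes.c_int

    minimum = ctypes.c_size_t()
    maximum = ctypes.c_size_t()
    ok = kernel32.GetProcessWorkingSetSize(
        kernel32.GetCurrentProcess(),
        ctypes.byref(minimum),
        ctypes.byref(maximum),
    )
    if not ok:
        raise ctypes.WinError(ctypes.get_last_error())
    return minimum.value, maximum.value


limits = current_working_set_limits()
print(limits)
```

A minimum in the region of 4GB coming back from a 64-bit process would point straight at the porting mistake described above.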

To give a real example, this may be the root cause if a virtual machine fails to start on a Hyper-V host, yet there is enough available RAM reported by Task Manager (visible as Free + System Cache) - worth considering if you have the symptoms of KB953585 and the hotfix didn't help:

https://support.microsoft.com/kb/953585

Error message when you try to start a Hyper-V virtual machine on a Windows Server 2008-based or Windows Vista-based computer that uses the NUMA architecture: "An error occurred while attempting to change the state of virtual machine VMNAME"