When in doubt, Reboot!

I tend to get quite a bit of Kerberos related cases. These are related across the box, from the Engine, to Reporting Services to just straight connectivity with custom applications. I had one given to me yesterday because the engineer had gone through everything we normally go through and wasn’t getting anywhere.

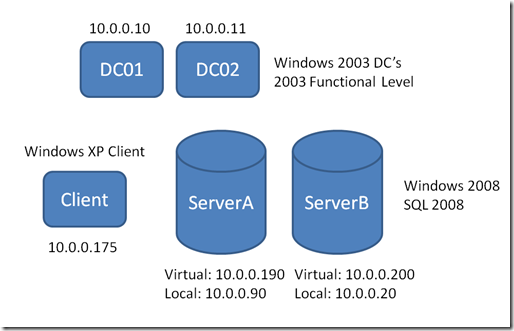

The situation was an 8 node cluster with multiple instances across the nodes. These were running Windows 2008 with SQL 2008. One node in particular was having an issue when they were issuing a Linked Server Query from a remote client.

When trying to hit the linked server from within Management Studio on the client machine, we received the following message:

Msg 18456, Level 14, State 1, Line 1

Login failed for user 'NT AUTHORITY\ANONYMOUS LOGON'

Kerberos Configuration:

When we see this type of error, it is typically Kerberos related as the Service we are using (ServerA) is unable to delegate the client’s credentials to the backend server (ServerB – Linked Server). The first thing we do is go through our regular kerberos checklist – SPN’s and Delegation settings. Both SQL Servers were using the same Domain User Service Account (SNEAKERNET\SQLSvc). We can use SetSPN to check what SPN’s are on that account. NOTE: There are numerous ways to look for SPN’s but SetSPN is one of the easier command line tools available. You could also use LDIFDE (https://support.microsoft.com/kb/237677), ADSIEdit (https://technet.microsoft.com/en-us/library/cc773354(WS.10).aspx) and other tools. You will see us use an in house tool called DHDiag to collect SPN’s. This is just a wrapper that calls LDIFDE to output the results.

So, here are the SetSPN results:

C:\Users\Administrator>setspn -l sqlsvc

Registered ServicePrincipalNames for CN=SQL Service,OU=Service Account,DC=sneakernet,DC=local:

MSSQLSvc/SQL02:26445

MSSQLSvc/SQL02.sneakernet.local:26445

MSSQLSvc/SQL01.sneakernet.local:14556

MSSQLSvc/SQL01:14556

Why do we see SQL01 and SQL02 when our machine names are ServerA and ServerB? This is because SQL01 and SQL02 are the virtual names for the cluster. This name will move to whatever the active node is for that given instance. Where as ServerA and ServerB are the physical machine names and may or may not be actually hosting that instance. We can also see from this that we have two distinct instances because of the ports (14556 & 26445). If you look at some of our documentation (i.e. https://msdn.microsoft.com/en-us/library/ms189585(SQL.90).aspx), it indicates that for clusters, you need to also add a SQL SPN that does not include the port number. I have yet to see where this is actually needed. Every cluster I’ve seen has never had one. Typically if it is needed, you will receive a KRB_ERR_S_PRINCIPAL_UNKNOWN error if you enable Kerberos Event Logging. If you do see that and it lists that SPN, then go ahead and add it. But, from my experience, you won’t see it.

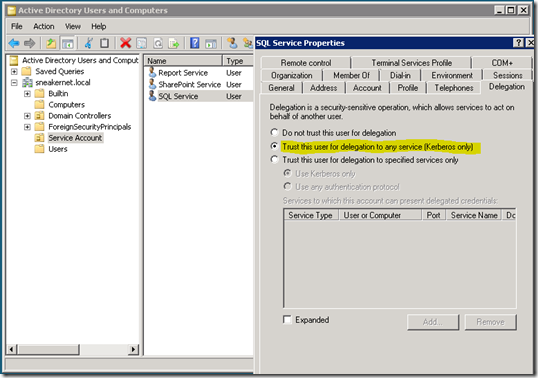

Ok, our SPNs look good. Lets look at our Delegation Settings. In this case we really care about the SQL Service Account, because that is the context that will be performing the delegation.

We can do this by going to the properties for that account within Active Directory Users and Computers. You will see a Delegation tab on the account. If you don’t see the delegation tab, then the account does not have an SPN attached to it. In this case we have “Trust this user for delegation to any service (Kerberos only)”. This is what I call Full or Open Delegation as opposed to Constrained Delegation (which is more secure). We are good to go here. Nine times out of ten, the SPN or Delegation setting is going to be the cause of your issue. In this case it isn’t. What can we do now?

Kerberos Event Logging and Network Traces:

We can enable Kerberos Event Logging (https://support.microsoft.com/default.aspx?scid=kb;EN-US;262177) which will give us errors within the System Log for Kerberos. This can sometimes be very helpful in diagnosing what may or may not be happening. This produced the following results on ServerA:

Error Code: 0x1b Unknown Error

Error Code: 0x19 KDC_ERR_PREAUTH_REQUIRED

And KDC_ERR_BADOPTION

These are not uncommon and when we looked at these, they didn’t really relate to our issue. Which means we had nothing here. Of note, doing a linked server query from ServerB to ServerA worked, and it also produced the same events listed above. So, nothing to gain here.

The next thing we can look at is getting a network trace as this will show us the communication between Service in question and the Domain Controller. I usually end up at this level if the SPN’s and Delegation settings check out. This is really where some customers can have issues, because typically these are hard to interpret and will require a call to CSS. We grabbed a trace in the failing and working condition to see what was different. We saw the following:

Failing:

525355 2009-06-30 15:55:39.468865 10.0.0.90 10.0.0.10 KRB5 TGS-REQ

KDC_REQ_BODY

KDCOptions: 40810000 (Forwardable, Renewable, Canonicalize)

Realm: SNEAKERNET.LOCAL

Server Name (Enterprise Name): ServerA$@SNEAKERNET.LOCAL

Working:

353115 23.437037 10.0.0.20 10.0.0.11 KRB5 TGS-REQ

KDC_REQ_BODY

KDCOptions: 40810000 (Forwardable, Renewable, Canonicalize)

Realm: SNEAKERNET.LOCAL

Server Name (Service and Instance): MSSQLSvc/SQL02.sneakernet.local:26445

You’ll notice that we are hitting different DC’s here, but that wasn’t the issue as we also saw the failing one hitting different DC’s as we continued. The other item that is different is the working one requested the right SPN, where as the failing one is requesting the physical machine account context. This is what was forcing us into NTLM and causing the Login failed error. But why was that happening? So far we have zero information to indicate what could be causing it.

SSPIClient:

We then used an internal tool called SSPIClient which makes direct calls to the InitializeSecurityContext API call which is how we do impersonation. This tool allowed us to take SQL Server out of the picture and focus on the Kerberos issue directly. We could see that we were failing back to NTLM which really confirmed what we saw in the network trace.

2009-07-01 16:34:24.577 ENTER InitializeSecurityContextA

2009-07-01 16:34:24.577 phCredential = 0x0090936c

2009-07-01 16:34:24.577 phContext = 0x00000000

2009-07-01 16:34:24.577 pszTargetName = 'MSSQLSvc/SQL02.sneakernet.local:26445'

2009-07-01 16:34:24.577 fContextReq = 0x00000003 ISC_REQ_DELEGATE|ISC_REQ_MUTUAL_AUTH

2009-07-01 16:34:24.577 TargetDataRep = 16

2009-07-01 16:34:24.577 pInput = 0x00000000

2009-07-01 16:34:24.577 phNewContext = 0x0090937c

2009-07-01 16:34:24.577 pOutput = 0x0017d468

2009-07-01 16:34:24.577 pOutput->ulVersion = 0

2009-07-01 16:34:24.577 pOutput->cBuffers = 1

2009-07-01 16:34:24.577 pBuffers[00].cbBuffer = 52

2009-07-01 16:34:24.577 pBuffers[00].BufferType = 2 SECBUFFER_TOKEN

2009-07-01 16:34:24.577 pBuffers[00].pvBuffer = 0x02c99f90

2009-07-01 16:34:24.578 02c99f90 4e 54 4c 4d 53 53 50 00 01 00 00 00 97 b2 08 e2 NTLMSSP.........

2009-07-01 16:34:24.578 02c99fa0 03 00 03 00 31 00 00 00 09 00 09 00 28 00 00 00 ....1.......(...

2009-07-01 16:34:24.578 pfContextAttr = 0x00001000 ISC_RET_INTERMEDIATE_RETURN

2009-07-01 16:34:24.578 ptsExpiry = 0x0017d43c -> 2009-07-01 10:39:24 *** EXPIRED *** (05:55:00 diff)

2009-07-01 16:34:24.578 EXIT InitializeSecurityContextA returned 0x00090312 SEC_I_CONTINUE_NEEDED (The function completed successfully, but must be called again to complete the context)

NOTE: We purged all of the Kerberos Tickets before we did this to make sure we would request the ticket from the KDC. This was done using KerbTray which is part of the Windows Resource Kit.

This tells us that we were requesting a given SPN for the Target, but the buffer shows NTLMSSP. This means we fell down to NTLM instead of getting Kerberos. This still doesn’t explain why.

End Result:

Unfortunately, this was one of those issues that just escaped us. This tends to happen with odd Kerberos cases. We had the Directory Services team engaged as well and they did not know what else we could do in terms of data collection outside of a Kernel Dump to see what may be going on. We noticed that the nodes had not been rebooted since April 5th which is a while. The SQL Service was recycled on June 25th. We decided to fail over to another node and reboot ServerA. After we rebooted, we tried SSPIClient again and we saw a proper response come back which also didn’t list EXPIRED. The issue at this point it was resolved. We don’t have hard data to indicate what exactly the issue was, but the thought is that something was cached and invalid causing the issue. Rebooting cleared that out and allowed us to work as expected.

Which leads me to my motto: When in doubt, Reboot!

Adam W. Saxton | Microsoft SQL Server Escalation Services