SharePoint 2010 Search

Microsoft SharePoint Server 2010 delivers powerful intranet and people search right out of the box. SharePoint 2010 Search helps your people find the information they need to get their jobs done. It provides intranet search, people search, and a platform for building search-driven applications. What sets SharePoint 2010 Search apart is its combination of relevance, refinement, and people. This approach provides a search experience that is highly personalized, efficient, and effective.

What's new?

- Multiple index servers - There is no single point of failure, and adding new index server(s) brings major performance improvements

- Stateless index servers - Crawlers do not keep the index locally; they propagate it to the query servers. If an index server goes down, another one can be brought in without re-indexing everything; it picks up where the previous one left off.

- Multiple index partitions - Better redundancy and performance

- Multiple crawl and property databases - Better performance through higher SQL throughput

- Multiple crawl components, which can use the additional crawl and property databases - Better crawl performance

- Content source priority - The crawler prioritizes content sources by their selected priority; for example, a content source with high priority is crawled first

- Ability to crawl more content items than 2007 (an estimated 100 million items with SharePoint 2010, compared to 50 million with MOSS 2007)

- Improved administrative interface - Makes the administrator's job easier

- Boolean queries are now supported!

- Search Suggestions as-you-type

- Did you mean

- Federated search connectors that can be consumed by Windows 7

- Search Refinement Panel

- Improved People Search

- Phonetic name and nickname matching

- Better relevancy

- Health and performance monitoring via predefined reports

- Ability to customize the search reports

- View in Browser from search results using WAC (Office Web Apps)
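The content source priority item above can be pictured as a simple ordering: pending crawls from high-priority sources are taken before normal-priority ones. The sketch below is a simplified model only, assuming two priority levels (High and Normal) and first-come order within a level. The class names, method names, and content source names are hypothetical, and this is not SharePoint's actual crawl scheduler.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count

# Hypothetical priority levels: a lower rank is dequeued first.
PRIORITY = {"High": 0, "Normal": 1}


@dataclass(order=True)
class CrawlRequest:
    rank: int
    seq: int  # tie-breaker: keeps submission order within the same priority
    source: str = field(compare=False)


class CrawlQueue:
    """Simplified model: high-priority content sources are crawled first."""

    def __init__(self) -> None:
        self._heap = []
        self._seq = count()

    def submit(self, source: str, priority: str = "Normal") -> None:
        heapq.heappush(self._heap, CrawlRequest(PRIORITY[priority], next(self._seq), source))

    def next_source(self) -> str:
        return heapq.heappop(self._heap).source

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    q = CrawlQueue()
    q.submit("File shares", "Normal")
    q.submit("Local SharePoint sites", "High")
    q.submit("Intranet web sites", "Normal")
    print([q.next_source() for _ in range(len(q))])
    # ['Local SharePoint sites', 'File shares', 'Intranet web sites']
```

The sequence counter is there so that two sources with the same priority are crawled in the order they were queued rather than in an arbitrary order.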

Changes in Search Architecture in SharePoint 2010

Search Architecture - Physical Components

How were things in 2007?

In 2007, we had the concept of the Shared Services Provider (SSP). Components included:

- Web Server: Hosts the web sites that end users browse, along with the service-related processes for accessing the content.

- Index Server: Runs mssdmn.exe (the filter daemon) and mssearch.exe (the search service). The number of filter daemon processes varies, but the main purpose of this server was to reach the content source (e.g. the web server) and temporarily copy the content locally. During this time the content is opened and its text is extracted to build an index. The index is kept locally and sent, piece by piece, to the next server: the Query Server.

- Query Server: Holds the file system index created by the index server. The index is "propagated" from the index server to this server (only when the two roles are on separate boxes; if they are on the same server, no propagation happens).

The three roles are conceptually separate, but they can be combined in any way needed: one server can host all three, or they can be split across servers as desired.

In addition, the SSP model meant one index per SSP. Since multiple web applications can be associated with the same SSP, and the SSP can have multiple content sources, a single index often ended up shared among various web applications.

The concept changes a little bit with the release of 2010. In 2010:

Web Server

Same concept as in 2007.

Query Server

Changed a bit. The conceptual design of hosting the index is the same, but unlike 2007, the index is now divided into what are called index partitions. Index partitions were introduced because in 2007 there was one index per SSP, which was a so-called single point of failure.

Index Server

Changed a little. Conceptually it is the same as in 2007: the goal is to index content. However, it now has what are called "crawl components".

The index server runs one or more crawl components and usually also hosts the search administration component. At least one server in the farm must have the index role. Two or more index servers provide redundancy, depending on how crawlers are associated with the crawl databases. You can add more index servers to increase crawl performance and to scale out for capacity requirements. The index role can share a server with the web server role or with other application server roles.

Index partitions:

Index partitions mean that a single index built from various content sources can be "divided" among different query servers. On top of this, each index partition can be copied to multiple query servers, so that even if one query server dies, the entire index is not lost. This provides a degree of fault tolerance along with load balancing, achieving what we did not have in 2007. Of course, this means you need at least two query servers; a single query server gives you no fault tolerance or load balancing.

The entire index can be divided into discrete partitions that can then be distributed across multiple query servers. A second instance of each index partition can be deployed to a different query server to achieve redundancy. The entire index is an aggregation of all index partitions.
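The partition-and-mirror layout described above can be modelled in a few lines. In this sketch, which is a simplified model rather than SharePoint's query-routing logic, each index partition has copies on two different query servers, and a query can be answered only if every partition still has a copy on a live server, because the full index is the aggregation of all partitions. The topology, server names, and the `serving_plan` helper are all hypothetical.

```python
from __future__ import annotations

# Hypothetical topology: partition -> query servers holding a copy of it.
# The two copies of each partition live on different servers.
TOPOLOGY = {
    "partition-0": ["QueryServer1", "QueryServer2"],  # primary on QS1, mirror on QS2
    "partition-1": ["QueryServer2", "QueryServer1"],  # primary on QS2, mirror on QS1
}


def serving_plan(topology: dict[str, list[str]], down: set[str]) -> dict[str, str] | None:
    """Pick one live server per partition, or None if any partition is unavailable.

    The full index is the aggregation of all partitions, so a query needs
    every partition to be reachable on at least one live server.
    """
    plan = {}
    for partition, servers in topology.items():
        live = [s for s in servers if s not in down]
        if not live:
            return None  # this slice of the index is unreachable
        plan[partition] = live[0]  # first live copy (the primary, if it is up)
    return plan


if __name__ == "__main__":
    print(serving_plan(TOPOLOGY, down=set()))
    # {'partition-0': 'QueryServer1', 'partition-1': 'QueryServer2'}
    print(serving_plan(TOPOLOGY, down={"QueryServer1"}))
    # {'partition-0': 'QueryServer2', 'partition-1': 'QueryServer2'}
    print(serving_plan({"partition-0": ["QueryServer1"]}, down={"QueryServer1"}))
    # None
```

The last call shows the single-query-server case: with nowhere to place a second copy, losing that one server makes the partition, and therefore the whole index, unavailable.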

Search admin component:

This component is required for administering the search instance and changing its topology. Only one search administration component is allowed per search service application. It can run on any server in the farm, preferably an index server or a query server.

Crawlers:

Crawlers crawl content based on what is specified in the crawl database. Each crawler is associated with one crawl database. Add crawlers to meet capacity requirements and to increase crawl performance.
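The earlier remark that index server redundancy depends on how crawlers are associated with crawl databases can be made concrete. A minimal sketch, assuming a hypothetical farm where each crawler runs on one index server and is associated with exactly one crawl database (as stated above): a crawl database keeps being crawled as long as at least one of its crawlers sits on a live index server. All server, crawler, and database names, and the `uncovered_crawl_dbs` helper, are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Crawler:
    name: str
    index_server: str
    crawl_db: str  # each crawler is associated with exactly one crawl database


# Hypothetical topology: two index servers, two crawl databases.
CRAWLERS = [
    Crawler("Crawler1", "IndexServer1", "CrawlDB1"),
    Crawler("Crawler2", "IndexServer2", "CrawlDB1"),  # CrawlDB1 has crawlers on two servers
    Crawler("Crawler3", "IndexServer1", "CrawlDB2"),  # CrawlDB2 depends on IndexServer1 only
]


def uncovered_crawl_dbs(crawlers: list[Crawler], down: set[str]) -> set[str]:
    """Return crawl databases that have no crawler left on a live index server."""
    all_dbs = {c.crawl_db for c in crawlers}
    covered = {c.crawl_db for c in crawlers if c.index_server not in down}
    return all_dbs - covered


if __name__ == "__main__":
    print(uncovered_crawl_dbs(CRAWLERS, down={"IndexServer2"}))  # set()
    print(uncovered_crawl_dbs(CRAWLERS, down={"IndexServer1"}))  # {'CrawlDB2'}
```

In this layout, only CrawlDB1 is redundant; giving CrawlDB2 a second crawler on IndexServer2 would make both databases survive the loss of either index server.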

Database Server

Hosts the search-related databases: the property database, the crawl database, and the search administration database. It can host other SharePoint databases as well, and it can be mirrored or clustered. Add disks or more database servers to scale out. The property database stores the properties of crawled content that are required for querying. The crawl database stores the crawl history and manages crawl operations. Each crawl database can have one or more crawlers associated with it.

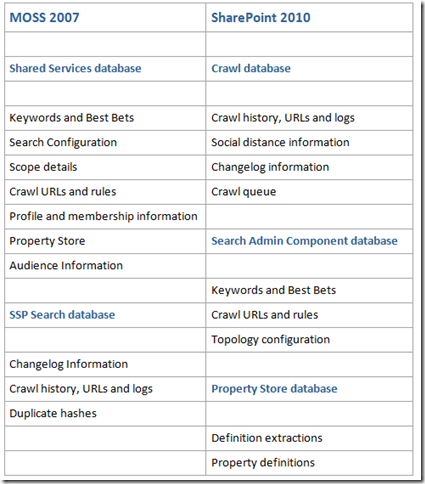

Below is a brief comparison of the search features stored in the 2007 databases versus the 2010 databases:

Search UI changes in SharePoint 2010

Where has the Shared Services Provider (SSP) gone?

In MOSS 2007, the Shared Services Provider combined services such as Search, User Profiles, Excel Services, Audiences, and the Business Data Catalog (BDC). If the SSP became corrupt, or we had to recreate it, we had to reconfigure all of these services, which was a pain. Scalability was also an issue with SSPs.

Why Service Applications?

So with SharePoint Server 2010, we introduced a new architecture that breaks the SSP down into individual, independent components called "Service Applications". Service Applications are a huge improvement to the product, addressing many of the scalability compromises inherent in the SSP model. Service Applications can now be built by third parties and are available in both SharePoint Foundation and SharePoint Server. Service Applications were designed with three main goals:

- Richness - the ability to deliver more features and allow third parties to build their own "shared services"

- Scalability - handle larger loads, scale farms, and scale to the cloud

- Flexibility - provide more granularity

SharePoint 2010 Search Solution Offerings

SharePoint Server 2010 Search – the out-of-the-box SharePoint search for enterprise deployments. SharePoint Server 2010 Search represents an important upgrade to the existing search for SharePoint.

FAST Search Server 2010 for SharePoint – a brand-new add-on product based on FAST search technology that combines the best of FAST's high-end search capabilities with the best of SharePoint. FAST Search Server 2010 for SharePoint is a completely new offering and the first new product based on FAST technology since Microsoft acquired FAST in April 2008.

Fresh 2010 Central Administration UI

As you can see, the Central Administration UI is logically grouped into 8 categories:

- Application Management - Manage Web Applications, Site Collections, Service Applications and Databases from here

- System Settings - Farm Info, Manage Services, Configure Incoming and Outgoing Email, Configure Text Messages (SMS), Farm Management

- Monitoring - Health Analyzer, Timer Jobs definitions and status, Logging and Reporting

- Backup and Restore - Farm backup and Restore, Granular Backup

- Security - Manage Farm Administrators, Policy, Managed and Service Accounts, Authentication Providers, Antivirus, Blocked File Types, IRM, etc.

- Upgrade and Migration - License conversion, Features management, Patch install status, Database status, Upgrade status

- General Application Settings - Manage External Service Connectors, InfoPath Forms Services, Site Directory, SharePoint Designer, Search, Reporting Services, Content Deployment

- Configuration Wizards - Farm Configuration Wizard

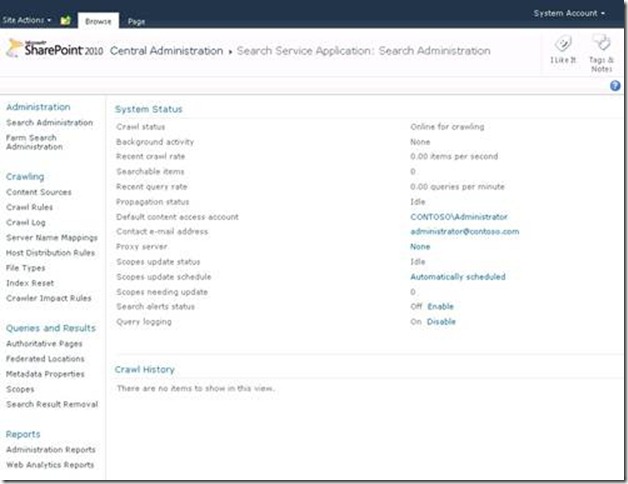

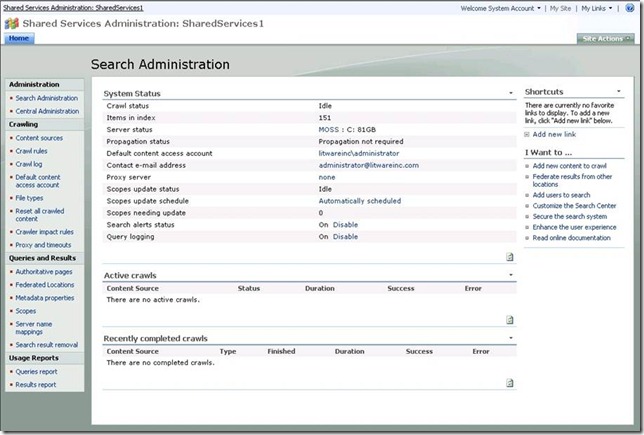

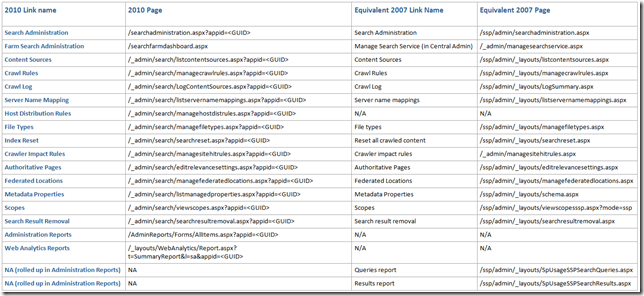

Search Service Application UI

Search Administration UI in SharePoint 2007

Search Application Topology

In SharePoint 2010's Search Administration page, if you scroll down, you'll see Search Application Topology. Let's try to understand its components.

As the screenshot shows, Search Application Topology can be divided into 4 major components:

- Administration Component

- Crawl Component

- Databases
  - Administration Database
  - Crawl Database
  - Property Database

- Index Partition

Depending on your scaling requirements, you can create new crawl components, crawl databases, query components, and property databases.

You can also create a dedicated crawl database if you wish; a dedicated crawl database can then be targeted by Host Distribution Rules.
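Conceptually, a host distribution rule pins a host to a particular crawl database, which is where a dedicated crawl database becomes useful. The sketch below is only an illustration under stated assumptions: hosts with an explicit rule go to their assigned (dedicated) database, and all other hosts are spread over the shared crawl databases. The stable-hash fallback is a stand-in chosen for illustration, not SharePoint's actual distribution algorithm, and the host names, database names, and `crawl_db_for` helper are hypothetical.

```python
import zlib

# Hypothetical host distribution rules: host -> dedicated crawl database.
HOST_RULES = {"hr.contoso.com": "CrawlDB_Dedicated"}

# Hypothetical shared crawl databases used for every host without a rule.
# The dedicated database is deliberately not in this list.
SHARED_CRAWL_DBS = ["CrawlDB1", "CrawlDB2"]


def crawl_db_for(host: str) -> str:
    """Return the crawl database a host's items would be assigned to (model only)."""
    if host in HOST_RULES:
        return HOST_RULES[host]
    # Illustrative fallback: crc32 is stable across runs, so a host
    # always maps to the same shared database.
    index = zlib.crc32(host.encode("utf-8")) % len(SHARED_CRAWL_DBS)
    return SHARED_CRAWL_DBS[index]


if __name__ == "__main__":
    for host in ["hr.contoso.com", "intranet.contoso.com", "teams.contoso.com"]:
        print(host, "->", crawl_db_for(host))
```

Keeping the dedicated database out of the shared list mirrors the idea that it serves only the hosts explicitly assigned to it by a rule.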

![Search Application Topology](https://msdntnarchive.blob.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/28/88/metablogapi/1780.clip_image0014_thumb_6CC547AB.jpg)