Data’s CODEX and the control of data expediently

By Victoria Holt, Senior Data Analytics and Platforms Architect at Eduserv

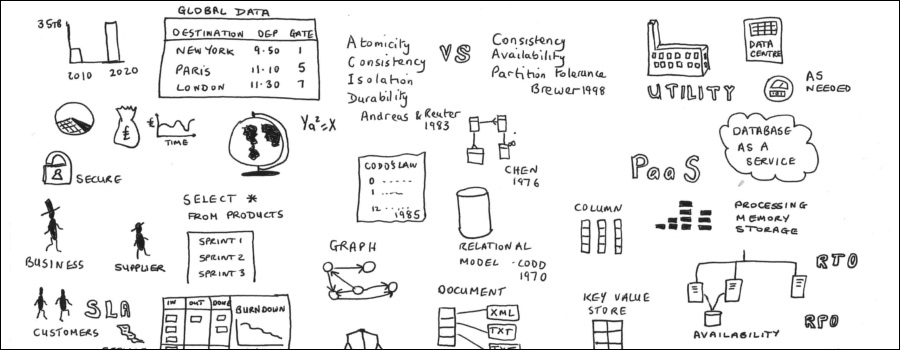

This is the third instalment in Victoria's series about the best practices and procedures used in the management of database systems. If you've missed the previous two articles, or just want a refresher, you can find them here and here.

Database management is facing significant change with the adoption of cloud database-as-a-service offerings, the explosion of data and the adoption of DevOps. Database managers face the challenge of choosing practices and procedures that satisfy organisational requirements while securing and maintaining these data assets. When designing systems, they now need knowledge of rapidly changing technology and an understanding of up-to-date software and hardware.

The proliferation of tasks and activities carried out by many teams means that what was once a simple management task has become much more complex. To compete, businesses need to adopt a data-driven approach that aids both management and innovation. Value can be obtained by using dark data, big data and open data with predictive analytics.

With this undercurrent of rapid change, carrying out academic research to understand the complexity and interconnectedness of database management seemed an innovative way forward. The research was significant in establishing which practices and procedures are used to manage databases, how the various components interact, and how the complex parts of the system relate to one another. It also examined how these factors affect the management of database systems, reflecting on the outcomes to identify possible improvements that might lead to innovation. The research yielded insights into the system as a whole. The ultimate aim was to help improve the quality of database administration and management methods, because databases are key to everyday life and managing them successfully is of critical importance.

Enhancements to an existing analytic method

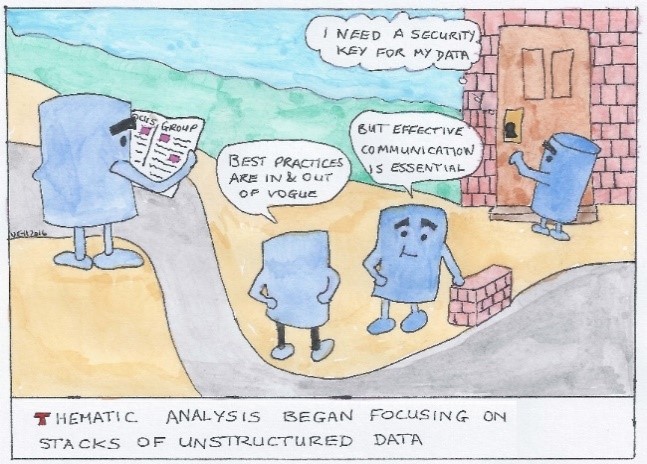

There are many methods for analysing data. This qualitative research followed a quantitative survey, which allowed data collection to shift from a wide and shallow approach to a narrow and deep one. The qualitative stage helped explain the captured information through stories about managing database systems. As in industry, academic research depends on analysing data, and using multiple methods provides checks and balances.

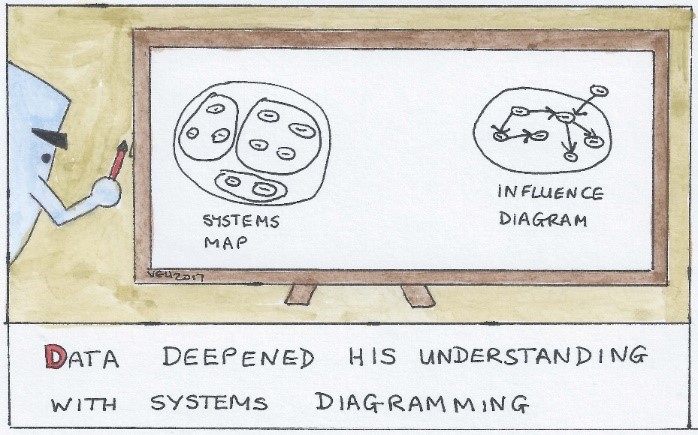

My novel approach combined three stages: a First Coding Cycle based on Thematic Analysis (Braun & Clarke 2006); a Transitional Process using several post-coding techniques described in Saldaña (2013); and a holistic look at the situation using systems diagramming to deepen the analysis, which formed the synthesis (systems thinking) stage. Taking this holistic approach enabled best practices and the complexity of database systems to be examined in a new light. The codes and themes were created in the Thematic Analysis stage, and the Transitional Process used code landscaping, a code relations chart and an operational model. These led to the systems thinking diagramming and the creation of a systems map.

A map of complexity

Analysing the findings enabled me to map out the complexity of managing database systems. The resulting data map sets out the high-level components involved in managing database systems and the interconnections between these socio-technical components, which helps in understanding the complexity. Adopting database-as-a-service is unlikely to reduce this complexity; rather, it is likely to shift it, introducing new components of complexity. The data map produced by the research has 44 interconnections among components. Understanding what these components are, and how they interconnect, is critical to improving management.

What’s next?

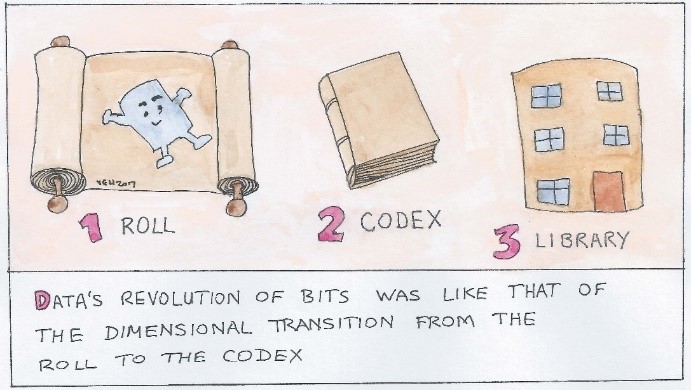

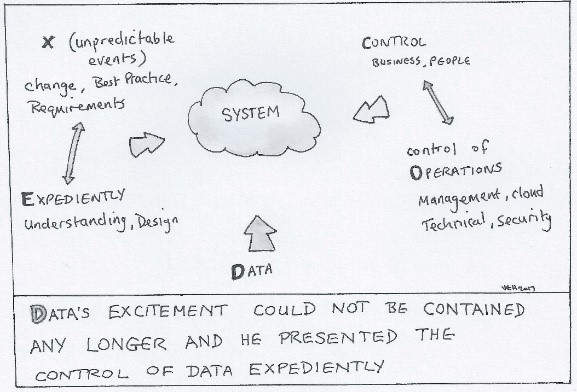

To drive improvement and innovation, the analysis was examined in various ways, and the analogy of a CODEX was used to create a blueprint. The components in the CODEX were developed by analysing the data in several different ways. The CODEX is an agile approach to deciphering the complexity of interconnections and has the potential to create an autonomous way to deliver best practice. It is about creating continuously evolving best practice, while acknowledging the environmental costs and the implications for other teams.

I believe there is now an opportunity to build on this work by predicting best practice using machine learning or graph theory. This might enable the automatic prediction of complex components, helping to improve best practice.
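As a small illustration of what a graph-theory starting point might look like, a systems map of components and their interconnections can be stored as an undirected graph. The sketch below is a minimal example under stated assumptions, not the method used in the research: the component names, and the helper functions `build_map`, `count_interconnections` and `rank_by_degree`, are hypothetical. Ranking components by degree (the number of direct interconnections each one has) is a simple, standard graph measure that might help flag where complexity concentrates.

```python
from collections import defaultdict

# Hypothetical components and links for illustration only; they are NOT
# the components or the 44 interconnections from the research's data map.
EDGES = [
    ("DBA team", "Backups"),
    ("DBA team", "Security"),
    ("DBA team", "Monitoring"),
    ("Developers", "DevOps pipeline"),
    ("DevOps pipeline", "Schema changes"),
    ("Schema changes", "DBA team"),
    ("Cloud DBaaS", "Backups"),
    ("Cloud DBaaS", "Security"),
]


def build_map(edges):
    """Build an undirected adjacency map: component -> set of linked components.

    Assumes no self-loops; duplicate pairs collapse into a single link.
    """
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def count_interconnections(graph):
    """Each undirected link appears in two adjacency sets, so halve the total."""
    return sum(len(neighbours) for neighbours in graph.values()) // 2


def rank_by_degree(graph):
    """Rank components by degree, highest first; ties break alphabetically."""
    return sorted(
        ((component, len(neighbours)) for component, neighbours in graph.items()),
        key=lambda item: (-item[1], item[0]),
    )


if __name__ == "__main__":
    systems_map = build_map(EDGES)
    print("Interconnections:", count_interconnections(systems_map))
    for component, degree in rank_by_degree(systems_map):
        print(f"{component}: {degree}")
```

With the hypothetical links above, the sketch counts 8 interconnections and ranks "DBA team" first with 4 direct links. A real analysis would load the map's actual components and could move on to richer measures (for example betweenness centrality) or use the graph as input features for a machine learning model.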

The third part of my research is depicted as a continuation of the cartoon story of my PhD. The first two parts of the story are here and here.


Victoria Holt is a Senior Data Analytics and Platforms Architect at Eduserv specialising in the Microsoft Data Platform. She is responsible for the data platform and for driving forward a data-driven vision. She is also a database researcher working on a part-time PhD with the Open University, looking at the management of database systems. Over many years she has helped out at SQL Server community events including SQLBits, the UK SQL Server User Group, SQL Relay and PASS SQL Saturdays.
