Deploy your professional voice model as an endpoint

After you've successfully created and trained your voice model, you deploy it to a custom neural voice endpoint.

Note

You can create up to 50 endpoints with a standard (S0) Speech resource, each with its own custom neural voice.

To use your custom neural voice, you must specify the voice model name, use the custom URI directly in an HTTP request, and use the same Speech resource to pass through the authentication of the text to speech service.

Add a deployment endpoint

To create a custom neural voice endpoint:

Sign in to the Speech Studio.

Select Custom voice > Your project name > Deploy model > Deploy model.

Select a voice model that you want to associate with this endpoint.

Enter a Name and Description for your custom endpoint.

Select Endpoint type according to your scenario. If your resource is in a supported region, the default setting for the endpoint type is High performance. Otherwise, if the resource is in an unsupported region, the only available option is Fast resume.

- High performance: Optimized for scenarios with real-time and high-volume synthesis requests, such as conversational AI and call-center bots. It takes around 5 minutes to deploy or resume an endpoint. For information about regions where the High performance endpoint type is supported, see the footnotes in the regions table.

- Fast resume: Optimized for audio content creation scenarios with less frequent synthesis requests. Deploying or resuming an endpoint takes under a minute. The Fast resume endpoint type is supported in all regions where text to speech is available.

Select Deploy to create your endpoint.

After your endpoint is deployed, the endpoint name appears as a link. Select the link to display information specific to your endpoint, such as the endpoint key, endpoint URL, and sample code. When the status of the deployment is Succeeded, the endpoint is ready for use.

Application settings

The application settings that you use as REST API request parameters are available on the Deploy model tab in Speech Studio.

- The Endpoint key shows the Speech resource key the endpoint is associated with. Use the endpoint key as the value of your Ocp-Apim-Subscription-Key request header.

- The Endpoint URL shows your service region. Use the value that precedes voice.speech.microsoft.com as your service region request parameter. For example, use eastus if the endpoint URL is https://eastus.voice.speech.microsoft.com/cognitiveservices/v1.

- The Endpoint URL shows your endpoint ID. Use the value appended to the ?deploymentId= query parameter as the value of your endpoint ID request parameter.
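As an illustration of the settings above, the following Python sketch splits an endpoint URL into the service region and the endpoint ID. The function name `parse_endpoint_url` and the GUID in the sample URL are placeholders chosen for this example, not values from Speech Studio.

```python
from urllib.parse import urlparse, parse_qs

HOST_SUFFIX = ".voice.speech.microsoft.com"


def parse_endpoint_url(endpoint_url: str) -> dict:
    """Split a custom voice endpoint URL into its region and endpoint ID."""
    parsed = urlparse(endpoint_url)
    host = parsed.hostname or ""
    if not host.endswith(HOST_SUFFIX):
        raise ValueError(f"Unexpected host: {host!r}")
    # The region is the part of the host that precedes voice.speech.microsoft.com.
    region = host[: -len(HOST_SUFFIX)]
    # parse_qs returns a list per key; the endpoint ID follows ?deploymentId=.
    deployment_ids = parse_qs(parsed.query).get("deploymentId", [])
    if not deployment_ids:
        raise ValueError("URL has no deploymentId query parameter")
    return {"region": region, "endpoint_id": deployment_ids[0]}


# Placeholder URL in the shape described above.
settings = parse_endpoint_url(
    "https://eastus.voice.speech.microsoft.com/cognitiveservices/v1"
    "?deploymentId=9f50c644-2121-40e9-9ea7-544e48bfe3cb"
)
print(settings)
```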

Use your custom voice

The custom endpoint is functionally identical to the standard endpoint that's used for text to speech requests.

One difference is that the EndpointId must be specified to use the custom voice via the Speech SDK. You can start with the text to speech quickstart and then update the code with the EndpointId and SpeechSynthesisVoiceName. For more information, see use a custom endpoint.

To use a custom voice via Speech Synthesis Markup Language (SSML), specify the model name as the voice name. This example uses the YourCustomVoiceName voice.

<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">
    <voice name="YourCustomVoiceName">
        This is the text that is spoken.
    </voice>
</speak>
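The following Python sketch shows one way to generate this SSML and prepare an HTTP request against the custom endpoint URL. It only builds the request and doesn't send it. The helper names are hypothetical, and the output format and User-Agent values are assumptions that you would replace with values suited to your application.

```python
import xml.etree.ElementTree as ET
from urllib.request import Request
from xml.sax.saxutils import escape, quoteattr

SSML_NS = "http://www.w3.org/2001/10/synthesis"


def build_ssml(voice_name: str, text: str, lang: str = "en-US") -> str:
    """Wrap text in SSML that selects the custom voice by its model name."""
    return (
        f'<speak version="1.0" xmlns="{SSML_NS}" xml:lang={quoteattr(lang)}>'
        f"<voice name={quoteattr(voice_name)}>{escape(text)}</voice>"
        "</speak>"
    )


def build_synthesis_request(region: str, endpoint_id: str, key: str, ssml: str) -> Request:
    """Prepare (but don't send) a POST to the custom endpoint URL."""
    url = (
        f"https://{region}.voice.speech.microsoft.com/cognitiveservices/v1"
        f"?deploymentId={endpoint_id}"
    )
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/ssml+xml",
        # Assumed output format; choose the one your application needs.
        "X-Microsoft-OutputFormat": "riff-24khz-16bit-mono-pcm",
        # Assumed application name.
        "User-Agent": "custom-voice-sketch",
    }
    return Request(url, data=ssml.encode("utf-8"), method="POST", headers=headers)


ssml = build_ssml("YourCustomVoiceName", "Q&A: is 2 < 3?")
# Parse the result back to confirm it is well-formed XML.
voice = ET.fromstring(ssml).find(f"{{{SSML_NS}}}voice")
print(voice.get("name"), "|", voice.text)

request = build_synthesis_request("eastus", "YourEndpointId", "YourResourceKey", ssml)
print(request.get_method(), request.full_url)
```

Escaping the text and attribute values keeps characters such as `&` and `<` from breaking the markup.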

Switch to a new voice model in your product

After you update your voice model to the latest engine version, or when you want to switch to a new voice in your product, you need to deploy the new voice model to a new endpoint. Redeploying a new voice model on an existing endpoint isn't supported. After deployment, switch the traffic to the newly created endpoint. We recommend that you move the traffic to the new endpoint in a test environment first to confirm that it works well, and then move it in the production environment. Keep the old endpoint during the transition so that you can switch back if problems occur with the new endpoint. If the traffic has been running well on the new endpoint for about 24 hours (recommended value), you can delete your old endpoint.

Note

If your voice name is changed and you are using Speech Synthesis Markup Language (SSML), be sure to use the new voice name in SSML.
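The switch-over procedure described in this section can be tracked on the application side with a small amount of state. The following Python sketch is a hypothetical illustration: the `EndpointSwitch` class and its method names are not part of any SDK, and the 24-hour period is the recommended value from the text above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Recommended observation period before deleting the old endpoint.
SOAK_PERIOD = timedelta(hours=24)


@dataclass
class EndpointSwitch:
    """Tracks which endpoint ID receives traffic during a model switch."""

    active_id: str
    previous_id: Optional[str] = None
    switched_at: Optional[datetime] = None

    def switch_to(self, new_id: str, now: datetime) -> None:
        # Keep the old endpoint ID so traffic can be moved back.
        self.previous_id, self.active_id, self.switched_at = self.active_id, new_id, now

    def roll_back(self) -> None:
        if self.previous_id is None:
            raise RuntimeError("No previous endpoint to roll back to")
        self.active_id, self.previous_id, self.switched_at = self.previous_id, None, None

    def can_delete_previous(self, now: datetime) -> bool:
        # True only after the new endpoint has carried traffic for the soak period.
        return (
            self.previous_id is not None
            and self.switched_at is not None
            and now - self.switched_at >= SOAK_PERIOD
        )


switch = EndpointSwitch(active_id="old-endpoint-id")
start = datetime(2024, 1, 1, 9, 0)
switch.switch_to("new-endpoint-id", now=start)
print(switch.can_delete_previous(start + timedelta(hours=1)))   # False
print(switch.can_delete_previous(start + timedelta(hours=24)))  # True
```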

Suspend and resume an endpoint

You can suspend or resume an endpoint, to limit spend and conserve resources that aren't in use. You won't be charged while the endpoint is suspended. When you resume an endpoint, you can continue to use the same endpoint URL in your application to synthesize speech.

Note

The suspend operation will complete almost immediately. The resume operation completes in about the same amount of time as a new deployment.

This section describes how to suspend or resume a custom neural voice endpoint in the Speech Studio portal.

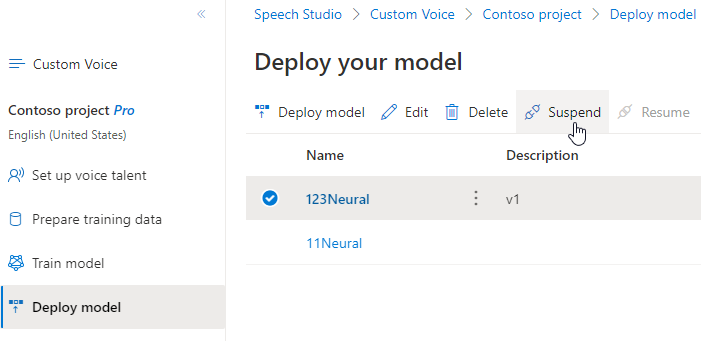

Suspend endpoint

To suspend and deactivate your endpoint, select Suspend from the Deploy model tab in Speech Studio.

In the dialog box that appears, select Submit. After the endpoint is suspended, Speech Studio will show the Successfully suspended endpoint notification.

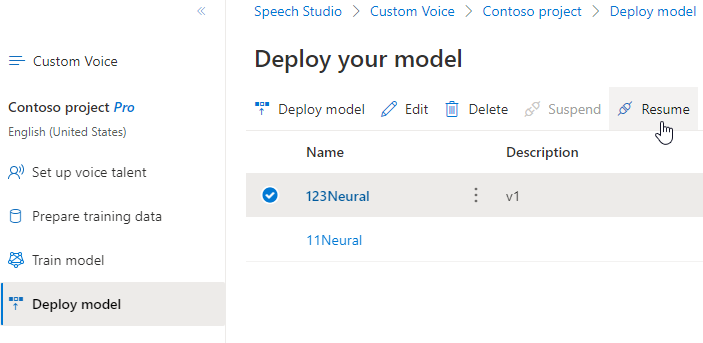

Resume endpoint

To resume and activate your endpoint, select Resume from the Deploy model tab in Speech Studio.

In the dialog box that appears, select Submit. After you successfully reactivate the endpoint, the status will change from Suspended to Succeeded.

Add a deployment endpoint

To create an endpoint, use the Endpoints_Create operation of the custom voice API. Construct the request body according to the following instructions:

- Set the required projectId property. See create a project.

- Set the required modelId property. See train a voice model.

- Set the required description property. The description can be changed later.

Make an HTTP PUT request using the URI as shown in the following Endpoints_Create example.

- Replace YourResourceKey with your Speech resource key.

- Replace YourResourceRegion with your Speech resource region.

- Replace EndpointId with an endpoint ID of your choice. The ID must be a GUID and must be unique within your Speech resource. The ID is used in the endpoint's URI and can't be changed later.

curl -v -X PUT -H "Ocp-Apim-Subscription-Key: YourResourceKey" -H "Content-Type: application/json" -d '{
  "description": "Endpoint for Jessica voice",
  "projectId": "ProjectId",
  "modelId": "JessicaModelId"
}' "https://YourResourceRegion.api.cognitive.microsoft.com/customvoice/endpoints/EndpointId?api-version=2023-12-01-preview"
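The same request can be prepared from code. The following Python sketch builds the PUT request with the standard library and generates a GUID when no endpoint ID is supplied. It doesn't send the request. The function name is hypothetical, and the region, key, and IDs are placeholders.

```python
import json
import uuid
from typing import Optional
from urllib.request import Request

API_VERSION = "2023-12-01-preview"


def build_create_endpoint_request(
    region: str,
    key: str,
    project_id: str,
    model_id: str,
    description: str,
    endpoint_id: Optional[str] = None,
) -> Request:
    """Prepare (but don't send) an Endpoints_Create PUT request."""
    # The endpoint ID must be a GUID; generate one if the caller didn't choose one.
    endpoint_id = endpoint_id or str(uuid.uuid4())
    url = (
        f"https://{region}.api.cognitive.microsoft.com/customvoice/endpoints/"
        f"{endpoint_id}?api-version={API_VERSION}"
    )
    # json.dumps produces strictly valid JSON, with no trailing commas.
    body = json.dumps(
        {"description": description, "projectId": project_id, "modelId": model_id}
    ).encode("utf-8")
    headers = {"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"}
    return Request(url, data=body, method="PUT", headers=headers)


create_request = build_create_endpoint_request(
    "eastus", "YourResourceKey", "ProjectId", "JessicaModelId", "Endpoint for Jessica voice"
)
print(create_request.get_method(), create_request.full_url)
```

To send the prepared request, you could pass it to `urllib.request.urlopen` or translate it to the HTTP client that your application already uses.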

You should receive a response body in the following format:

{
  "id": "9f50c644-2121-40e9-9ea7-544e48bfe3cb",
  "description": "Endpoint for Jessica voice",
  "projectId": "ProjectId",
  "modelId": "JessicaModelId",
  "properties": {
    "kind": "HighPerformance"
  },
  "status": "NotStarted",
  "createdDateTime": "2023-04-01T05:30:00.000Z",
  "lastActionDateTime": "2023-04-02T10:15:30.000Z"
}

The response header contains the Operation-Location property. Use this URI to get details about the Endpoints_Create operation. Here's an example of the response header:

Operation-Location: https://eastus.api.cognitive.microsoft.com/customvoice/operations/284b7e37-f42d-4054-8fa9-08523c3de345?api-version=2023-12-01-preview

Operation-Id: 284b7e37-f42d-4054-8fa9-08523c3de345

You use the endpoint Operation-Location in subsequent API requests to suspend and resume an endpoint and delete an endpoint.
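To get details about the operation, you can issue a GET request against the Operation-Location URI. The following Python sketch reads the header from a dictionary of response headers and prepares that request. The function name is hypothetical, and the shape of the operation response isn't described in this article, so the sketch doesn't parse it.

```python
from urllib.request import Request


def build_operation_status_request(response_headers: dict, key: str) -> Request:
    """Prepare (but don't send) a GET request for the Operation-Location URI."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    lookup = {name.lower(): value for name, value in response_headers.items()}
    location = lookup.get("operation-location")
    if location is None:
        raise KeyError("Response has no Operation-Location header")
    return Request(location, method="GET", headers={"Ocp-Apim-Subscription-Key": key})


# Header values copied from the example response header above.
example_headers = {
    "Operation-Location": (
        "https://eastus.api.cognitive.microsoft.com/customvoice/operations/"
        "284b7e37-f42d-4054-8fa9-08523c3de345?api-version=2023-12-01-preview"
    ),
    "Operation-Id": "284b7e37-f42d-4054-8fa9-08523c3de345",
}
status_request = build_operation_status_request(example_headers, "YourResourceKey")
print(status_request.get_method(), status_request.full_url)
```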

Suspend an endpoint

You can suspend or resume an endpoint, to limit spend and conserve resources that aren't in use. You won't be charged while the endpoint is suspended. When you resume an endpoint, you can continue to use the same endpoint URL in your application to synthesize speech.

To suspend an endpoint, use the Endpoints_Suspend operation of the custom voice API.

Make an HTTP POST request using the URI as shown in the following Endpoints_Suspend example.

- Replace YourResourceKey with your Speech resource key.

- Replace YourResourceRegion with your Speech resource region.

- Replace YourEndpointId with the endpoint ID that you received when you created the endpoint.

curl -v -X POST "https://YourResourceRegion.api.cognitive.microsoft.com/customvoice/endpoints/YourEndpointId:suspend?api-version=2023-12-01-preview" -H "Ocp-Apim-Subscription-Key: YourResourceKey" -H "content-type: application/json" -H "content-length: 0"

You should receive a response body in the following format:

{
  "id": "9f50c644-2121-40e9-9ea7-544e48bfe3cb",
  "description": "Endpoint for Jessica voice",
  "projectId": "ProjectId",
  "modelId": "JessicaModelId",
  "properties": {
    "kind": "HighPerformance"
  },
  "status": "Disabling",
  "createdDateTime": "2023-04-01T05:30:00.000Z",
  "lastActionDateTime": "2023-04-02T10:15:30.000Z"
}

Resume an endpoint

To resume an endpoint, use the Endpoints_Resume operation of the custom voice API.

Make an HTTP POST request using the URI as shown in the following Endpoints_Resume example.

- Replace YourResourceKey with your Speech resource key.

- Replace YourResourceRegion with your Speech resource region.

- Replace YourEndpointId with the endpoint ID that you received when you created the endpoint.

curl -v -X POST "https://YourResourceRegion.api.cognitive.microsoft.com/customvoice/endpoints/YourEndpointId:resume?api-version=2023-12-01-preview" -H "Ocp-Apim-Subscription-Key: YourResourceKey" -H "content-type: application/json" -H "content-length: 0"

You should receive a response body in the following format:

{
  "id": "9f50c644-2121-40e9-9ea7-544e48bfe3cb",
  "description": "Endpoint for Jessica voice",
  "projectId": "ProjectId",
  "modelId": "JessicaModelId",
  "properties": {
    "kind": "HighPerformance"
  },
  "status": "Running",
  "createdDateTime": "2023-04-01T05:30:00.000Z",
  "lastActionDateTime": "2023-04-02T10:15:30.000Z"
}
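The suspend and resume requests differ only in the action appended to the endpoint ID, so a single helper can cover both. The following Python sketch prepares either request without sending it. The function name is hypothetical, and the region, key, and endpoint ID are placeholders.

```python
from urllib.request import Request

API_VERSION = "2023-12-01-preview"


def build_endpoint_action_request(region: str, key: str, endpoint_id: str, action: str) -> Request:
    """Prepare (but don't send) an Endpoints_Suspend or Endpoints_Resume POST request."""
    if action not in ("suspend", "resume"):
        raise ValueError(f"Unsupported action: {action!r}")
    url = (
        f"https://{region}.api.cognitive.microsoft.com/customvoice/endpoints/"
        f"{endpoint_id}:{action}?api-version={API_VERSION}"
    )
    headers = {"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"}
    # An empty body mirrors the content-length: 0 header in the curl examples.
    return Request(url, data=b"", method="POST", headers=headers)


suspend_request = build_endpoint_action_request("eastus", "YourResourceKey", "YourEndpointId", "suspend")
resume_request = build_endpoint_action_request("eastus", "YourResourceKey", "YourEndpointId", "resume")
print(suspend_request.full_url)
print(resume_request.full_url)
```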

Delete an endpoint

To delete an endpoint, use the Endpoints_Delete operation of the custom voice API.

Make an HTTP DELETE request using the URI as shown in the following Endpoints_Delete example.

- Replace YourResourceKey with your Speech resource key.

- Replace YourResourceRegion with your Speech resource region.

- Replace YourEndpointId with the endpoint ID that you received when you created the endpoint.

curl -v -X DELETE "https://YourResourceRegion.api.cognitive.microsoft.com/customvoice/endpoints/YourEndpointId?api-version=2023-12-01-preview" -H "Ocp-Apim-Subscription-Key: YourResourceKey"

You should receive a response header with status code 204.
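As a sketch of the delete call from code, the following Python example prepares the DELETE request and checks a status code against the expected 204. Both function names are hypothetical, and the request isn't sent.

```python
from urllib.request import Request

API_VERSION = "2023-12-01-preview"


def build_delete_endpoint_request(region: str, key: str, endpoint_id: str) -> Request:
    """Prepare (but don't send) an Endpoints_Delete request."""
    url = (
        f"https://{region}.api.cognitive.microsoft.com/customvoice/endpoints/"
        f"{endpoint_id}?api-version={API_VERSION}"
    )
    return Request(url, method="DELETE", headers={"Ocp-Apim-Subscription-Key": key})


def deletion_succeeded(status_code: int) -> bool:
    """A successful delete returns status code 204 with no response body."""
    return status_code == 204


delete_request = build_delete_endpoint_request("eastus", "YourResourceKey", "YourEndpointId")
print(delete_request.get_method(), delete_request.full_url)
```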
