Tutorial: Copy data from Azure Data Box via NFS

This tutorial describes how to connect to your Data Box and copy data from it to an on-premises data server via NFS, using the local web UI to configure access. The data on your Data Box is exported from your Azure Storage account.

In this tutorial, you learn how to:

- Review the prerequisites

- Connect to Data Box

- Copy data from Data Box

Prerequisites

Before you begin, make sure that:

- You have placed the order for Azure Data Box.

  - For an import order, see Tutorial: Order Azure Data Box.

  - For an export order, see Tutorial: Order Azure Data Box.

- You've received your Data Box and the order status in the portal is Delivered.

- You have a host computer to which you want to copy the data from your Data Box. Your host computer must:

  - Run a supported operating system.

  - Be connected to a high-speed network. We strongly recommend that you have at least one 10-GbE connection. If a 10-GbE connection isn't available, you can use a 1-GbE data link, but copy speeds will be slower.

Connect to Data Box

Based on the storage account selected, Data Box creates up to:

- Three shares for each associated storage account for GPv1 and GPv2.

- One share for premium storage.

- One share for a blob storage account.

Under block blob and page blob shares, first-level entities are containers, and second-level entities are blobs. Under shares for Azure Files, first-level entities are shares, and second-level entities are files.

The following table shows the UNC path to the shares on your Data Box and Azure Storage path URL where the data is uploaded. The final Azure Storage path URL can be derived from the UNC share path.

| Blobs and Files | UNC share path | Azure Storage URL |
|---|---|---|
| Azure Block blobs | `\\<DeviceIPAddress>\<StorageAccountName_BlockBlob>\<ContainerName>\files\a.txt` | `https://<StorageAccountName>.blob.core.windows.net/<ContainerName>/files/a.txt` |
| Azure Page blobs | `\\<DeviceIPAddress>\<StorageAccountName_PageBlob>\<ContainerName>\files\a.txt` | `https://<StorageAccountName>.blob.core.windows.net/<ContainerName>/files/a.txt` |
| Azure Files | `\\<DeviceIPAddress>\<StorageAccountName_AzFile>\<ShareName>\files\a.txt` | `https://<StorageAccountName>.file.core.windows.net/<ShareName>/files/a.txt` |
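The mapping in the table can be sketched as a small shell function. This is only an illustration: the function name `share_path_to_url` is hypothetical, and it assumes that share names follow the `<StorageAccountName>_<BlockBlob|PageBlob|AzFile>` pattern shown in the table and that the path under the share is written with forward slashes, as it would be on an NFS mount.

```shell
# Hypothetical helper (not part of any Data Box tooling): map a share name and
# a path under that share to the Azure Storage URL pattern from the table.
share_path_to_url() {
  share="$1"        # e.g. mystoracct_BlockBlob
  rel_path="$2"     # e.g. mycontainer/files/a.txt
  account="${share%_*}"    # text before the last underscore
  suffix="${share##*_}"    # text after the last underscore
  case "$suffix" in
    BlockBlob|PageBlob) service="blob" ;;
    AzFile)             service="file" ;;
    *) echo "unknown share type: $suffix" >&2; return 1 ;;
  esac
  printf 'https://%s.%s.core.windows.net/%s\n' "$account" "$service" "$rel_path"
}

share_path_to_url "mystoracct_BlockBlob" "mycontainer/files/a.txt"
# prints https://mystoracct.blob.core.windows.net/mycontainer/files/a.txt
```

Splitting on the last underscore is a simplification for this sketch; a share whose name does not end in one of the three suffixes is rejected rather than guessed at.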

If you are using a Linux host computer, perform the following steps to configure Data Box to allow access to NFS clients. Data Box can connect as many as five NFS clients at a time.

Supply the IP addresses of the allowed clients that can access the share:

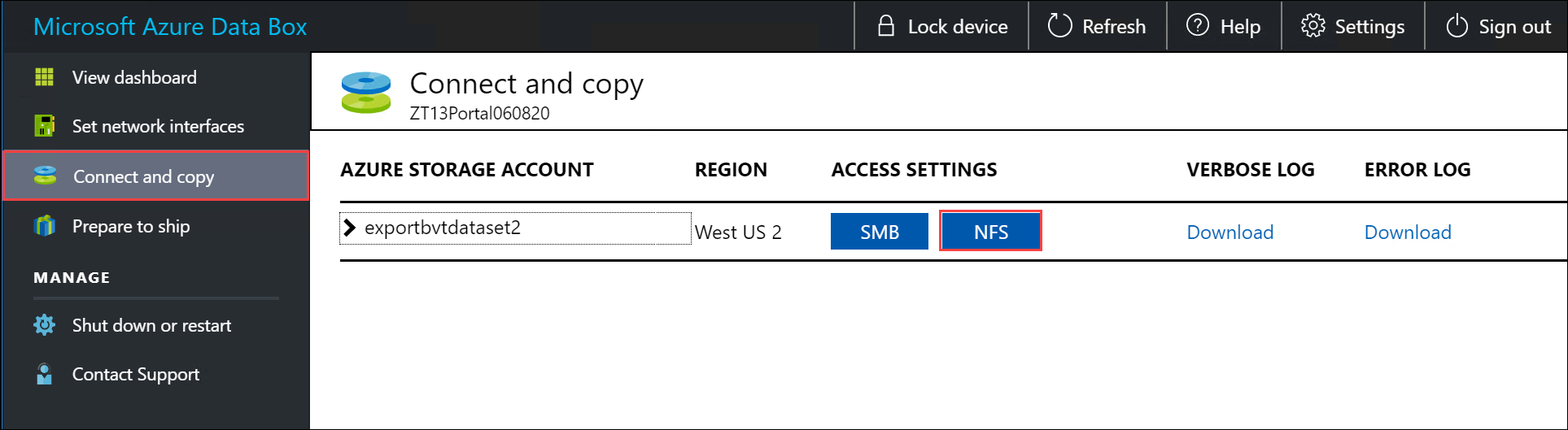

In the local web UI, go to the Connect and copy page. Under NFS settings, click NFS client access.

To add an NFS client, supply the client's IP address and click Add. When you finish, click OK.

Ensure that the Linux host computer has a supported version of NFS client installed. Use the specific version for your Linux distribution.

Once the NFS client is installed, use the following command to mount the NFS share on your Data Box device:

    sudo mount <Data Box device IP>:/<NFS share on Data Box device> <Path to the folder on local Linux computer>

The following example shows how to connect via NFS to a Data Box share. The Data Box device IP is `10.161.23.130`, the share `Mystoracct_Blob` is mounted on the ubuntuVM, mount point being `/home/databoxubuntuhost/databox`.

    sudo mount -t nfs 10.161.23.130:/Mystoracct_Blob /home/databoxubuntuhost/databox

For Mac clients, you will need to add an additional option as follows:

    sudo mount -t nfs -o sec=sys,resvport 10.161.23.130:/Mystoracct_Blob /home/databoxubuntuhost/databox

Always create a folder for the files that you intend to copy under the share and then copy the files to that folder. The folder created under block blob and page blob shares represents a container to which data is uploaded as blobs. You cannot copy files directly to root folder in the storage account.
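The Linux and Mac mount commands differ only in the extra `-o sec=sys,resvport` option. A minimal sketch of that choice, assuming a hypothetical helper named `databox_mount_cmd` that prints the command for review instead of running it, and that treats a `uname -s` value of `Darwin` as a Mac client:

```shell
# Hypothetical helper: print (do not run) the mount command for a Data Box
# NFS share. The fourth argument overrides OS detection; it defaults to the
# output of `uname -s`, where "Darwin" indicates macOS.
databox_mount_cmd() {
  ip="$1"
  share="$2"
  mount_point="$3"
  os="${4:-$(uname -s)}"
  if [ "$os" = "Darwin" ]; then
    echo "sudo mount -t nfs -o sec=sys,resvport $ip:/$share $mount_point"
  else
    echo "sudo mount -t nfs $ip:/$share $mount_point"
  fi
}

# Example: show the command for the share used in this tutorial.
databox_mount_cmd 10.161.23.130 Mystoracct_Blob /home/databoxubuntuhost/databox
```

Printing the command first makes it easy to confirm the device IP, share name, and mount point before running it with elevated privileges.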

Copy data from Data Box

Once you're connected to the Data Box shares, the next step is to copy data.

Before you begin the data copy:

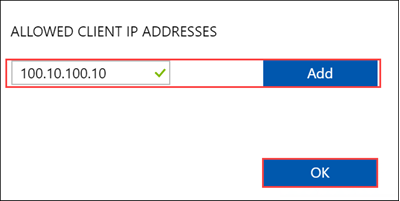

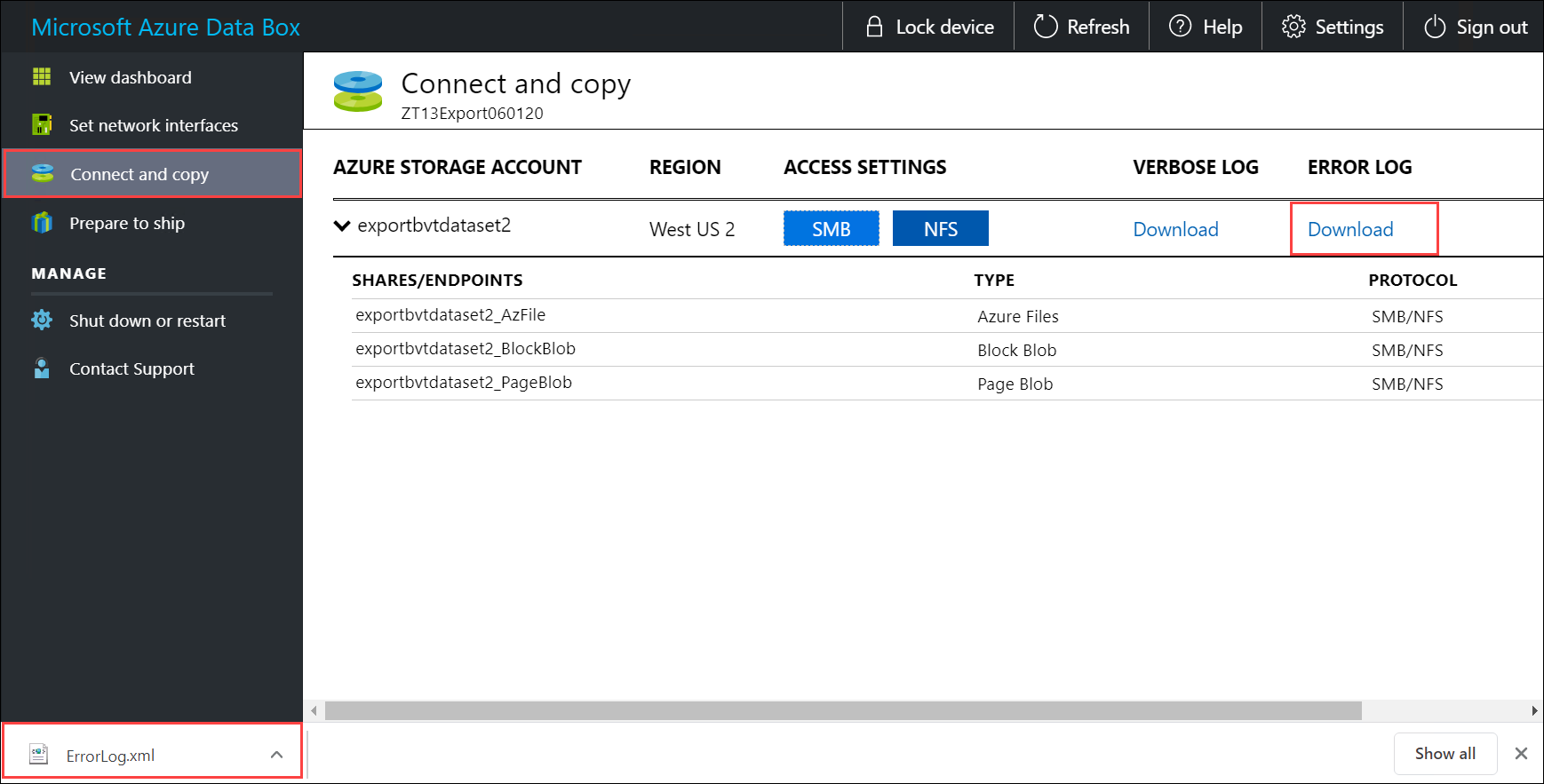

Download the copy log. On the Connect and copy page, select Copy log. When prompted, save the log on your system.

If your copy log size is too large, you will need to use Azure Storage Explorer or AzCopy in order to download the copy log and prevent any failures.

- If you are using Azure Storage Explorer to download the copy log, you can map your Azure storage account in Azure Storage Explorer and then download the raw file.

- If you are using AzCopy to download the copy log, you can use the `AzCopy copy` command to copy the log file from your storage account to your local system.

Repeat the steps to download the verbose log.

Review the verbose log. The verbose log contains a list of all the files that were successfully exported from Azure Storage account. The log also contains file size and checksum computation.

    <File CloudFormat="BlockBlob" Path="validblobdata/test1.2.3.4" Size="1024" crc64="7573843669953104266"> </File>
    <File CloudFormat="BlockBlob" Path="validblobdata/helloEndWithDot..txt" Size="11" crc64="7320094093915972193"> </File>
    <File CloudFormat="BlockBlob" Path="validblobdata/test..txt" Size="12" crc64="17906086011702236012"> </File>
    <File CloudFormat="BlockBlob" Path="validblobdata/test1" Size="1024" crc64="7573843669953104266"> </File>
    <File CloudFormat="BlockBlob" Path="validblobdata/test1.2.3" Size="1024" crc64="7573843669953104266"> </File>
    <File CloudFormat="BlockBlob" Path="validblobdata/.......txt" Size="11" crc64="7320094093915972193"> </File>
    <File CloudFormat="BlockBlob" Path="validblobdata/copylogb08fa3095564421bb550d775fff143ed====..txt" Size="53638" crc64="1147139997367113454"> </File>
    <File CloudFormat="BlockBlob" Path="validblobdata/testmaxChars-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-123456790-12345679" Size="1024" crc64="7573843669953104266"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/file0" Size="0" crc64="0"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/file1" Size="0" crc64="0"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/file4096_000001" Size="4096" crc64="16969371397892565512"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/file4096_000000" Size="4096" crc64="16969371397892565512"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/64KB-Seed10.dat" Size="65536" crc64="10746682179555216785"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/LiveSiteReport_Oct.xlsx" Size="7028" crc64="6103506546789189963"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/NE_Oct_GeoReport.xlsx" Size="103197" crc64="13305485882546035852"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/64KB-Seed1.dat" Size="65536" crc64="3140622834011462581"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/1mbfiles-0-0" Size="1048576" crc64="16086591317856295272"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/file524288_000001" Size="524288" crc64="8908547729214703832"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/4mbfiles-0-0" Size="4194304" crc64="1339017920798612765"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/file524288_000000" Size="524288" crc64="8908547729214703832"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/8mbfiles-0-1" Size="8388608" crc64="3963298606737216548"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/1mbfiles-0-1" Size="1048576" crc64="11061759121415905887"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/XLS-10MB.xls" Size="1199104" crc64="2218419493992437463"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/8mbfiles-0-0" Size="8388608" crc64="1072783424245035917"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/4mbfiles-0-1" Size="4194304" crc64="9991307204216370812"> </File>
    <File CloudFormat="BlockBlob" Path="export-ut-container/VL_Piracy_Negtive10_TPNameAndGCS.xlsx" Size="12398699" crc64="13526033021067702820"> </File>

Review the copy log for any errors. This log indicates the files that could not be copied due to errors.

Here is a sample output of copy log when there were no errors and all the files were copied during the data copy from Azure to Data Box device.

    <CopyLog Summary="Summary">
      <Status>Succeeded</Status>
      <TotalFiles_Blobs>5521</TotalFiles_Blobs>
      <FilesErrored>0</FilesErrored>
    </CopyLog>

Here is a sample output when the copy log has errors and some of the files failed to copy from Azure.

    <ErroredEntity CloudFormat="AppendBlob" Path="export-ut-appendblob/wastorage.v140.3.0.2.nupkg">
      <Category>UploadErrorCloudHttp</Category>
      <ErrorCode>400</ErrorCode>
      <ErrorMessage>UnsupportBlobType</ErrorMessage>
      <Type>File</Type>
    </ErroredEntity>
    <ErroredEntity CloudFormat="AppendBlob" Path="export-ut-appendblob/xunit.console.Primary_2020-05-07_03-54-42-PM_27444.hcsml">
      <Category>UploadErrorCloudHttp</Category>
      <ErrorCode>400</ErrorCode>
      <ErrorMessage>UnsupportBlobType</ErrorMessage>
      <Type>File</Type>
    </ErroredEntity>
    <ErroredEntity CloudFormat="AppendBlob" Path="export-ut-appendblob/xunit.console.Primary_2020-05-07_03-54-42-PM_27444 (1).hcsml">
      <Category>UploadErrorCloudHttp</Category>
      <ErrorCode>400</ErrorCode>
      <ErrorMessage>UnsupportBlobType</ErrorMessage>
      <Type>File</Type>
    </ErroredEntity>
    <CopyLog Summary="Summary">
      <Status>Failed</Status>
      <TotalFiles_Blobs>4</TotalFiles_Blobs>
      <FilesErrored>3</FilesErrored>
    </CopyLog>

You have the following options to export those files:

- You can transfer the files that could not be copied over the network.

- If your data size was larger than the usable device capacity, then a partial copy occurs and all the files that were not copied are listed in this log. You can use this log as an input XML to create a new Data Box order and then copy over these files.
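To see at a glance which files a copy log reports as failed, the log can be scanned with standard text tools. The following is a minimal sketch, assuming the copy log uses the `ErroredEntity` and `FilesErrored` elements shown in the samples above. The function names are hypothetical, and the sketch does plain text matching, so XML entities such as `&amp;` in a path are not decoded.

```shell
# Hypothetical helpers for a downloaded copy log file.

# Print the Path attribute of every <ErroredEntity ...> element, one per line.
copy_log_errored_paths() {
  grep -o '<ErroredEntity [^>]*>' "$1" |
    sed -E 's/.*Path="([^"]*)".*/\1/'
}

# Print the number stored in the <FilesErrored> summary element.
copy_log_error_count() {
  grep -o '<FilesErrored>[0-9]*</FilesErrored>' "$1" |
    sed -E 's/[^0-9]//g'
}

# Usage (copylog.xml is a placeholder file name):
#   copy_log_error_count copylog.xml
#   copy_log_errored_paths copylog.xml
```

Comparing the count against the number of listed paths is a quick consistency check before deciding how to export the remaining files.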

You can now begin data copy. If you're using a Linux host computer, use a copy utility similar to Robocopy. Some of the alternatives available in Linux are rsync, FreeFileSync, Unison, or Ultracopier.

The cp command is one of the best options to copy a directory. For more information on the usage, go to cp man pages.

If using the rsync option for a multi-threaded copy, follow these guidelines:

Install the CIFS Utils or NFS client package, depending on the filesystem your Linux client is using. The package names below are for Debian and Ubuntu, where the NFS client package is nfs-common; on RHEL-based distributions it is nfs-utils.

    sudo apt-get install cifs-utils
    sudo apt-get install nfs-common

Install rsync and Parallel (the commands vary depending on the Linux distribution).

    sudo apt-get install rsync
    sudo apt-get install parallel

Create a mount point.

    sudo mkdir /mnt/databox

Mount the volume.

    sudo mount -t nfs4 <Data Box IP address>:/<share_name> /mnt/databox

Mirror folder directory structure.

    rsync -za --include='*/' --exclude='*' /local_path/ /mnt/databox

Copy files.

    cd /local_path/; find -L . -type f | parallel -j X rsync -za {} /mnt/databox/{}

where -j specifies the number of parallel jobs and X is the number of parallel copies.

We recommend that you start with 16 parallel copies and increase the number of threads depending on the resources available.
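Where GNU Parallel isn't installed, the same two-pass pattern (mirror the directory structure, then copy files in parallel) can be approximated with `find`, `xargs -P`, and `cp`. This is a sketch that swaps in different tools from the rsync and Parallel commands above, not a replacement recommended by this tutorial. The function name `parallel_copy` is hypothetical, and the sketch assumes an `xargs` that supports `-0`, `-P`, and `-I` (GNU and BSD versions do). The default of 16 jobs follows the starting point suggested above.

```shell
# Hypothetical helper: copy a directory tree with several cp processes at once.
#   $1 = source directory, $2 = destination directory, $3 = parallel jobs
parallel_copy() {
  src="$1"
  dst="$2"
  jobs="${3:-16}"
  mkdir -p "$dst" || return 1
  # Resolve the destination to an absolute path before changing directory.
  dst_abs=$(cd "$dst" && pwd) || return 1
  (
    cd "$src" || exit 1
    # Pass 1: recreate the directory structure under the destination.
    find . -type d -print0 | xargs -0 -I{} mkdir -p "$dst_abs/{}"
    # Pass 2: copy regular files, running up to $jobs copies at a time.
    find . -type f -print0 | xargs -0 -P "$jobs" -I{} cp {} "$dst_abs/{}"
  )
}

# Usage (paths are placeholders):
#   parallel_copy /mnt/databox/mycontainer /data/export 16
```

Unlike rsync, plain `cp` does not skip files that are already up to date, so this sketch suits a first full copy better than a resumed one.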

Important

The following Linux file types are not supported: symbolic links, character files, block files, sockets, and pipes. These file types will result in failures during the Prepare to ship step.
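The unsupported file types listed above can be located ahead of time with `find`. A minimal sketch, assuming a hypothetical helper named `find_unsupported` that only lists matches and leaves any cleanup to you:

```shell
# Hypothetical helper: list symbolic links, character files, block files,
# sockets, and pipes under the given directory (default: current directory).
find_unsupported() {
  find "${1:-.}" \( -type l -o -type c -o -type b -o -type s -o -type p \) -print
}

# Usage (path is a placeholder):
#   find_unsupported /home/databoxubuntuhost/databox
```

An empty result means none of these file types were found under the directory that was scanned.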

Once the copy is complete, go to the Dashboard and verify the used space and the free space on your device.
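After the copy completes, the file sizes recorded in the verbose log can also be compared with the files on the host. The following is a rough sketch, assuming the verbose log uses the `File` elements with `Path` and `Size` attributes shown earlier and that the copied files sit under one local root folder with the same relative paths. The function name `list_size_mismatches` is hypothetical. It does not check the `crc64` values, and it does plain text matching without decoding XML entities.

```shell
# Hypothetical helper: report files that are missing locally or whose size
# differs from the Size attribute in the verbose log.
#   $1 = verbose log file, $2 = local folder that holds the copied files
list_size_mismatches() {
  log="$1"
  root="$2"
  grep -o '<File [^>]*>' "$log" |
    sed -E 's/.*Path="([^"]*)".*Size="([^"]*)".*/\2 \1/' |
    while read -r size path; do
      if [ ! -f "$root/$path" ]; then
        echo "MISSING $path"
        continue
      fi
      # wc -c prints the byte count; tr strips padding added by some systems.
      actual=$(wc -c < "$root/$path" | tr -d ' ')
      if [ "$actual" != "$size" ]; then
        echo "SIZE $path (expected $size, found $actual)"
      fi
    done
}

# Usage (file and folder names are placeholders):
#   list_size_mismatches verboselog.xml /data/export
```

No output means every file listed in the log exists locally with the recorded size.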

You can now proceed to ship your Data Box to Microsoft.

Next steps

In this tutorial, you learned about Azure Data Box topics such as:

- Prerequisites

- Connect to Data Box

- Copy data from Data Box

Advance to the next tutorial to learn how to ship your Data Box back to Microsoft.
