Process data at the edge with Azure IoT Data Processor Preview pipelines

Important

Azure IoT Operations Preview – enabled by Azure Arc is currently in PREVIEW. You shouldn't use this preview software in production environments.

See the Supplemental Terms of Use for Microsoft Azure Previews for legal terms that apply to Azure features that are in beta, preview, or otherwise not yet released into general availability.

Industrial assets generate data in many different formats and use various communication protocols. This diversity of data sources, coupled with varying schemas and unit measures, makes it difficult to use and analyze raw industrial data effectively. Furthermore, for compliance, security, and performance reasons, you can’t upload all datasets to the cloud.

Traditionally, processing this data requires expensive, complex, and time-consuming data engineering. Azure IoT Data Processor Preview is a configurable data processing service that can manage the complexities and diversity of industrial data. Use Data Processor to make data from disparate sources more understandable, usable, and valuable.

What is Azure IoT Data Processor Preview?

Azure IoT Data Processor Preview is a component of Azure IoT Operations Preview. Data Processor is a pipeline-based data processing engine that lets you aggregate, enrich, normalize, and filter the data from your devices. You can process data at the edge before you send it to other services, either at the edge or in the cloud.

Data Processor ingests real-time streaming data from sources such as OPC UA servers, historians, and other industrial systems. It normalizes this data by converting various data formats into a standardized, structured format, which makes it easier to query and analyze. Data Processor can also contextualize the data by enriching it with reference data or last known values (LKV) to provide a comprehensive view of your industrial operations.
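To make normalization and LKV contextualization concrete, here is a minimal Python sketch. It isn't the Data Processor API or configuration format. The `Reading` record, the `normalize` function, the `LkvStore` class, and the two input formats are hypothetical names chosen for illustration. The sketch converts a CSV payload and a JSON payload into one standardized record shape, remembers the last known value of each metric, and uses those values to enrich a later message.

```python
import csv
import io
import json
from dataclasses import dataclass


# Hypothetical standardized record; field names are illustrative only.
@dataclass
class Reading:
    asset: str
    metric: str
    value: float


def normalize(payload: str, fmt: str) -> list[Reading]:
    """Convert a raw payload in a known format into standardized readings."""
    if fmt == "json":
        # Example input: {"asset": "oven-1", "temperature": 98.5}
        doc = json.loads(payload)
        asset = doc.pop("asset")
        return [Reading(asset, key, float(val)) for key, val in doc.items()]
    if fmt == "csv":
        # Example input: "asset,metric,value\noven-1,humidity,41"
        rows = csv.DictReader(io.StringIO(payload))
        return [Reading(r["asset"], r["metric"], float(r["value"])) for r in rows]
    raise ValueError(f"unsupported format: {fmt}")


class LkvStore:
    """Keeps the last known value of each (asset, metric) pair."""

    def __init__(self) -> None:
        self._values: dict[tuple[str, str], float] = {}

    def update(self, reading: Reading) -> None:
        self._values[(reading.asset, reading.metric)] = reading.value

    def context(self, asset: str) -> dict[str, float]:
        return {m: v for (a, m), v in self._values.items() if a == asset}


# A humidity reading arrives as CSV and is remembered as the last known value.
lkv = LkvStore()
for r in normalize("asset,metric,value\noven-1,humidity,41", "csv"):
    lkv.update(r)

# A later temperature reading arrives as JSON and is enriched with that LKV.
incoming = normalize('{"asset": "oven-1", "temperature": 98.5}', "json")[0]
enriched = {"asset": incoming.asset, incoming.metric: incoming.value,
            **lkv.context(incoming.asset)}
print(enriched)  # {'asset': 'oven-1', 'temperature': 98.5, 'humidity': 41.0}
```

Because both inputs map to the same record shape, downstream logic such as the enrichment step doesn't need to know which format a reading originally arrived in.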

The output from Data Processor is clean, enriched, and standardized data that's ready for downstream applications such as real-time analytics and insights tools. Data Processor significantly reduces the time required to transform raw data into actionable insights.

Key Data Processor features include:

- Flexible data normalization to convert multiple data formats into a standardized structure.

- Enrichment of data streams with reference or LKV data to enhance context and enable better insights.

- Built-in Microsoft Fabric integration to simplify the analysis of clean data.

- Ability to process data from various sources and publish the data to various destinations.

  As a data-agnostic processing platform, Data Processor can ingest data in any format, process it, and then write it out to a destination. To support these capabilities, Data Processor can deserialize and serialize various formats. For example, it can serialize to Parquet to write files to Microsoft Fabric.

- Automatic and configurable retry policies to handle transient errors when sending data to cloud destinations.
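Data Processor's retry policies are configured in the service itself. The following Python sketch shows only the general technique such a policy typically applies, capped exponential backoff with jitter, and makes no claim about the service's actual defaults or parameters. `TransientError`, `send_with_retry`, and the `max_retries`, `base_delay`, and `max_delay` values are hypothetical.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying, such as a timeout."""


def send_with_retry(send: Callable[[], T], max_retries: int = 4,
                    base_delay: float = 0.5, max_delay: float = 8.0,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `send`, retrying transient failures with capped exponential backoff."""
    attempt = 0
    while True:
        try:
            return send()
        except TransientError:
            if attempt >= max_retries:
                raise  # Retries exhausted: surface the error to the caller.
            # The delay doubles on each attempt, is capped at max_delay, and is
            # scaled by random jitter so many senders don't retry in lockstep.
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(delay * random.uniform(0.5, 1.0))
            attempt += 1


# Usage: a destination that fails twice before accepting the data.
calls = {"n": 0}


def flaky_send() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("destination unavailable")
    return "ok"


result = send_with_retry(flaky_send, sleep=lambda seconds: None)  # skip real waits
print(result, calls["n"])  # ok 3
```

Injecting the `sleep` function keeps the sketch testable without real delays. Only errors classified as transient are retried; any other exception propagates immediately.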

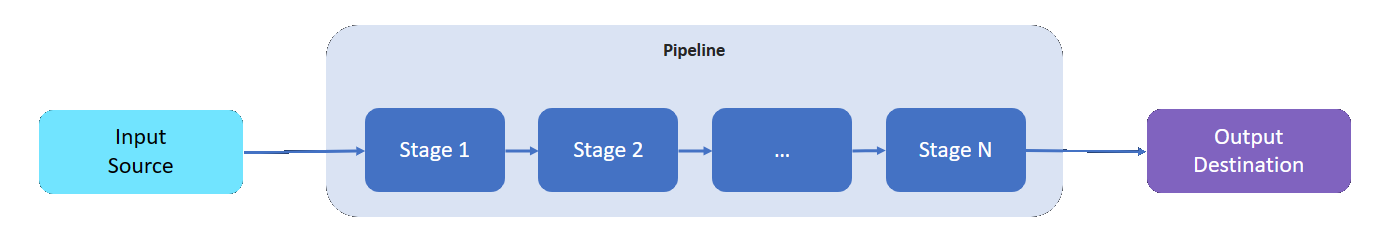

What is a pipeline?

A Data Processor pipeline has an input source where it reads data from, a destination where it writes processed data to, and a variable number of intermediate stages to process the data.

The intermediate stages represent the different available data processing capabilities:

- You can add as many intermediate stages as you need to a pipeline.

- You can order the intermediate stages of a pipeline as you need. You can reorder stages after you create a pipeline.

- Each stage adheres to a defined implementation interface and input/output schema contract.

- Each stage is independent of the other stages in the pipeline.

- All stages operate within the scope of a partition. Data isn't shared between different partitions.

- Data flows only forward, from one stage to the next.
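The pipeline rules above can be modeled in a few lines of Python. This is a simplified, batch-style sketch, not how Data Processor is implemented or configured. The `Pipeline` class, the stage factories, and the `partition_key` parameter are hypothetical. Each stage is a function that returns a message or `None` to drop it. A fresh set of stages is built for every partition, so any state a stage keeps stays within that partition, and each message moves forward through the stages in order.

```python
from collections import defaultdict
from typing import Callable, Iterable, Optional

Message = dict
# A stage takes one message and returns a message, or None to drop it.
Stage = Callable[[Message], Optional[Message]]
# A factory builds a new stage instance, so state is never shared across partitions.
StageFactory = Callable[[], Stage]


class Pipeline:
    """Hypothetical model: a source, ordered intermediate stages, a destination."""

    def __init__(self, stage_factories: list[StageFactory],
                 destination: Callable[[Message], None], partition_key: str) -> None:
        self.stage_factories = stage_factories
        self.destination = destination
        self.partition_key = partition_key

    def run(self, source: Iterable[Message]) -> None:
        partitions: dict[str, list[Message]] = defaultdict(list)
        for msg in source:
            partitions[msg[self.partition_key]].append(msg)
        for messages in partitions.values():
            # Independent stage instances per partition.
            stages = [make() for make in self.stage_factories]
            for msg in messages:
                out: Optional[Message] = msg
                for stage in stages:  # Data flows only forward.
                    out = stage(out)
                    if out is None:
                        break
                if out is not None:
                    self.destination(out)


def make_drop_negative() -> Stage:
    return lambda msg: msg if msg["value"] >= 0 else None


def make_counter() -> Stage:
    count = 0

    def stage(msg: Message) -> Message:
        nonlocal count
        count += 1
        return {**msg, "seq": count}

    return stage


sink: list[Message] = []
pipeline = Pipeline([make_drop_negative, make_counter], sink.append, partition_key="asset")
pipeline.run([
    {"asset": "a", "value": 1},
    {"asset": "b", "value": 5},
    {"asset": "a", "value": -2},
    {"asset": "a", "value": 3},
])
print(sink)
# [{'asset': 'a', 'value': 1, 'seq': 1}, {'asset': 'a', 'value': 3, 'seq': 2},
#  {'asset': 'b', 'value': 5, 'seq': 1}]
```

The counter restarts at 1 for partition `b` because it receives its own stage instance, and reordering the two factories in the list changes the result, which mirrors how stage order matters in a pipeline.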

Data Processor pipelines can use the following stages:

| Stage | Description |
|---|---|
| Source - MQ | Retrieves data from an MQTT broker. |
| Source - HTTP endpoint | Retrieves data from an HTTP endpoint. |
| Source - SQL | Retrieves data from a Microsoft SQL Server database. |
| Source - InfluxDB | Retrieves data from an InfluxDB database. |
| Filter | Filters data coming through the stage. For example, filter out any message with a temperature outside the 50°F to 150°F range. |
| Transform | Normalizes the structure of the data. For example, change the structure from `{"Name": "Temp", "value": 50}` to `{"temp": 50}`. |
| LKV | Stores selected metric values in an LKV store. For example, store only temperature and humidity measurements in the LKV store and ignore the rest. A subsequent stage can enrich a message with the stored LKV data. |
| Enrich | Enriches messages with data from the reference data store. For example, add an operator name and lot number from the operations dataset. |
| Aggregate | Aggregates values passing through the stage. For example, when temperature values are sent every 100 milliseconds, emit an average temperature metric every 30 seconds. |
| Call out | Makes a call to an external HTTP or gRPC service. For example, call an Azure Function to convert from a custom message format to JSON. |
| Destination - MQ | Writes your processed, clean, and contextualized data to an MQTT topic. |
| Destination - Reference | Writes your processed data to the built-in reference store. Other pipelines can use the reference store to enrich their messages. |
| Destination - gRPC | Sends your processed, clean, and contextualized data to a gRPC endpoint. |
| Destination - HTTP | Sends your processed, clean, and contextualized data to an HTTP endpoint. |
| Destination - Fabric Lakehouse | Sends your processed, clean, and contextualized data to a Microsoft Fabric lakehouse in the cloud. |
| Destination - Azure Data Explorer | Sends your processed, clean, and contextualized data to an Azure Data Explorer endpoint in the cloud. |
| Destination - Azure Blob Storage | Sends your processed, clean, and contextualized data to an Azure Blob Storage endpoint in the cloud. |
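The Filter, Transform, and Aggregate examples in the table can be expressed as plain functions. The following Python sketch restates that logic only. In Data Processor, you configure these stages rather than write code, and the function names, the `temperature` field, the inclusive range bounds, and the fixed-window (tumbling) averaging are all illustrative assumptions.

```python
from statistics import mean


def in_range(msg: dict, low: float = 50.0, high: float = 150.0) -> bool:
    """Filter: keep a message only if its temperature is within [low, high] °F."""
    return low <= msg["temperature"] <= high


def transform(msg: dict) -> dict:
    """Transform: reshape {"Name": "Temp", "value": 50} into {"temp": 50}."""
    return {msg["Name"].lower(): msg["value"]}


def tumbling_average(samples: list[tuple[int, float]],
                     window_ms: int = 30_000) -> list[tuple[int, float]]:
    """Aggregate: average (timestamp_ms, value) samples per fixed time window."""
    windows: dict[int, list[float]] = {}
    for ts, value in samples:
        start = ts - ts % window_ms  # Start time of the window containing ts.
        windows.setdefault(start, []).append(value)
    return [(start, mean(vals)) for start, vals in sorted(windows.items())]


print(transform({"Name": "Temp", "value": 50}))  # {'temp': 50}

msgs = [{"temperature": t} for t in (45, 72, 151, 150)]
print([m["temperature"] for m in msgs if in_range(m)])  # [72, 150]

# One sample every 100 ms for 60 s yields two 30-second windows of 300 samples.
samples = [(i * 100, 70.0 if i < 300 else 80.0) for i in range(600)]
print(tumbling_average(samples))  # [(0, 70.0), (30000, 80.0)]
```

Here the aggregation reduces 600 incoming temperature values to two averaged metrics, one per 30-second window, which is the data reduction the Aggregate example describes.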

Next step

To try out Data Processor pipelines, see the Azure IoT Operations quickstarts.

To learn more about Data Processor, see:
