Perform safe rollout of new deployments for real-time inference

APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

In this article, you'll learn how to deploy a new version of a machine learning model in production without causing any disruption. You'll use a blue-green deployment strategy (also known as a safe rollout strategy) to introduce a new version of a web service to production. This strategy will allow you to roll out your new version of the web service to a small subset of users or requests before rolling it out completely.

This article assumes you're using online endpoints, that is, endpoints that are used for online (real-time) inferencing. There are two types of online endpoints: managed online endpoints and Kubernetes online endpoints. For more information on endpoints and the differences between the two types, see the article What are Azure Machine Learning endpoints?

The main example in this article uses managed online endpoints for deployment. To use Kubernetes endpoints instead, see the notes in this document that are inline with the managed online endpoint discussion.

In this article, you'll learn to:

- Define an online endpoint with a deployment called "blue" to serve version 1 of a model

- Scale the blue deployment so that it can handle more requests

- Deploy version 2 of the model (called the "green" deployment) to the endpoint, but send the deployment no live traffic

- Test the green deployment in isolation

- Mirror a percentage of live traffic to the green deployment to validate it

- Send a small percentage of live traffic to the green deployment

- Send over all live traffic to the green deployment

- Delete the now-unused v1 blue deployment

Prerequisites

Before following the steps in this article, make sure you have the following prerequisites:

- The Azure CLI and the ml extension to the Azure CLI. For more information, see Install, set up, and use the CLI (v2).

Important

The CLI examples in this article assume that you are using the Bash (or compatible) shell. For example, from a Linux system or Windows Subsystem for Linux.

- An Azure Machine Learning workspace. If you don't have one, use the steps in the Install, set up, and use the CLI (v2) to create one.

- Azure role-based access controls (Azure RBAC) are used to grant access to operations in Azure Machine Learning. To perform the steps in this article, your user account must be assigned the owner or contributor role for the Azure Machine Learning workspace, or a custom role allowing Microsoft.MachineLearningServices/workspaces/onlineEndpoints/*. For more information, see Manage access to an Azure Machine Learning workspace.

- (Optional) To deploy locally, you must install Docker Engine on your local computer. We highly recommend this option, so it's easier to debug issues.

Prepare your system

Set environment variables

If you haven't already set the defaults for the Azure CLI, save your default settings. To avoid passing in the values for your subscription, workspace, and resource group multiple times, run this code:

az account set --subscription <subscription id>

az configure --defaults workspace=<Azure Machine Learning workspace name> group=<resource group>

Clone the examples repository

To follow along with this article, first clone the examples repository (azureml-examples). Then, go to the repository's cli/ directory:

git clone --depth 1 https://github.com/Azure/azureml-examples

cd azureml-examples

cd cli

Tip

Use --depth 1 to clone only the latest commit to the repository. This reduces the time to complete the operation.

The commands in this tutorial are in the file deploy-safe-rollout-online-endpoints.sh in the cli directory, and the YAML configuration files are in the endpoints/online/managed/sample/ subdirectory.

Note

The YAML configuration files for Kubernetes online endpoints are in the endpoints/online/kubernetes/ subdirectory.

Define the endpoint and deployment

Online endpoints are used for online (real-time) inferencing. Online endpoints contain deployments that are ready to receive data from clients and send responses back in real time.

Define an endpoint

The following table lists key attributes to specify when you define an endpoint.

| Attribute | Description |

|---|---|

| Name | Required. Name of the endpoint. It must be unique in the Azure region. For more information on the naming rules, see endpoint limits. |

| Authentication mode | The authentication method for the endpoint. Choose between key-based authentication (key) and Azure Machine Learning token-based authentication (aml_token). A key doesn't expire, but a token does expire. For more information on authenticating, see Authenticate to an online endpoint. |

| Description | Description of the endpoint. |

| Tags | Dictionary of tags for the endpoint. |

| Traffic | Rules on how to route traffic across deployments. Represent the traffic as a dictionary of key-value pairs, where the key is the deployment name and the value is the percentage of traffic to that deployment. You can set the traffic only after the deployments under an endpoint have been created. You can also update the traffic for an online endpoint after the deployments have been created. For more information on how to use live traffic, see Allocate a small percentage of live traffic to the new deployment. |

| Mirror traffic | Percentage of live traffic to mirror to a deployment. For more information on how to use mirrored traffic, see Test the deployment with mirrored traffic. |

To see a full list of attributes that you can specify when you create an endpoint, see CLI (v2) online endpoint YAML schema or SDK (v2) ManagedOnlineEndpoint Class.

Define a deployment

A deployment is a set of resources required for hosting the model that does the actual inferencing. The following table describes key attributes to specify when you define a deployment.

| Attribute | Description |

|---|---|

| Name | Required. Name of the deployment. |

| Endpoint name | Required. Name of the endpoint to create the deployment under. |

| Model | The model to use for the deployment. This value can be either a reference to an existing versioned model in the workspace or an inline model specification. In the example, we have a scikit-learn model that does regression. |

| Code path | The path to the directory on the local development environment that contains all the Python source code for scoring the model. You can use nested directories and packages. |

| Scoring script | Python code that executes the model on a given input request. This value can be the relative path to the scoring file in the source code directory. The scoring script receives data submitted to a deployed web service and passes it to the model. The script then executes the model and returns its response to the client. The scoring script is specific to your model and must understand the data that the model expects as input and returns as output. In this example, we have a score.py file. This Python code must have an init() function and a run() function. The init() function will be called after the model is created or updated (you can use it to cache the model in memory, for example). The run() function is called at every invocation of the endpoint to do the actual scoring and prediction. |

| Environment | Required. The environment to host the model and code. This value can be either a reference to an existing versioned environment in the workspace or an inline environment specification. The environment can be a Docker image with Conda dependencies, a Dockerfile, or a registered environment. |

| Instance type | Required. The VM size to use for the deployment. For the list of supported sizes, see Managed online endpoints SKU list. |

| Instance count | Required. The number of instances to use for the deployment. Base the value on the workload you expect. For high availability, we recommend that you set the value to at least 3. We reserve an extra 20% for performing upgrades. For more information, see limits for online endpoints. |

To see a full list of attributes that you can specify when you create a deployment, see CLI (v2) managed online deployment YAML schema or SDK (v2) ManagedOnlineDeployment Class.

Create online endpoint

First set the endpoint's name and then configure it. In this article, you'll use the endpoints/online/managed/sample/endpoint.yml file to configure the endpoint. The following snippet shows the contents of the file:

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineEndpoint.schema.json

name: my-endpoint

auth_mode: key

The reference for the endpoint YAML format is described in the following table. To learn how to specify these attributes, see the online endpoint YAML reference. For information about limits related to managed online endpoints, see limits for online endpoints.

| Key | Description |

|---|---|

| $schema | (Optional) The YAML schema. To see all available options in the YAML file, you can view the schema in the preceding code snippet in a browser. |

| name | The name of the endpoint. |

| auth_mode | Use key for key-based authentication. Use aml_token for Azure Machine Learning token-based authentication. To get the most recent token, use the az ml online-endpoint get-credentials command. |

To create an online endpoint:

1. Set your endpoint name:

For Unix, run this command (replace YOUR_ENDPOINT_NAME with a unique name):

export ENDPOINT_NAME="<YOUR_ENDPOINT_NAME>"

Important

Endpoint names must be unique within an Azure region. For example, in the Azure westus2 region, there can be only one endpoint with the name my-endpoint.

2. Create the endpoint in the cloud:

Run the following code to use the endpoint.yml file to configure the endpoint:

az ml online-endpoint create --name $ENDPOINT_NAME -f endpoints/online/managed/sample/endpoint.yml
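Because endpoint names must be unique within a region, it can help to generate the name rather than type one by hand. The following is a minimal sketch of that idea for the first step above. The helper name make_endpoint_name and the endpt-sr prefix are illustrative assumptions, and a random suffix only lowers the chance of a collision; it doesn't guarantee uniqueness.

```shell
# Build a candidate endpoint name from a prefix plus a random hex suffix.
# The helper name and the default prefix are illustrative, not part of the CLI.
make_endpoint_name() {
  local prefix="${1:-endpt-sr}"
  # $RANDOM is a Bash built-in that yields 0..32767; two draws widen the range.
  printf '%s-%04x%04x\n' "$prefix" "$RANDOM" "$RANDOM"
}

export ENDPOINT_NAME="$(make_endpoint_name)"
echo "Using endpoint name: $ENDPOINT_NAME"
```

If the create command reports that the name is already taken, generating a new name and retrying is usually enough.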

Create the 'blue' deployment

In this article, you'll use the endpoints/online/managed/sample/blue-deployment.yml file to configure the key aspects of the deployment. The following snippet shows the contents of the file:

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json

name: blue

endpoint_name: my-endpoint

model:

  path: ../../model-1/model/

code_configuration:

  code: ../../model-1/onlinescoring/

  scoring_script: score.py

environment:

  conda_file: ../../model-1/environment/conda.yaml

  image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest

instance_type: Standard_DS3_v2

instance_count: 1

To create a deployment named blue for your endpoint, run the following command, which uses the blue-deployment.yml file to configure the deployment:

az ml online-deployment create --name blue --endpoint-name $ENDPOINT_NAME -f endpoints/online/managed/sample/blue-deployment.yml --all-traffic

Important

The --all-traffic flag in the az ml online-deployment create command allocates 100% of the endpoint traffic to the newly created blue deployment.

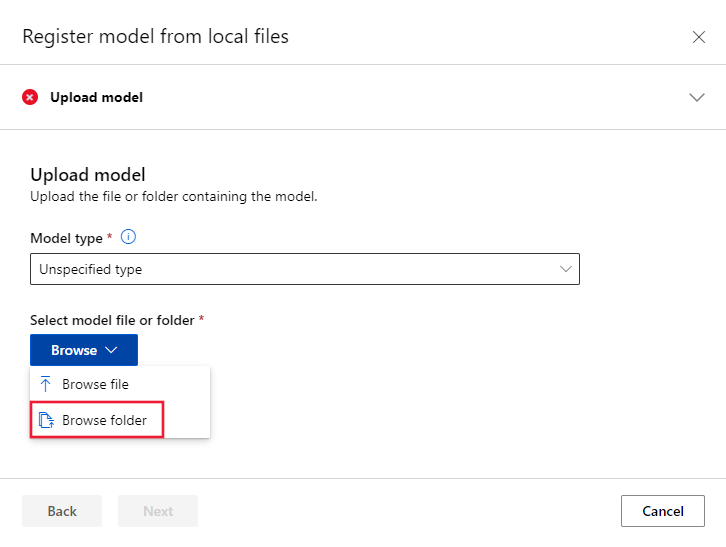

In the blue-deployment.yml file, we specify the path (where to upload files from) inline. The CLI automatically uploads the files and registers the model and environment. As a best practice for production, you should register the model and environment and specify the registered name and version separately in the YAML. Use the form model: azureml:my-model:1 or environment: azureml:my-env:1.

For registration, you can extract the YAML definitions of model and environment into separate YAML files and use the commands az ml model create and az ml environment create. To learn more about these commands, run az ml model create -h and az ml environment create -h.

For more information on registering your model as an asset, see Register your model as an asset in Machine Learning by using the CLI. For more information on creating an environment, see Manage Azure Machine Learning environments with the CLI & SDK (v2).
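To make the registered-asset form concrete, here is a hedged sketch that writes a variant of the blue deployment file with the model and environment given by name and version instead of inline. The output file name blue-deployment-registered.yml is hypothetical. The sketch assumes that my-model:1 and my-env:1 are already registered in your workspace, and that the file ends up next to blue-deployment.yml so the relative code path still resolves.

```shell
# Write a deployment YAML that refers to registered assets instead of inline definitions.
# OUT_FILE is a hypothetical name; my-model:1 and my-env:1 are assumed to exist in the workspace.
OUT_FILE="${OUT_FILE:-blue-deployment-registered.yml}"

# The quoted 'EOF' keeps the shell from expanding $schema inside the here-document.
cat > "$OUT_FILE" <<'EOF'
$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json
name: blue
endpoint_name: my-endpoint
model: azureml:my-model:1
code_configuration:
  code: ../../model-1/onlinescoring/
  scoring_script: score.py
environment: azureml:my-env:1
instance_type: Standard_DS3_v2
instance_count: 1
EOF

echo "Wrote $OUT_FILE"
```

Pinning explicit versions this way means a later change to the model or environment files on disk doesn't silently alter what the deployment runs.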

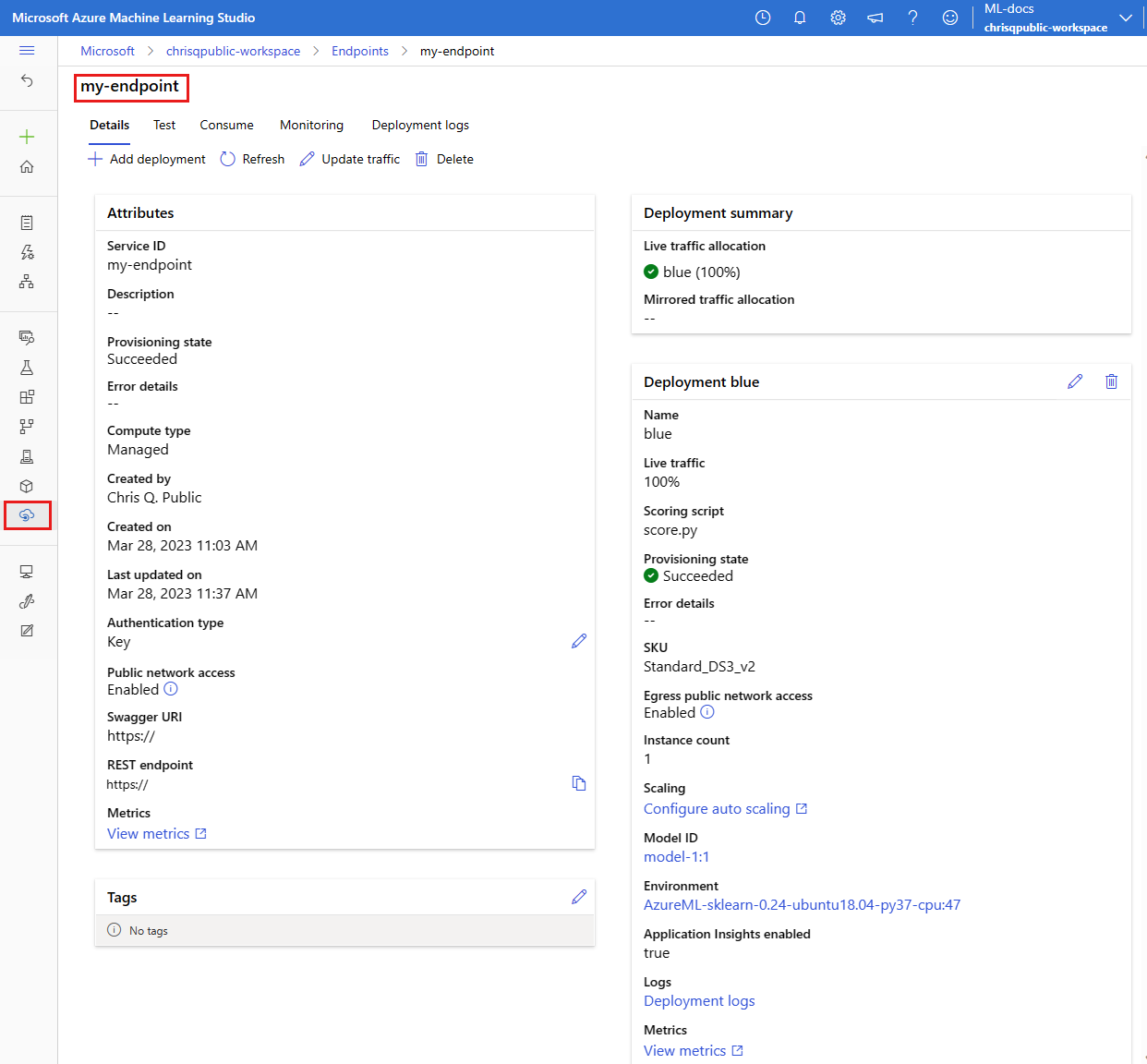

Confirm your existing deployment

One way to confirm your existing deployment is to invoke your endpoint so that it can score your model for a given input request. When you invoke your endpoint via the CLI or Python SDK, you can choose to specify the name of the deployment that will receive the incoming traffic.

Note

Unlike the CLI or Python SDK, Azure Machine Learning studio requires you to specify a deployment when you invoke an endpoint.

Invoke endpoint with deployment name

If you invoke the endpoint with the name of the deployment that will receive traffic, Azure Machine Learning will route the endpoint's traffic directly to the specified deployment and return its output. You can use the --deployment-name option for CLI v2, or deployment_name option for SDK v2 to specify the deployment.

Invoke endpoint without specifying deployment

If you invoke the endpoint without specifying the deployment that will receive traffic, Azure Machine Learning will route the endpoint's incoming traffic to the deployment(s) in the endpoint based on traffic control settings.

Traffic control settings allocate specified percentages of incoming traffic to each deployment in the endpoint. For example, if your traffic rules specify that a particular deployment in your endpoint will receive incoming traffic 40% of the time, Azure Machine Learning will route 40% of the endpoint's traffic to that deployment.

You can view the status of your existing endpoint and deployment by running:

az ml online-endpoint show --name $ENDPOINT_NAME

az ml online-deployment show --name blue --endpoint-name $ENDPOINT_NAME

You should see the endpoint identified by $ENDPOINT_NAME and a deployment called blue.
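Provisioning can take a while, so a script may want to wait until the deployment is ready before continuing. The following is a minimal polling sketch, not an official pattern. The helper wait_for_succeeded is hypothetical, and the provisioning_state field and its Succeeded and Failed values are assumptions about the show command's output that you should confirm against your own CLI version.

```shell
# Poll a command until it prints "Succeeded", stop early on "Failed",
# or give up after a fixed number of attempts.
# Usage: wait_for_succeeded <max_tries> <sleep_seconds> <command...>
wait_for_succeeded() {
  local max_tries="$1" delay="$2" state i
  shift 2
  for ((i = 1; i <= max_tries; i++)); do
    state="$("$@")"
    echo "attempt $i: state=$state"
    case "$state" in
      Succeeded) return 0 ;;
      Failed) return 1 ;;
    esac
    sleep "$delay"
  done
  return 2  # still not ready after max_tries attempts
}

# Assumed usage (the provisioning_state field name is an assumption):
# wait_for_succeeded 30 10 az ml online-deployment show --name blue \
#   --endpoint-name "$ENDPOINT_NAME" --query provisioning_state -o tsv
```

The distinct return codes (0 for ready, 1 for failed, 2 for timed out) let a calling script decide whether to retry or abort.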

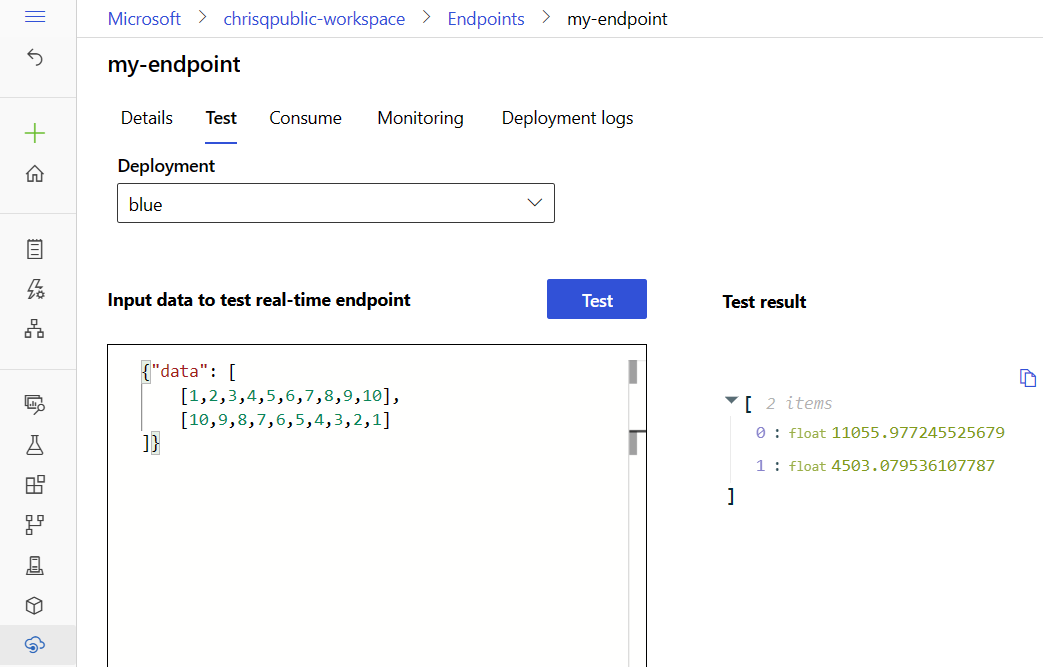

Test the endpoint with sample data

You can invoke the endpoint by using the invoke command. We'll send a sample request using a JSON file.

az ml online-endpoint invoke --name $ENDPOINT_NAME --request-file endpoints/online/model-1/sample-request.json

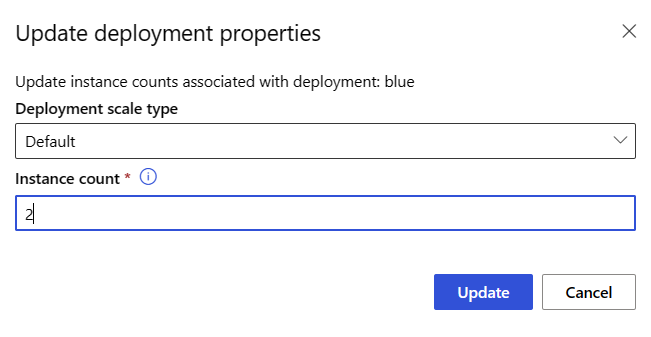

Scale your existing deployment to handle more traffic

In the deployment described in Deploy and score a machine learning model with an online endpoint, you set the instance_count to the value 1 in the deployment YAML file. You can scale out by using the update command:

az ml online-deployment update --name blue --endpoint-name $ENDPOINT_NAME --set instance_count=2

Note

In the preceding command, we use --set to override the deployment configuration. Alternatively, you can update the YAML file and pass it to the update command by using the --file option.

Deploy a new model, but send it no traffic yet

Create a new deployment named green:

az ml online-deployment create --name green --endpoint-name $ENDPOINT_NAME -f endpoints/online/managed/sample/green-deployment.yml

Since we haven't explicitly allocated any traffic to green, it has zero traffic allocated to it. You can verify that using the command:

az ml online-endpoint show -n $ENDPOINT_NAME --query traffic

Test the new deployment

Though green has 0% of traffic allocated, you can invoke it directly by specifying the --deployment-name option:

az ml online-endpoint invoke --name $ENDPOINT_NAME --deployment-name green --request-file endpoints/online/model-2/sample-request.json

If you want to use a REST client to invoke the deployment directly without going through traffic rules, set the following HTTP header: azureml-model-deployment: <deployment-name>. The below code snippet uses curl to invoke the deployment directly. The code snippet should work in Unix/WSL environments:

# get the scoring uri

SCORING_URI=$(az ml online-endpoint show -n $ENDPOINT_NAME -o tsv --query scoring_uri)

# get the endpoint key (assumes key-based authentication, as set in endpoint.yml)

ENDPOINT_KEY=$(az ml online-endpoint get-credentials -n $ENDPOINT_NAME -o tsv --query primaryKey)

# use curl to invoke the endpoint

curl --request POST "$SCORING_URI" --header "Authorization: Bearer $ENDPOINT_KEY" --header 'Content-Type: application/json' --header "azureml-model-deployment: green" --data @endpoints/online/model-2/sample-request.json

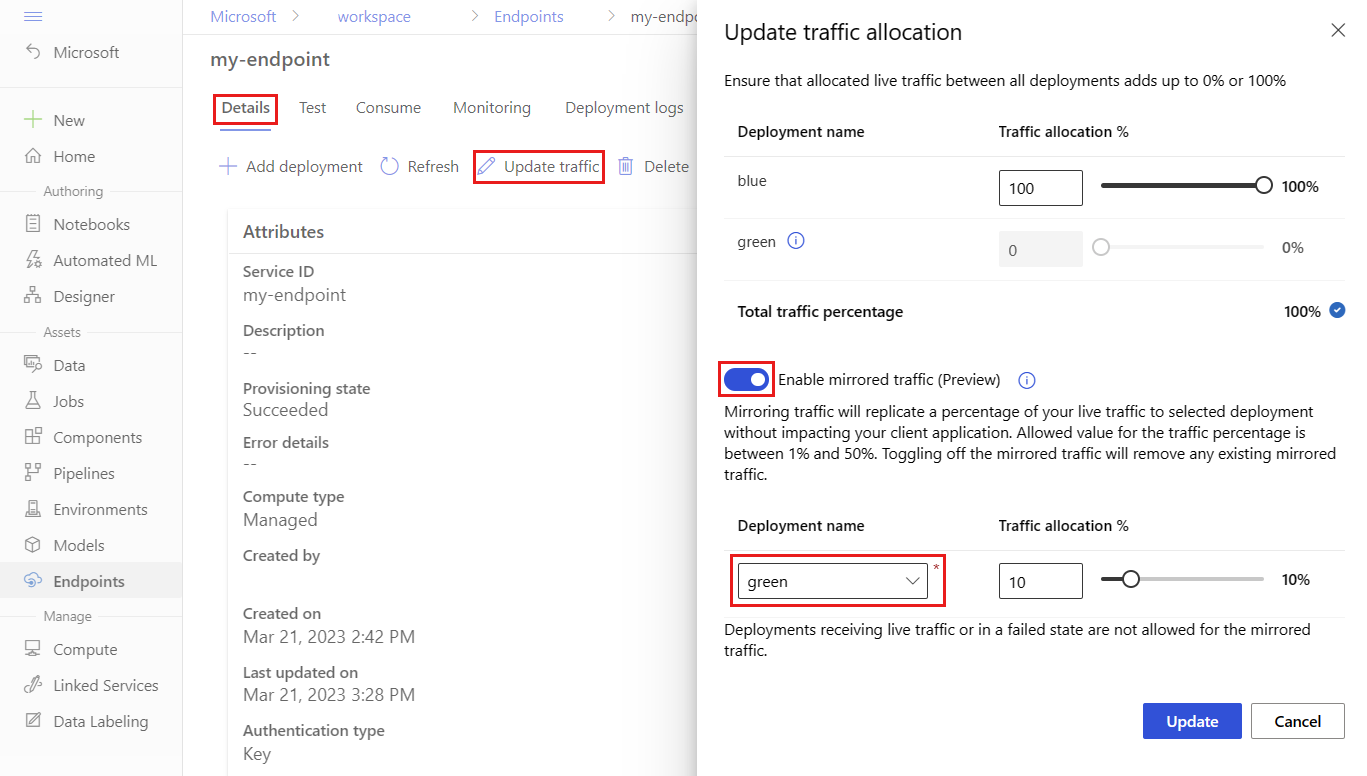

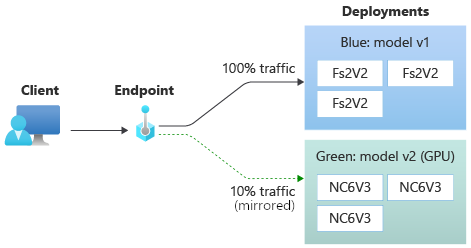

Test the deployment with mirrored traffic

Once you've tested your green deployment, you can mirror (or copy) a percentage of the live traffic to it. Traffic mirroring (also called shadowing) doesn't change the results returned to clients—requests still flow 100% to the blue deployment. The mirrored percentage of the traffic is copied and submitted to the green deployment so that you can gather metrics and logging without impacting your clients. Mirroring is useful when you want to validate a new deployment without impacting clients. For example, you can use mirroring to check if latency is within acceptable bounds or to check that there are no HTTP errors. Testing the new deployment with traffic mirroring/shadowing is also known as shadow testing. The deployment receiving the mirrored traffic (in this case, the green deployment) can also be called the shadow deployment.

Mirroring has the following limitations:

- Mirroring is supported for the CLI (v2) (version 2.4.0 or above) and Python SDK (v2) (version 1.0.0 or above). If you use an older version of CLI/SDK to update an endpoint, you'll lose the mirror traffic setting.

- Mirroring isn't currently supported for Kubernetes online endpoints.

- You can mirror traffic to only one deployment in an endpoint.

- The maximum percentage of traffic you can mirror is 50%. This limit is to reduce the effect on your endpoint bandwidth quota (default 5 MBPS)—your endpoint bandwidth is throttled if you exceed the allocated quota. For information on monitoring bandwidth throttling, see Monitor managed online endpoints.

Also note the following behaviors:

- A deployment can be configured to receive only live traffic or mirrored traffic, not both.

- When you invoke an endpoint, you can specify the name of any of its deployments — even a shadow deployment — to return the prediction.

- When you invoke an endpoint with the name of the deployment that will receive incoming traffic, Azure Machine Learning won't mirror traffic to the shadow deployment. Azure Machine Learning mirrors traffic to the shadow deployment from traffic sent to the endpoint when you don't specify a deployment.

Now, let's set the green deployment to receive 10% of mirrored traffic. Clients will still receive predictions from the blue deployment only.

The following command mirrors 10% of the traffic to the green deployment:

az ml online-endpoint update --name $ENDPOINT_NAME --mirror-traffic "green=10"
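Since mirroring accepts only one deployment and at most 50%, a script can check the value before calling the update command. The sketch below is one way to do that. The function validate_mirror_traffic is hypothetical, and the set of characters it allows in a deployment name is an assumption rather than the service's actual naming rule.

```shell
# Check a --mirror-traffic value against the limits listed in this section:
# exactly one <deployment>=<percent> pair, with a percentage from 0 to 50.
# The allowed name characters below are an assumption, not the official rule.
validate_mirror_traffic() {
  local spec="$1"
  if [[ ! "$spec" =~ ^[A-Za-z0-9_-]+=([0-9]+)$ ]]; then
    echo "invalid: expected a single <deployment>=<percent> pair" >&2
    return 1
  fi
  local percent="${BASH_REMATCH[1]}"
  # 10# forces base 10 so a value such as 08 isn't read as octal.
  if (( 10#$percent > 50 )); then
    echo "invalid: mirror percentage $percent exceeds the 50% maximum" >&2
    return 1
  fi
  echo "ok: $spec"
}

validate_mirror_traffic "green=10"
```

A value such as "green=60" or "blue=10 green=10" makes the function return a non-zero status, which a script can use to skip the update.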

You can test mirror traffic by invoking the endpoint several times without specifying a deployment to receive the incoming traffic:

for i in {1..20} ; do

az ml online-endpoint invoke --name $ENDPOINT_NAME --request-file endpoints/online/model-1/sample-request.json

done
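When you send a batch of requests like this, it can be useful to know how many of them failed. The following sketch wraps the loop in a hypothetical helper, repeat_and_count, that tallies results by exit status. It assumes the invoke command exits with a non-zero status when a request fails, which is worth confirming in your environment.

```shell
# Run a command a fixed number of times and count successes and failures by exit status.
# Usage: repeat_and_count <times> <command...>
repeat_and_count() {
  local times="$1" ok=0 failed=0 i
  shift
  for ((i = 1; i <= times; i++)); do
    # Output is discarded here; only the exit status is counted.
    if "$@" > /dev/null 2>&1; then
      ok=$(( ok + 1 ))
    else
      failed=$(( failed + 1 ))
    fi
  done
  echo "succeeded=$ok failed=$failed"
}

# Assumed usage with the command from this article:
# repeat_and_count 20 az ml online-endpoint invoke --name "$ENDPOINT_NAME" \
#   --request-file endpoints/online/model-1/sample-request.json
```

The count covers only the responses returned to the caller, which come from the blue deployment; errors in the mirrored green deployment still have to be checked in its logs.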

You can confirm that the specified percentage of the traffic was sent to the green deployment by checking the logs from that deployment:

az ml online-deployment get-logs --name green --endpoint-name $ENDPOINT_NAME

After testing, you can set the mirror traffic to zero to disable mirroring:

az ml online-endpoint update --name $ENDPOINT_NAME --mirror-traffic "green=0"

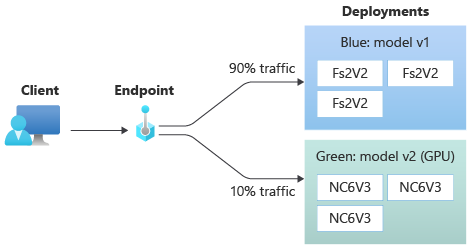

Allocate a small percentage of live traffic to the new deployment

Once you've tested your green deployment, allocate a small percentage of traffic to it:

az ml online-endpoint update --name $ENDPOINT_NAME --traffic "blue=90 green=10"

Tip

The total traffic percentage must sum to either 0% (to disable traffic) or 100% (to enable traffic).
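The sum rule is easy to check before you call the update command. Here is a small sketch of such a check. The function validate_traffic is hypothetical and only parses the space-separated name=percent form used in this article.

```shell
# Sum the percentages in a --traffic value such as "blue=90 green=10"
# and accept only totals of 0 or 100.
validate_traffic() {
  local spec="$1" pair total=0
  # $spec is intentionally unquoted so it splits on spaces into name=percent pairs.
  for pair in $spec; do
    if [[ ! "$pair" =~ ^[^=]+=([0-9]+)$ ]]; then
      echo "invalid pair: $pair" >&2
      return 1
    fi
    total=$(( total + 10#${BASH_REMATCH[1]} ))
  done
  if (( total == 0 || total == 100 )); then
    echo "ok: total=$total"
  else
    echo "invalid: total=$total (must be 0 or 100)" >&2
    return 1
  fi
}

validate_traffic "blue=90 green=10"   # prints "ok: total=100"
```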

Now, your green deployment receives 10% of all live traffic. Clients will receive predictions from both the blue and green deployments.

Send all traffic to your new deployment

Once you're fully satisfied with your green deployment, switch all traffic to it.

az ml online-endpoint update --name $ENDPOINT_NAME --traffic "blue=0 green=100"
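If moving straight from 10% to 100% feels too abrupt, you could shift traffic in several stages and check your metrics between them. The sketch below only prints the --traffic value for each stage. The helper traffic_plan and the stage percentages are illustrative assumptions, not a recommended schedule.

```shell
# Print the --traffic value for each stage of a gradual blue-to-green shift.
# Each argument is the percentage of live traffic that green should receive.
traffic_plan() {
  local green
  for green in "$@"; do
    echo "blue=$(( 100 - green )) green=$green"
  done
}

traffic_plan 10 25 50 100
# To apply a stage, pass the printed value to the command used in this article:
# az ml online-endpoint update --name $ENDPOINT_NAME --traffic "<stage value>"
```

Because blue is always computed as 100 minus green, every stage sums to 100%.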

Remove the old deployment

Use the following command to delete an individual deployment from a managed online endpoint. Deleting an individual deployment doesn't affect the other deployments in the managed online endpoint:

az ml online-deployment delete --name blue --endpoint-name $ENDPOINT_NAME --yes --no-wait
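Before you delete a deployment in a script, a cautious step is to confirm that it no longer receives live traffic. The following guard is a sketch under assumptions: the helper can_delete_deployment is hypothetical, and the traffic.blue query in the usage comment assumes the endpoint's traffic setting is returned as a map keyed by deployment name.

```shell
# Decide whether a deployment can be removed, given its current live-traffic percentage.
# An empty value is treated as 0 (the deployment has no traffic entry).
can_delete_deployment() {
  local name="$1" share="${2:-0}"
  if (( share == 0 )); then
    echo "$name has no live traffic; safe to delete"
  else
    echo "$name still receives ${share}% of live traffic; shift it first" >&2
    return 1
  fi
}

# Assumed usage (the traffic.blue query path is an assumption):
# share="$(az ml online-endpoint show -n "$ENDPOINT_NAME" --query "traffic.blue" -o tsv)"
# can_delete_deployment blue "$share" && \
#   az ml online-deployment delete --name blue --endpoint-name "$ENDPOINT_NAME" --yes --no-wait
```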

Delete the endpoint and deployment

If you aren't going to use the endpoint and deployment, you should delete them. By deleting the endpoint, you'll also delete all its underlying deployments.

az ml online-endpoint delete --name $ENDPOINT_NAME --yes --no-wait

Related content

- Explore online endpoint samples

- Deploy models with REST

- Use network isolation with managed online endpoints

- Access Azure resources with an online endpoint and managed identity

- Monitor managed online endpoints

- Manage and increase quotas for resources with Azure Machine Learning

- View costs for an Azure Machine Learning managed online endpoint

- Managed online endpoints SKU list

- Troubleshooting online endpoints deployment and scoring

- Online endpoint YAML reference
