How to operationalize a training pipeline with batch endpoints

APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

In this article, you'll learn how to operationalize a training pipeline under a batch endpoint. The pipeline uses multiple components (or steps) that include model training, data preprocessing, and model evaluation.

You'll learn to:

- Create and test a training pipeline

- Deploy the pipeline to a batch endpoint

- Modify the pipeline and create a new deployment in the same endpoint

- Test the new deployment and set it as the default deployment

About this example

This example deploys a training pipeline that takes labeled training data as input and produces a predictive model, along with the evaluation results and the transformations applied during preprocessing. The pipeline uses tabular data from the UCI Heart Disease Data Set to train an XGBoost model. A data preprocessing component prepares the data before it's sent to the training component, which fits and evaluates the model.

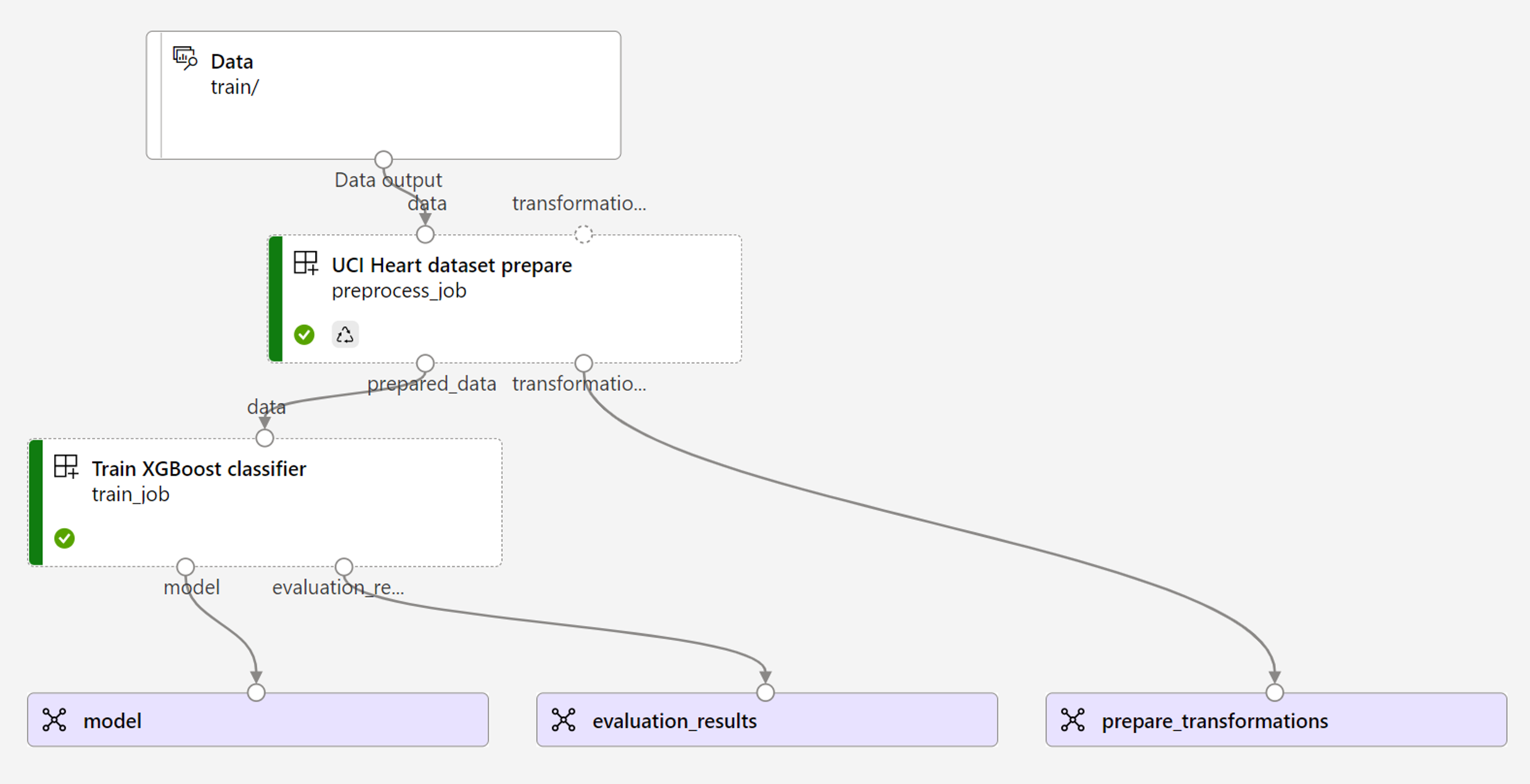

A visualization of the pipeline is as follows:

The example in this article is based on code samples contained in the azureml-examples repository. To run the commands locally without having to copy/paste YAML and other files, first clone the repo and then change directories to the folder:

git clone https://github.com/Azure/azureml-examples --depth 1

cd azureml-examples/cli

The files for this example are in:

cd endpoints/batch/deploy-pipelines/training-with-components

Follow along in Jupyter notebooks

You can follow along with the Python SDK version of this example by opening the sdk-deploy-and-test.ipynb notebook in the cloned repository.

Prerequisites

Before following the steps in this article, make sure you have the following prerequisites:

An Azure subscription. If you don't have an Azure subscription, create a free account before you begin. Try the free or paid version of Azure Machine Learning.

An Azure Machine Learning workspace. If you don't have one, use the steps in the Manage Azure Machine Learning workspaces article to create one.

Ensure that you have the following permissions in the workspace:

- Create or manage batch endpoints and deployments: Use an Owner, Contributor, or Custom role that allows Microsoft.MachineLearningServices/workspaces/batchEndpoints/*.

- Create ARM deployments in the workspace resource group: Use an Owner, Contributor, or Custom role that allows Microsoft.Resources/deployments/write in the resource group where the workspace is deployed.

You need to install the following software to work with Azure Machine Learning:

- The Azure CLI and the ml extension for Azure Machine Learning:

az extension add -n ml

Note

Pipeline component deployments for batch endpoints were introduced in version 2.7 of the ml extension for Azure CLI. Use az extension update --name ml to get the latest version.

Connect to your workspace

The workspace is the top-level resource for Azure Machine Learning, providing a centralized place to work with all the artifacts you create when you use Azure Machine Learning. In this section, we'll connect to the workspace in which you'll perform deployment tasks.

Pass in the values for your subscription ID, workspace, location, and resource group in the following code:

az account set --subscription <subscription>

az configure --defaults workspace=<workspace> group=<resource-group> location=<location>

Create the training pipeline component

In this section, we'll create all the assets required for our training pipeline. We'll begin by creating an environment that includes necessary libraries to train the model. We'll then create a compute cluster on which the batch deployment will run, and finally, we'll register the input data as a data asset.

Create the environment

The components in this example will use an environment with the XGBoost and scikit-learn libraries. The environment/conda.yml file contains the environment's configuration:

environment/conda.yml

channels:
  - conda-forge
dependencies:
  - python=3.8.5
  - pip
  - pip:
    - mlflow
    - azureml-mlflow
    - datasets
    - jobtools
    - cloudpickle==1.6.0
    - dask==2023.2.0
    - scikit-learn==1.1.2
    - xgboost==1.3.3
    - pandas==1.4
name: mlflow-env

Create the environment as follows:

Define the environment:

environment/xgboost-sklearn-py38.yml

$schema: https://azuremlschemas.azureedge.net/latest/environment.schema.json
name: xgboost-sklearn-py38
image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest
conda_file: conda.yml
description: An environment for models built with XGBoost and Scikit-learn.

Create the environment:

az ml environment create -f environment/xgboost-sklearn-py38.yml

Create a compute cluster

Batch endpoints and deployments run on compute clusters. They can run on any Azure Machine Learning compute cluster that already exists in the workspace, so multiple batch deployments can share the same compute infrastructure. In this example, we'll use an Azure Machine Learning compute cluster called batch-cluster. Let's verify that the compute exists in the workspace, and create it if it doesn't:

az ml compute create -n batch-cluster --type amlcompute --min-instances 0 --max-instances 5

Register the training data as a data asset

Our training data is represented in CSV files. To mimic a more production-level workload, we're going to register the training data in the heart.csv file as a data asset in the workspace. This data asset will later be indicated as an input to the endpoint.

az ml data create --name heart-classifier-train --type uri_folder --path data/train

Create the pipeline

The pipeline we want to operationalize takes one input, the training data, and produces three outputs: the trained model, the evaluation results, and the data transformations applied as preprocessing. The pipeline consists of two components:

- preprocess_job: This step reads the input data and returns the prepared data and the applied transformations. The step receives three inputs:

  - data: a folder containing the input data to transform and score.
  - transformations: (optional) Path to the transformations that will be applied, if available. If the path isn't provided, then the transformations will be learned from the input data. Since the transformations input is optional, the preprocess_job component can be used during both training and scoring.
  - categorical_encoding: the encoding strategy for the categorical features (ordinal or onehot).

- train_job: This step trains an XGBoost model based on the prepared data and returns the evaluation results and the trained model. The step receives three inputs:

  - data: the preprocessed data.
  - target_column: the column that we want to predict.
  - eval_size: the proportion of the input data used for evaluation.
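The following minimal stand-in makes the role of the optional transformations input concrete. It isn't the code of the prepare.yml component. The prepare function, the "sorted categories per column" transformation format, the made-up rows, and the choice of 0 for categories unseen during training are all assumptions. The sketch shows how one function can serve both paths. It learns transformations when none are passed (training) and reuses them when they are (scoring).

```python
from typing import Dict, List, Optional, Tuple

Row = Dict[str, str]
# Assumed transformation format: column name -> sorted list of categories seen in training.
Transformations = Dict[str, List[str]]


def prepare(rows: List[Row], categorical_columns: List[str],
            transformations: Optional[Transformations] = None,
            ) -> Tuple[List[Dict[str, object]], Transformations]:
    """Hypothetical stand-in for preprocess_job: learn transformations only when none are given."""
    if transformations is None:
        # Training path: learn the category vocabulary from the input data itself.
        transformations = {
            col: sorted({row[col] for row in rows}) for col in categorical_columns
        }
    prepared = []
    for row in rows:
        encoded: Dict[str, object] = dict(row)
        for col in categorical_columns:
            vocab = transformations[col]
            # Ordinal code in [1, n]; a category unseen during training maps to 0 (assumption).
            encoded[col] = vocab.index(row[col]) + 1 if row[col] in vocab else 0
        prepared.append(encoded)
    return prepared, transformations


# Made-up rows for illustration only.
train_rows = [{"thal": "normal"}, {"thal": "fixed"}, {"thal": "reversible"}]
prepared, learned = prepare(train_rows, ["thal"])  # transformations are learned
scored, _ = prepare([{"thal": "fixed"}, {"thal": "unknown"}], ["thal"], learned)  # reused
print(learned)  # {'thal': ['fixed', 'normal', 'reversible']}
print(scored)   # [{'thal': 1}, {'thal': 0}]
```

Passing the saved transformations back in at scoring time keeps the encoding consistent with what the model saw during training.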

The pipeline configuration is defined in the deployment-ordinal/pipeline.yml file:

deployment-ordinal/pipeline.yml

$schema: https://azuremlschemas.azureedge.net/latest/pipelineComponent.schema.json
type: pipeline

name: uci-heart-train-pipeline
display_name: uci-heart-train
description: This pipeline demonstrates how to train a machine learning classifier over the UCI heart dataset.

inputs:
  input_data:
    type: uri_folder

outputs:
  model:
    type: mlflow_model
    mode: upload
  evaluation_results:
    type: uri_folder
    mode: upload
  prepare_transformations:
    type: uri_folder
    mode: upload

jobs:
  preprocess_job:
    type: command
    component: ../components/prepare/prepare.yml
    inputs:
      data: ${{parent.inputs.input_data}}
      categorical_encoding: ordinal
    outputs:
      prepared_data:
      transformations_output: ${{parent.outputs.prepare_transformations}}

  train_job:
    type: command
    component: ../components/train_xgb/train_xgb.yml
    inputs:
      data: ${{parent.jobs.preprocess_job.outputs.prepared_data}}
      target_column: target
      register_best_model: false
      eval_size: 0.3
    outputs:
      model:
        mode: upload
        type: mlflow_model
        path: ${{parent.outputs.model}}
      evaluation_results:
        mode: upload
        type: uri_folder
        path: ${{parent.outputs.evaluation_results}}
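The ${{parent...}} expressions in pipeline.yml wire the steps together. The sketch below extracts that wiring as (source, destination) pairs so the data flow can be read at a glance. The JOBS dictionary is a hand-written, simplified mirror of the YAML and is not parsed from the file. It keeps only bound ports and flattens the nested path: keys under train_job's outputs. The data_flow helper is hypothetical.

```python
import re
from typing import Dict, List, Tuple

# Matches a binding expression such as ${{parent.inputs.input_data}} and captures
# the part after "parent.".
BINDING = re.compile(r"\$\{\{parent\.(.+?)\}\}")

# Simplified, hand-written mirror of the jobs section of deployment-ordinal/pipeline.yml.
JOBS: Dict[str, Dict[str, Dict[str, str]]] = {
    "preprocess_job": {
        "inputs": {"data": "${{parent.inputs.input_data}}"},
        "outputs": {
            "transformations_output": "${{parent.outputs.prepare_transformations}}",
        },
    },
    "train_job": {
        "inputs": {"data": "${{parent.jobs.preprocess_job.outputs.prepared_data}}"},
        "outputs": {
            "model": "${{parent.outputs.model}}",
            "evaluation_results": "${{parent.outputs.evaluation_results}}",
        },
    },
}


def data_flow(jobs) -> List[Tuple[str, str]]:
    """Return (source, destination) pairs implied by the binding expressions."""
    edges = []
    for job_name, job in jobs.items():
        # An input binding pulls data from the referenced location into this step.
        for port, value in job.get("inputs", {}).items():
            match = BINDING.fullmatch(value)
            if match:
                edges.append((match.group(1), f"jobs.{job_name}.inputs.{port}"))
        # An output binding publishes this step's output under the referenced name.
        for port, value in job.get("outputs", {}).items():
            match = BINDING.fullmatch(value)
            if match:
                edges.append((f"jobs.{job_name}.outputs.{port}", match.group(1)))
    return edges


for source, destination in data_flow(JOBS):
    print(f"{source} -> {destination}")
```

The first printed line is inputs.input_data -> jobs.preprocess_job.inputs.data. Note that prepared_data only ever flows into train_job. Because it's never bound to a parent output, it stays internal to the pipeline.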

Note

In the pipeline.yml file, the transformations input is missing from the preprocess_job; therefore, the script will learn the transformation parameters from the input data.

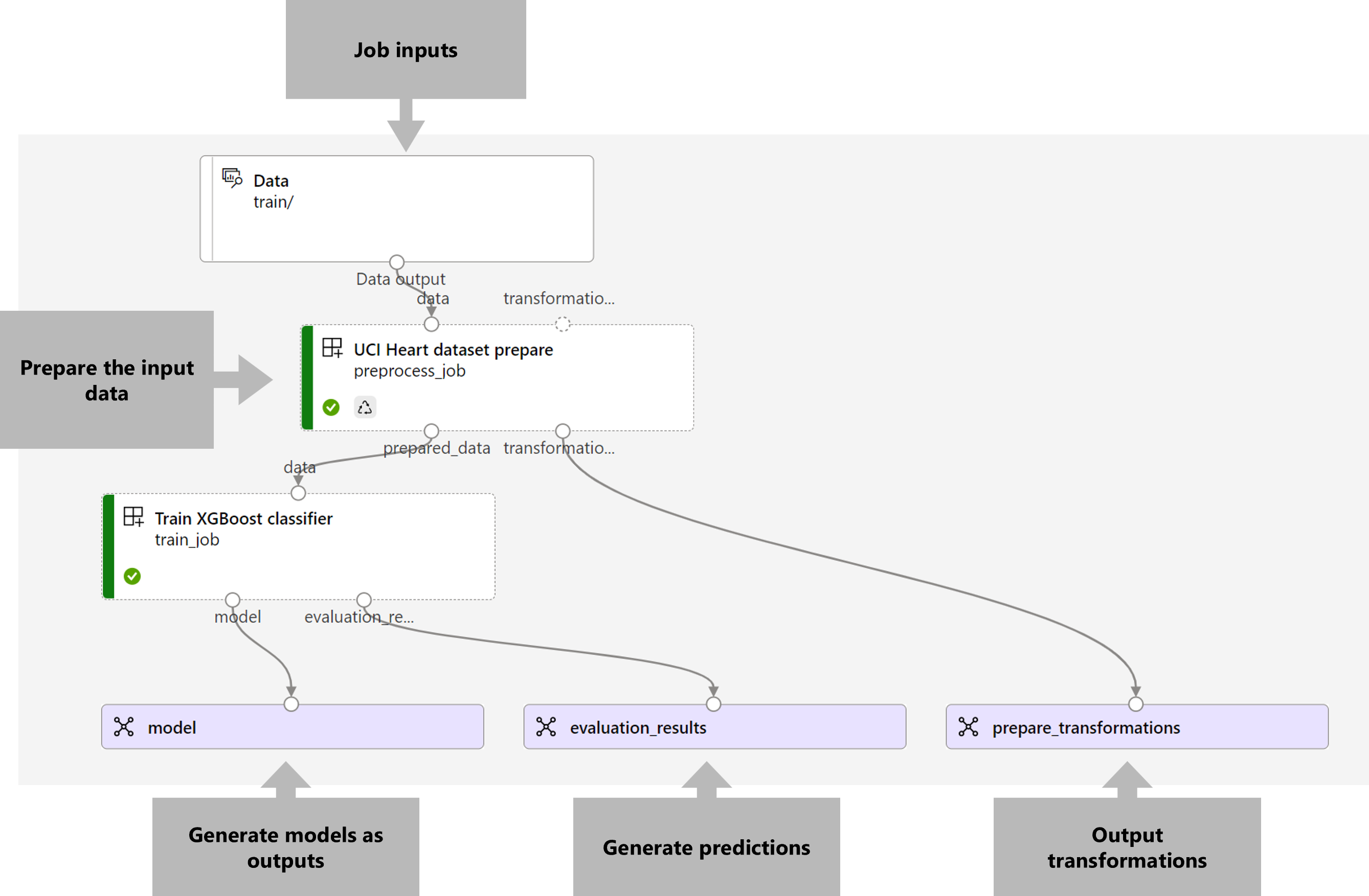

A visualization of the pipeline is as follows:

Test the pipeline

Let's test the pipeline with some sample data. To do that, we'll create a job using the pipeline and the batch-cluster compute cluster created previously.

The following pipeline-job.yml file contains the configuration for the pipeline job:

deployment-ordinal/pipeline-job.yml

$schema: https://azuremlschemas.azureedge.net/latest/pipelineJob.schema.json
type: pipeline

experiment_name: uci-heart-train-pipeline
display_name: uci-heart-train-job
description: This pipeline demonstrates how to train a machine learning classifier over the UCI heart dataset.

compute: batch-cluster
component: pipeline.yml

inputs:
  input_data:
    type: uri_folder

outputs:
  model:
    type: mlflow_model
    mode: upload
  evaluation_results:
    type: uri_folder
    mode: upload
  prepare_transformations:
    mode: upload

Create the test job:

az ml job create -f deployment-ordinal/pipeline-job.yml --set inputs.input_data.path=azureml:heart-classifier-train@latest
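The --set argument overrides one value of the YAML file at submission time. Because of that, pipeline-job.yml doesn't have to hard-code which data asset to train on. The sketch below models the idea on a plain dictionary. apply_set is a hypothetical helper, and values are treated as plain strings. It isn't how the Azure CLI implements --set, which handles more cases.

```python
import copy
from typing import Any, Dict


def apply_set(config: Dict[str, Any], assignment: str) -> Dict[str, Any]:
    """Return a copy of config with a dotted-path override such as 'a.b.c=value' applied."""
    path, _, value = assignment.partition("=")
    updated = copy.deepcopy(config)  # leave the caller's dictionary untouched
    node = updated
    *parents, leaf = path.split(".")
    for key in parents:
        # Walk (or create) the nested mappings along the dotted path.
        node = node.setdefault(key, {})
    node[leaf] = value
    return updated


# Hand-written mirror of the inputs section of deployment-ordinal/pipeline-job.yml.
job = {"inputs": {"input_data": {"type": "uri_folder"}}}
patched = apply_set(job, "inputs.input_data.path=azureml:heart-classifier-train@latest")
print(patched["inputs"]["input_data"])
# {'type': 'uri_folder', 'path': 'azureml:heart-classifier-train@latest'}
```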

Create a batch endpoint

Provide a name for the endpoint. A batch endpoint's name needs to be unique in each region because the name is used to construct the invocation URI. To ensure uniqueness, append any trailing characters to the name specified in the following code.

ENDPOINT_NAME="uci-classifier-train"
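You can also generate the trailing characters instead of choosing them by hand, as in this sketch. The helper name and the five-character lowercase suffix are assumptions. The naming rule it checks is also an assumption to verify against the current Azure Machine Learning documentation: start with a letter, then letters, digits, or hyphens, 3 to 32 characters in total.

```python
import re
import secrets
import string

# Assumed naming rule (verify against current docs): starts with a letter, then
# letters, digits, or hyphens, 3 to 32 characters in total.
NAME_RULE = re.compile(r"[A-Za-z][A-Za-z0-9-]{2,31}")


def unique_endpoint_name(base: str = "uci-classifier-train", suffix_len: int = 5) -> str:
    """Append a random lowercase alphanumeric suffix to base, separated by a hyphen."""
    alphabet = string.ascii_lowercase + string.digits
    suffix = "".join(secrets.choice(alphabet) for _ in range(suffix_len))
    name = f"{base}-{suffix}"
    if not NAME_RULE.fullmatch(name):
        raise ValueError(f"{name!r} doesn't satisfy the assumed endpoint naming rule")
    return name


print(unique_endpoint_name())  # uci-classifier-train- followed by 5 random characters
```

Assign the printed value to ENDPOINT_NAME before you run the commands that follow.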

Configure the endpoint:

The endpoint.yml file contains the endpoint's configuration.

endpoint.yml

$schema: https://azuremlschemas.azureedge.net/latest/batchEndpoint.schema.json
name: uci-classifier-train
description: An endpoint to perform training of the Heart Disease Data Set prediction task.
auth_mode: aad_token

Create the endpoint:

az ml batch-endpoint create --name $ENDPOINT_NAME -f endpoint.yml

Query the endpoint URI:

az ml batch-endpoint show --name $ENDPOINT_NAME --query scoring_uri -o tsv

Deploy the pipeline component

To deploy the pipeline component, we have to create a batch deployment. A deployment is a set of resources required for hosting the asset that does the actual work.

Configure the deployment:

The deployment-ordinal/deployment.yml file contains the deployment's configuration. You can check the full batch endpoint YAML schema for extra properties.

deployment-ordinal/deployment.yml

$schema: https://azuremlschemas.azureedge.net/latest/pipelineComponentBatchDeployment.schema.json
name: uci-classifier-train-xgb
description: A sample deployment that trains an XGBoost model for the UCI dataset.
endpoint_name: uci-classifier-train
type: pipeline
component: pipeline.yml
settings:
  continue_on_step_failure: false
  default_compute: batch-cluster

Create the deployment:

Run the following code to create a batch deployment under the batch endpoint and set it as the default deployment.

az ml batch-deployment create --endpoint-name $ENDPOINT_NAME -f deployment-ordinal/deployment.yml --set-default

Tip

Notice the use of the --set-default flag to indicate that this new deployment is now the default.

Your deployment is ready for use.

Test the deployment

Once the deployment is created, it's ready to receive jobs. Follow these steps to test it:

Our deployment requires that we indicate one data input.

The inputs.yml file contains the definition for the input data asset:

inputs.yml

inputs:
  input_data:
    type: uri_folder
    path: azureml:heart-classifier-train@latest

Tip

To learn more about how to indicate inputs, see Create jobs and input data for batch endpoints.

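You can invoke the default deployment as follows:

JOB_NAME=$(az ml batch-endpoint invoke -n $ENDPOINT_NAME -f inputs.yml --query name -o tsv)

You can monitor the progress of the job and stream the logs using:

az ml job show -n $JOB_NAME --web

az ml job stream -n $JOB_NAME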

It's worth mentioning that only the pipeline's inputs are published as inputs in the batch endpoint. For instance, categorical_encoding is an input of a step of the pipeline, but not an input in the pipeline itself. Use this fact to control which inputs you want to expose to your clients and which ones you want to hide.
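The following sketch separates the inputs that clients of the endpoint can set from the values fixed inside the deployment. It assumes a hand-written dictionary mirroring deployment-ordinal/pipeline.yml; a real script would load the YAML file instead. The split_inputs helper is hypothetical. A value that starts with ${{parent. is treated as wiring, and anything else as a literal that stays hidden from clients.

```python
from typing import Any, Dict, List, Tuple

# Hand-written mirror of the inputs in deployment-ordinal/pipeline.yml.
PIPELINE: Dict[str, Any] = {
    "inputs": {"input_data": {"type": "uri_folder"}},
    "jobs": {
        "preprocess_job": {
            "inputs": {
                "data": "${{parent.inputs.input_data}}",
                "categorical_encoding": "ordinal",
            }
        },
        "train_job": {
            "inputs": {
                "data": "${{parent.jobs.preprocess_job.outputs.prepared_data}}",
                "target_column": "target",
                "register_best_model": False,
                "eval_size": 0.3,
            }
        },
    },
}


def split_inputs(pipeline: Dict[str, Any]) -> Tuple[List[str], Dict[str, Any]]:
    """Separate pipeline-level inputs (exposed) from step values fixed in the deployment."""
    exposed = sorted(pipeline["inputs"])
    fixed = {}
    for job_name, job in pipeline["jobs"].items():
        for name, value in job["inputs"].items():
            # A binding to parent.* is wiring, not a value clients could override.
            is_binding = isinstance(value, str) and value.startswith("${{parent.")
            if not is_binding:
                fixed[f"{job_name}.{name}"] = value
    return exposed, fixed


exposed, fixed = split_inputs(PIPELINE)
print(exposed)  # ['input_data']
print(fixed)
```

Only input_data shows up as exposed. categorical_encoding lands among the fixed values, which is what lets each deployment choose its own encoding.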

Access job outputs

Once the job is completed, we can access some of its outputs. This pipeline produces the following outputs for its components:

- preprocess job: output is transformations_output
- train job: outputs are model and evaluation_results

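You can download the associated results by using the pipeline's output names. The preprocess job's transformations_output is published as the pipeline output prepare_transformations:

az ml job download --name $JOB_NAME --output-name prepare_transformations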

az ml job download --name $JOB_NAME --output-name model

az ml job download --name $JOB_NAME --output-name evaluation_results

Create a new deployment in the endpoint

Endpoints can host multiple deployments at once, while keeping only one deployment as the default. Therefore, you can iterate over your different models, deploy the different models to your endpoint and test them, and finally, switch the default deployment to the model deployment that works best for you.
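The routing rule behind multiple deployments can be pictured with a toy model. ToyBatchEndpoint is hypothetical and doesn't call Azure. It mirrors only the rule this article relies on: a job runs on the deployment you name, or on the endpoint's default when you don't name one.

```python
from typing import Optional, Set


class ToyBatchEndpoint:
    """Hypothetical model of routing on a batch endpoint: many deployments, one default."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.deployments: Set[str] = set()
        self.default: Optional[str] = None

    def create_deployment(self, deployment: str, set_default: bool = False) -> None:
        # Mirrors the intent of `az ml batch-deployment create ... --set-default`.
        self.deployments.add(deployment)
        if set_default:
            self.default = deployment

    def set_default(self, deployment: str) -> None:
        # Mirrors the intent of `--set defaults.deployment_name=...` on the endpoint.
        if deployment not in self.deployments:
            raise KeyError(f"unknown deployment {deployment!r}")
        self.default = deployment

    def route(self, deployment: Optional[str] = None) -> str:
        """Return the deployment a job would run on: the one named, else the default."""
        target = deployment if deployment is not None else self.default
        if target is None or target not in self.deployments:
            raise LookupError("no matching deployment to run the job")
        return target


endpoint = ToyBatchEndpoint("uci-classifier-train")
endpoint.create_deployment("uci-classifier-train-xgb", set_default=True)
endpoint.create_deployment("uci-classifier-train-onehot")
print(endpoint.route())                               # uci-classifier-train-xgb
print(endpoint.route("uci-classifier-train-onehot"))  # uci-classifier-train-onehot
endpoint.set_default("uci-classifier-train-onehot")
print(endpoint.route())                               # uci-classifier-train-onehot
```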

Let's change the way preprocessing is done in the pipeline to see if we get a model that performs better.

Change a parameter in the pipeline's preprocessing component

The preprocessing component has an input called categorical_encoding, which can have values ordinal or onehot. These values correspond to two different ways of encoding categorical features.

- ordinal: Encodes the feature values with numeric values (ordinal) from [1:n], where n is the number of categories in the feature. Ordinal encoding implies that there's a natural rank order among the feature categories.

- onehot: Encodes each category as its own binary (0 or 1) feature. It doesn't imply a natural rank-ordered relationship, but it introduces a dimensionality problem if the number of categories is large.
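The sketch below shows the trade-off on a toy column by encoding the same made-up values both ways. The helper names, the sample values, and the convention of numbering categories in sorted order are assumptions. The prepare component may order categories differently.

```python
from typing import List


def ordinal_encode(values: List[str]) -> List[int]:
    """Map each category to a single code in [1, n], using sorted category order."""
    categories = sorted(set(values))
    return [categories.index(v) + 1 for v in values]


def onehot_encode(values: List[str]) -> List[List[int]]:
    """Map each category to a 0/1 vector with one slot per category (n columns)."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


chest_pain = ["typical", "asymptomatic", "nonanginal", "typical"]
print(ordinal_encode(chest_pain))  # [3, 1, 2, 3]
print(onehot_encode(chest_pain))   # [[0, 0, 1], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
```

Ordinal encoding keeps one column but implies asymptomatic < nonanginal < typical, an order that may carry no meaning. One-hot encoding avoids that by spending one column per category, and that's where its dimensionality cost comes from.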

Previously, we used ordinal encoding. Let's now change the categorical encoding to onehot and see how the model performs.

Tip

Alternatively, we could have exposed the categorical_encoding input to clients as an input to the pipeline job itself. However, we chose to change the parameter value in the preprocessing step so that we can hide and control the parameter inside the deployment, and take advantage of having multiple deployments under the same endpoint.

Modify the pipeline. It looks as follows:

The pipeline configuration is defined in the deployment-onehot/pipeline.yml file:

deployment-onehot/pipeline.yml

$schema: https://azuremlschemas.azureedge.net/latest/pipelineComponent.schema.json
type: pipeline

name: uci-heart-train-pipeline
display_name: uci-heart-train
description: This pipeline demonstrates how to train a machine learning classifier over the UCI heart dataset.

inputs:
  input_data:
    type: uri_folder

outputs:
  model:
    type: mlflow_model
    mode: upload
  evaluation_results:
    type: uri_folder
    mode: upload
  prepare_transformations:
    type: uri_folder
    mode: upload

jobs:
  preprocess_job:
    type: command
    component: ../components/prepare/prepare.yml
    inputs:
      data: ${{parent.inputs.input_data}}
      categorical_encoding: onehot
    outputs:
      prepared_data:
      transformations_output: ${{parent.outputs.prepare_transformations}}

  train_job:
    type: command
    component: ../components/train_xgb/train_xgb.yml
    inputs:
      data: ${{parent.jobs.preprocess_job.outputs.prepared_data}}
      target_column: target
      eval_size: 0.3
    outputs:
      model:
        type: mlflow_model
        path: ${{parent.outputs.model}}
      evaluation_results:
        type: uri_folder
        path: ${{parent.outputs.evaluation_results}}

Configure the deployment:

The deployment-onehot/deployment.yml file contains the deployment's configuration. You can check the full batch endpoint YAML schema for extra properties.

deployment-onehot/deployment.yml

$schema: https://azuremlschemas.azureedge.net/latest/pipelineComponentBatchDeployment.schema.json
name: uci-classifier-train-onehot
description: A sample deployment that trains an XGBoost model for the UCI dataset using onehot encoding for variables.
endpoint_name: uci-classifier-train
type: pipeline
component: pipeline.yml
settings:
  continue_on_step_failure: false
  default_compute: batch-cluster

Create the deployment:

az ml batch-deployment create --endpoint-name $ENDPOINT_NAME -f deployment-onehot/deployment.yml

Your deployment is ready for use.

Test a nondefault deployment

Once the deployment is created, it's ready to receive jobs. We can test it in the same way we did before, but now we'll invoke a specific deployment:

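Invoke the deployment as follows, specifying the deployment parameter to trigger the specific deployment uci-classifier-train-onehot:

DEPLOYMENT_NAME="uci-classifier-train-onehot"

JOB_NAME=$(az ml batch-endpoint invoke -n $ENDPOINT_NAME -d $DEPLOYMENT_NAME -f inputs.yml --query name -o tsv)

You can monitor the progress of the job and stream the logs using:

az ml job show -n $JOB_NAME --web

az ml job stream -n $JOB_NAME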

Configure the new deployment as the default one

Once we're satisfied with the performance of the new deployment, we can set this new one as the default:

az ml batch-endpoint update --name $ENDPOINT_NAME --set defaults.deployment_name=$DEPLOYMENT_NAME

Delete the old deployment

If you don't need the old deployment anymore, you can delete it:

az ml batch-deployment delete --name uci-classifier-train-xgb --endpoint-name $ENDPOINT_NAME --yes

Clean up resources

Once you're done, delete the associated resources from the workspace:

Run the following code to delete the batch endpoint and all its underlying deployments. The --yes flag confirms the deletion.

az ml batch-endpoint delete -n $ENDPOINT_NAME --yes

(Optional) Delete the compute cluster, unless you plan to reuse it with later deployments:

az ml compute delete -n batch-cluster
