Get started with AzCopy

AzCopy is a command-line utility that you can use to copy blobs or files to or from a storage account. This article helps you download AzCopy, connect to your storage account, and then transfer data.

Note

AzCopy V10 is the currently supported version of AzCopy.

If you need to use a previous version of AzCopy, see the Use a previous version section of this article.

This video shows you how to download and run the AzCopy utility.

The steps in the video are also described in the following sections.

Download AzCopy

First, download the AzCopy V10 executable file to any directory on your computer. AzCopy V10 is just an executable file, so there's nothing to install.

- Windows 64-bit (zip)

- Windows 32-bit (zip)

- Linux x86-64 (tar)

- Linux ARM64 (tar)

- macOS (zip)

- macOS ARM64 Preview (zip)

These files are compressed as a zip file (Windows and Mac) or a tar file (Linux). To download and decompress the tar file on Linux, see the documentation for your Linux distribution.

For detailed information on AzCopy releases, see the AzCopy release page.

Note

If you want to copy data to and from your Azure Table storage service, then install AzCopy version 7.3.

Run AzCopy

Consider adding the directory that contains the AzCopy executable to your system path. That way, you can type azcopy from any directory on your system.

If you choose not to add the AzCopy directory to your path, you'll have to change to the directory that contains the AzCopy executable and type azcopy in the Windows Command Prompt, .\azcopy in Windows PowerShell, or ./azcopy in Linux and macOS shells.
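For Linux and macOS shells, the following is a minimal sketch of adding the directory to PATH for the current session. The function name add_to_path and the directory $HOME/azcopy are hypothetical; use the folder where you extracted the executable, and put the call in your shell profile (for example, ~/.bashrc) if you want it to persist across sessions.

```shell
# Hypothetical helper: append a directory to PATH for the current shell
# session, unless it's already listed. Works in POSIX sh, bash, and zsh.
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;                          # already on PATH: nothing to do
    *) PATH="$PATH:$1"; export PATH ;;    # append so existing tools aren't shadowed
  esac
}

# Hypothetical location of the extracted azcopy executable:
# add_to_path "$HOME/azcopy"
# azcopy -h
```

Appending rather than prepending means an existing command with the same name keeps priority, and the case check makes repeated calls harmless.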

As an owner of your Azure Storage account, you aren't automatically assigned permissions to access data. Before you can do anything meaningful with AzCopy, you need to decide how you'll provide authorization credentials to the storage service.

Authorize AzCopy

You can provide authorization credentials by using Microsoft Entra ID, or by using a Shared Access Signature (SAS) token.

Option 1: Use Microsoft Entra ID

By using Microsoft Entra ID, you can provide credentials once instead of having to append a SAS token to each command.
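A minimal sketch of that flow follows, assuming your identity already has a data-access role on the account (for example, Storage Blob Data Contributor). The function name upload_with_entra is hypothetical. It signs in with azcopy login and then runs azcopy copy against a destination URL that carries no SAS token.

```shell
# Hypothetical helper: sign in once with Microsoft Entra ID, then upload a
# local directory. The destination URL has no SAS token appended.
upload_with_entra() {
  src="$1"        # local directory to upload
  dest_url="$2"   # for example, https://account.blob.core.windows.net/mycontainer1
  azcopy login || return 1                    # interactive sign-in; stop if it fails
  azcopy copy "$src" "$dest_url" --recursive=true
}

# upload_with_entra ./local/path "https://account.blob.core.windows.net/mycontainer1"
```

Because the sign-in is reused, later azcopy commands in the same script don't need to call azcopy login again.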

Option 2: Use a SAS token

You can append a SAS token to each source or destination URL that you use in your AzCopy commands.

This example command recursively copies data from a local directory to a blob container. A fictitious SAS token is appended to the end of the container URL.

azcopy copy "C:\local\path" "https://account.blob.core.windows.net/mycontainer1/?sv=2018-03-28&ss=bjqt&srt=sco&sp=rwddgcup&se=2019-05-01T05:01:17Z&st=2019-04-30T21:01:17Z&spr=https&sig=MGCXiyEzbtttkr3ewJIh2AR8KrghSy1DGM9ovN734bQF4%3D" --recursive=true

To learn more about SAS tokens and how to obtain one, see Using shared access signatures (SAS).
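In a script, joining the URL and the token by hand is easy to get wrong. The following sketch uses a hypothetical function, with_sas, that accepts a token copied with or without its leading ? and uses & instead of ? when the URL already has a query string. Reading the token from an environment variable (SAS_TOKEN here, also hypothetical) keeps it out of the literal command text.

```shell
# Hypothetical helper: print a resource URL with a SAS token appended.
with_sas() {
  url="$1"
  sas="${2#\?}"                               # drop a leading '?' if present
  case "$url" in
    *\?*) printf '%s&%s\n' "$url" "$sas" ;;   # URL already has a query string
    *)    printf '%s?%s\n' "$url" "$sas" ;;
  esac
}

# azcopy copy ./local/path "$(with_sas "https://account.blob.core.windows.net/mycontainer1/" "$SAS_TOKEN")" --recursive=true
```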

Note

The Secure transfer required setting of a storage account determines whether the connection to a storage account is secured with Transport Layer Security (TLS). This setting is enabled by default.

Transfer data

After you've authorized your identity or obtained a SAS token, you can begin transferring data.

To find example commands, see any of these articles.

| Service | Article |
|---|---|
| Azure Blob Storage | Upload files to Azure Blob Storage |
| Azure Blob Storage | Download blobs from Azure Blob Storage |
| Azure Blob Storage | Copy blobs between Azure storage accounts |
| Azure Blob Storage | Synchronize with Azure Blob Storage |
| Azure Files | Transfer data with AzCopy and file storage |
| Amazon S3 | Copy data from Amazon S3 to Azure Storage |
| Google Cloud Storage | Copy data from Google Cloud Storage to Azure Storage (preview) |
| Azure Stack storage | Transfer data with AzCopy and Azure Stack storage |

Get command help

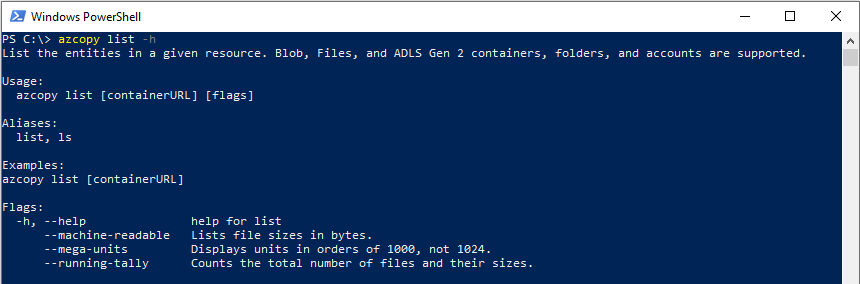

To see a list of commands, type azcopy -h and then press the ENTER key.

To learn about a specific command, include the name of the command before -h (for example: azcopy list -h).

List of commands

The following table lists all AzCopy v10 commands. Each command links to a reference article.

| Command | Description |
|---|---|
| azcopy bench | Runs a performance benchmark by uploading or downloading test data to or from a specified location. |
| azcopy copy | Copies source data to a destination location. |
| azcopy doc | Generates documentation for the tool in Markdown format. |
| azcopy env | Shows the environment variables that can configure AzCopy's behavior. |
| azcopy jobs | Contains subcommands for managing jobs. |
| azcopy jobs clean | Removes all log and plan files for all jobs. |
| azcopy jobs list | Displays information on all jobs. |
| azcopy jobs remove | Removes all files associated with the given job ID. |
| azcopy jobs resume | Resumes the existing job with the given job ID. |
| azcopy jobs show | Shows detailed information for the given job ID. |
| azcopy list | Lists the entities in a given resource. |
| azcopy login | Logs in to Microsoft Entra ID to access Azure Storage resources. |
| azcopy login status | Shows whether you're currently logged in to your Azure Storage account. |
| azcopy logout | Logs the user out and terminates access to Azure Storage resources. |
| azcopy make | Creates a container or file share. |
| azcopy remove | Deletes blobs or files from an Azure storage account. |
| azcopy sync | Replicates the source location to the destination location. |
| azcopy set-properties | Changes the access tier of one or more blobs and replaces (overwrites) the metadata and index tags of one or more blobs. |

Note

AzCopy does not have a command to rename files.

Use in a script

Obtain a static download link

Over time, the AzCopy download link will point to new versions of AzCopy. If your script downloads AzCopy, the script might stop working if a newer version of AzCopy modifies features that your script depends upon.

To avoid these issues, obtain a static (unchanging) link to the current version of AzCopy. That way, your script downloads the exact same version of AzCopy each time that it runs.

To obtain the link, run this command:

| Operating system | Command |
|---|---|
| Linux | curl -s -D- https://aka.ms/downloadazcopy-v10-linux \| grep ^Location |
| Windows PowerShell | (Invoke-WebRequest -Uri https://aka.ms/downloadazcopy-v10-windows -MaximumRedirection 0 -ErrorAction SilentlyContinue).headers.location |
| PowerShell 6.1+ | (Invoke-WebRequest -Uri https://aka.ms/downloadazcopy-v10-windows -MaximumRedirection 0 -ErrorAction SilentlyContinue -SkipHttpErrorCheck).headers.location |

Note

For Linux, the --strip-components=1 option of the tar command removes the top-level folder that contains the version name and extracts the binary directly into the current folder. That way, the script can be updated to a new version of AzCopy by changing only the wget URL.

The URL appears in the output of this command. Your script can then download AzCopy by using that URL.
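For a Linux script, a minimal sketch of pulling just the URL out of that output follows. The function name extract_location is hypothetical. It matches the header name case-insensitively, because curl prints header names in lowercase when the server responds over HTTP/2, and it strips the carriage return that ends each HTTP header line.

```shell
# Hypothetical helper: print the first Location header value from a header dump.
extract_location() {
  tr -d '\r' | awk 'tolower($1) == "location:" { print $2; exit }'
}

# STATIC_URL=$(curl -s -D- https://aka.ms/downloadazcopy-v10-linux | extract_location)
# wget -O azcopy_v10.tar.gz "$STATIC_URL"
```

Using the printed value in place of the aka.ms link keeps the script on one fixed version until you choose to change it.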

Linux

wget -O azcopy_v10.tar.gz https://aka.ms/downloadazcopy-v10-linux && tar -xf azcopy_v10.tar.gz --strip-components=1
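To see what --strip-components=1 changes without downloading anything, the following sketch builds a throwaway archive that imitates the layout described in the note (a single versioned top-level folder) and extracts it the same way. The function name demo_strip_components and the folder name azcopy_linux_amd64_0.0.0 are made-up placeholders, and the archived azcopy file is a stub script, not the real binary.

```shell
# Self-contained demo; creates and deletes only a temporary directory.
demo_strip_components() {
  work=$(mktemp -d) || return 1
  top="azcopy_linux_amd64_0.0.0"                  # placeholder versioned folder
  mkdir -p "$work/src/$top" "$work/out"
  printf '#!/bin/sh\necho placeholder\n' > "$work/src/$top/azcopy"
  tar -czf "$work/azcopy_v10.tar.gz" -C "$work/src" "$top"
  # Same option as the download command: drop the versioned top-level folder.
  tar -x --strip-components=1 -C "$work/out" -f "$work/azcopy_v10.tar.gz"
  ls "$work/out"                                  # prints: azcopy
  rm -rf "$work"
}
```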

Windows PowerShell

Invoke-WebRequest -Uri 'https://azcopyvnext.azureedge.net/release20220315/azcopy_windows_amd64_10.14.1.zip' -OutFile 'azcopyv10.zip'

Expand-Archive -Path '.\azcopyv10.zip' -DestinationPath '.\'

$AzCopy = (Get-ChildItem -Path '.\' -Recurse -File -Filter 'azcopy.exe').FullName

# Invoke AzCopy

& $AzCopy

PowerShell 6.1+

Invoke-WebRequest -Uri 'https://azcopyvnext.azureedge.net/release20220315/azcopy_windows_amd64_10.14.1.zip' -OutFile 'azcopyv10.zip'

$AzCopy = (Expand-Archive -Path '.\azcopyv10.zip' -DestinationPath '.\' -PassThru | Where-Object {$_.Name -eq 'azcopy.exe'}).FullName

# Invoke AzCopy

& $AzCopy

Escape special characters in SAS tokens

In batch files that have the .cmd extension, you'll have to escape the % characters that appear in SAS tokens. To do that, add an extra % character next to each existing % character in the SAS token string, so that each one becomes %%. Also add a ^ before each & character to create the character sequence ^&.
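If you generate .cmd files from a shell script, the hypothetical function below applies both rules to a SAS token: every % becomes %% and every & becomes ^&. It assumes the URL appears unquoted in the batch file; inside double quotes, cmd.exe passes the ^ through literally, so in that case only the % doubling is needed.

```shell
# Hypothetical helper: escape a SAS token for use in a .cmd batch file.
escape_sas_for_cmd() {
  # In a sed replacement, \& is a literal ampersand (a bare & means "the match").
  printf '%s\n' "$1" | sed -e 's/%/%%/g' -e 's/&/^\&/g'
}

# escape_sas_for_cmd 'sv=2018-03-28&sig=MGCX%3D'   # prints sv=2018-03-28^&sig=MGCX%%3D
```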

Run scripts by using Jenkins

If you plan to use Jenkins to run scripts, make sure to place the following command at the beginning of the script.

/usr/bin/keyctl new_session

Use in Azure Storage Explorer

Storage Explorer uses AzCopy to perform all of its data transfer operations. If you want the performance advantages of AzCopy but prefer a graphical user interface to the command line, you can use Storage Explorer.

Storage Explorer uses your account key to perform operations, so after you sign in to Storage Explorer, you won't need to provide additional authorization credentials.

Configure, optimize, and fix

See any of the following resources:

Use a previous version (deprecated)

If you need to use the previous version of AzCopy, see either of the following links:

Note

These versions of AzCopy have been deprecated. Microsoft recommends using AzCopy v10.

Next steps

If you have questions, issues, or general feedback, submit them on the GitHub page.
