Quickstart: Deploy a SQL Server container cluster on Azure

Applies to:

SQL Server - Linux

This quickstart demonstrates how to configure a highly available SQL Server instance in a container with persistent storage, on Azure Kubernetes Service (AKS) or Red Hat OpenShift. If the SQL Server instance fails, the orchestrator automatically re-creates it in a new pod. The cluster service also provides resiliency against a node failure.

This quickstart uses the following command line tools to manage the cluster.

| Cluster service | Command line tool |
|---|---|
| Azure Kubernetes Service (AKS) | kubectl (Kubernetes CLI) |
| Azure Red Hat OpenShift | oc (OpenShift CLI) |

Prerequisites

An Azure account with an active subscription. Create an account for free.

A Kubernetes cluster. For more information on creating and connecting to a Kubernetes cluster in AKS with kubectl, see Deploy an Azure Kubernetes Service (AKS) cluster.

Note

To protect against node failure, a Kubernetes cluster requires more than one node.

Azure CLI. See How to install the Azure CLI to install the latest version.

Create an SA password

Create an SA password in the Kubernetes cluster. Kubernetes can manage sensitive configuration information, such as passwords, as secrets.

To create a secret in Kubernetes named mssql that holds the value MyC0m9l&xP@ssw0rd for the MSSQL_SA_PASSWORD, run the following command. Remember to pick your own complex password:

Important

The SA_PASSWORD environment variable is deprecated. Use MSSQL_SA_PASSWORD instead.

kubectl create secret generic mssql --from-literal=MSSQL_SA_PASSWORD="MyC0m9l&xP@ssw0rd"
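Before storing a password in the secret, it can help to check it locally. The following is a minimal sketch rather than part of the quickstart itself: `check_sa_password` is a hypothetical helper name, and the rule it encodes (at least 8 characters, drawn from at least three of uppercase letters, lowercase letters, digits, and symbols) is assumed to match the password policy enforced by the SQL Server image you deploy.

```shell
# Hypothetical helper: succeed only if $1 looks like a complex enough SA password.
# Assumed policy: length >= 8 and at least 3 of the 4 character classes.
check_sa_password() {
  pw=$1
  classes=0
  [ "${#pw}" -ge 8 ] || return 1
  case $pw in *[[:upper:]]*) classes=$((classes + 1)) ;; esac
  case $pw in *[[:lower:]]*) classes=$((classes + 1)) ;; esac
  case $pw in *[[:digit:]]*) classes=$((classes + 1)) ;; esac
  case $pw in *[![:alnum:]]*) classes=$((classes + 1)) ;; esac
  [ "$classes" -ge 3 ]
}

# Usage (MY_PASSWORD is an assumed variable holding your own password):
#   check_sa_password "$MY_PASSWORD" && \
#     kubectl create secret generic mssql --from-literal=MSSQL_SA_PASSWORD="$MY_PASSWORD"
```

The check runs entirely in the local shell, so a weak password is rejected before anything is sent to the cluster.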

Create storage

For a database in a Kubernetes cluster, you must use persisted storage. You can configure a persistent volume and persistent volume claim in the Kubernetes cluster using the following steps:

Create a manifest to define the storage class and the persistent volume claim. The manifest specifies the storage provisioner, parameters, and reclaim policy. The Kubernetes cluster uses this manifest to create the persistent storage.

The following YAML example defines a storage class and persistent volume claim. The storage class provisioner is azure-disk, because this Kubernetes cluster is in Azure. The storage account type is Standard_LRS. The persistent volume claim is named mssql-data. The persistent volume claim metadata includes an annotation connecting it back to the storage class.

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: azure-disk
provisioner: kubernetes.io/azure-disk
parameters:
  storageaccounttype: Standard_LRS
  kind: Managed
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: mssql-data
  annotations:
    volume.beta.kubernetes.io/storage-class: azure-disk
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 8Gi

Save the file (for example, pvc.yaml).

Create the persistent volume claim in Kubernetes, where <path to pvc.yaml file> is the location where you saved the file:

kubectl apply -f <path to pvc.yaml file>

The persistent volume is automatically created as an Azure storage account, and bound to the persistent volume claim.

storageclass "azure-disk" created persistentvolumeclaim "mssql-data" createdVerify the persistent volume claim, where

<persistentVolumeClaim>is the name of the persistent volume claim:kubectl describe pvc <persistentVolumeClaim>In the preceding step, the persistent volume claim is named

mssql-data. To see the metadata about the persistent volume claim, run the following command:kubectl describe pvc mssql-dataThe returned metadata includes a value called

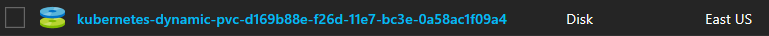

Volume. This value maps to the name of the blob.Name: mssql-data Namespace: default StorageClass: azure-disk Status: Bound Volume: pvc-d169b88e-f26d-11e7-bc3e-0a58ac1f09a4 Labels: ‹none> Annotations: kubectl.kubernetes.io/last-applied-configuration-{"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"annotations":{"volume.beta. kubernetes.io/storage-class":"azure-disk"},"name":"mssq1-data... pv.kubernetes.io/bind-completed-yes pv.kubernetes.io/bound-by-controller=yes volume.beta.kubernetes.io/storage-class=azure-disk volume.beta.kubernetes.io/storage-provisioner=kubernetes.io/azure-disk Capacity: 8Gi Access Modes: RWO Events: <none>The value for volume matches part of the name of the blob in the following image from the Azure portal:

Verify the persistent volume.

kubectl describe pv

kubectl returns metadata about the persistent volume that was automatically created and bound to the persistent volume claim.
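Provisioning the disk can take a moment, so a script that continues straight to the deployment may want to wait until the claim reports Bound. The following is a minimal sketch under stated assumptions: `wait_for_pvc_bound` and the `POLL_SECONDS` variable are hypothetical names, and the claim is read with `kubectl get pvc` and a JSONPath expression on `.status.phase`.

```shell
# Hypothetical helper: poll a persistent volume claim until its phase is Bound.
# $1 = claim name, $2 = maximum number of checks (default 30).
# POLL_SECONDS (an assumed variable name) sets the delay between checks.
wait_for_pvc_bound() {
  claim=$1
  attempts=${2:-30}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # .status.phase stays Pending until a volume is provisioned and bound.
    phase=$(kubectl get pvc "$claim" -o jsonpath='{.status.phase}' 2>/dev/null || true)
    if [ "$phase" = "Bound" ]; then
      echo "PVC $claim is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep "${POLL_SECONDS:-5}"
  done
  echo "PVC $claim was not Bound after $attempts checks" >&2
  return 1
}

# Usage:
#   wait_for_pvc_bound mssql-data && kubectl describe pv
```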

Create the deployment

The container hosting the SQL Server instance is described as a Kubernetes deployment object. The deployment creates a replica set. The replica set creates the pod.

You create a manifest to describe the container, based on the SQL Server mssql-server-linux Docker image.

- The manifest references the mssql-data persistent volume claim, and the mssql secret that you already applied to the Kubernetes cluster.
- The manifest also describes a service. This service is a load balancer. The load balancer guarantees that the IP address persists after the SQL Server instance is recovered.
- The manifest describes resource requests and limits. These are based on the minimum system requirements.

Create a manifest (a YAML file) to describe the deployment. The following example describes a deployment, including a container based on the SQL Server container image.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mssql-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mssql
  template:
    metadata:
      labels:
        app: mssql
    spec:
      terminationGracePeriodSeconds: 30
      hostname: mssqlinst
      securityContext:
        fsGroup: 10001
      containers:
      - name: mssql
        image: mcr.microsoft.com/mssql/server:2022-latest
        resources:
          requests:
            memory: "2G"
            cpu: "2000m"
          limits:
            memory: "2G"
            cpu: "2000m"
        ports:
        - containerPort: 1433
        env:
        - name: MSSQL_PID
          value: "Developer"
        - name: ACCEPT_EULA
          value: "Y"
        - name: MSSQL_SA_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mssql
              key: MSSQL_SA_PASSWORD
        volumeMounts:
        - name: mssqldb
          mountPath: /var/opt/mssql
      volumes:
      - name: mssqldb
        persistentVolumeClaim:
          claimName: mssql-data
---
apiVersion: v1
kind: Service
metadata:
  name: mssql-deployment
spec:
  selector:
    app: mssql
  ports:
    - protocol: TCP
      port: 1433
      targetPort: 1433
  type: LoadBalancer

Copy the preceding code into a new file, named sqldeployment.yaml. Update the following values:

- MSSQL_PID value: "Developer": Sets the container to run SQL Server Developer edition. Developer edition isn't licensed for production data. If the deployment is for production use, set the appropriate edition (Enterprise, Standard, or Express). For more information, see How to license SQL Server.
- persistentVolumeClaim: This value requires an entry for claimName: that maps to the name used for the persistent volume claim. This tutorial uses mssql-data.
- name: MSSQL_SA_PASSWORD: Configures the container image to set the SA password, as defined in this section.

  valueFrom:
    secretKeyRef:
      name: mssql
      key: MSSQL_SA_PASSWORD

  When Kubernetes deploys the container, it refers to the secret named mssql to get the value for the password.
- securityContext: Defines privilege and access control settings for a pod or container. In this case, it's specified at the pod level, so all containers adhere to that security context. In the security context, we define the fsGroup with the value 10001, which is the Group ID (GID) for the mssql group. This value means that all processes of the container are also part of the supplementary GID 10001 (mssql). The owner for volume /var/opt/mssql and any files created in that volume will be GID 10001 (the mssql group).

Warning

By using the LoadBalancer service type, the SQL Server instance is accessible remotely (via the Internet) at port 1433.

Save the file. For example, sqldeployment.yaml.

Create the deployment, where <path to sqldeployment.yaml file> is the location where you saved the file:

kubectl apply -f <path to sqldeployment.yaml file>

The deployment and service are created. The SQL Server instance is in a container, connected to persistent storage.

deployment "mssql-deployment" created
service "mssql-deployment" created

To view the status of the pod, type kubectl get pod.

NAME                                READY     STATUS    RESTARTS   AGE
mssql-deployment-3813464711-h312s   1/1       Running   0          17m

The pod has a status of Running. This status indicates that the container is ready. After the deployment is created, it can take a few minutes before the pod is visible. The delay is because the cluster pulls the mssql-server-linux image from the Microsoft Artifact Registry. After the image is pulled the first time, subsequent deployments might be faster if the deployment is to a node that already has the image cached on it.

Verify the services are running. Run the following command:

kubectl get services

This command returns services that are running, and the internal and external IP addresses for the services. Note the external IP address for the mssql-deployment service. Use this IP address to connect to SQL Server.

NAME               TYPE           CLUSTER-IP    EXTERNAL-IP     PORT(S)          AGE
kubernetes         ClusterIP      10.0.0.1      <none>          443/TCP          52m
mssql-deployment   LoadBalancer   10.0.113.96   52.168.26.254   1433:30619/TCP   2m

For more information about the status of the objects in the Kubernetes cluster, run the following command. Remember to replace <MyResourceGroup> and <MyKubernetesClustername> with your resource group and Kubernetes cluster name:

az aks browse --resource-group <MyResourceGroup> --name <MyKubernetesClustername>

You can also verify the container is running as non-root by running the following command, where <nameOfSqlPod> is the name of your SQL Server pod:

kubectl exec <nameOfSqlPod> -it -- /bin/bash

You can see the username as mssql if you run whoami. mssql is a non-root user.

whoami
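The external IP address of the service can also be read by a script instead of being copied from the kubectl get services table. The following is a minimal sketch: `sql_endpoint` is a hypothetical helper name, port 1433 is hard-coded to match the service in the manifest, and the address is read from the service's `.status.loadBalancer.ingress` field with a JSONPath expression.

```shell
# Hypothetical helper: print "<external-ip>,1433" for a LoadBalancer service.
# sqlcmd accepts this host,port form for its -S option.
# $1 = service name (default mssql-deployment).
sql_endpoint() {
  service=${1:-mssql-deployment}
  ip=$(kubectl get service "$service" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null || true)
  if [ -z "$ip" ]; then
    # The field is empty while Azure is still assigning the public address.
    echo "service $service has no external IP yet" >&2
    return 1
  fi
  printf '%s,1433\n' "$ip"
}

# Usage: wait for the deployment to finish rolling out, then print the endpoint.
#   kubectl rollout status deployment/mssql-deployment --timeout=300s && sql_endpoint
```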

Connect to the SQL Server instance

You can connect with an application from outside the Azure virtual network, using the sa account and the external IP address for the service. Use the password that you configured as the Kubernetes secret.

You can use the following applications to connect to the SQL Server instance.

Connect with sqlcmd

To connect with sqlcmd, run the following command:

sqlcmd -S <External IP Address> -U sa -P "MyC0m9l&xP@ssw0rd"

Replace the following values:

- <External IP Address> with the IP address for the mssql-deployment service
- MyC0m9l&xP@ssw0rd with your complex password
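To avoid typing the password on the command line, it can be read back from the mssql secret when connecting. The following is a minimal sketch: `read_secret_value` is a hypothetical helper name, and it assumes a `base64` utility that accepts `--decode` is available on the machine running kubectl.

```shell
# Hypothetical helper: print the decoded value of one key in a Kubernetes secret.
# $1 = secret name, $2 = key name.
read_secret_value() {
  # Secret data is returned base64-encoded, so decode it before use.
  kubectl get secret "$1" -o jsonpath="{.data.$2}" | base64 --decode
}

# Usage (<External IP Address> is the same placeholder as in the sqlcmd command):
#   sqlcmd -S <External IP Address> -U sa -P "$(read_secret_value mssql MSSQL_SA_PASSWORD)"
```

Anyone who can read secrets in the namespace can recover the password this way, so access to the secret should be restricted accordingly.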

Verify failure and recovery

To verify failure and recovery, you can delete the pod with the following steps:

List the pod running SQL Server.

kubectl get pods

Note the name of the pod running SQL Server.

Delete the pod.

kubectl delete pod mssql-deployment-0

mssql-deployment-0 is the value returned from the previous step for the pod name. Replace it with the name of your own pod.

Kubernetes automatically recreates the pod to recover a SQL Server instance, and connects to the persistent storage. Use kubectl get pods to verify that a new pod is deployed. Use kubectl get services to verify that the IP address for the new container is the same.
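The failure test can be sketched as one function that selects the pod by label instead of by name. This is an illustration under stated assumptions, not the quickstart's own procedure: `simulate_pod_failure` is a hypothetical helper name, and the defaults `app=mssql` and `mssql-deployment` are taken from the deployment manifest.

```shell
# Hypothetical helper: delete the SQL Server pod and wait for its replacement.
# $1 = label selector (default app=mssql), $2 = deployment name (default mssql-deployment).
simulate_pod_failure() {
  selector=${1:-app=mssql}
  deployment=${2:-mssql-deployment}
  # Delete every pod that matches the label; the replica set creates a new one.
  kubectl delete pod -l "$selector" || return 1
  # Block until the deployment reports its replica as available again.
  kubectl rollout status "deployment/$deployment" --timeout=300s || return 1
  # Show the replacement pod, then the service so the external IP can be compared.
  kubectl get pods -l "$selector"
  kubectl get service "$deployment"
}

# Usage:
#   simulate_pod_failure
```

Selecting by label avoids copying the generated pod name, which changes every time the pod is re-created.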

Clean up resources

If you don't plan on going through the tutorials that follow, clean up your unnecessary resources. Use the az group delete command to remove the resource group, container service, and all related resources. Replace <MyResourceGroup> with the name of the resource group containing your cluster.

az group delete --name <MyResourceGroup> --yes --no-wait
