Troubleshoot API server and etcd problems in Azure Kubernetes Service

This guide helps you identify and resolve the rare problems that you might encounter with the API server in large Microsoft Azure Kubernetes Service (AKS) deployments.

Microsoft has tested the reliability and performance of the API server at a scale of 5,000 nodes and 200,000 pods. The cluster that contains the API server can automatically scale out and deliver Kubernetes Service Level Objectives (SLOs). If you experience high latencies or time-outs, the likely cause is either a resource leak in etcd (the distributed key-value store that holds the cluster state) or an offending client that makes excessive API calls.

Prerequisites

The Kubernetes kubectl tool. To install kubectl by using Azure CLI, run the az aks install-cli command.

AKS diagnostics logs (specifically, kube-audit events) that are enabled and sent to a Log Analytics workspace. To determine if logs are collected using resource-specific or Azure diagnostics mode, check the Diagnostic Settings blade in the Azure portal.

The Standard tier for AKS clusters. If you're using the Free tier, the API server and etcd have limited resources, and the cluster doesn't provide high availability. These limits are often the root cause of API server and etcd problems.

The kubectl-aks plugin for running commands directly on AKS nodes without using the Kubernetes control plane.

Symptoms

The following table outlines the common symptoms of API server failures:

| Symptom | Description |
|---|---|
| Time-outs from the API server | Frequent time-outs that are beyond the guarantees in the AKS API server SLA. For example, kubectl commands time out. |
| High latencies | High latencies that make the Kubernetes SLOs fail. For example, the kubectl command takes more than 30 seconds to list pods. |
| API server pod in CrashLoopBackOff status or facing webhook call failures | Verify that you don't have any custom admission webhook (such as the Kyverno policy engine) that's blocking the calls to the API server. |

Troubleshooting checklist

If you're experiencing high latency, follow these steps to pinpoint the offending client and the types of API calls that fail.

Step 1: Identify top user agents by the number of requests

To identify which clients generate the most requests (and potentially the most API server load), run a query that resembles the following one. It lists the top 10 user agents by the number of API server requests sent.

AKSAudit
| where TimeGenerated between(now(-1h)..now()) // When you experienced the problem
| summarize count() by UserAgent
| top 10 by count_
| project UserAgent, count_

Note

If your query returns no results, you may have selected the wrong table to query diagnostics logs. In resource-specific mode, data is written to individual tables depending on the category of the resource. Diagnostics logs are written to the AKSAudit table. In Azure diagnostics mode, all data is written to the AzureDiagnostics table. For more information, see Azure resource logs.

Although it's helpful to know which clients generate the highest request volume, high request volume alone might not be a cause for concern. A better indicator of the actual load that each client generates on the API server is the response latency that they experience.

Step 2: Identify and chart the average latency of API server requests per user agent

To identify the average latency of API server requests per user agent as plotted on a time chart, run the following query:

AKSAudit
| where TimeGenerated between(now(-1h)..now()) // When you experienced the problem
| extend start_time = RequestReceivedTime
| extend end_time = StageReceivedTime
| extend latency = datetime_diff('millisecond', end_time, start_time)
| summarize avg(latency) by UserAgent, bin(start_time, 5m)
| render timechart

This query is a follow-up to the query in the "Identify top user agents by the number of requests" section. It might give you more insights into the actual load that's generated by each user agent over time.

Tip

By analyzing this data, you can identify patterns and anomalies that can indicate problems on your AKS cluster or applications. For example, you might notice that a particular user agent is experiencing high latency. That finding points you to the client whose API calls might be causing excessive load on the API server or etcd.

Step 3: Identify bad API calls for a given user agent

Run the following query to tabulate the 99th percentile (P99) latency of API calls across different resource types for a given client:

AKSAudit
| where TimeGenerated between(now(-1h)..now()) // When you experienced the problem
| extend HttpMethod = Verb
| extend Resource = tostring(ObjectRef.resource)
| where UserAgent == "DUMMYUSERAGENT" // Filter by name of the useragent you are interested in
| where Resource != ""
| extend start_time = RequestReceivedTime
| extend end_time = StageReceivedTime
| extend latency = datetime_diff('millisecond', end_time, start_time)
| summarize p99latency=percentile(latency, 99) by HttpMethod, Resource
| render table

The results from this query can be useful to identify the kinds of API calls that fail the upstream Kubernetes SLOs. In most cases, an offending client might be making too many LIST calls on a large set of objects or objects that are too large. Unfortunately, no hard scalability limits are available to guide users about API server scalability. API server or etcd scalability limits depend on various factors that are explained in Kubernetes Scalability thresholds.

Cause 1: A network rule blocks the traffic from agent nodes to the API server

A network rule can block traffic between the agent nodes and the API server.

To verify whether a misconfigured network policy is blocking communication between the API server and agent nodes, run the following kubectl-aks commands:

kubectl aks config import \
    --subscription <mySubscriptionID> \
    --resource-group <myResourceGroup> \
    --cluster-name <myAKSCluster>

kubectl aks check-apiserver-connectivity --node <myNode>

The config import command retrieves the Virtual Machine Scale Set information for all the nodes in the cluster. Then, the check-apiserver-connectivity command uses this information to verify the network connectivity between the API server and a specified node, specifically for its underlying scale set instance.

Note

If the output of the check-apiserver-connectivity command contains the Connectivity check: succeeded message, then the network connectivity is unimpeded.

Solution 1: Fix the network policy to remove the traffic blockage

If the command output indicates that a connection failure occurred, reconfigure the network policy so that it doesn't unnecessarily block traffic between the agent nodes and the API server.

Cause 2: An offending client leaks etcd objects and results in a slowdown of etcd

A common problem is a client that continuously creates objects in the etcd database without deleting unused ones. This can cause performance problems when etcd has to handle too many objects (more than 10,000) of any type. A rapid increase in changes to such objects can also cause the etcd database to exceed its size limit (4 gigabytes by default).

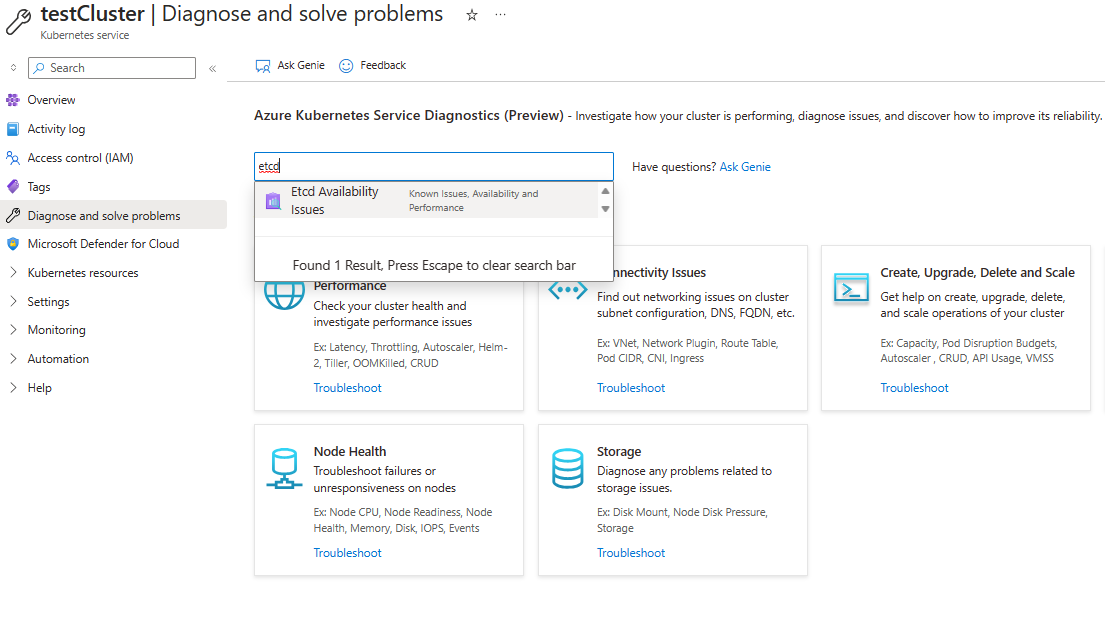

To check the etcd database usage, navigate to Diagnose and Solve problems in the Azure portal. Run the Etcd Availability Issues diagnosis tool by searching for "etcd" in the search box. The diagnosis tool shows you the usage breakdown and the total database size.

If you just want a quick way to view the current size of your etcd database in bytes, run the following command:

kubectl get --raw /metrics | grep -E "etcd_db_total_size_in_bytes|apiserver_storage_size_bytes|apiserver_storage_db_total_size_in_bytes"

Note

The metric name varies by Kubernetes version, which is why the previous command matches several names. For Kubernetes 1.25 and earlier, use etcd_db_total_size_in_bytes. For Kubernetes 1.26 to 1.28, use apiserver_storage_db_total_size_in_bytes. Later versions report apiserver_storage_size_bytes.
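To compare the reported size against the database limit, the metrics output can be post-processed. The following is a minimal sketch, not part of the original guidance: `etcd_db_usage` is a hypothetical helper name, and the default limit of 4 GiB (4 × 1024³ bytes) is an assumption based on the 4-gigabyte figure mentioned earlier. It reads Prometheus-format metrics from standard input and matches the three metric names used in the previous command.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of kubectl or AKS): summarize the etcd database
# size from Prometheus-format metrics read on stdin.
# $1 (optional): size limit in bytes. Assumed default: 4 GiB.
etcd_db_usage() {
  local limit_bytes="${1:-4294967296}"
  awk -v limit="$limit_bytes" '
    /^#/ { next }   # skip "# HELP" and "# TYPE" comment lines
    /^(etcd_db_total_size_in_bytes|apiserver_storage_db_total_size_in_bytes|apiserver_storage_size_bytes)[{ ]/ {
      name = $1
      sub(/[{].*/, "", name)   # drop the label set, keep the metric name
      printf "%s %.1f MiB (%.1f%% of limit)\n", name, $NF / 1048576, $NF / limit * 100
    }
  '
}

# Usage against a live cluster:
#   kubectl get --raw /metrics | etcd_db_usage
```

If your cluster's limit differs from the assumed default, pass it in bytes as the first argument.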

Solution 2: Define quotas for object creation, delete objects, or limit object lifetime in etcd

To prevent etcd from reaching capacity and causing cluster downtime, you can limit the maximum number of resources that are created. You can also slow the rate at which revisions are generated for resource instances. To limit the number of objects that can be created, you can define object quotas.

If you have identified objects that are no longer in use but are taking up resources, consider deleting them. For example, you can delete completed jobs to free up space:

kubectl delete jobs --field-selector status.successful=1
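Before you delete anything, it can help to see where objects are accumulating. The following sketch is an illustration rather than an AKS tool: `count_by_namespace` is a hypothetical helper that tallies the first column (the namespace) of `kubectl get ... --all-namespaces --no-headers` output, so that namespaces that hold an unusually large number of objects stand out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count objects per namespace from kubectl output on stdin.
# Expects one object per line with the namespace in the first column.
count_by_namespace() {
  awk 'NF { count[$1]++ } END { for (ns in count) print count[ns], ns }' |
    sort -k1,1nr -k2,2   # largest count first, then by namespace name
}

# Usage against a live cluster:
#   kubectl get jobs --all-namespaces --no-headers | count_by_namespace
```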

For objects that support automatic cleanup, you can set Time to Live (TTL) values to limit the lifetime of these objects. You can also label your objects so that you can bulk delete all the objects of a specific type by using label selectors. If you establish owner references among objects, any dependent objects are automatically deleted after the parent object is deleted.
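As an illustration of object quotas, the following sketch prints a ResourceQuota manifest that caps object counts in one namespace. The function name `object_quota_manifest`, the quota name `object-counts`, and the chosen resource types and limits are assumptions made for this example; pick the resource types that actually accumulate in your cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a ResourceQuota manifest that limits object counts.
# $1: namespace, $2: maximum number of Jobs, $3: maximum number of ConfigMaps.
object_quota_manifest() {
  local namespace="$1" max_jobs="$2" max_configmaps="$3"
  cat <<EOF
apiVersion: v1
kind: ResourceQuota
metadata:
  name: object-counts
  namespace: ${namespace}
spec:
  hard:
    count/jobs.batch: "${max_jobs}"
    count/configmaps: "${max_configmaps}"
EOF
}

# Usage against a live cluster:
#   object_quota_manifest team-a 100 50 | kubectl apply -f -
```

For Jobs specifically, the `ttlSecondsAfterFinished` field in the Job spec is one way to apply the TTL-based cleanup described above.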

Cause 3: An offending client makes excessive LIST or PUT calls

If you determine that etcd isn't overloaded with too many objects, an offending client might be making too many LIST or PUT calls to the API server.

Solution 3a: Tune your API call pattern

Consider tuning your client's API call pattern to reduce the pressure on the control plane.

Solution 3b: Throttle a client that's overwhelming the control plane

If you can't tune the client, you can use the Priority and Fairness feature in Kubernetes to throttle the client. This feature can help preserve the health of the control plane and prevent other applications from failing.

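The following procedure shows how to throttle an offending client's LIST Pods API calls to five concurrent calls. The manifests use the flowcontrol.apiserver.k8s.io/v1beta2 API version. If your cluster serves a later version of this API, update apiVersion accordingly (in later versions, assuredConcurrencyShares is named nominalConcurrencyShares).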

Create a FlowSchema that matches the API call pattern of the offending client:

apiVersion: flowcontrol.apiserver.k8s.io/v1beta2
kind: FlowSchema
metadata:
  name: restrict-bad-client
spec:
  priorityLevelConfiguration:
    name: very-low-priority
  distinguisherMethod:
    type: ByUser
  rules:
  - resourceRules:
    - apiGroups: [""]
      namespaces: ["default"]
      resources: ["pods"]
      verbs: ["list"]
    subjects:
    - kind: ServiceAccount
      serviceAccount:
        name: bad-client-account
        namespace: default

Create a lower priority configuration to throttle bad API calls of the client:

apiVersion: flowcontrol.apiserver.k8s.io/v1beta2
kind: PriorityLevelConfiguration
metadata:
  name: very-low-priority
spec:
  limited:
    assuredConcurrencyShares: 5
    limitResponse:
      type: Reject
  type: Limited

Observe the throttled call in the API server metrics.

kubectl get --raw /metrics | grep "restrict-bad-client"
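To turn the raw metrics into a single number, the matching samples can be summed. This is a sketch under assumptions: `rejected_for_flow_schema` is a hypothetical helper, and it relies on the upstream `apiserver_flowcontrol_rejected_requests_total` counter and its `flow_schema` label, neither of which is named in this article. Check that your cluster version exposes that metric before relying on the result.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sum rejected-request counters for one FlowSchema.
# Reads Prometheus-format metrics on stdin. $1: FlowSchema name.
rejected_for_flow_schema() {
  local schema="$1"
  awk -v schema="$schema" '
    /^apiserver_flowcontrol_rejected_requests_total[{]/ &&
    index($0, "flow_schema=\"" schema "\"") { total += $NF }   # sample value is the last field
    END { printf "%d\n", total }                               # prints 0 when nothing matched
  '
}

# Usage against a live cluster:
#   kubectl get --raw /metrics | rejected_for_flow_schema restrict-bad-client
```

A value that keeps growing between runs suggests that the FlowSchema is matching and rejecting the client's requests.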

Cause 4: A custom webhook might cause a deadlock in API server pods

A custom webhook, such as Kyverno, might be causing a deadlock within API server pods.

Check the events that are related to your API server. You might see event messages that resemble the following text:

Internal error occurred: failed calling webhook "mutate.kyverno.svc-fail": failed to call webhook: Post "https://kyverno-system-kyverno-system-svc.kyverno-system.svc:443/mutate/fail?timeout=10s": write unix @->/tunnel-uds/proxysocket: write: broken pipe

In this example, the admission webhook is blocking the creation of some API server objects. Because this scenario might occur during bootstrap time, the API server and Konnectivity pods can't be created. Therefore, the webhook can't connect to those pods. This sequence of events causes the deadlock and the error message.

Solution 4: Delete webhook configurations

To fix this problem, delete the validating and mutating webhook configurations. To delete these webhook configurations in Kyverno, review the Kyverno troubleshooting article.

Third-party contact disclaimer

Microsoft provides third-party contact information to help you find additional information about this topic. This contact information may change without notice. Microsoft does not guarantee the accuracy of third-party contact information.

Third-party information disclaimer

The third-party products that this article discusses are manufactured by companies that are independent of Microsoft. Microsoft makes no warranty, implied or otherwise, about the performance or reliability of these products.

