
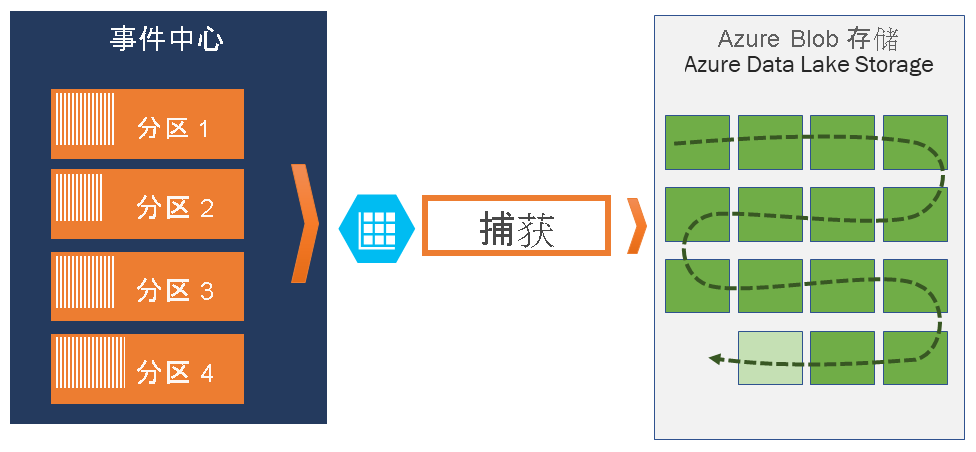

Capture events through Azure Event Hubs in Azure Blob Storage or Azure Data Lake Storage

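Azure Event Hubs lets you automatically capture the streaming data in an event hub to an Azure Blob Storage account, or an Azure Data Lake Storage Gen 1 or Gen 2 account, of your choice, at a time interval or size interval that you specify. Capture is quick to set up, has no administrative overhead to run, and scales automatically with Event Hubs throughput units in the standard tier or processing units in the premium tier. Event Hubs Capture is the easiest way to load streaming data into Azure, and it lets you focus on data processing rather than on data capture.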

Note

Configuring Event Hubs Capture to use Azure Data Lake Storage Gen 2 works the same way as configuring it to use Azure Blob Storage. For details, see Configure Event Hubs Capture.

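Event Hubs Capture lets you run real-time and batch-based pipelines on the same stream, so you can build solutions that grow with your needs over time. Whether you are building a batch-based system today with an eye toward future real-time processing, or you want to add an efficient cold path to an existing real-time solution, Event Hubs Capture makes working with streaming data easier.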

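Important

- When you don't use a managed identity for authentication, the destination storage account (Azure Storage or Azure Data Lake Storage) must be in the same subscription as the event hub.

- Event Hubs doesn't support capturing events in a premium storage account.

- Event Hubs Capture supports any non-premium Azure storage account that supports block blobs.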

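How Azure Event Hubs Capture works

Event Hubs is a time-retention durable buffer for telemetry ingress, similar to a distributed log. The key to scaling in Event Hubs is the partitioned consumer model. Each partition is an independent segment of data and is consumed independently. Over time this data ages off, based on the configurable retention period. As a result, a given event hub never gets "too full."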

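With Event Hubs Capture, you specify your own Azure Blob Storage account and container, or Azure Data Lake Store account, to store the captured data. These accounts can be in the same region as your event hub or in another region, which adds to the flexibility of the Capture feature.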

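Captured data is written in Apache Avro format, a compact, fast, binary format that provides rich data structures with an inline schema. This format is widely used in the Hadoop ecosystem, Stream Analytics, and Azure Data Factory. More information about working with Avro appears later in this article.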

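Note

When you use the no-code editor in the Azure portal, you can capture streaming data from Event Hubs in an Azure Data Lake Storage Gen2 account in the Parquet format. For more information, see How to: capture data from Event Hubs in Parquet format and Tutorial: capture Event Hubs data in parquet format and analyze with Azure Synapse Analytics.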

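Capture windowing

Event Hubs Capture lets you set up a window to control capturing. The window is a minimum size and time configuration with a "first wins" policy, meaning that the first trigger encountered causes a capture operation. If you have a 15-minute, 100 MB capture window and send 1 MB per second, the size window triggers before the time window. Each partition captures independently and writes a completed block blob at the time of capture, named for the time at which the capture interval was encountered. The storage naming convention is as follows: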

{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}

The date values are padded with zeroes; an example filename might be:

https://mystorageaccount.blob.core.windows.net/mycontainer/mynamespace/myeventhub/0/2017/12/08/03/03/17.avro
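The naming convention above can be sketched in a few lines of Python. This is only an illustration: the function names `capture_blob_path` and `parse_capture_blob_path` are hypothetical, the `.avro` extension is taken from the example filename, and time-zone handling is deliberately left out.

```python
from datetime import datetime


def capture_blob_path(namespace, event_hub, partition_id, captured_at, extension=".avro"):
    """Build a blob name following the convention
    {Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}.
    strftime zero-pads the date and time fields."""
    return (
        f"{namespace}/{event_hub}/{partition_id}/"
        f"{captured_at:%Y/%m/%d/%H/%M/%S}{extension}"
    )


def parse_capture_blob_path(path):
    """Split a blob name of the form above back into its components."""
    parts = path.split("/")
    if len(parts) != 9:
        raise ValueError(f"expected 9 path segments, got {len(parts)}")
    namespace, event_hub, partition_id = parts[:3]
    second = parts[8].split(".")[0]  # drop the file extension, e.g. "17.avro" -> "17"
    year, month, day, hour, minute = (int(p) for p in parts[3:8])
    return {
        "namespace": namespace,
        "event_hub": event_hub,
        "partition_id": partition_id,
        "captured_at": datetime(year, month, day, hour, minute, int(second)),
    }


path = capture_blob_path("mynamespace", "myeventhub", 0, datetime(2017, 12, 8, 3, 3, 17))
print(path)  # mynamespace/myeventhub/0/2017/12/08/03/03/17.avro
```

Parsing the name back is handy when a batch job needs to select blobs for a given partition or time range without opening each file.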

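If your Azure Storage blob is temporarily unavailable, Event Hubs Capture retains your data for the retention period configured on your event hub and backfills the data once your storage account is available again.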
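To make the "first wins" policy described earlier in this section concrete, the following sketch works out which trigger fires first for a given window. It assumes a constant ingress rate on a single partition, which is a simplification; the function name `first_trigger` is hypothetical and is not part of any Event Hubs SDK.

```python
MB = 1024 * 1024


def first_trigger(time_window_s, size_window_bytes, ingress_bytes_per_s):
    """Return (trigger, seconds_until_capture) under a first-wins policy,
    assuming data arrives at a constant rate."""
    if ingress_bytes_per_s <= 0:
        # No data arriving: only the time window can ever fire.
        return ("time", float(time_window_s))
    seconds_to_fill = size_window_bytes / ingress_bytes_per_s
    if seconds_to_fill < time_window_s:
        return ("size", seconds_to_fill)
    return ("time", float(time_window_s))


# The example from the text: 15-minute, 100 MB window at 1 MB per second.
print(first_trigger(15 * 60, 100 * MB, 1 * MB))  # ('size', 100.0)
```

At 1 MB per second the 100 MB size window fills after 100 seconds, well before the 900-second time window, which matches the behavior described above.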

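Scaling throughput units or processing units

In the standard tier of Event Hubs, traffic is controlled by throughput units; in the premium tier, it is controlled by processing units. Event Hubs Capture copies data directly from the internal Event Hubs storage, bypassing throughput unit or processing unit egress quotas and saving your egress for other processing readers, such as Stream Analytics or Spark.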

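Once configured, Event Hubs Capture starts automatically when you send your first event, and continues running. To make it easier for downstream processing to know that the process is working, Event Hubs writes empty files when there is no data. This provides a predictable cadence and a marker that can feed your batch processors.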

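Setting up Event Hubs Capture

You can configure Capture when you create an event hub, either in the Azure portal or with an Azure Resource Manager template. For details, see the articles on enabling Capture with the Azure portal and with Resource Manager templates.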

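Note

If you enable Capture on an existing event hub, the feature captures only events that arrive at the event hub after it is turned on. It doesn't capture events that were already in the event hub before it was turned on.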

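How Event Hubs Capture is charged

The Capture feature is included in the premium tier, so there is no additional charge for that tier. For the standard tier, the feature is charged monthly, and the charge is directly proportional to the number of throughput units or processing units purchased for the namespace. As throughput units or processing units are increased or decreased, the Event Hubs Capture meters increase or decrease in tandem to provide matching performance. For pricing details, see Event Hubs pricing.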

Capture doesn't consume egress quota, because it is billed separately.

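Integration with Event Grid

You can create an Azure Event Grid subscription with an Event Hubs namespace as its source. The following tutorial shows how to create an Event Grid subscription with an event hub as the source and an Azure Functions app as the sink: Process and migrate captured Event Hubs data to Azure Synapse Analytics using Event Grid and Azure Functions.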

Explore captured files

To learn how to explore captured Avro files, see Explore captured Avro files.

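Azure Storage account as a destination

To enable Capture on an event hub with Azure Storage as the capture destination, or to update properties on such an event hub, the user or service principal must have an RBAC role with the following permissions assigned at the storage account scope.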

Microsoft.Storage/storageAccounts/blobServices/containers/write

Microsoft.Storage/storageAccounts/blobServices/containers/blobs/write

Without these permissions, you see the following error:

Generic: Linked access check failed for capture storage destination <StorageAccount Arm Id>.

User or the application with object id <Object Id> making the request doesn't have the required data plane write permissions.

Please enable Microsoft.Storage/storageAccounts/blobServices/containers/write, Microsoft.Storage/storageAccounts/blobServices/containers/blobs/write permission(s) on above resource for the user or the application and retry.

TrackingId:<ID>, SystemTracker:mynamespace.servicebus.windows.net:myhub, Timestamp:<TimeStamp>

The Storage Blob Data Owner built-in role has these permissions, so add the user account or the service principal to this role.
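As a rough pre-flight check, the two required permissions can be compared against the permission patterns a role grants. The sketch below is a simplification and not how Azure evaluates access: it treats a role's permissions as one flat list of wildcard patterns, matches them case-insensitively with Python's `fnmatch`, and ignores scope, deny assignments, and the distinction between control-plane and data-plane actions. The function name and the sample pattern lists are hypothetical.

```python
from fnmatch import fnmatchcase

# The two permissions listed in this section.
REQUIRED_CAPTURE_PERMISSIONS = (
    "Microsoft.Storage/storageAccounts/blobServices/containers/write",
    "Microsoft.Storage/storageAccounts/blobServices/containers/blobs/write",
)


def missing_capture_permissions(granted_patterns):
    """Return the required permissions not covered by any granted pattern.
    Patterns may contain '*' wildcards; matching is case-insensitive."""
    granted = [pattern.lower() for pattern in granted_patterns]
    return [
        required
        for required in REQUIRED_CAPTURE_PERMISSIONS
        if not any(fnmatchcase(required.lower(), pattern) for pattern in granted)
    ]


# Hypothetical pattern lists, for illustration only.
broad_role = [
    "Microsoft.Storage/storageAccounts/blobServices/containers/*",
    "Microsoft.Storage/storageAccounts/blobServices/containers/blobs/*",
]
narrow_role = ["Microsoft.Storage/storageAccounts/blobServices/containers/write"]

print(missing_capture_permissions(broad_role))   # []
print(missing_capture_permissions(narrow_role))
```

An empty result suggests the role covers both permissions; any entries returned are the ones named in the error message shown above.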

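Next steps

Event Hubs Capture is the easiest way to get data into Azure. With Azure Data Lake, Azure Data Factory, and Azure HDInsight, you can perform batch processing and other analytics using familiar tools and platforms of your choosing, at any scale you need.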

Learn how to enable this feature by using the Azure portal or an Azure Resource Manager template.