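Introduction to ARKit in Xamarin.iOS

Augmented reality for iOS 11

ARKit enables a wide variety of augmented reality applications and games.

Getting started with ARKit

To get started with augmented reality, the following instructions walk through a simple application: positioning a 3D model and letting ARKit keep the model in place with its tracking functionality.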

1. Add a 3D model

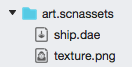

Assets should be added to the project with the SceneKitAsset build action.

2. Configure the view

In the view controller's ViewDidLoad method, load the scene asset and set the Scene property on the view:

    ARSCNView SceneView = (View as ARSCNView);

    // Create a new scene
    var scene = SCNScene.FromFile("art.scnassets/ship");

    // Set the scene to the view
    SceneView.Scene = scene;

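3. (Optional) Implement a session delegate

Although not required for simple cases, implementing a session delegate can be helpful for debugging the state of the ARKit session (and, in real applications, for providing feedback to the user). Create a simple delegate using the code below: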

    public class SessionDelegate : ARSessionDelegate
    {
        public SessionDelegate() {}

        // Called by ARKit whenever the camera's tracking state changes
        public override void CameraDidChangeTrackingState(ARSession session, ARCamera camera)
        {
            Console.WriteLine("{0} {1}", camera.TrackingState, camera.TrackingStateReason);
        }
    }

Assign the delegate in the ViewDidLoad method:

    // Track changes to the session
    SceneView.Session.Delegate = new SessionDelegate();
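For the user-feedback case mentioned above, the delegate could translate tracking states into readable messages instead of logging them. The following is only a sketch under stated assumptions: the class name FeedbackSessionDelegate, the showMessage callback, and the message strings are hypothetical and not part of the original walkthrough.

```csharp
using System;
using ARKit;

// Hypothetical delegate: forwards a readable status message to a callback
// supplied by the caller (for example, one that updates a label).
public class FeedbackSessionDelegate : ARSessionDelegate
{
    readonly Action<string> showMessage;

    public FeedbackSessionDelegate(Action<string> showMessage)
    {
        this.showMessage = showMessage;
    }

    public override void CameraDidChangeTrackingState(ARSession session, ARCamera camera)
    {
        showMessage(Describe(camera.TrackingState, camera.TrackingStateReason));
    }

    // Maps the tracking state and reason to an illustrative message.
    static string Describe(ARTrackingState state, ARTrackingStateReason reason)
    {
        if (state == ARTrackingState.Normal)
            return "Tracking normally.";

        switch (reason)
        {
            case ARTrackingStateReason.Initializing:
                return "Starting up, hold the device steady.";
            case ARTrackingStateReason.ExcessiveMotion:
                return "Move the device more slowly.";
            case ARTrackingStateReason.InsufficientFeatures:
                return "Point the camera at a more detailed surface.";
            default:
                return "Tracking is limited or unavailable.";
        }
    }
}
```

It would be assigned in the same way as the simple delegate, for example `SceneView.Session.Delegate = new FeedbackSessionDelegate(Console.WriteLine);`, with Console.WriteLine standing in for whatever UI update the application actually performs.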

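4. Position the 3D model in the world

In ViewWillAppear, the following code establishes an ARKit session and sets the position of the 3D model in space relative to the device's camera: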

    // Create a session configuration
    var configuration = new ARWorldTrackingConfiguration {
        PlaneDetection = ARPlaneDetection.Horizontal,
        LightEstimationEnabled = true
    };

    // Run the view's session
    SceneView.Session.Run(configuration, ARSessionRunOptions.ResetTracking);

    // Find the ship and position it just in front of the camera
    var ship = SceneView.Scene.RootNode.FindChildNode("ship", true);
    ship.Position = new SCNVector3(2f, -2f, -9f);

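Each time the application is run or resumed, the 3D model is positioned in front of the camera. Once the model is positioned, move the camera around and watch as ARKit keeps the model in place.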

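5. Pause the augmented reality session

It is good practice to pause the ARKit session when the view controller is not visible (in the ViewWillDisappear method):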

    SceneView.Session.Pause();
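To show where each step lives in the view controller's lifecycle, the snippets from steps 2, 4, and 5 can be gathered into a single controller. This is a sketch rather than the walkthrough's actual project code: the class name ShipViewController is hypothetical, the LoadView override is an assumption made so the example does not depend on a storyboard, and the null check on the ship node is an added precaution.

```csharp
using ARKit;
using SceneKit;
using UIKit;

// Hypothetical controller combining the steps above.
public class ShipViewController : UIViewController
{
    ARSCNView SceneView => View as ARSCNView;

    // Assumption: create the AR view in code instead of in a storyboard.
    public override void LoadView()
    {
        View = new ARSCNView();
    }

    public override void ViewDidLoad()
    {
        base.ViewDidLoad();

        // Step 2: load the scene asset and attach it to the view
        SceneView.Scene = SCNScene.FromFile("art.scnassets/ship");
    }

    public override void ViewWillAppear(bool animated)
    {
        base.ViewWillAppear(animated);

        // Step 4: configure and run the session
        var configuration = new ARWorldTrackingConfiguration {
            PlaneDetection = ARPlaneDetection.Horizontal,
            LightEstimationEnabled = true
        };
        SceneView.Session.Run(configuration, ARSessionRunOptions.ResetTracking);

        // Position the ship only if the node was found in the loaded scene
        var ship = SceneView.Scene?.RootNode.FindChildNode("ship", true);
        if (ship != null)
            ship.Position = new SCNVector3(2f, -2f, -9f);
    }

    public override void ViewWillDisappear(bool animated)
    {
        base.ViewWillDisappear(animated);

        // Step 5: stop tracking while the view is not visible
        SceneView.Session.Pause();
    }
}
```

Running the session in ViewWillAppear and pausing it in ViewWillDisappear keeps the camera and tracking active only while the view is on screen.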

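Summary

The code above produces a simple ARKit application. More complex examples would expect the view controller hosting the augmented reality session to implement IARSCNViewDelegate, along with additional methods.

ARKit provides many more sophisticated features, such as surface tracking and user interaction.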
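As a starting point for surface tracking, a scene view delegate can react when ARKit adds an anchor for a detected plane. The sketch below is illustrative only. Instead of implementing IARSCNViewDelegate on the view controller, it subclasses ARSCNViewDelegate, the Xamarin.iOS class that implements that interface. The class name PlaneLoggingDelegate is hypothetical.

```csharp
using System;
using ARKit;
using SceneKit;

// Hypothetical delegate that logs each horizontal plane ARKit detects.
public class PlaneLoggingDelegate : ARSCNViewDelegate
{
    // Called when ARKit adds a SceneKit node for a new anchor
    public override void DidAddNode(ISCNSceneRenderer renderer, SCNNode node, ARAnchor anchor)
    {
        if (anchor is ARPlaneAnchor planeAnchor)
        {
            // Extent holds the estimated plane size in meters (X = width, Z = length)
            Console.WriteLine("Plane detected: {0} x {1}",
                planeAnchor.Extent.X, planeAnchor.Extent.Z);
        }
    }
}
```

Assuming plane detection is enabled in the session configuration, as in step 4, the delegate would be attached with `SceneView.Delegate = new PlaneLoggingDelegate();` in ViewDidLoad.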