在 SharePoint Server 中傳送設定記錄

適用于: 2013

2013

2019

2019  訂閱版本

訂閱版本  Microsoft 365 中的 SharePoint

Microsoft 365 中的 SharePoint

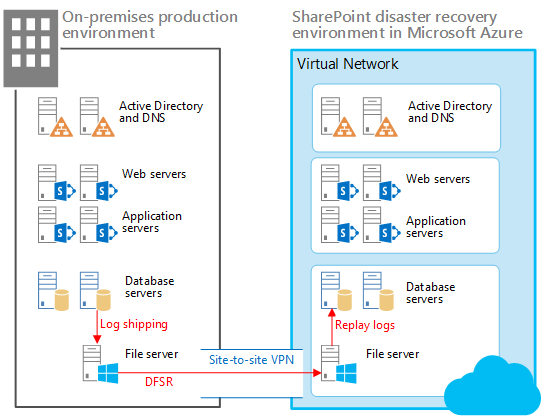

使用 記錄傳送,您可以將交易記錄從主要資料庫備份至個別 SQL Server 執行個體上的次要資料庫。 在此處所述的案例中,SQL Server 記錄傳送會與分散式檔案系統複寫 (DFSR) 搭配使用,將資料庫和交易紀錄複製至 Microsoft Azure 中的復原伺服器陣列,如下所示。

在此災害復原案例中,SharePoint Server 生產伺服器陣列位於內部部署,而復原伺服器陣列位於 Azure 中。 您也可以針對其他類型的災害復原案例,調整本主題中的指引。

Azure 中暖待命解決方案的元素

在此圖例中:

並排說明兩個環境:內部部署 SharePoint 伺服器陣列以及 Azure 中的復原 (待命) 伺服器陣列。

每個環境都包括檔案共用。

記錄傳送是用來將記錄從內部部署環境中的次要資料庫伺服器複製至本機檔案共用。

DFSR 會將檔案從內部部署環境中的檔案共用複製至 Azure 環境中的檔案共用。 在 WAN 案例中,DFSR 比直接將記錄傳送至 Azure 中的次要伺服器更為有效率。

記錄傳送會將記錄從 Azure 環境中的檔案共用重新執行到復原環境中SQL Server Always On可用性群組中的主要複本。

除非執行復原練習,否則記錄傳送的資料庫不會附加至 SharePoint Server 伺服器陣列。

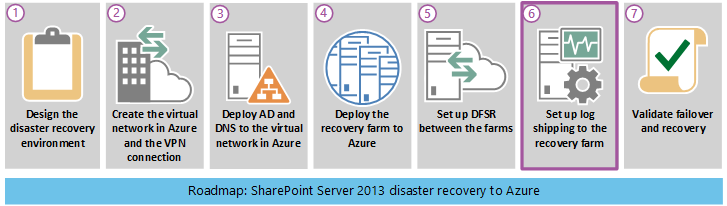

下圖顯示 Azure 解決方案中完整 SharePoint Server 災害復原所含的七個階段。 本圖強調顯示<階段 6:設定記錄傳送至復原伺服器陣列>,並在下列各節中予以說明。

使用記錄傳送進行災害復原

記錄傳送可讓您將資料庫的交易記錄檔從主要資料庫伺服器執行個體自動傳送至次要資料庫伺服器執行個體。 在內部部署測試環境中,我們會使用Always On具有兩個複本的可用性群組,以獲得高可用性。 我們已對兩個複本設定記錄傳送。 每個複本都必須可以傳送交易記錄。 只有使用中並擁有資料庫的複本才能傳送記錄。 不過,如果發生容錯移轉事件,而次要複本變成使用中,則必須傳送交易記錄,而非失敗複本。

在 Azure 環境中接收交易記錄之後,會一次一個地將它們還原至次要資料庫伺服器上的每個 SharePoint 資料庫。 如需測試環境的詳細資訊,請參閱 Microsoft 概念證明環境。

注意事項

部分組織會使用第三部資料庫伺服器做為監視器來記錄備份和還原作業的歷程記錄和狀態。 此選用監視伺服器會在備份作業失敗時建立警示。

如需記錄傳送的詳細資訊,請參閱下表中所列的文章。

表格:記錄傳送的參考文章

| URL | 描述 |

|---|---|

| 關於記錄傳送 (SQL Server) |

說明記錄傳送交易記錄備份以及可用的選項。 |

| 設定記錄傳送 (SQL Server) |

說明如何使用 SQL Server Management Studio 或 Transact-SQL 在 SQL Server 2012 中設定記錄傳送。 |

| 檢視記錄傳送報告 (SQL Server Management Studio) |

說明如何在 SQL Server Management Studio 中檢視交易記錄傳送狀態報告。 您可以在監視伺服器、主要伺服器或次要伺服器上執行狀態報告。 |

效能考量

記錄傳送包含三個工作。 每個工作都會執行下列其中一項作業:

備份主要伺服器執行個體上的交易記錄。

將交易記錄檔複製至次要伺服器執行個體。

還原次要伺服器執行個體上的記錄備份。

每個工作都會依排程操作,並執行間隔的時間,這預設會對資料庫伺服器造成重要影響,而且預設是 SharePoint 伺服器陣列效能。

若要正確地設定備份、複製和還原工作間隔以進行記錄傳送,則必須分析正在進行記錄傳送的資料量。 記錄傳送的資料量受到內容資料庫中每日變更量的影響。 根據內容、維護變更和使用尖峰,變更百分比可能會有顯著變化。

若要取得精確的變更百分比,請計算在指定間隔記錄傳送之每個內容資料庫的交易記錄備份中的變更總和。 使用此資料來計算與主要資料庫的變更百分比。

下列指引衍生自數版 SharePoint Server 的 Microsoft 欄位專業人員記錄傳送體驗。

請確定所有記錄傳送工作與前一個工作的偏移至少有一分鐘延遲,以避免因同時啟動所有工作而導致效能衰退。

最好是備份和複製多個小型交易記錄,而非一些大型交易記錄。

排程依頻繁間隔進行記錄備份和複製。 您可以依較不頻繁的間隔來還原交易記錄。 例如,請使用 5 分鐘的備份和複製間隔以及 15 分鐘的還原間隔來開始作業。

設定記錄傳送的必要條件

請確定您符合下列在災害復原解決方案中,使用記錄傳送的必要條件。

SQL Server 登入是具有記錄傳送所需權限等級的網域帳戶。 記錄傳送儲存程序需要有 sysadmin 固定伺服器角色的成員資格。

主要資料庫必須使用完整或大量記錄的復原模型。

注意

如果您將資料庫切換為簡單復原,則記錄傳送會停止運作。

設定記錄傳送之前,您必須建立共用,讓次要伺服器可以使用交易記錄備份。 這是在其中產生交易記錄備份之目錄的共用。

除了復原點目標 (RPO) 以外,請盡可能地確定復原的伺服器陣列資料完成且未損毀。 若要達到這些目標,您必須謹慎地規劃和排程記錄傳送的每個層面。

記錄傳送基礎結構

下圖顯示用於災害復原解決方案環境的記錄傳送基礎結構。

記錄傳送基礎結構和資料流程

上圖顯示記錄傳送基礎結構和資料流程。 此圖顯示生產伺服器陣列和 Azure 復原伺服器陣列中的 SQL Server 資料庫伺服器和檔案伺服器。 這些伺服器陣列幾乎完全相同,而且每個伺服器陣列都包含每個Always On可用性群組的主要和次要複本。 檔案伺服器 (FIL1 和 AZ-FIL1) 以相同的方式設定 (包括硬碟數目和磁碟大小)。 不會顯示伺服器陣列中的其他伺服器。

為了提供高可用性,可用性群組中的每個複本會儲存其他複本的備份 (完整、差異和交易記錄)。

主要和次要複本 (分別是 SQL-HA1 和 SQL-HA2) 會製作儲存在可用性群組的其合作夥伴上的備份。

交易記錄傳送設定於次要複本上,以將生產資料庫上備份的影響降到最低。 這些交易記錄會寫入至內部部署檔案伺服器 (FIL1) 上的共用資料夾。 Windows Server 分散式檔案系統 (DFS) 複寫服務會將交易記錄從 FIL1 複製至 AZ-FIL1。 AZ-FIL1 上的交易記錄會還原到 AZ-SQL-HA1 (復原伺服器陣列中可用性群組的主要複本)。

設定和驗證記錄傳送所需的步驟

下列清單緊縮和彙總設定、執行和驗證記錄傳送所需的步驟:

備份資料庫。

將完整和差異備份設定到本機資料夾,也一併設定到檔案伺服器上的共用資料夾。

驗證已對本機資料夾和共用資料夾進行備份。

設定和測試分散式檔案系統 (DFS) 複寫。

在檔案伺服器的共用資料夾上,建立命名空間和複寫以在內部部署與 Azure 伺服器陣列之間傳送交易記錄和備份檔案。

在執行記錄傳送之後,驗證所有傳送。

設定和測試記錄傳送。

使用下列指令碼,在主要資料庫伺服器上設定記錄傳送: Primary-Logshippingsetupparameter 。 此指令碼會建立備份工作,並排程它們進行記錄傳送,然後啟動工作。

SET NOCOUNT ON

USE MSDB

GO

--@PrimServer : Primary Server name

--@SecServer : DR/Secondary Server Name

--@SecInstance : DR/Secondary FQDN

--@Domain : Domain Name

--@BkpDrive : Production Backup server Name

--@DBName : DatabaseName

DECLARE @LS_BackupJobIdAS uniqueidentifier, @LS_PrimaryIdAS uniqueidentifier , @SP_Add_RetCode As int

DECLARE @Time as nvarchar(10),@SecInstance as nvarchar(250), @PrimServer as nvarchar(50),@SecServer as nvarchar(50),

@Domain as nvarchar(50),@DBName as nvarchar(max),@BkpDrive as nvarchar(250),@CMD as nvarchar(max),@Counter int

----------------------------------------------------------------------------

IF OBJECT_ID ('tempdb.DBO.#LogShipping','U') IS NOT NULL DROP TABLE #LogShipping

Create table #LogShipping ( LSDBs nvarchar(max))

Set @PrimServer ='SQL1'

Set @SecServer ='SQL2'

Set @SecInstance ='SQL2.corp.adventureworks.com'

Set @Domain ='corp.adventureworks.com'

Set @BkpDrive ='FS1.corp.adventureworks.com'

Set @DBName = 'Social_DB'

Set @Time = '0130'

SET @DBName = UPPER(REPLACE(@DBName, ' ', ''))

SET @DBName = '''' + REPLACE(@DBName, ',', ''', ''') + ''''

Set @CMD = ' Select ' +

'''DECLARE @SP_Add_RetCode As int, @LS_BackupJobIdAS uniqueidentifier, @LS_PrimaryIdAS uniqueidentifier

EXEC @SP_Add_RetCode = master.dbo.sp_add_log_shipping_primary_database ' + CHAR(10) +

'@database = '''''' + db.Name + ''''''' + CHAR(10) +

',@backup_directory = ''''\\' + @BkpDrive + '\LS\' + ''' + db.Name + ''''' + '''' + CHAR(10) +

',@backup_share = ' + '''''\\' + @BkpDrive + '\LS\' + ''' + db.Name + ''''' + '''' + CHAR(10) +

',@backup_job_name = ''''' + 'LSBackup_' + ''' + db.Name + ''''' + '''' + CHAR(10) +

',@backup_retention_period = 4320

,@backup_compression = 1

,@backup_threshold = 180

,@threshold_alert_enabled = 1

,@history_retention_period = 5760

,@backup_job_id = @LS_BackupJobId OUTPUT

,@primary_id = @LS_PrimaryId OUTPUT

,@overwrite = 1 ' +

'IF (@@ERROR = 0 AND @SP_Add_RetCode = 0)

BEGIN

DECLARE @LS_BackUpScheduleUIDAs uniqueidentifier ,@LS_BackUpScheduleIDAS int

EXEC msdb.dbo.sp_add_schedule

@schedule_name = ''''' + 'LSBackupSchedule_'+ @PrimServer + '_' + ''' + db.Name + ''''' + '''' + CHAR(10) +

',@enabled = 1

,@freq_type = 4

,@freq_interval = 1

,@freq_subday_type = 4

,@freq_subday_interval = 13

,@freq_recurrence_factor = 0

,@active_start_date = 20090506

,@active_end_date = 99991231

,@active_start_time = ' + @Time + CHAR(10) +

',@active_end_time = 235900

,@schedule_uid = @LS_BackUpScheduleUID OUTPUT

,@schedule_id = @LS_BackUpScheduleID OUTPUT

EXEC msdb.dbo.sp_attach_schedule @job_id = @LS_BackupJobId ,@schedule_id = @LS_BackUpScheduleID

EXEC msdb.dbo.sp_update_job @job_id = @LS_BackupJobId ,@enabled = 1

END

EXEC master.dbo.sp_add_log_shipping_alert_job

EXEC master.dbo.sp_add_log_shipping_primary_secondary

@primary_database = ''' + ''''' + db.Name + ''''' + '''' + CHAR(10) +

',@secondary_server = ''''' + @SecInstance + '''''' + CHAR(10) +

',@secondary_database = ''' + ''''' + db.Name + ''''' + '''' + CHAR(10) +

',@overwrite = 1 ''' +

' [LSDBs] FROM sys.databases db where name in (' + @DBName + ')' +

'and db.name not in (''master'',''model'',''msdb'',''tempdb'',''metricsops'',''periscope'' )

and Not (exists (select lss.Secondary_database from msdb.dbo.log_shipping_Secondary_databases lss where db.Name = lss.Secondary_database)

or exists (select lsp.primary_database from msdb.dbo.log_shipping_primary_databases lsp where db.Name = lsp.primary_database)

)'

Insert #LogShipping (LSDBs)

Exec ( @CMD)

Set @Counter = @@rowcount

While (@counter > 0)

Begin

select top 1 @CMD = LSDBs from #LogShipping

exec sp_executesql @CMD

set @counter = @counter - 1

delete top (1) from #LogShipping

End

IF OBJECT_ID ('tempdb.DBO.#LogShipping','U') IS NOT NULL DROP TABLE #LogShipping

-- ****** End: Script to be run at Primary: [DB1-PSMSQL-01] ******

- 使用下列指令碼,在次要資料庫伺服器上設定記錄傳送: Secondary-Logshippingsetupparameter scripts 。 在實驗室環境中,次要資料庫伺服器位於 Azure 的復原伺服器陣列中。

-- ****** Begin: Script to be run at Secondary: 9.3 BUILD******

SET NOCOUNT ON

USE MSDB

GO

--@PrimServer : Primary Server name

--@SecServer : DR/Secondary Server Name

--@SecInstance : DR/Secondary FQDN

--@Domain : Domain Name

--@PrimaryBkpDrive : Production Backup server Name

--@BkpDrive : Secondary Backup server Name

--@DBName : DatabaseName

DECLARE @LS_BackupJobIdAS uniqueidentifier, @LS_PrimaryIdAS uniqueidentifier , @SP_Add_RetCode As int

DECLARE @Time as nvarchar(10),@SecInstance as nvarchar(250), @PrimServer as nvarchar(50),@SecServer as nvarchar(50),

@Domain as nvarchar(50),@DBName as nvarchar(max),@PrimaryBkpDrive as nvarchar(250),@BkpDrive as nvarchar(250),@CMD as nvarchar(max),@CMD2 as nvarchar(max),@Counter int

DECLARE @Delimeter char(1),@DB nvarchar(200), @StartPos int, @Length int

IF OBJECT_ID ('tempdb.DBO.#LogShipping','U') IS NOT NULL DROP TABLE #LogShipping

Create table #LogShipping ( LSDBs nvarchar(max))

IF OBJECT_ID ('tempdb.DBO.#DBs','U') IS NOT NULL DROP TABLE #DBs

Create TABLE #DBs (Name nvarchar(200))

Set @PrimServer ='az-sql-ha1'

Set @SecServer =' az-sql-ha2'

Set @SecInstance ='SQL1.corp.adventureworks.com'

Set @Domain =' corp.adventureworks.com '

SET @PrimaryBkpDrive = 'fs1.corp.adventureworks.com'

Set @BkpDrive =' az-sp-fs.corp.adventureworks.com '

Set @DBName = 'Social_DB'

Set @Time = '0130'

--Parsing Function

SET @Delimeter = ','

WHILE LEN(@DBName) > 0

BEGIN

SET @StartPos = CHARINDEX(@Delimeter, @DBName)

IF @StartPos < 0 SET @StartPos = 0

SET @Length = LEN(@DBName) - @StartPos - 1

IF @Length < 0 SET @Length = 0

IF @StartPos > 0

BEGIN

SET @DB = Rtrim(Ltrim(SUBSTRING(@DBName, 1, @StartPos - 1)))

SET @DBName = SUBSTRING(@DBName, @StartPos + 1, LEN(@DBName) - @StartPos)

END

ELSE

BEGIN

SET @DB = Rtrim(Ltrim(@DBName))

SET @DBName = ''

END

INSERT #DBs (Name) VALUES(@DB)

END

--SET @DBName = UPPER(REPLACE(@DBName, ' ', ''))

--SET @DBName = '''' + REPLACE(@DBName, ',', ''', ''') + ''''

Set @CMD = 'Select ' +

''' DECLARE @LS_Secondary__CopyJobId AS uniqueidentifier, @LS_Secondary__RestoreJobId AS uniqueidentifier ,@LS_Secondary__SecondaryId AS uniqueidentifier , @LS_Add_RetCode As int ,@LS_Add_RetCode2 As int

DECLARE @LS_SecondaryCopyJobScheduleUIDAs uniqueidentifier ,@LS_SecondaryCopyJobScheduleIDAS int, @LS_SecondaryRestoreJobScheduleUIDAs uniqueidentifier ,@LS_SecondaryRestoreJobScheduleIDAS int

EXEC @LS_Add_RetCode = master.dbo.sp_add_log_shipping_secondary_primary

@primary_server = ''''' + @PrimServer + ''''''+ CHAR(10) +

',@primary_database = '' + ' + ''''''''' + db.Name + ''''''''' + CHAR(10) +

' + '',@backup_source_directory = ' + '''''\\' + @PrimaryBkpDrive + '\LS\'' + db.Name + ''''''' + CHAR(10) +

' ,@backup_destination_directory = ' + '''''\\' + @BkpDrive + '\LS\'' + db.Name + ''''''' + CHAR(10) +

',@copy_job_name = ''''LSCopy_DB1-PSMSQL-01_'' + db.Name + ''''''' + CHAR(10) +

',@restore_job_name = ''''LSRestore_'+ @PrimServer + '_'' + db.Name + ''''''' + CHAR(10) +

',@file_retention_period = 4320

,@overwrite = 1

,@copy_job_id = @LS_Secondary__CopyJobId OUTPUT

,@restore_job_id = @LS_Secondary__RestoreJobId OUTPUT

,@secondary_id = @LS_Secondary__SecondaryId OUTPUT

IF (@@ERROR = 0 AND @LS_Add_RetCode = 0)

BEGIN

EXEC msdb.dbo.sp_add_schedule

@schedule_name =''''DefaultCopyJobSchedule''''

,@enabled = 1

,@freq_type = 4

,@freq_interval = 1

,@freq_subday_type = 4

,@freq_subday_interval = 15

,@freq_recurrence_factor = 0

,@active_start_date = 20090506

,@active_end_date = 99991231

,@active_start_time = ' + @Time + '

,@active_end_time = 235900

,@schedule_uid = @LS_SecondaryCopyJobScheduleUID OUTPUT

,@schedule_id = @LS_SecondaryCopyJobScheduleID OUTPUT

EXEC msdb.dbo.sp_attach_schedule

@job_id = @LS_Secondary__CopyJobId

,@schedule_id = @LS_SecondaryCopyJobScheduleID

EXEC msdb.dbo.sp_add_schedule

@schedule_name =''''DefaultRestoreJobSchedule''''

,@enabled = 1

,@freq_type = 4

,@freq_interval = 1

,@freq_subday_type = 4

,@freq_subday_interval = 15

,@freq_recurrence_factor = 0

,@active_start_date = 20090506

,@active_end_date = 99991231

,@active_start_time = ' + @Time + '

,@active_end_time = 235900

,@schedule_uid = @LS_SecondaryRestoreJobScheduleUID OUTPUT

,@schedule_id = @LS_SecondaryRestoreJobScheduleID OUTPUT

EXEC msdb.dbo.sp_attach_schedule

@job_id = @LS_Secondary__RestoreJobId

,@schedule_id = @LS_SecondaryRestoreJobScheduleID

END

IF (@@ERROR = 0 AND @LS_Add_RetCode = 0)

BEGIN

EXEC @LS_Add_RetCode2 = master.dbo.sp_add_log_shipping_secondary_database

@secondary_database = ' + ''''''' + db.Name + ''''''' + CHAR(10) + '

,@primary_server = ''''' + @PrimServer + '''''

,@primary_database = '+ ''''''' + db.Name + ''''''' + CHAR(10) +

',@restore_delay = 0

,@restore_mode = 1

,@disconnect_users= 1

,@restore_threshold = 180

,@threshold_alert_enabled = 1

,@history_retention_period= 5760

,@overwrite = 1

END

IF (@@error = 0 AND @LS_Add_RetCode = 0)

BEGIN

EXEC msdb.dbo.sp_update_job @job_id = @LS_Secondary__CopyJobId ,@enabled = 0

EXEC msdb.dbo.sp_update_job @job_id = @LS_Secondary__RestoreJobId ,@enabled = 1

END ''' + '[LSDBs] FROM #DBs db'

--Print @CMD

Insert #LogShipping (LSDBs)

Exec ( @CMD)

Set @Counter = @@rowcount

While (@counter > 0)

Begin

select top 1 @CMD = LSDBs from #LogShipping

exec sp_executesql @CMD

set @counter = @counter - 1

delete top (1) from #LogShipping

End

IF OBJECT_ID ('tempdb.DBO.#LogShipping','U') IS NOT NULL DROP TABLE #LogShipping

IF OBJECT_ID ('tempdb.DBO.#DBs','U') IS NOT NULL DROP TABLE #DBs

-- ****** End: Script to be run at Secondary: 9.3 Build ******

- 驗證將交易記錄傳送至共用,而且 DFS 將這些記錄複寫至 Azure 檔案伺服器上的共用。 在 SQL Server 中開啟 [作業活動監視器],驗證已順利傳送交易記錄。 在生產環境中的檔案伺服器和 Azure 伺服器陣列上 開啟共用資料夾,驗證 DFS 正在傳送交易記錄。

請參閱

概念

設定 SharePoint Server 的SQL Server Always On可用性群組