What is Azure Data Factory?

Applies to: Azure Data Factory


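Tip

Try Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!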

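In the world of big data, raw, unorganized data is often stored in relational, non-relational, and other storage systems. On its own, however, raw data lacks the context and meaning needed to give analysts, data scientists, or business decision makers meaningful insights.

Big data requires a service that can orchestrate and operationalize processes to refine these enormous stores of raw data into actionable business insights. Azure Data Factory is a managed cloud service built for complex hybrid extract-transform-load (ETL), extract-load-transform (ELT), and data integration projects.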

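Features of Azure Data Factory

Data compression: During the Copy activity, data can be compressed and the compressed data written to the target data source. This helps optimize bandwidth usage when copying data.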
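A minimal sketch of where compression is typically declared: in ADF's JSON authoring format, the codec is a property of the format-based sink dataset that the Copy activity writes to, not of the activity itself. The Python below only builds that definition as a dictionary. The dataset, linked service, container, and folder names are hypothetical, and the `compressionCodec`/`compressionLevel` property names reflect our reading of the DelimitedText dataset schema, so verify them against the connector reference before use.

```python
import json


def compressed_csv_dataset(name, linked_service, container, folder, codec="gzip"):
    """Return a DelimitedText dataset definition whose files are stored compressed.

    All names passed in are hypothetical; property names follow ADF's JSON
    authoring format as we understand it.
    """
    return {
        "name": name,
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,
                    "folderPath": folder,
                },
                "columnDelimiter": ",",
                "firstRowAsHeader": True,
                # Codec applied when a Copy activity writes files to this dataset.
                "compressionCodec": codec,
                "compressionLevel": "Optimal",
            },
        },
    }


sink = compressed_csv_dataset("CompressedLogsSink", "BlobStorageLS", "curated", "gamelogs")
print(json.dumps(sink, indent=2))
```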

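Extensive connectivity support for different data sources: Azure Data Factory provides broad connectivity support for connecting to different data sources. This is useful when you want to pull data from, or write data to, different data sources.

Custom event triggers: Azure Data Factory lets you automate data processing by using custom event triggers. This feature lets you run a specific action automatically when a specific event occurs.

Data preview and validation: During the Copy activity, tools are provided for previewing and validating data. This feature helps you make sure that data is copied and written to the target data source correctly.

Customizable data flows: Azure Data Factory lets you create customizable data flows. This feature lets you add custom actions or data-processing steps.

Integrated security: Azure Data Factory provides integrated security features, such as Azure Active Directory integration and role-based access control, to control access to data flows. These features increase the security of data processing and help protect your data.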

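Usage scenarios

For example, imagine a gaming company that collects petabytes of game logs produced by games in the cloud. The company wants to analyze these logs to gain insights into customer preferences, demographics, and usage behavior. It also wants to identify up-sell and cross-sell opportunities, develop compelling new features, drive business growth, and provide a better experience to its customers.

To analyze these logs, the company needs reference data, such as customer information, game information, and marketing campaign information, that is kept in an on-premises data store. The company wants to combine this on-premises data with additional log data that it has in a cloud data store.

To extract insights, it wants to process the joined data by using a Spark cluster in the cloud (Azure HDInsight) and publish the transformed data into a cloud data warehouse such as Azure Synapse Analytics, so that reports can easily be built on top of it. The company wants to automate this workflow and to monitor and manage it on a daily schedule. It also wants the workflow to run when files land in a Blob storage container.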

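Azure Data Factory is the platform that solves such data scenarios. It's a cloud-based ETL and data integration service that lets you create data-driven workflows for orchestrating data movement and transforming data at scale. With Azure Data Factory, you can create and schedule data-driven workflows (called pipelines) that ingest data from disparate data stores. You can build complex ETL processes that transform data visually with data flows, or by using compute services such as Azure HDInsight Hadoop, Azure Databricks, and Azure SQL Database.

You can then publish the transformed data to data stores such as Azure Synapse Analytics for business intelligence (BI) applications to consume. Ultimately, Azure Data Factory lets you organize raw data into meaningful data stores and data lakes that support better business decisions.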

How does it work?

Data Factory contains a series of interconnected systems that together give data engineers a complete end-to-end platform.

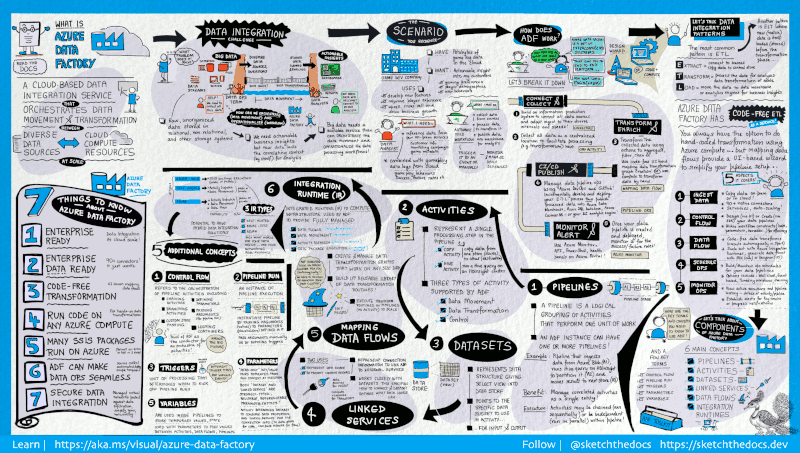

The preceding image is a visual guide that gives a detailed overview of the complete Data Factory architecture.


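Connect and collect

Enterprises have data of many types located in disparate sources: on-premises and in the cloud, structured, unstructured, and semi-structured, all arriving at different intervals and speeds.

The first step in building an information production system is to connect to all the required sources of data and processing, such as software-as-a-service (SaaS) services, databases, file shares, and FTP web services. The next step is to move the data as needed to a centralized location for subsequent processing.

Without Data Factory, enterprises must build custom data movement components or write custom services to integrate these data sources and processing. Integrating and maintaining such systems is expensive and difficult. In addition, these systems often lack the enterprise-grade monitoring, alerting, and controls that a fully managed service can offer.

With Data Factory, you can use the Copy activity in a data pipeline to move data from both on-premises and cloud source data stores to a centralized data store in the cloud for further analysis. For example, you can collect data in Azure Data Lake Storage and transform it later by using the Azure Data Lake Analytics compute service. You can also collect data in Azure Blob storage and transform it later by using an Azure HDInsight Hadoop cluster.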
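To make the Copy activity concrete, here is a hedged sketch of a pipeline containing one Copy activity, expressed as the kind of JSON document Data Factory stores for a pipeline. The pipeline, activity, and dataset names are hypothetical, and the `DelimitedTextSource`/`DelimitedTextSink` types assume text files on both ends; other stores use different source and sink types.

```python
import json


def copy_pipeline(pipeline_name, source_dataset, sink_dataset):
    """Return a pipeline definition with a single Copy activity.

    The activity reads from `source_dataset` and writes to `sink_dataset`;
    both are referenced by name rather than embedded.
    """
    copy_activity = {
        "name": "CopyToCentralStore",
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "DelimitedTextSource"},
            "sink": {"type": "DelimitedTextSink"},
        },
    }
    return {"name": pipeline_name, "properties": {"activities": [copy_activity]}}


pipeline = copy_pipeline("IngestGameLogs", "OnPremCustomerData", "LakeCustomerData")
print(json.dumps(pipeline, indent=2))
```

Because datasets are referenced by name, the same pipeline shape can, in principle, move data between other supported stores once matching datasets and linked services are defined.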

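Transform and enrich

After data is present in a centralized data store in the cloud, process or transform the collected data by using ADF mapping data flows. Data flows let data engineers build and maintain data transformation graphs that run on Spark, without needing to understand Spark clusters or Spark programming.

If you prefer to code transformations by hand, ADF supports external activities for running your transformations on compute services such as HDInsight Hadoop, Spark, Data Lake Analytics, and Machine Learning.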

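CI/CD and publish

Data Factory offers full support for CI/CD of your data pipelines by using Azure DevOps and GitHub. This lets you incrementally develop and deliver your ETL processes before publishing the finished product. After the raw data has been refined into a business-ready, consumable form, load it into Azure Data Warehouse, Azure SQL Database, Azure Cosmos DB, or whichever analytics engine your business users can point to from their business intelligence tools.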

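Monitor

After you've built and deployed your data integration pipeline and it's delivering business value from refined data, monitor the scheduled activities and pipelines for success and failure rates. Azure Data Factory has built-in support for pipeline monitoring through Azure Monitor, the API, PowerShell, Azure Monitor logs, and health panels in the Azure portal.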
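Computing success and failure rates is simple arithmetic once run records have been retrieved through one of those channels. The sketch below assumes each record is a dictionary with a `status` field using values such as `Succeeded`, `Failed`, `Cancelled`, or `InProgress`; the sample records are made up for illustration, and excluding unfinished runs from the denominator is a design choice of this sketch, not a Data Factory rule.

```python
from collections import Counter

FINISHED = ("Succeeded", "Failed", "Cancelled")


def run_rates(runs):
    """Compute success and failure rates over finished pipeline runs.

    `runs` is a list of dicts with a "status" key, a simplified stand-in for
    what the monitoring APIs return. Queued or in-progress runs are skipped.
    """
    finished = [r for r in runs if r["status"] in FINISHED]
    total = len(finished)
    if total == 0:
        return {"success_rate": None, "failure_rate": None}
    counts = Counter(r["status"] for r in finished)
    return {
        "success_rate": counts["Succeeded"] / total,
        "failure_rate": counts["Failed"] / total,
    }


sample = [
    {"runId": "r1", "status": "Succeeded"},
    {"runId": "r2", "status": "Failed"},
    {"runId": "r3", "status": "Succeeded"},
    {"runId": "r4", "status": "InProgress"},
]
print(run_rates(sample))  # {'success_rate': 0.6666666666666666, 'failure_rate': 0.3333333333333333}
```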

Top-level concepts

An Azure subscription might have one or more Azure Data Factory instances (or data factories). Azure Data Factory is composed of the following key components:

- Pipelines

- Activities

- Datasets

- Linked services

- Data flows

- Integration Runtimes

Together, these components provide the platform on which you compose data-driven workflows with steps to move and transform data.

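Pipeline

A data factory might have one or more pipelines. A pipeline is a logical grouping of activities that performs a unit of work. Together, the activities in a pipeline perform a task. For example, a pipeline can contain a group of activities that ingests data from an Azure blob and then runs a Hive query on an HDInsight cluster to partition the data.

The benefit is that a pipeline lets you manage its activities as a set instead of managing each one individually. The activities in a pipeline can be chained together to run sequentially, or they can run independently in parallel.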
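The difference between chained and independent activities can be modeled with the `dependsOn` lists that activities carry in a pipeline definition. The sketch below groups activities into waves by using Kahn's topological-sort algorithm: every activity in a wave has its dependencies satisfied and could run in parallel with the others. It's a simplified model, not Data Factory's scheduler, and it treats every dependency as "must finish first" regardless of its condition; the activity names are hypothetical and echo the Blob-plus-Hive example above.

```python
def execution_waves(activities):
    """Group activities into waves that could run in parallel.

    Each activity is a dict with a "name" and an optional ADF-style
    "dependsOn" list. An activity joins a wave once every activity it
    depends on has finished (Kahn's algorithm, processed level by level).
    """
    deps = {
        a["name"]: {d["activity"] for d in a.get("dependsOn", [])}
        for a in activities
    }
    done, waves = set(), []
    while len(done) < len(deps):
        ready = sorted(n for n, d in deps.items() if n not in done and d <= done)
        if not ready:
            raise ValueError("cycle or unknown activity in dependsOn")
        waves.append(ready)
        done.update(ready)
    return waves


activities = [
    {"name": "IngestFromBlob"},
    {"name": "IngestReferenceData"},
    {
        "name": "PartitionWithHive",
        "dependsOn": [
            {"activity": "IngestFromBlob", "dependencyConditions": ["Succeeded"]}
        ],
    },
]
print(execution_waves(activities))
# [['IngestFromBlob', 'IngestReferenceData'], ['PartitionWithHive']]
```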

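Mapping data flows

Create and manage graphs of data transformation logic that you can use to transform data of any size. You can build up a reusable library of data transformation routines and run those processes in a scaled-out manner from your ADF pipelines. Data Factory runs your logic on a Spark cluster that spins up and spins down as you need it. You never have to manage or maintain clusters.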
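A pipeline invokes a mapping data flow through an Execute Data Flow activity. The hedged sketch below builds that activity as a dictionary. The data flow name is hypothetical, and the `compute` block (core count and compute type for the Spark cluster Data Factory provisions) reflects our understanding of the activity's JSON and should be checked against the current reference.

```python
def execute_data_flow_activity(data_flow_name, core_count=8):
    """Return a pipeline activity that runs a mapping data flow.

    Data Factory provisions the Spark compute described under "compute"
    for the run; the caller never manages a cluster directly.
    """
    return {
        "name": f"Run{data_flow_name}",
        "type": "ExecuteDataFlow",
        "typeProperties": {
            "dataFlow": {"referenceName": data_flow_name, "type": "DataFlowReference"},
            "compute": {"computeType": "General", "coreCount": core_count},
        },
    }


activity = execute_data_flow_activity("CleanGameLogs")
print(activity["name"])  # RunCleanGameLogs
```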

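Activity

Activities represent a processing step in a pipeline. For example, you might use a Copy activity to copy data from one data store to another. Similarly, you might use a Hive activity, which runs a Hive query on an Azure HDInsight cluster, to transform or analyze your data. Data Factory supports three types of activities: data movement activities, data transformation activities, and control activities.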

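Datasets

Datasets represent data structures within the data stores. They simply point to or reference the data you want to use in your activities as inputs or outputs.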

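Linked services

Linked services are much like connection strings: they define the connection information that Data Factory needs to connect to external resources. Think of it this way: a linked service defines the connection to the data source, and a dataset represents the structure of the data. For example, an Azure Storage linked service specifies a connection string to connect to the Azure Storage account, and an Azure blob dataset specifies the blob container and the folder that contains the data.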
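The split between connection and structure shows up directly in the definitions: the linked service holds the connection string, while the dataset points at a container and folder and refers to the linked service by name. The sketch below is illustrative only. All names are hypothetical, the connection string contains placeholders rather than a real account, and in practice a Key Vault reference or managed identity is usually preferable to an inline secret.

```python
def blob_linked_service(name, connection_string):
    """Connection information only: how to reach the storage account."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


def blob_dataset(name, linked_service, container, folder):
    """Data structure only: which container and folder, reached via the linked service."""
    return {
        "name": name,
        "properties": {
            "type": "DelimitedText",
            # The dataset refers to the linked service by name instead of copying its secret.
            "linkedServiceName": {
                "referenceName": linked_service["name"],
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,
                    "folderPath": folder,
                }
            },
        },
    }


storage = blob_linked_service(
    "GameLogsStorage",
    "DefaultEndpointsProtocol=https;AccountName=<account>;AccountKey=<key>",
)
raw_logs = blob_dataset("RawGameLogs", storage, "raw", "gamelogs")
```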

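Linked services are used for two purposes in Data Factory:

To represent a data store, including, but not limited to, a SQL Server database, an Oracle database, a file share, or an Azure Blob storage account. For a list of supported data stores, see the Copy activity article.

To represent a compute resource that can host the execution of an activity. For example, the HDInsightHive activity runs on an HDInsight Hadoop cluster. For a list of transformation activities and supported compute environments, see the Transform data article.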
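Both roles can appear in a single transformation activity: the HDInsightHive activity names the HDInsight cluster's linked service as the place it runs, while a second, storage linked service locates the Hive script. The sketch below assumes the `scriptPath` and `scriptLinkedService` properties as we understand the activity's JSON; the linked service names and the script path are hypothetical.

```python
def hive_activity(name, cluster_linked_service, script_linked_service, script_path):
    """Return an HDInsightHive activity definition.

    Two linked services play different roles here: one is the compute
    (the HDInsight cluster), the other is the data store holding the script.
    """
    return {
        "name": name,
        "type": "HDInsightHive",
        # Compute linked service: where the Hive query executes.
        "linkedServiceName": {
            "referenceName": cluster_linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            # Data store linked service: where the .hql script file is stored.
            "scriptLinkedService": {
                "referenceName": script_linked_service,
                "type": "LinkedServiceReference",
            },
            "scriptPath": script_path,
        },
    }


partition = hive_activity(
    "PartitionWithHive", "HDInsightCluster", "GameLogsStorage", "scripts/partition_logs.hql"
)
```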

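Integration Runtime

In Data Factory, an activity defines the action to be performed, and a linked service defines a target data store or compute service. An integration runtime provides the bridge between the activity and linked services. It's referenced by the linked service or activity, and it provides the compute environment where the activity runs or from which it's dispatched. This way, the activity can be performed in the region closest to the target data store or compute service, in the most performant way, while meeting security and compliance needs.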
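For on-premises sources, such as the reference data in the gaming scenario, the integration runtime is typically attached to the linked service through a `connectVia` reference. The sketch below assumes a self-hosted integration runtime named `OnPremIR` that has already been installed and registered; that name, the linked service name, and the connection string are hypothetical placeholders. When `connectVia` is omitted, Data Factory uses its default Azure integration runtime.

```python
def sql_server_linked_service(name, connection_string, integration_runtime=None):
    """Return a SQL Server linked service, optionally routed through a named IR."""
    properties = {
        "type": "SqlServer",
        "typeProperties": {"connectionString": connection_string},
    }
    if integration_runtime is not None:
        # connectVia tells Data Factory which integration runtime reaches this store.
        properties["connectVia"] = {
            "referenceName": integration_runtime,
            "type": "IntegrationRuntimeReference",
        }
    return {"name": name, "properties": properties}


customers = sql_server_linked_service(
    "OnPremCustomerDb",
    "Server=<host>;Database=<db>;Integrated Security=True",
    integration_runtime="OnPremIR",
)
```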

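Triggers

Triggers represent the unit of processing that determines when a pipeline run needs to be kicked off. There are different types of triggers for different types of events.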
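The gaming scenario calls for two kinds of triggers: one on a daily schedule and one that fires when files land in a Blob storage container. The sketch below builds both definitions. The trigger, pipeline, and parameter names, the start time, and the storage account scope are hypothetical placeholders, and the property names (`recurrence`, `blobPathBeginsWith`, `events`, `scope`) reflect our understanding of the schedule and storage event trigger JSON.

```python
def daily_trigger(name, pipeline_name, start_time):
    """Schedule trigger: start `pipeline_name` once a day from `start_time` (UTC)."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {"referenceName": pipeline_name, "type": "PipelineReference"},
                    # Argument for a hypothetical runDate parameter on the pipeline.
                    "parameters": {"runDate": "@trigger().scheduledTime"},
                }
            ],
        },
    }


def blob_created_trigger(name, pipeline_name, storage_account_id, path_prefix):
    """Storage event trigger: start `pipeline_name` when a blob is created under a prefix."""
    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                "scope": storage_account_id,
                "blobPathBeginsWith": path_prefix,
                "events": ["Microsoft.Storage.BlobCreated"],
            },
            "pipelines": [
                {"pipelineReference": {"referenceName": pipeline_name, "type": "PipelineReference"}}
            ],
        },
    }


daily = daily_trigger("DailyAt2am", "IngestGameLogs", "2024-01-01T02:00:00Z")
on_arrival = blob_created_trigger(
    "OnNewGameLogs",
    "IngestGameLogs",
    "/subscriptions/<subscription-id>/resourceGroups/<rg>/providers/Microsoft.Storage/storageAccounts/<account>",
    "/raw/blobs/gamelogs/",
)
```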

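Pipeline runs

A pipeline run is an instance of a pipeline execution. Pipeline runs are typically instantiated by passing arguments to the parameters defined in the pipeline. The arguments can be passed manually or within the trigger definition.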

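Parameters

Parameters are key-value pairs of read-only configuration. Parameters are defined in the pipeline. The arguments for the defined parameters are passed during execution from the run context, which is created by a trigger or by a manually executed pipeline. Activities within the pipeline consume the parameter values.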
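The binding described above can be sketched in a few lines. This is a deliberately simplified model, not Data Factory's runtime: it binds a run's arguments to declared parameters (falling back to a `defaultValue` when one is declared), returns them as a read-only mapping, and resolves only the whole-value form `@pipeline().parameters.<name>`. Data Factory's expression language supports much more, including `@{...}` string interpolation and functions. The parameter names are hypothetical.

```python
import re
from types import MappingProxyType

# Matches a value that is exactly one parameter reference, e.g. "@pipeline().parameters.inputFolder".
PARAM_REF = re.compile(r"@pipeline\(\)\.parameters\.(\w+)")


def start_run(parameter_defs, arguments):
    """Bind arguments to declared parameters and return a read-only mapping."""
    undeclared = set(arguments) - set(parameter_defs)
    if undeclared:
        raise ValueError(f"undeclared parameters: {sorted(undeclared)}")
    bound = {}
    for name, spec in parameter_defs.items():
        if name in arguments:
            bound[name] = arguments[name]
        elif "defaultValue" in spec:
            bound[name] = spec["defaultValue"]
        else:
            raise ValueError(f"no argument or default for parameter {name!r}")
    # MappingProxyType rejects assignment, mirroring that parameters are read-only.
    return MappingProxyType(bound)


def resolve(value, params):
    """Replace a whole-value parameter reference with its bound value; pass other values through."""
    match = PARAM_REF.fullmatch(value) if isinstance(value, str) else None
    return params[match.group(1)] if match else value


declared = {"inputFolder": {"type": "String"}, "maxRows": {"type": "Int", "defaultValue": 1000}}
params = start_run(declared, {"inputFolder": "raw/gamelogs"})
print(resolve("@pipeline().parameters.inputFolder", params))  # raw/gamelogs
print(resolve("@pipeline().parameters.maxRows", params))  # 1000
```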

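A dataset is a strongly typed parameter and a reusable, referenceable entity. An activity can reference datasets and can consume the properties defined in the dataset definition.

A linked service is also a strongly typed parameter; it contains the connection information to either a data store or a compute environment. It's also a reusable, referenceable entity.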

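Control flow

Control flow is the orchestration of pipeline activities. It includes chaining activities in a sequence, branching, defining parameters at the pipeline level, and passing arguments when the pipeline is invoked on demand or from a trigger. It also includes custom-state passing and looping containers, that is, For-each iterators.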
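A For-each container in a pipeline definition wraps inner activities and iterates over an expression, with `@item()` referring to the current element. The sketch below builds one that copies each file named in a hypothetical `fileNames` array parameter; it assumes the source dataset is itself parameterized with a hypothetical `fileName` parameter. Setting `isSequential` to false lets iterations run in parallel, with `batchCount` capping how many run at once.

```python
import json


def for_each_file_copy(items_parameter, source_dataset, sink_dataset, sequential=False, batch_count=4):
    """Return a ForEach activity that runs one Copy activity per array element."""
    inner_copy = {
        "name": "CopyOneFile",
        "type": "Copy",
        "inputs": [
            {
                "referenceName": source_dataset,
                "type": "DatasetReference",
                # @item() is the current element of the array being iterated.
                "parameters": {"fileName": "@item()"},
            }
        ],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "DelimitedTextSource"},
            "sink": {"type": "DelimitedTextSink"},
        },
    }
    type_properties = {
        "items": {"value": f"@pipeline().parameters.{items_parameter}", "type": "Expression"},
        "isSequential": sequential,
        "activities": [inner_copy],
    }
    if not sequential:
        # Upper bound on how many iterations run at the same time.
        type_properties["batchCount"] = batch_count
    return {"name": "ForEachFile", "type": "ForEach", "typeProperties": type_properties}


loop = for_each_file_copy("fileNames", "RawLogFile", "CuratedLogFile")
print(json.dumps(loop, indent=2))
```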

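Variables

Variables can be used inside pipelines to store temporary values. They can also be used together with parameters to pass values between pipelines, data flows, and other activities.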
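As a hedged sketch of that interplay, the pipeline below declares a variable, sets it with a Set Variable activity, and then passes it to a child pipeline's parameter through an Execute Pipeline activity. The pipeline, variable, and parameter names are hypothetical; `pipeline().RunId`, `concat(...)`, and `variables(...)` are expression-language features as we understand them.

```python
import json


def parent_pipeline(child_pipeline):
    """Return a pipeline that stores a value in a variable and hands it to a child pipeline."""
    set_label = {
        "name": "SetRunLabel",
        "type": "SetVariable",
        "typeProperties": {
            "variableName": "runLabel",
            "value": "@concat('gamelogs-', pipeline().RunId)",
        },
    }
    call_child = {
        "name": "RunChild",
        "type": "ExecutePipeline",
        # Runs only after the variable has been set successfully.
        "dependsOn": [{"activity": "SetRunLabel", "dependencyConditions": ["Succeeded"]}],
        "typeProperties": {
            "pipeline": {"referenceName": child_pipeline, "type": "PipelineReference"},
            # The variable's current value becomes the argument for the child's "label" parameter.
            "parameters": {"label": "@variables('runLabel')"},
            "waitOnCompletion": True,
        },
    }
    return {
        "name": "ParentPipeline",
        "properties": {
            "variables": {"runLabel": {"type": "String"}},
            "activities": [set_label, call_child],
        },
    }


parent = parent_pipeline("PublishToSynapse")
print(json.dumps(parent, indent=2))
```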

Related content

Here are important next-step documents to explore: