使用對應資料流程安全地轉換資料

適用於: Azure Data Factory

Azure Data Factory  Azure Synapse Analytics

Azure Synapse Analytics

提示

試用 Microsoft Fabric 中的 Data Factory,這是適用於企業的全方位分析解決方案。 Microsoft Fabric 涵蓋從資料移動到資料科學、即時分析、商業智慧和報告的所有項目。 了解如何免費開始新的試用!

如果您不熟悉 Azure Data Factory,請參閱 Azure Data Factory 簡介。

在本教學課程中,您將使用 Data Factory 使用者介面 (UI) 來建立管線,目標是透過使用 Data Factory 受控虛擬網路 (部分機器翻譯) 中的對應資料流程,來將資料從 Azure Data Lake Storage Gen2 來源複製和轉換到 Data Lake Storage Gen2 接收器 (這兩者都允許存取選取的網路) 當您使用對應資料流轉換資料時,您可以展開本教學課程中的組態模式。

在本教學課程中,您會執行下列步驟:

- 建立資料處理站。

- 建立具有資料流程活動的管線。

- 建置具有四個轉換的對應資料流。

- 對管線執行測試。

- 監視資料流程活動。

必要條件

- Azure 訂用帳戶。 如果您沒有 Azure 訂用帳戶,請在開始前建立免費 Azure 帳戶。

- Azure 儲存體帳戶。 您需要使用 Data Lake Storage 作為「來源」和「接收」資料存放區。 如果您沒有儲存體帳戶,請參閱建立 Azure 儲存體帳戶,按照步驟建立此帳戶。 請確定儲存體帳戶只允許從選取的網路存取。

在本教學課程中,我們將轉換的檔案是 moviesDB.csv,您可以在此 GitHub 內容網站上找到此檔案。 若要從 GitHub 擷取檔案,請將內容複寫到您選擇的文字編輯器,以 .csv 檔案的形式儲存在本機。 若要將檔案上傳到儲存體帳戶,請參閱使用 Azure 入口網站上傳 Blob。 這些範例會參考名為 sample-data 的容器。

建立資料處理站

在此步驟中,您可以建立資料處理站,並開啟 Data Factory UI,以在資料處理站中建立管線。

開啟 Microsoft Edge 或 Google Chrome。 目前,只有 Microsoft Edge 和 Google Chrome 網頁瀏覽器支援 Data Factory UI。

在左側功能表上,選取 [建立資源]>[分析]>[資料處理站]。

在 [新增資料處理站] 頁面的 [名稱] 下,輸入 ADFTutorialDataFactory。

資料處理站的名稱必須是「全域唯一」的名稱。 如果您收到有關名稱值的錯誤訊息,請輸入不同的資料處理站名稱 (例如 yournameADFTutorialDataFactory)。 如需 Data Factory 成品的命名規則,請參閱 Data Factory 命名規則。

選取您要在其中建立資料處理站的 Azure 訂用帳戶。

針對 [資源群組],採取下列其中一個步驟︰

- 選取 [使用現有的] ,然後從下拉式清單選取現有的資源群組。

- 選取 [建立新的] ,然後輸入資源群組的名稱。

若要了解資源群組,請參閱使用資源群組管理您的 Azure 資源。

在 [版本] 下,選取 [V2]。

在 [位置] 下,選取資料處理站的位置。 只有受到支援的位置會出現在下拉式清單中。 資料處理站所使用的資料存放區 (例如 Azure 儲存體和 Azure SQL Database) 和計算 (例如 Azure HDInsight) 可位於其他區域。

選取 建立。

建立完成後,您會在通知中心看到通知。 選取 [移至資源],以移至 Data Factory 頁面。

選取 [開啟 Azure Data Factory Studio],以在個別的索引標籤中啟動 Data Factory UI。

在 Data Factory 受控虛擬網路中建立 Azure IR

在此步驟中,您會在 Data Factory 受控虛擬網路中建立 Azure IR。

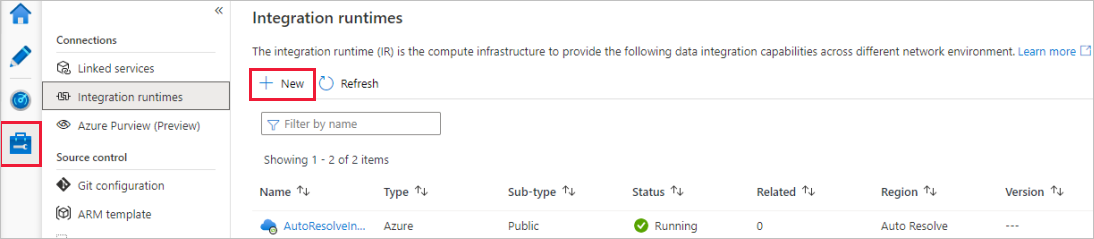

在 Data Factory 入口網站中,移至 [管理] ,然後選取 [新增] 以建立新的 Azure IR。

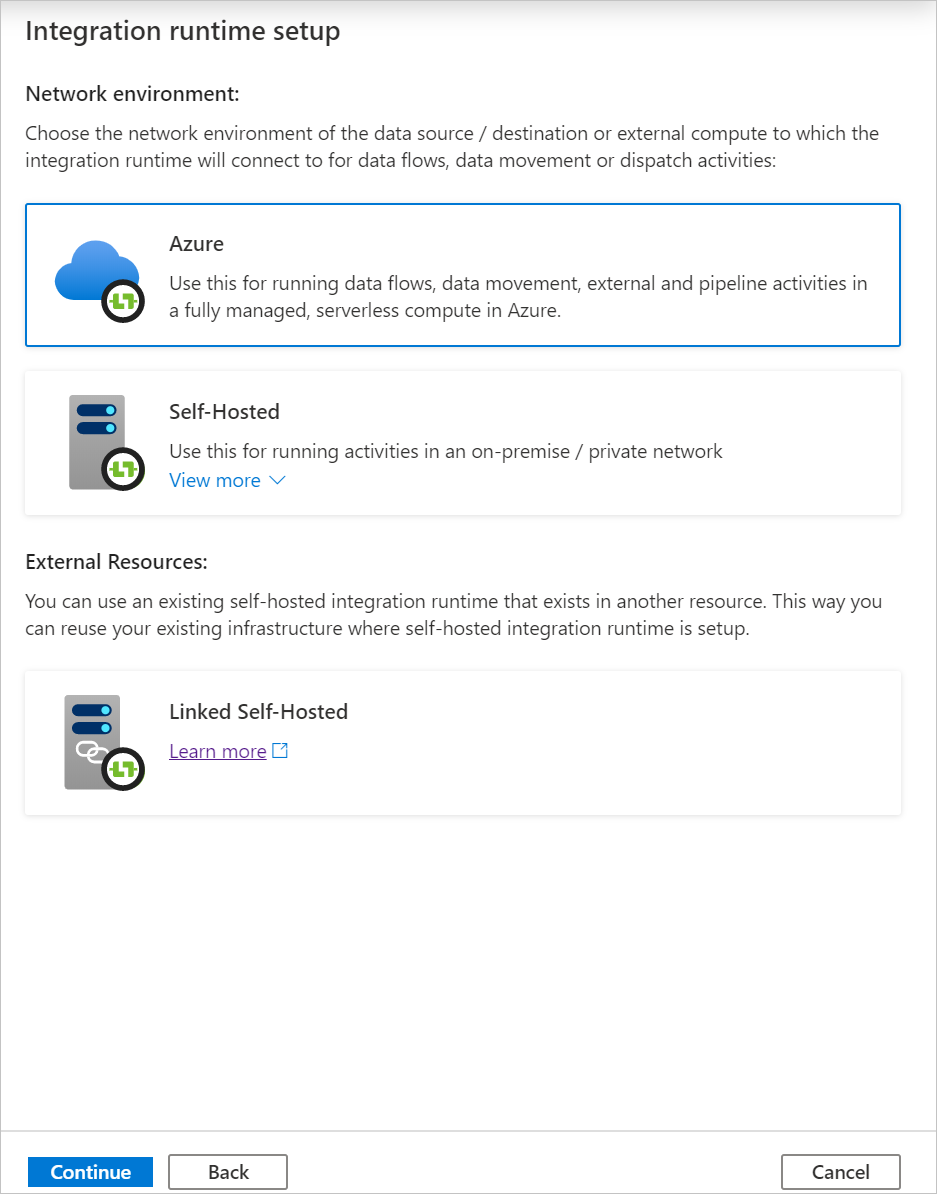

在 [整合執行階段設定] 頁面上,選擇要根據必要功能建立的整合執行階段。 在本教學課程中,選取 [Azure]、[自我裝載],然後按一下 [繼續]。

選取 [Azure],然後按一下 [繼續] 以建立 Azure 整合執行階段。

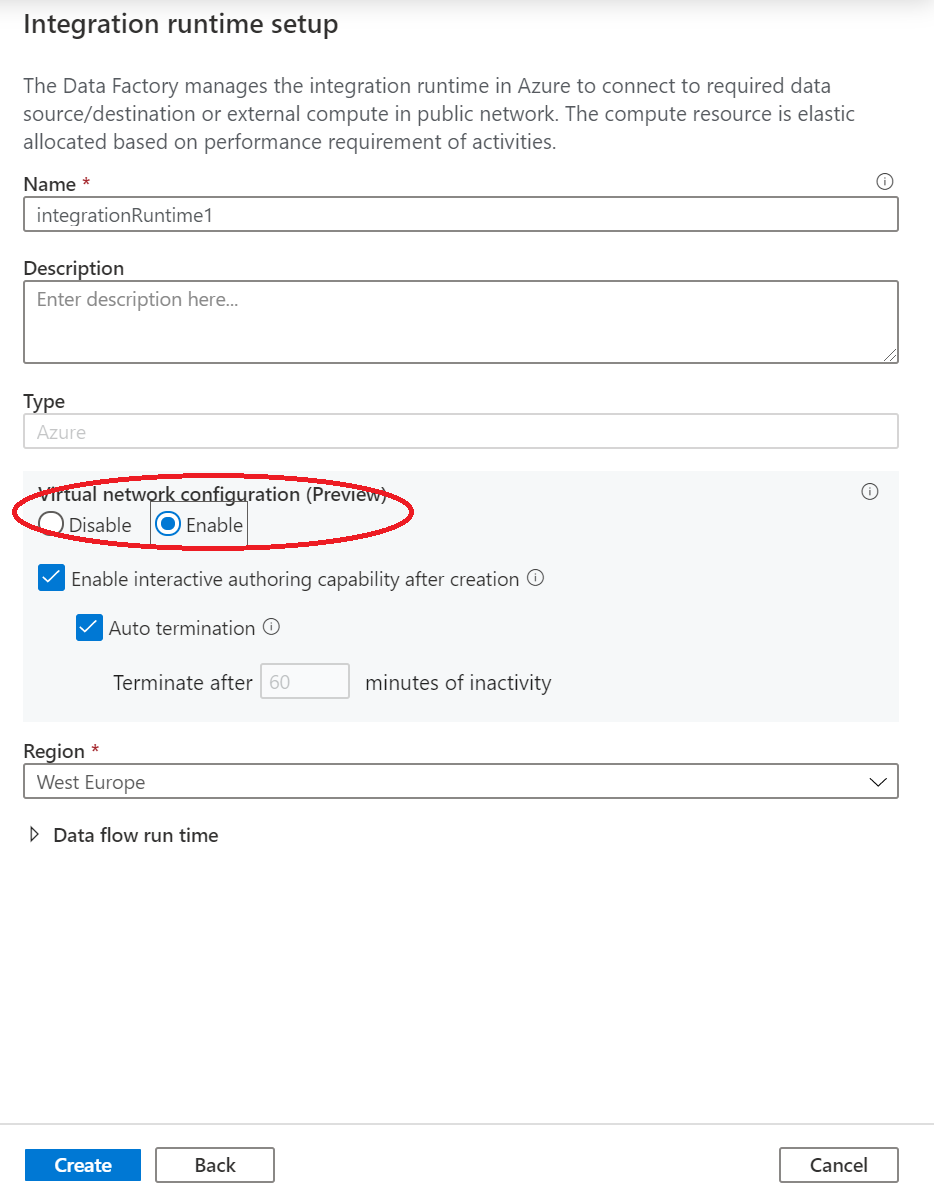

在 [虛擬網路設定 (預覽)] 中,選取 [啟用]。

選取 建立。

建立具有資料流程活動的管線

在此步驟中,您將建立包含資料流程活動的管線。

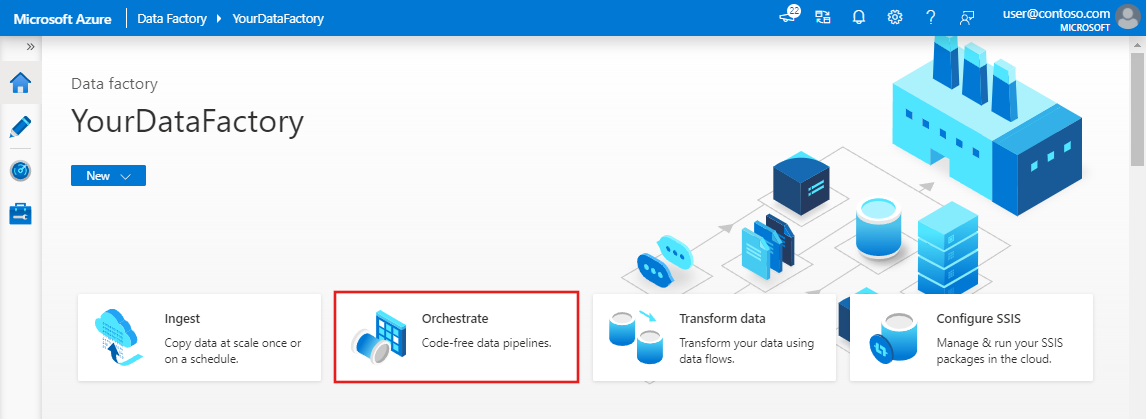

在 Azure Data Factory 的首頁上選取 [協調]。

在管線的屬性窗格中,輸入 TransformMovies 作為管線名稱。

在 [活動] 窗格中,展開 [移動和轉換]。 將資料流程活動從窗格拖放到管線畫布。

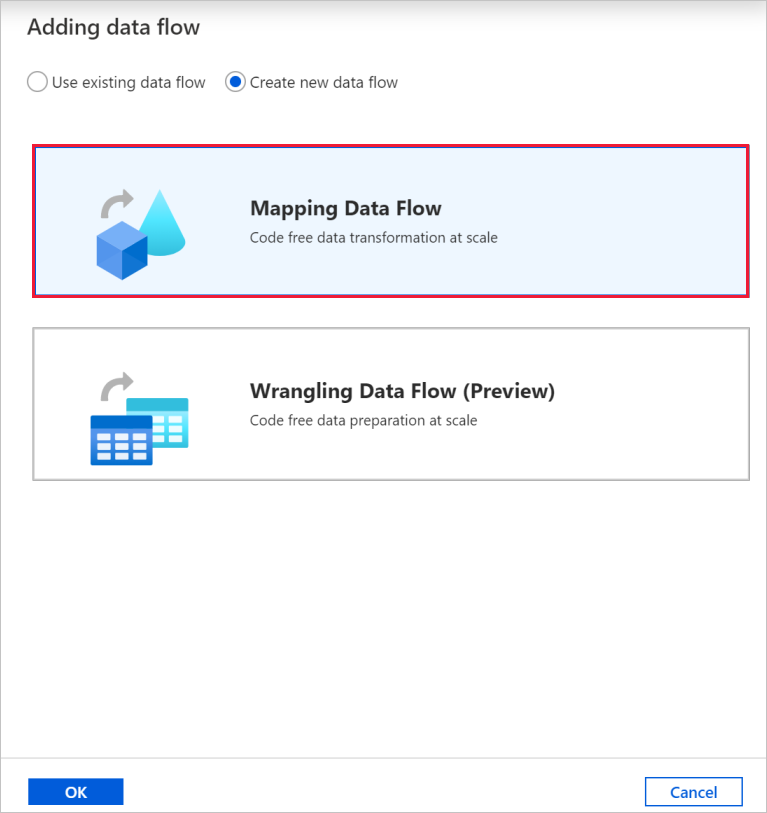

在 [新增資料流程] 快顯項目中,選取 [建立新的資料流程],並接著選取[對應資料流程]。 完成後,選取 [確定]。

在屬性窗格上,將您的資料流程命名為 TransformMovies。

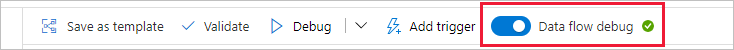

在管線畫布的頂端列中,滑動資料流程偵錯滑桿。 偵錯模式可讓您對即時 Spark 叢集進行轉換邏輯的互動式測試。 資料流程叢集需要 5-7 分鐘的準備時間,如果使用者想要進行資料流程開發,建議使用者先開啟偵錯功能。 如需詳細資訊,請參閱偵錯模式。

在資料流程畫布中建置轉換邏輯

建立資料流程之後,會自動傳送到資料流程畫布。 在此步驟中,您將建置一個資料流程來擷取 Data Lake Storage 中的 moviesDB.csv,並彙總 1910 年到 2000 年喜歌劇的平均評等。 然後,您會將此檔案寫回 Data Lake Storage。

新增來源轉換

在此步驟中,您會將 Data Lake Storage Gen2 設為來源。

在資料流程畫布中,選取 [新增來源] 方塊以新增來源。

將來源命名為 MoviesDB。 選取 [新增] 以建立新的來源資料集。

選取 [Azure Data Lake Storage Gen2],然後選取 [繼續]。

選取 [DelimitedText],然後選取 [繼續]。

將資料集命名為 MoviesDB。 在已連結的服務的下拉式清單中,選取 [新增]。

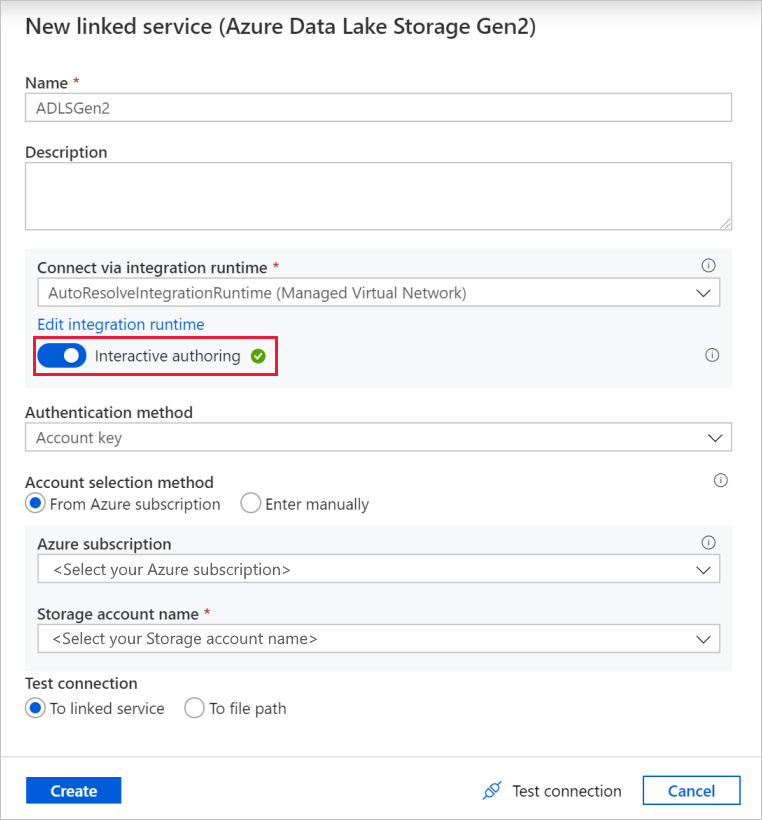

在連結服務建立畫面中,將 Data Lake Storage Gen2 連結服務命名為 ADLSGen2,並指定驗證方法。 然後輸入您的連線認證。 在本教學課程中,我們會使用「帳戶金鑰」來連線至儲存體帳戶。

請務必啟用互動式製作。 可能需要約 1 分鐘的時間才能啟用。

選取測試連線。 這應該會失敗,因為在未建立和核准私人端點的情況下,儲存體帳戶無法啟用存取。 在錯誤訊息中,您應該會看到一個連結,可讓您建立私人端點,以供您建立受控私人端點。 另一個替代方式是直接前往 [管理] 索引標籤,並遵循此章節 (部分機器翻譯) 中的指示建立受控私人端點。

讓對話方塊保持開啟,然後移至儲存體帳戶。

遵循本節的指示來核准私人連結。

返回對話方塊。 選取 [測試連線],然後選取 [建立] 以部署已連結的服務。

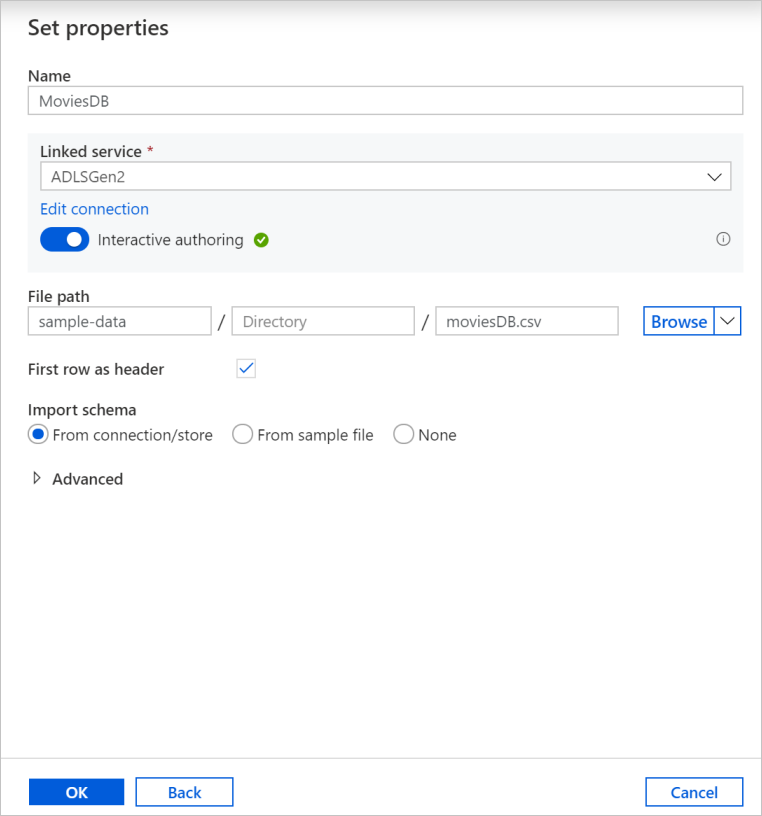

在資料集建立畫面中的 [檔案路徑] 欄位下輸入檔案的所在位置。 在本教學課程中,moviesDB.csv 檔案位於容器 sample-data 中。 因為檔案有標題,所以請選取 [First row as header] \(第一個資料列作為標頭\) 核取方塊。 選取 [從連線/存放區],以直接從儲存體中的檔案匯入標頭結構描述。 完成後,選取 [確定]。

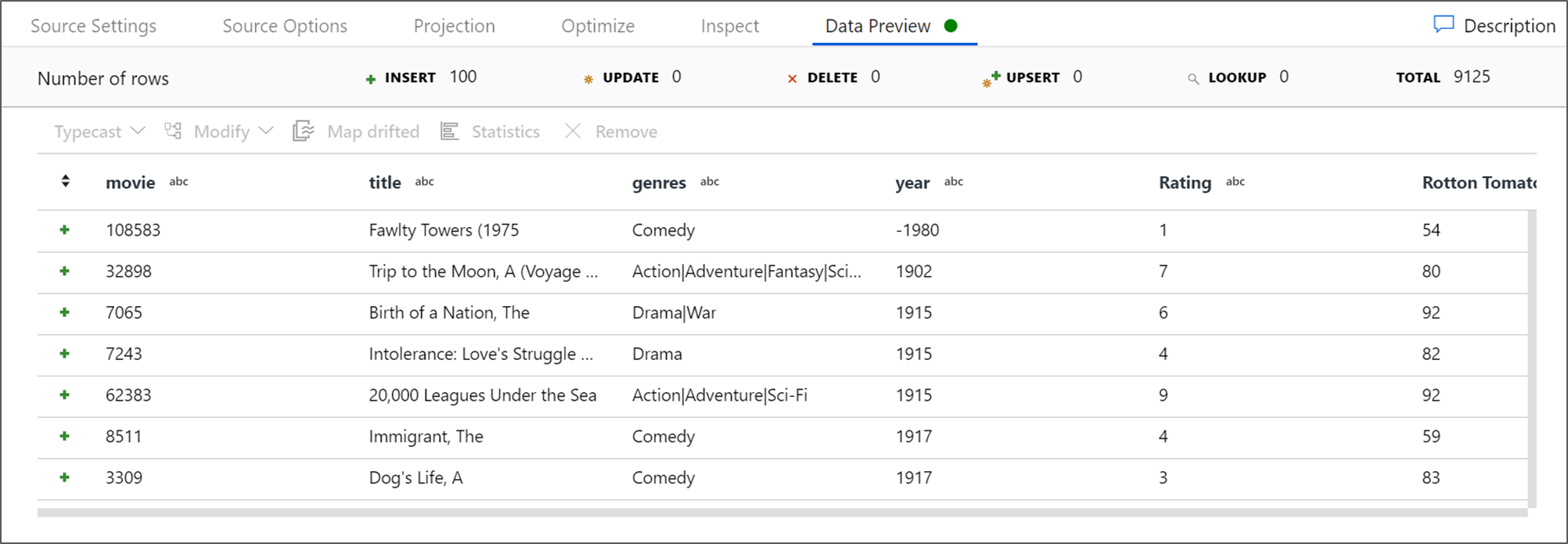

如果您的偵錯叢集已啟動,請前往來源轉換的 [資料預覽] 索引標籤,然後選取 [重新整理] 以取得資料的快照集。 您可以使用資料預覽來確認是否已正確設定轉換。

建立受控私人端點

如果您未在測試連線時使用上述超連結,請遵循路徑。 現在您需要建立受控私人端點,以連線至所建立的已連結服務。

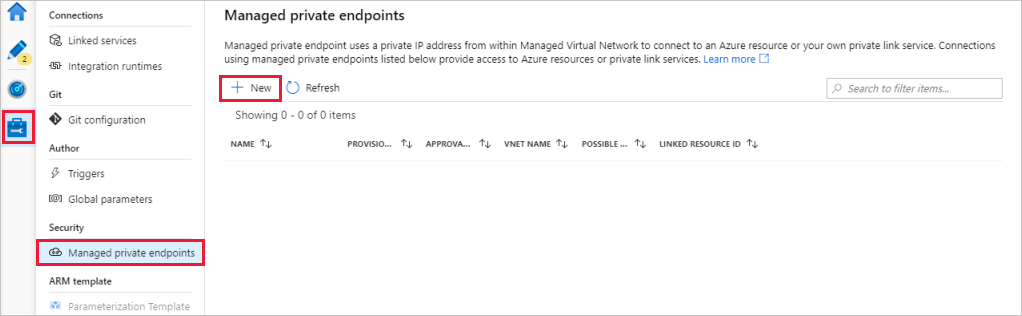

移至管理索引標籤。

注意

並非所有 Data Factory 執行個體圴可使用管理索引標籤。 如果您沒有看到該索引標籤,可以選取 [作者]>[連線]>[私人端點] 來存取私人端點。

移至受控私人端點區段。

在受控私人端點之下選取 [+新增]。

從清單中選取 [Azure Data Lake Storage Gen2] 圖格,然後選取 [繼續]。

輸入建立之儲存體帳戶的名稱。

選取 建立。

在等候幾秒鐘之後,您應該會看到建立的私人連結需要核准。

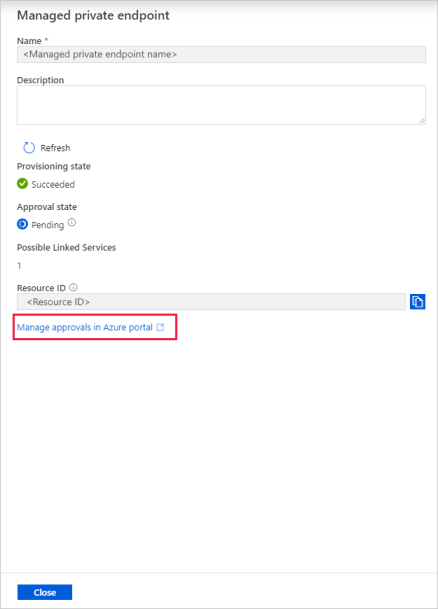

選取之前建立的私人端點。 您會看到超連結,引導您在儲存體帳戶層級核准私人端點。

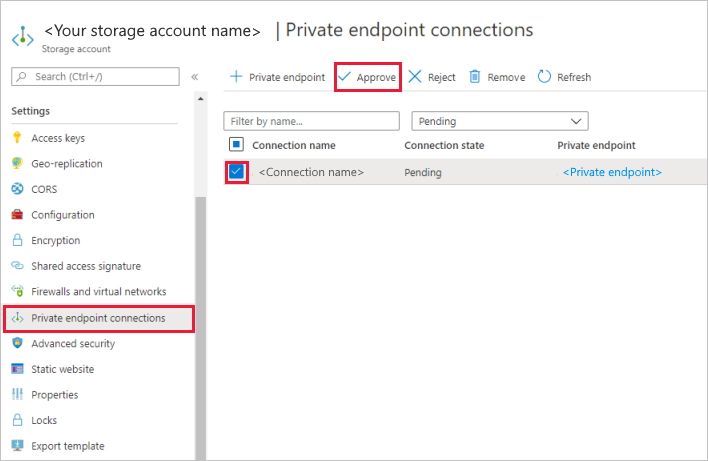

在儲存體帳戶中核准私人連結

在儲存體帳戶中,移至設定區段下的私人端點連線。

選取您建立的私人端點旁的核取方塊,然後選取 [核准]。

新增描述,然後選取 [是]。

回到 Data Factory 中管理索引標籤的受控私人端點區段。

大約一分鐘之後,您應能看到私人端點的核准出現。

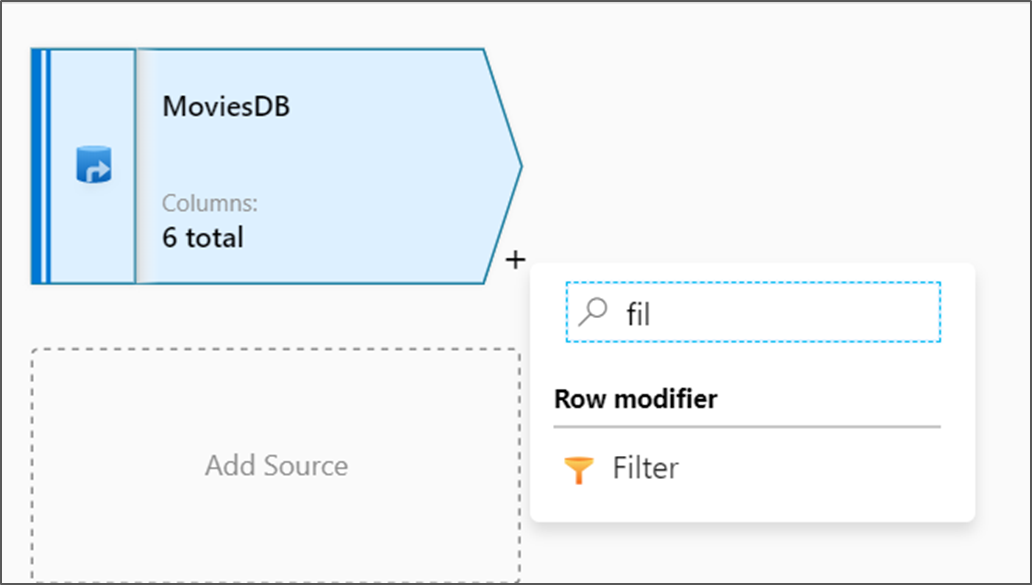

新增篩選轉換

在資料流程畫布的來源節點旁,選取加號圖示以新增轉換。 您要新增的第一個轉換是篩選。

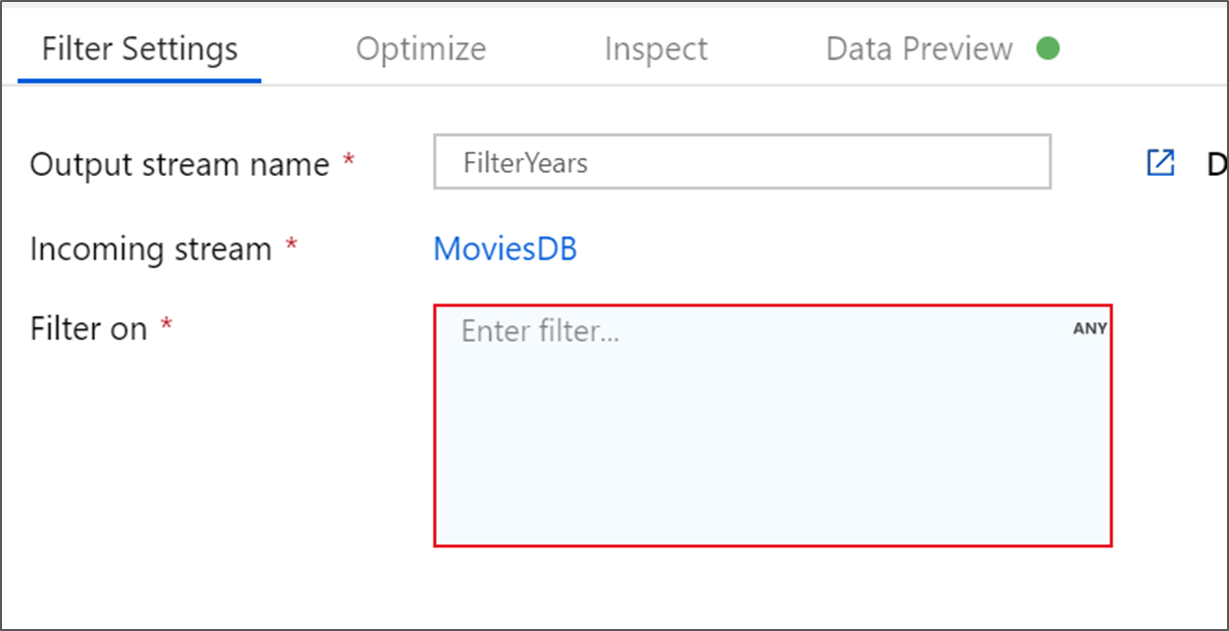

將篩選轉換命名為 FilterYears。 選取 [篩選對象] 旁的運算式方塊,以開啟運算式產生器。 您將在這裡指定篩選條件。

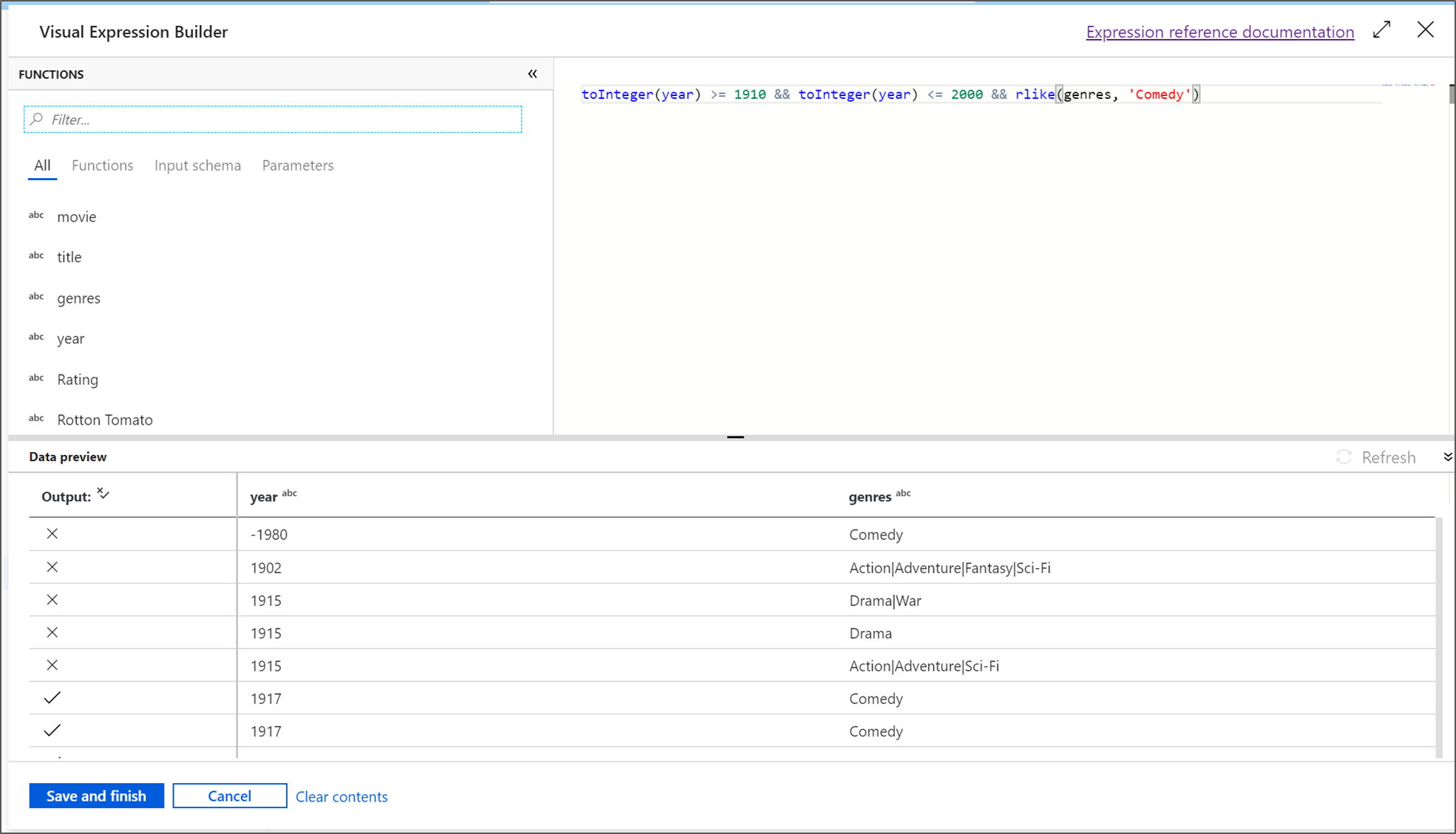

資料流程運算式產生器可讓您以互動方式建立要在各種轉換中使用的運算式。 運算式可以包含內建函式、輸入結構描述中的資料行,以及使用者定義的參數。 如需如何建立運算式的詳細資訊,請參閱資料流程運算式產生器 (部分機器翻譯)。

在本教學課程中,您想要篩選在 1910 年到 2000 年之間上映的喜歌劇內容類型電影。 因為當年份目前為字串,您必須使用

toInteger()函式將其轉換成整數。 使用大於或等於 (>=) 和小於或等於 (<=) 運算子,針對常值年份值 1910 和 2000 進行比較。 使用 and(&&) 運算子來聯合這些運算式。 產生的運算式如下所示:toInteger(year) >= 1910 && toInteger(year) <= 2000若要找出哪些電影是喜劇,您可以使用

rlike()函式來尋找資料行內容類型中的「喜劇」模式。 將rlike運算式與比較年份聯集以取得:toInteger(year) >= 1910 && toInteger(year) <= 2000 && rlike(genres, 'Comedy')如果您有使用中偵錯叢集,您可以選取 [重新整理] 查看運算式輸出與所使用輸入的比較來確認您的邏輯。 如何使用資料流程運算式語言來完成這項邏輯的正確解答不只一個。

在您完成運算式之後,選取 [儲存並完成]。

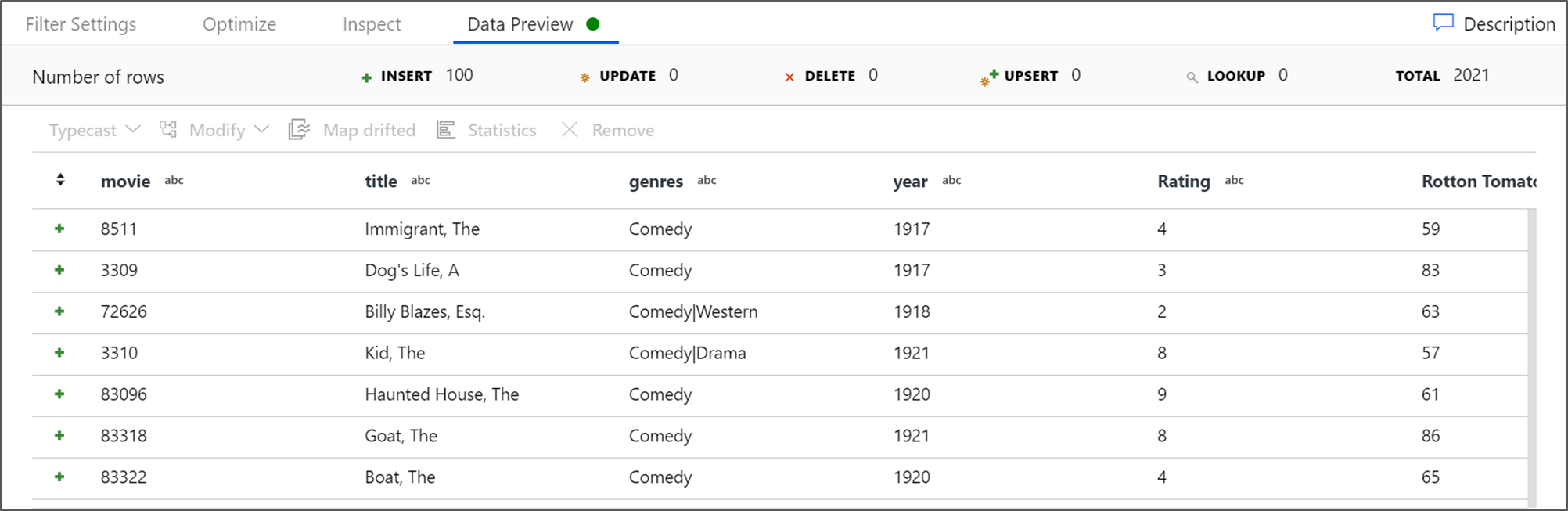

擷取 [資料預覽] 以確認篩選是否正常運作。

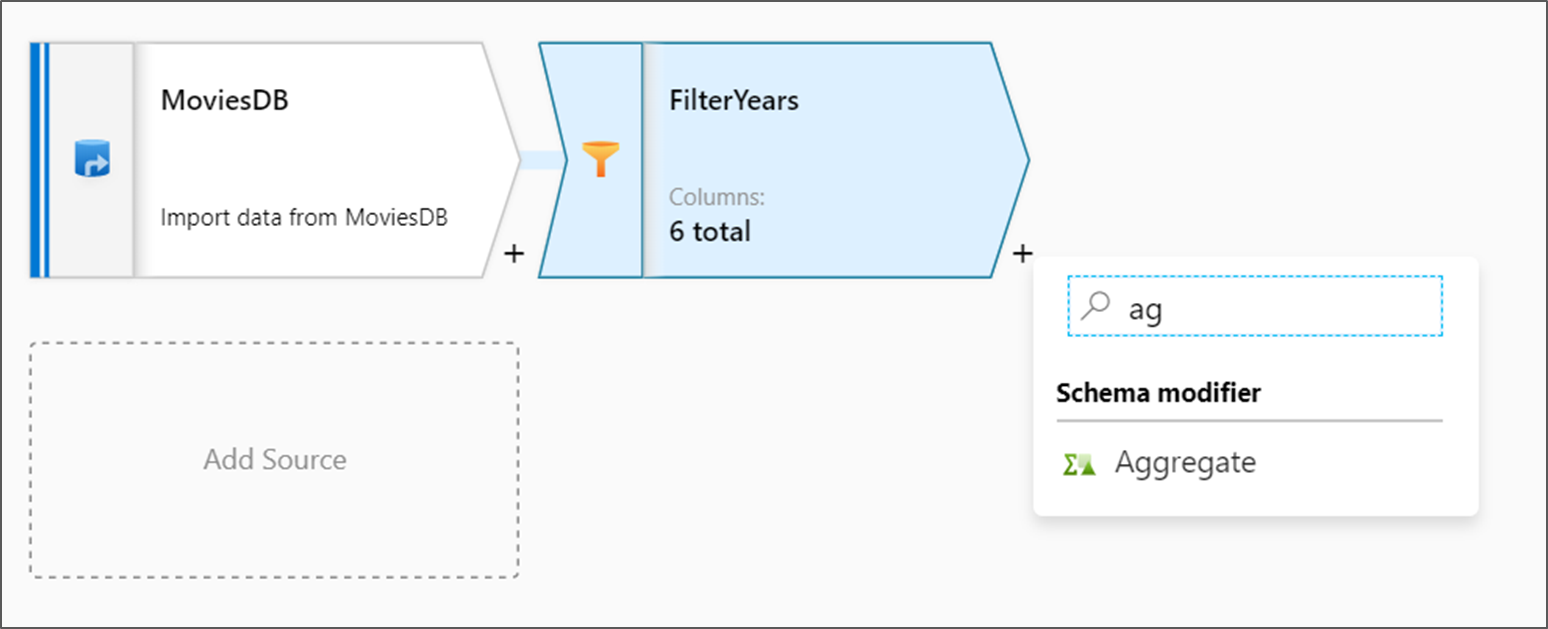

新增彙總轉換

下一個要新增的轉換是 [Schema modifier] \(結構描述修飾詞\) 下的彙總轉換。

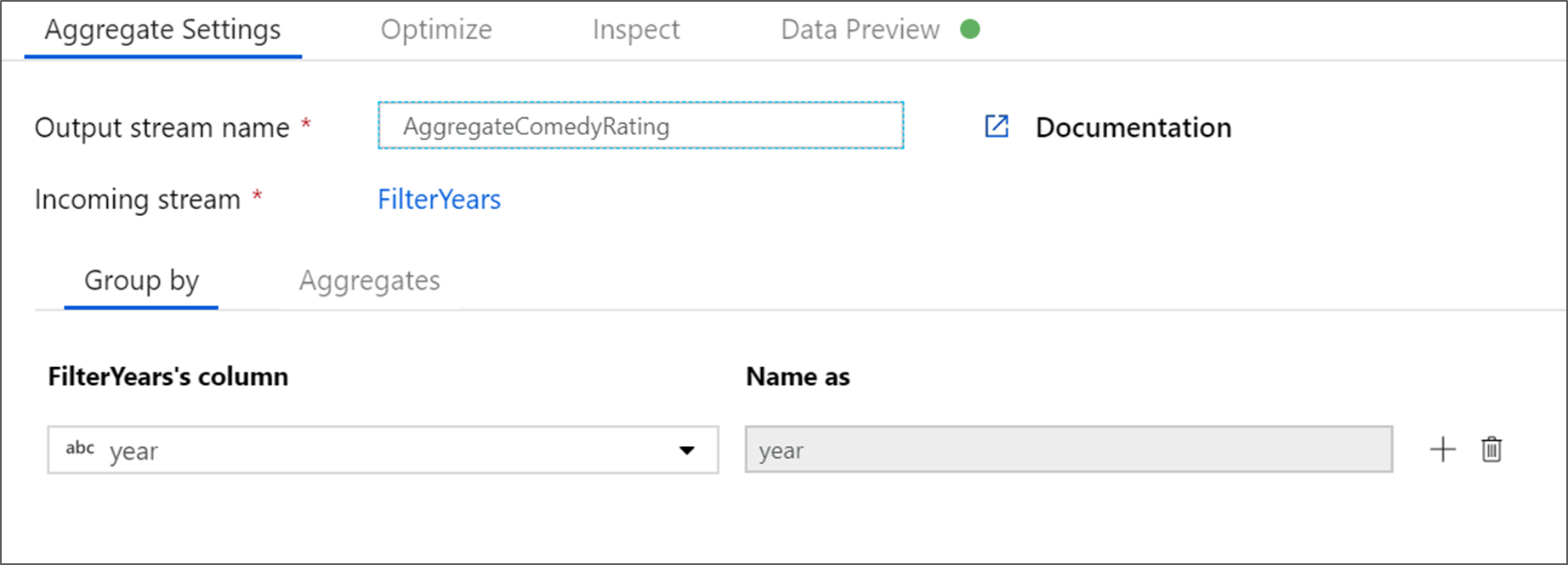

將彙總轉換命名為 AggregateComedyRatings。 在 [分組方式] 索引標籤上,從下拉式方塊中選取 [year],以依電影上映年份分組彙總。

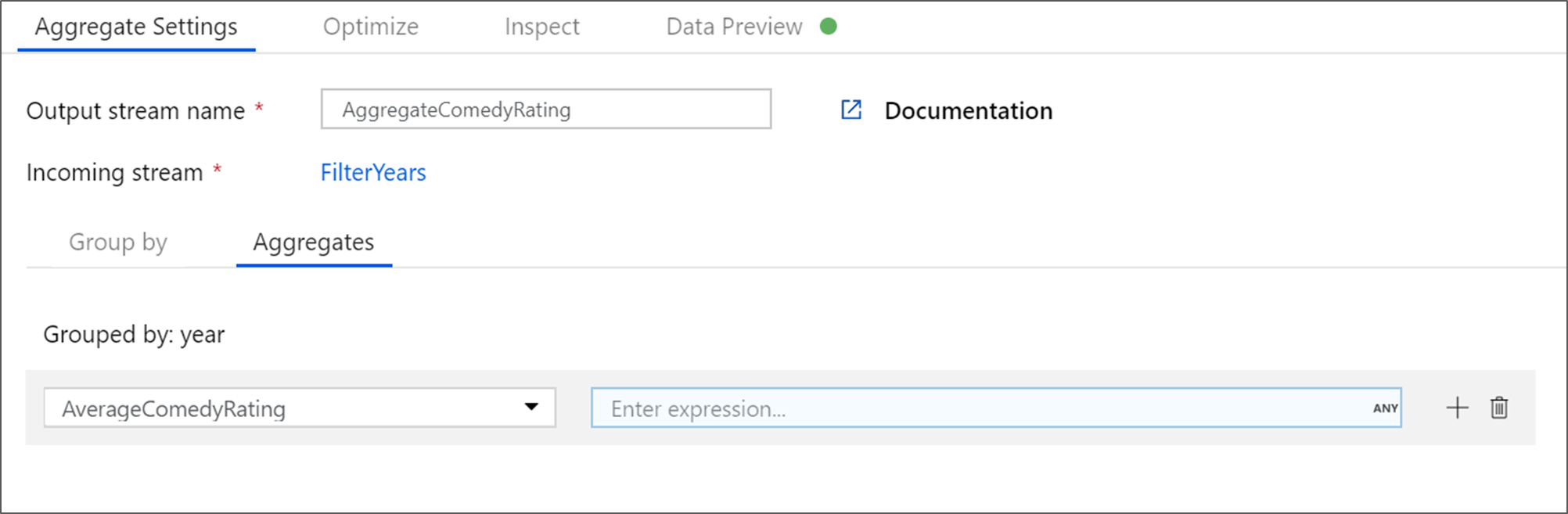

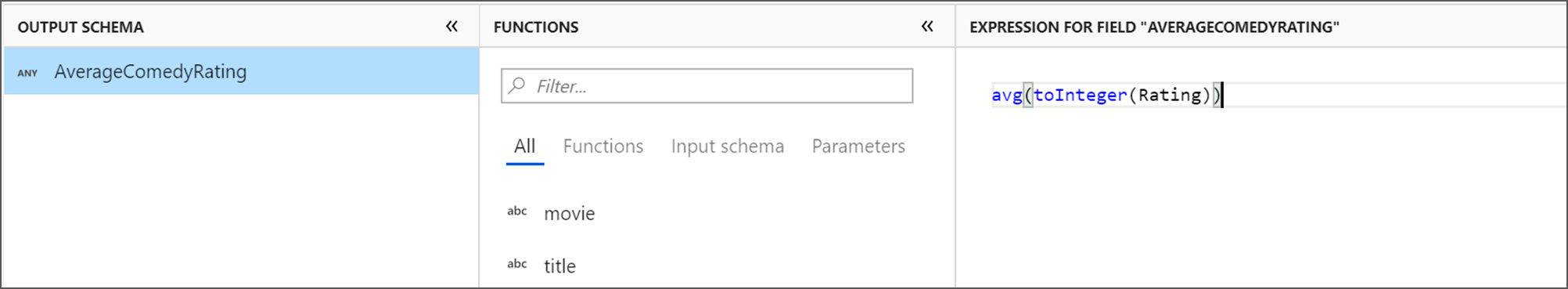

移至 [彙總] 索引標籤。在左側文字輸入框中,將彙總資料行命名為 AverageComedyRating。 選取右側運算式方塊,透過運算式產生器輸入彙總運算式。

若要取得資料行 Rating 的平均值,請使用

avg()彙總函式。 由於 Rating 為字串且avg()接受數字輸入,因此我們必須透過toInteger()函式將值轉換成數字。 此運算式看起來如下所示:avg(toInteger(Rating))完成之後,選取 [儲存並完成]。

前往 [資料預覽] 索引標籤以檢視轉換輸出。 請注意,其中只有兩個資料行:[year] 和 [AverageComedyRating]。

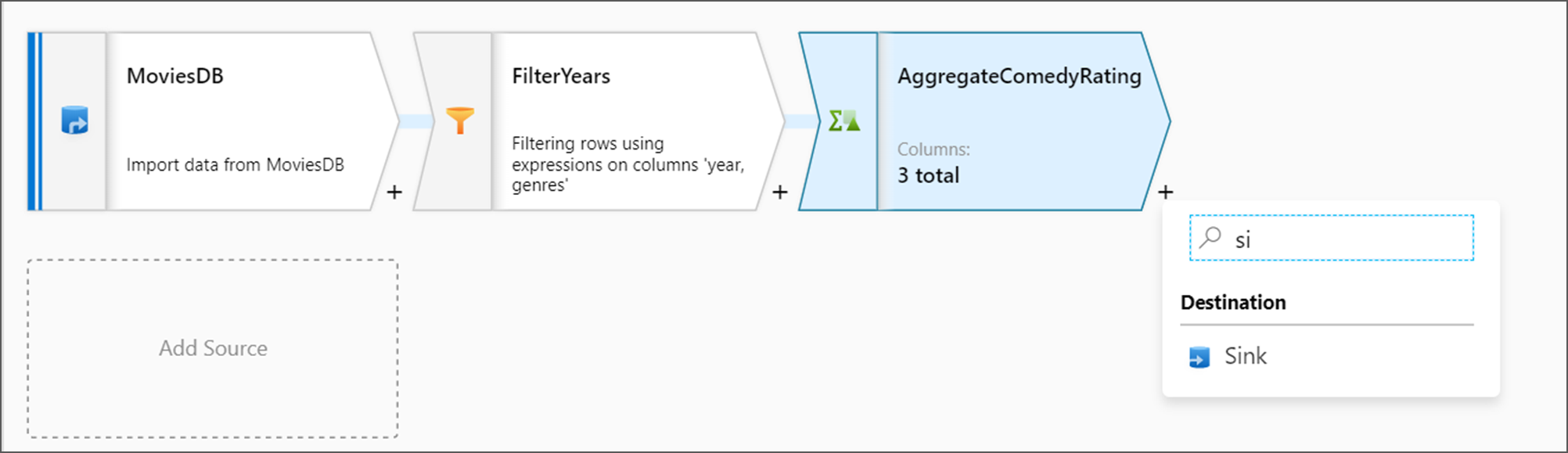

新增接收轉換

接下來,您想要在 [目的地] 下新增接收器轉換。

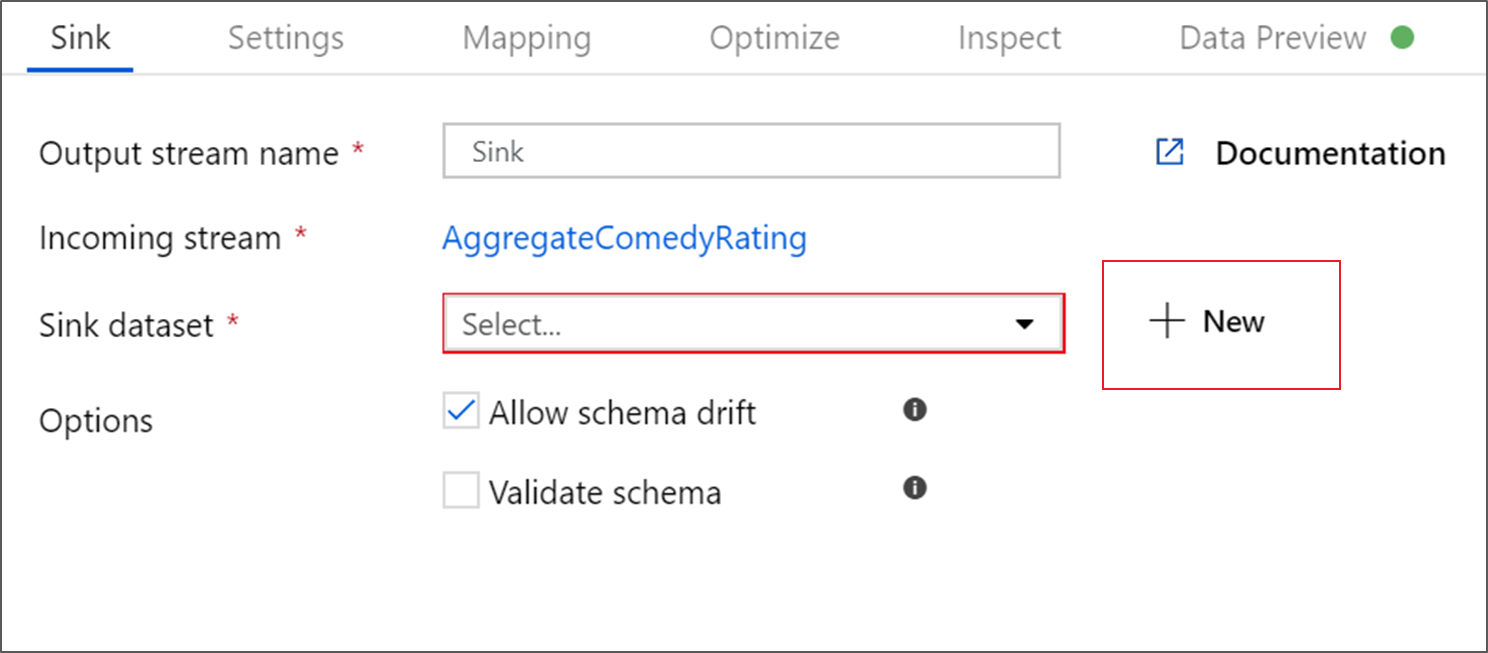

將您的接收器命名為 Sink。 選取 [新增] 以建立您的接收器資料集。

在 [新增資料集] 頁面上,選取 [Azure Data Lake Storage Gen2],然後選取 [繼續]。

在 [選取格式] 頁面上,選取 [DelimitedText],然後選取 [繼續]。

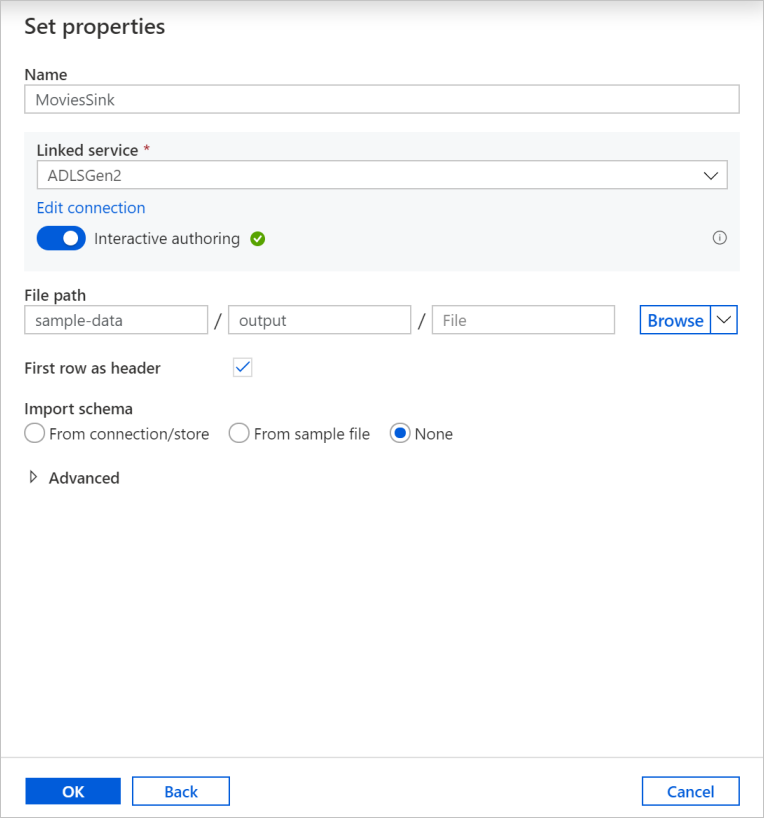

將接收器資料集命名為 MoviesSink。 針對連結服務,請選擇您為來源轉換建立的相同 ADLSGen2 連結服務。 輸入要寫入資料的輸出資料夾。 在本教學課程中,我們會寫入容器 sample-data 中的資料夾 output。 此資料夾不需要事先存在,而且可以動態建立。 選取 [First row as header] \(第一個資料列作為標頭\) 核取方塊,然後針對 [Import schema] (匯入架構\) 選取 [None]。 選取 [確定]。

現在您已完成建立資料流程, 可以立即在管線中執行。

執行和監視資料流程

您可以在發佈管線之前先進行偵錯。 在此步驟中,您會觸發資料流程管線的偵錯執行。 雖然資料預覽不會寫入資料,但偵錯執行會將資料寫入至您的接收目的地。

前往管線畫布。 選取 [偵錯] 以觸發偵錯執行。

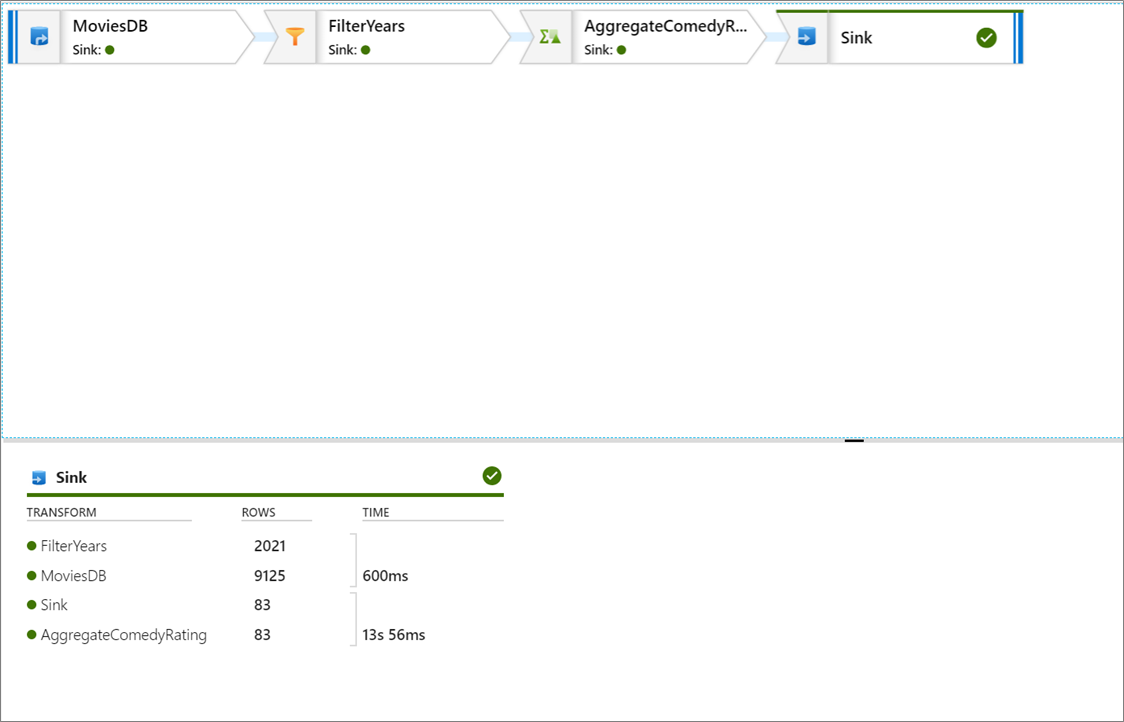

資料流程活動的管線偵錯會使用使用中偵錯叢集,但仍需要至少一分鐘的時間來初始化。 您可以透過 [輸出] 索引標籤來追蹤進度。執行成功之後,請選取眼鏡圖示以取得執行詳細資料。

在詳細資料窗格中,您可以看到每個轉換步驟中的資料列數目及所花費的時間。

選取轉換以取得資料行和資料分割的詳細資訊。

如果您正確遵循本教學課程的內容,應該已將 83 個資料列和 2 個資料行寫入您的接收資料夾。 您可以檢查 Blob 儲存體來確認資料是否正確無誤。

摘要

在本教學課程中,您將使用 Data Factory UI 來建立管線,目標是透過使用 Data Factory 受控虛擬網路 (部分機器翻譯) 中的對應資料流程,來將資料從 Azure Data Lake Storage Gen2 來源複製和轉換到 Data Lake Storage Gen2 接收器 (這兩者都允許存取選取的網路)。