手部指向和行動

手部指向和行動是輸入模型,可讓使用者鎖定目標、選取和操控可及範圍之外的 2D 與 3D 內容。 「遠方 (far)」互動技術是混合實境特有的技術,因為人類不會那樣與真實世界自然互動。 例如,在超級英雄電影「X 戰警」中,萬磁王可以使用他的手操控遠方物件。 這不是人類在現實中可做到的事。 在 HoloLens (AR) 和混合實境 (MR) 中,我們會為使用者提供這項神奇功能,以中斷真實世界的實體限制。 這不僅是很有趣的全像攝影體驗,還能讓使用者互動更有效且效率更高。

裝置支援

| 輸入模型 | HoloLens (第 1 代) | HoloLens 2 | 沉浸式頭戴裝置 |

| 手部指向和行動 | ❌ 未支援 | ✔️ 建議使用 | ✔️ 建議使用 |

「指向和行動」是使用新式關節手部追蹤系統的新功能之一。 使用運動控制器的沈浸式耳機也是以此輸入模型作為主要輸入模型。

手部光線 (Hand Ray)

在 HoloLens 2 上,我們已建立可從使用者手掌中央發射的手部光線。 此光線將視為手的延伸。 光線末端會附加環狀游標,以指出光線與目標物件交集的位置。 然後,游標所在的物件就能從手部接收手勢命令。

此基本手勢命令會藉由使用拇指與食指進行空中點選動作來觸發。 藉由使用手部光線進行指向及空中點選來行動,使用者就可以啟用按鈕或超連結。 藉由更多的複合手勢,使用者可以從遠處瀏覽網頁內容和操作 3D 物件。 手動光線的視覺化設計也會反應這些指向和行動狀態,請見下列圖示和說明:

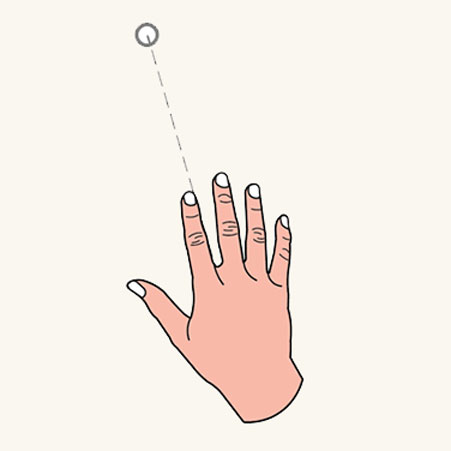

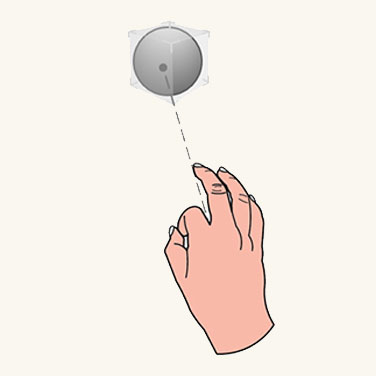

指向狀態

在「指向」 狀態時,光線呈虛線,而指標呈環狀。

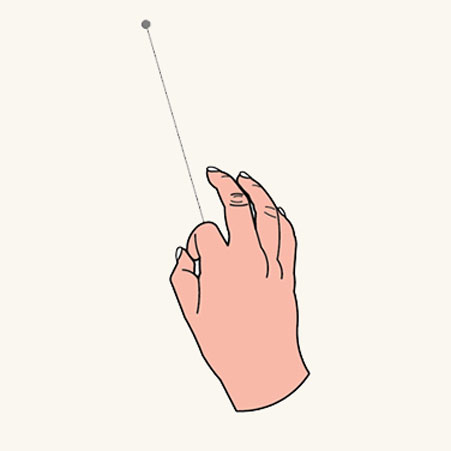

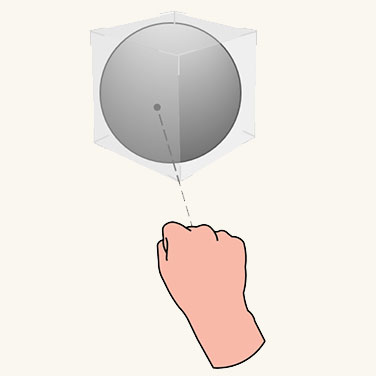

認可狀態

在「行動」 狀態時,光線變成一條實線,而游標會壓縮成一個點。

遠近轉換

我們不會使用「以食指指向」之類的特定手勢來指引光線,而是將光線設計成從使用者的手掌中心射出來。 如此一來,我們空出並保留了五隻手指頭來進行更具操控性的手勢,例如捏取和抓取。 透過這項設計,我們只需建立一個心智模型 - 針對遠近互動使用完全相同的一組手勢。 您可以使用相同的抓取手勢來操作不同距離的物件。 光線的引動過程會根據鄰近程度自動執行,如下所示:

近距操作

當物件位在手臂可及的範圍內 (大約 50 公分) 時,光線就會自動關閉,並建議您進行近距離互動。

遠距操作

物件距離超過 50 公分時,光線就會開啟。 此轉換會很順利且流暢。

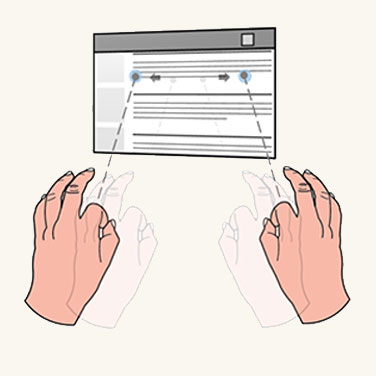

2D 平板互動

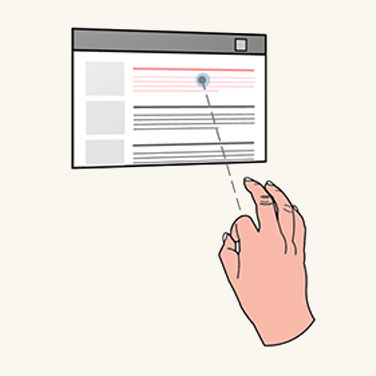

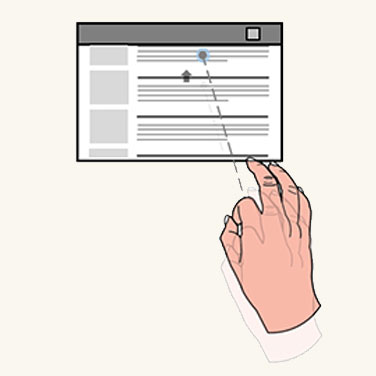

2D 平板是裝載 2D 應用程式內容 (例如網頁瀏覽器) 的全像攝影容器。 以 2D 平板進行遠端互動的設計概念是,使用手部光線來鎖定目標和使用空中點選來選取項目。 以手部光線鎖定目標後,使用者可以透過空中點選來觸發超連結或按鈕。 他們可使用一隻手來進行「空中點選和拖曳」,進而上下捲動平板內容。 使用兩隻手進行空中點選和拖曳的相對動作則可縮放平板內容。

將手部光線的目標鎖定在角落和邊緣會顯示最接近的操作能供性 (affordance)。 藉由「抓取並拖曳」操作能供性,使用者可以透過角落能供性進行統一的尺寸調整,以及可透過邊緣能供性來重排平板。 藉由抓取並拖曳 2D 平板上方的 holobar,使用者可以移動整個平板。

按一下

捲動

縮放

操作 2D 平板

- 使用者將手部光線指向角落或邊緣時,可顯示最接近的操作能供性。

- 藉由在能供性上套用操作手勢,使用者可以透過角落能供性進行統一的尺寸調整,以及可透過邊緣能供性來重排平板。

- 藉由在 2D 平板頂端的 holobar 上套用操作手勢,使用者可以移動整個平板。

3D 物件操作

在直接操作中,有兩種方法可讓使用者操作 3D 物件:能供性操作與非能供性操作。 在指向和行動模型中,使用者可以透過手部射線來完成完全相同的工作。 不需要額外的學習。

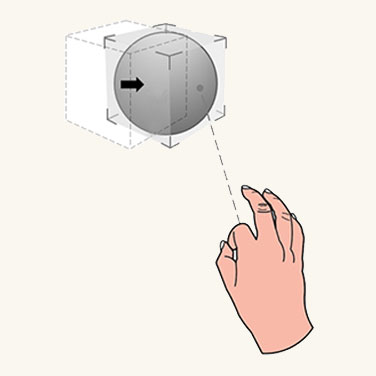

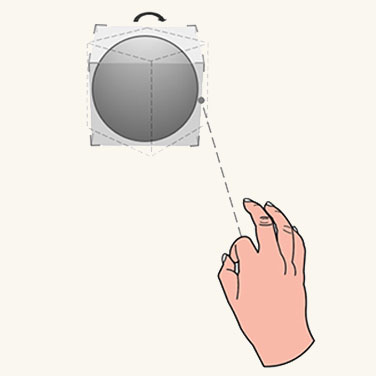

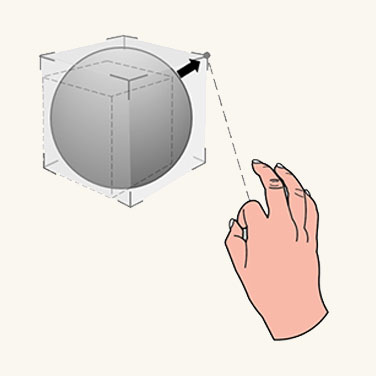

能供性操作

使用者使用手部光線來指向並顯示週框方塊和操作能供性。 使用者可以將操作手勢套用到週框方塊來移動整個物件,套用到邊緣能供性可旋轉物件,套用到角落能供性可統一調整物件大小。

移動

旋轉

縮放

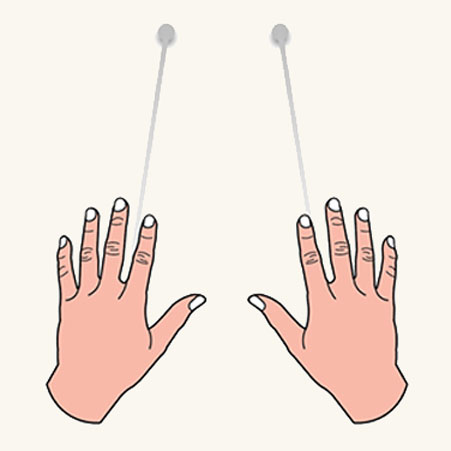

非能供性操作

使用者透過手部光線指向來顯示週框方塊,然後直接在其上方套用操作手勢。 若使用一隻手,物件的轉譯和旋轉會與手的動作和方向相關聯。 若使用兩隻手,使用者可以根據兩隻手的相對動作來轉譯、縮放及旋轉週框方塊。

本能手勢

指向和行動的本能手勢基本上類似於手部直接操作。 使用者在 3D 物件上進行的手勢,皆由 UI 能供性的設計來引導。 例如,在使用者想使用五隻手指頭來抓取較大的物件時,小型控點可能會促使使用者運用其拇指與食指來捏取物件。

小型物件

中型物件

大型物件

手部與 6 DoF 控制器之間的對稱設計

針對混合實境入口網站 (MRP),已建立並定義了遠方互動的指向和行動概念。 在此案例中,使用者會戴上沉浸式頭戴裝置,並透過動作控制器與 3D 物件互動。 運動控制器會發射光線來指向及操作遠方物件。 控制器上有按鈕,可用來進一步執行不同動作。 我們會套用射線的互動模型,並將其連結至雙手。 透過此對稱設計,熟悉 MRP 的使用者在使用 HoloLens 2 時,不需要學習另一個用於遠端指向及操作的互動模型,反之亦然。

控制器光線

手部光線

MRTK (混合實境工具組) 中適用於 Unity 的手部射線

根據預設,MRTK 會提供一個手部射線 prefab (DefaultControllerPointer.prefab),其與命令介面的系統手部射線具有相同視覺狀態。 其是在 MRTK 輸入設定檔的 [指標] 下進行指派。 在沉浸式頭戴裝置中,相同的光線會用於運動控制器。