本能互動簡介

簡單、直覺式互動的原理貫穿整個混合實境 (MR) 平台。 我們採取了三個步驟,以確保應用程式設計人員和開發人員可為其客戶提供簡單且直覺式的互動。

首先,我們已確保將感應器和輸入技術結合成多樣式互動模型。 這些互動模型包括手部和眼睛追蹤以及自然語言輸入。 根據我們的研究,多樣式架構內的設計和開發 (而不是根據個別輸入) 是創造直覺式體驗的關鍵。

其次,我們了解許多開發人員都以多個 HoloLens 裝置為目標,例如 HoloLens 2 和 HoloLens (第 1 代) 或 HoloLens 和 VR。 因此,我們將互動模型設計為可跨裝置運作,即使每個裝置上的輸入技術有所不同。 例如,在採用 6DOF 控制器以及 HoloLens 2 的 Windows 沉浸式頭戴裝置上的遠距互動上均使用相同的能供性和模式。 這可讓您輕鬆地進行跨裝置應用程式開發,並為使用者互動提供自然的感覺。

我們承認 MR 中有數千種有效、吸引人和神奇的可能互動,但我們也發現在應用程式中刻意運用單一互動模型,是確保使用者順利擁有絕佳體驗的最佳方法。 為此,我們在此互動指引中納入了三件事:

- 特定指引,以三個主要互動模型以及每個模型所需的元件和模式為主。

- 補充指引,說明我們的平台具備的其他優勢。

- 一般指引,可針對您的開發案例協助您選取適當的互動模型。

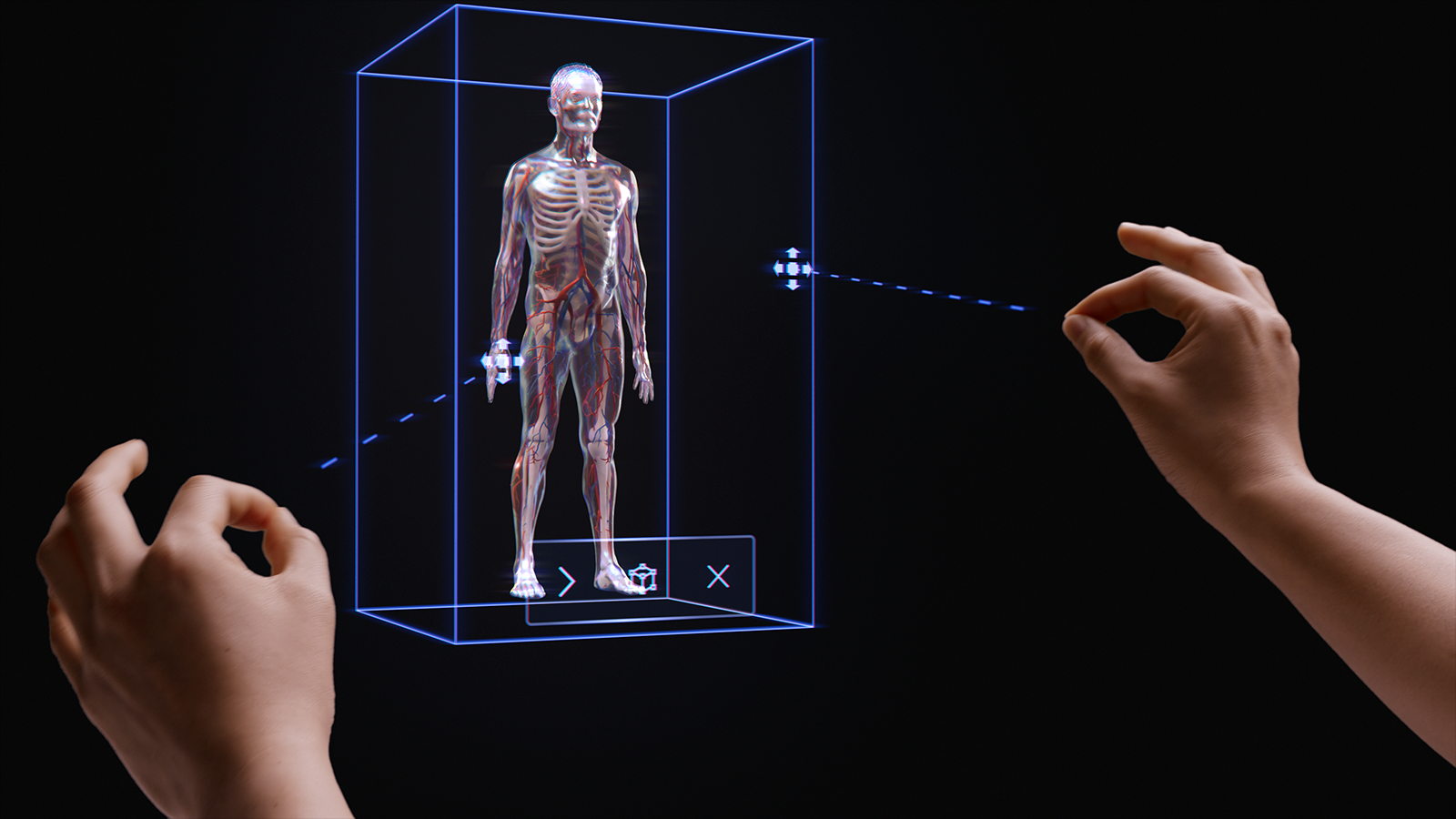

基本手部追蹤和直覺互動示範

請參閱下方 的設計全像投影 - 頭部追蹤和眼球追蹤 影片示範,然後移至更具體的主題:

這段影片取自「設計全像投影」HoloLens 2應用程式。 在這裡下載並享受完整的體驗。

多樣式互動模型

根據我們的研究以及客戶的意見反應,我們發現有三個主要互動模型適合大部分的混合實境體驗。 在許多方面,互動模型是使用者如何完成流程的心智模型。 上述每種互動模型都已針對一套客戶需求進行最佳化,正確使用時每種都很方便、強大、實用。

下圖是簡化的概觀。 在底下的頁面中,使用每個互動模型的詳細資訊都有連結,其中包含影像和程式碼範例。

| 型號 | 範例案例 | 適合 | 硬體 |

| 手部和運動控制器 | 3D 空間體驗,例如空間版面配置與設計、內容操作或模擬。 | 很適用於新使用者,可搭配語音、眼球追蹤或頭部注視使用。 降低學習曲線。 手部追蹤和 6DoF 控制器之間的一致 UX。 | HoloLens 2 沉浸式頭戴裝置 |

| 免持式 | 使用者雙手沒空 (例如在職培訓和維護) 時的情境體驗。 | 需要學習一下。 如果無法使用手部,裝置可搭配語音和自然語言使用。 | HoloLens 2 HoloLens (第 1 代) 沉浸式頭戴裝置 |

| 目光和行動 | 點擊體驗,例如 3D 簡報、示範。 | 需要在 HMD 而非在行動裝置上訓練。 非常適合無障礙控制器。 最適合用於 HoloLens (第 1 代)。 | HoloLens 2 HoloLens (第 1 代) 沉浸式頭戴裝置 行動 AR |

為了避免使用者互動體驗中發生落差,從頭到尾遵循單一模型的指引將是最佳方式。

下列各節會逐步說明選取及實作其中一個互動模型的步驟。

在此頁面結束前,您會了解我們對於下列各項的指導:

- 為您的客戶選擇互動模型

- 實作互動模型

- 互動模型之間的轉換

- 設計後續步驟

為您的客戶選擇互動模型

開發人員和創作者通常都已考量其客戶可使用的互動類型。 為了鼓勵以客戶為主的設計方式,我們建議您遵循下列指引來選取最適合客戶的互動模型。

為什麼要遵循本指引?

- 我們均針對客觀和主觀準則對互動模型進行測試,例如實際和認知投入、直覺性和學習力。

- 由於互動不同,互動模型之間的視訊/音訊能供性及物件行為也可能不同。

- 結合多個互動模型的組件,會引起競用能供性 (例如同時手部光線和頭部注視游標) 的風險。 這可能會造成使用者的負荷和困惑。

以下是如何針對每個互動模型將能供性和行為最佳化的一些範例。 我們常會看到新使用者有類似的問題,例如「如何得知系統是否能運作」 、「如何得知我可以做什麼」 、「如何得知它是否了解我剛做了什麼?」

| 型號 | 如何得知它是否能運作? | 如何得知我可以做什麼? | 如何得知我剛做了什麼? |

| 手部和運動控制器 | 我看到手狀網格、指尖能供性或手部/控制器光線。 | 我看到當我的手部靠近物件時會出現可握住的把手或週框方塊。 | 我會在握住和放開時聽到聽得見的音調並看到動畫。 |

| 頭部目光和行動 | 我在視野中央看到一個游標。 | 游標會在其掃過某些物件時變更狀態。 | 當我採取動作時,我會看到/聽到看得見和聽得見的確認。 |

| 免持式 (頭部注視並佇留) | 我在視野中央看到一個游標。 | 當我佇留在可互動的物件上時,我會看到進度列指示器。 | 當我採取動作時,我會看到/聽到看得見和聽得見的確認。 |

| 免持式 (語音命令) | 我看到接聽指示器以及可顯示系統所聽內容的字幕。 | 我取得語音提示。 當我說:「我可以說什麼?」 我看到回饋。 | 當我發出命令時,我會看到/聽到看得見和聽得見的確認,或在必要時取得去除混淆 UX。 |

以下是我們發現有助於小組選取互動模型的問題:

問:我的使用者是否想要觸控全像投影並執行精確度全像攝影操作?

答:如果是,請查看手部和運動控制器互動模型,以取得有效目標和操作。問:我的使用者是否需要為真實世界的工作保留手部?

答:如果是的話,請查看免手操作互動模型,其透過注視和語音型互動提供絕佳的免操作體驗。問:我的使用者是否有時間學習 MR 應用程式的互動,或是否需要與最低學習曲線的互動?

答:針對最低學習曲線和最直覺的互動,我們建議使用手部和運動控制器模型,只要使用者可以使用手部進行互動即可。問:我的使用者是否使用動作控制器進行指標和操作?

答:手部和運動控制器模型包含所有指引,以取得絕佳的動作控制器體驗。問:我的使用者是否使用協助工具控制器或常見的藍牙控制器,例如按一下器?

答:我們建議所有非追蹤控制器的頭部注視和認可模型。 其設計訴求是可讓使用者使用簡單的「定向和行動」經歷整個體驗。Q:相對於瀏覽 UI 控制項的密集版面配置,我的使用者只是經由「點選」來進行體驗 (例如在類似 3D 投影片的環境中) 嗎?

答:如果使用者不需要控制許多 UI,則頭部注視和認可提供可學習的選項,讓使用者不需要擔心目標。問:我的使用者是否同時使用 HoloLens (第 1 代) 和HoloLens 2/Windows Mixed Reality沉浸式頭戴裝置 (VR) ?

答:由於頭部注視和認可是 HoloLens (第 1 代) 的互動模型,因此建議您支援 HoloLens (第 1 代的建立者,) 使用頭部注視,並認可使用者在 HoloLens (第 1 代) 頭戴式裝置上體驗的任何功能或模式。 如需了解如何為多代 HoloLens 創造絕佳體驗的詳細資訊,請參閱「轉換互動模型」一節。Q:對於移動 (涵蓋大型空間或在空間之間移動) 的使用者,與傾向在單一空間工作的使用者而言,有何差別?

答:任何互動模型都適用于這些使用者。

注意

即將推出應用程式設計專屬的詳細指引。

轉換互動模型

在某些使用案例中,也可能需要使用多個互動模型。 例如,您應用程式的「建立流程」使用「雙手和運動控制器」互動模型,但您想要讓現場技術人員使用語音輸入模式。 如果您的經驗的確需要多種互動模型,使用者可能會在轉換模型時遇到困難 (尤其是不熟悉混合實境的使用者)。

注意

我們會繼續撰寫更多適用於開發人員和設計人員的指引,讓他們了解使用多個 MR 互動模型的方式、時機和原因。