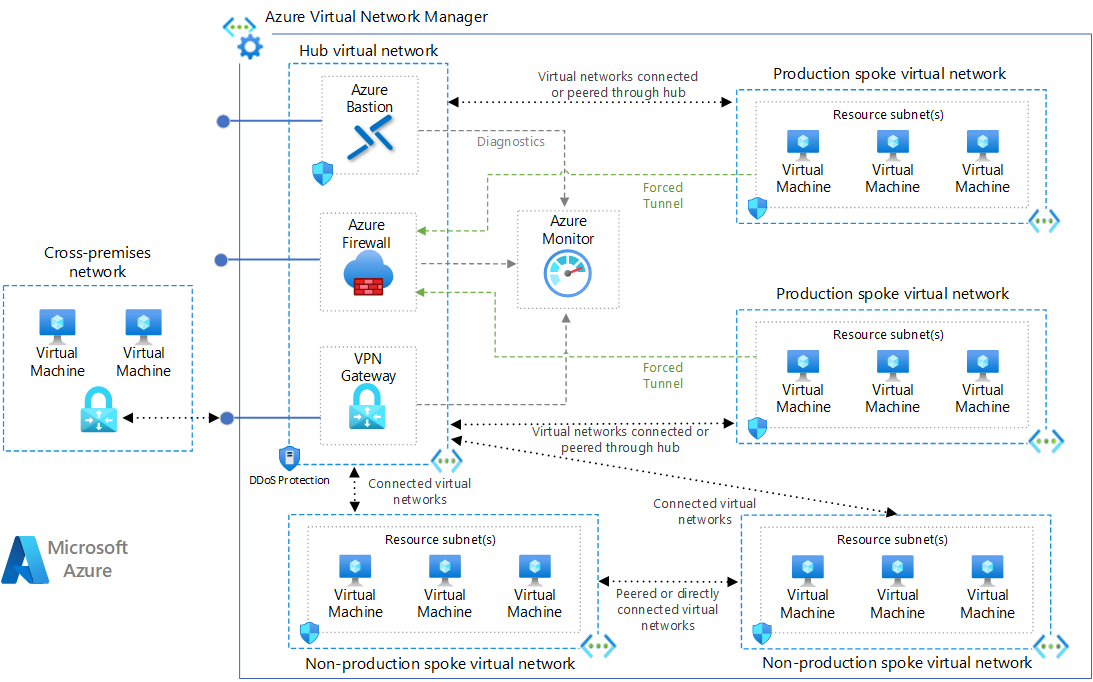

This reference architecture implements a hub-spoke network pattern with customer-managed hub infrastructure components. For a Microsoft-managed hub infrastructure solution, see Hub-spoke network topology with Azure Virtual WAN.

Architecture

Download a Visio file of this architecture.

Workflow

This hub-spoke network configuration uses the following architectural elements:

Hub virtual network. The hub virtual network hosts shared Azure services. Workloads hosted in the spoke virtual networks can use these services. The hub virtual network is the central point of connectivity for cross-premises networks.

Spoke virtual networks. Spoke virtual networks isolate and manage workloads separately in each spoke. Each workload can include multiple tiers, with multiple subnets connected through Azure load balancers. Spokes can exist in different subscriptions and represent different environments, such as Production and Non-production.

Virtual network connectivity. This architecture connects virtual networks by using peering connections or connected groups. Peering connections and connected groups are non-transitive, low-latency connections between virtual networks. Peered or connected virtual networks can exchange traffic over the Azure backbone without needing a router. Azure Virtual Network Manager creates and manages network groups and their connections.

Azure Bastion host. Azure Bastion provides secure connectivity from the Azure portal to virtual machines (VMs) by using your browser. An Azure Bastion host deployed inside an Azure virtual network can access VMs in that virtual network or in connected virtual networks.

Azure Firewall. An Azure Firewall managed firewall instance exists in its own subnet.

Azure VPN Gateway or Azure ExpressRoute gateway. A virtual network gateway enables a virtual network to connect to a virtual private network (VPN) device or Azure ExpressRoute circuit. The gateway provides cross-premises network connectivity. For more information, see Connect an on-premises network to a Microsoft Azure virtual network and Extend an on-premises network using VPN.

VPN device. A VPN device or service provides external connectivity to the cross-premises network. The VPN device can be a hardware device or a software solution such as the Routing and Remote Access Service (RRAS) in Windows Server. For more information, see Validated VPN devices and device configuration guides.

Components

Virtual Network Manager is a management service that helps you group, configure, deploy, and manage virtual networks at scale across Azure subscriptions, regions, and tenants. With Virtual Network Manager, you can define groups of virtual networks to identify and logically segment your virtual networks. You can define and apply connectivity and security configurations across all virtual networks in a network group at once.

Azure Virtual Network is the fundamental building block for private networks in Azure. Virtual Network enables many Azure resources, such as Azure VMs, to securely communicate with each other, cross-premises networks, and the internet.

Azure Bastion is a fully managed service that provides more secure and seamless Remote Desktop Protocol (RDP) and Secure Shell Protocol (SSH) access to VMs without exposing their public IP addresses.

Azure Firewall is a managed cloud-based network security service that protects Virtual Network resources. This stateful firewall service has built-in high availability and unrestricted cloud scalability to help you create, enforce, and log application and network connectivity policies across subscriptions and virtual networks.

VPN Gateway is a specific type of virtual network gateway that sends encrypted traffic between a virtual network and an on-premises location over the public internet. You can also use VPN Gateway to send encrypted traffic between Azure virtual networks over the Microsoft network.

Azure Monitor can collect, analyze, and act on telemetry data from cross-premises environments, including Azure and on-premises. Azure Monitor helps you maximize the performance and availability of your applications and proactively identify problems in seconds.

Scenario details

This reference architecture implements a hub-spoke network pattern where the hub virtual network acts as a central point of connectivity to many spoke virtual networks. The spoke virtual networks connect with the hub and can be used to isolate workloads. You can also enable cross-premises scenarios by using the hub to connect to on-premises networks.

This architecture describes a network pattern with customer-managed hub infrastructure components. For a Microsoft-managed hub infrastructure solution, see Hub-spoke network topology with Azure Virtual WAN.

The benefits of using a hub and spoke configuration include:

- Cost savings

- Overcoming subscription limits

- Workload isolation

For more information, see Hub-and-spoke network topology.

Potential use cases

Typical uses for a hub and spoke architecture include workloads that:

- Have several environments that require shared services. For example, a workload might have development, testing, and production environments. Shared services might include DNS, an intrusion detection system (IDS), Network Time Protocol (NTP), or Active Directory Domain Services (AD DS). Shared services are placed in the hub virtual network, and each environment deploys to a different spoke to maintain isolation.

- Don't require connectivity to each other, but require access to shared services.

- Require central control over security, like a perimeter network (also known as DMZ) firewall in the hub with segregated workload management in each spoke.

- Require central control over connectivity, such as selective connectivity or isolation between spokes of certain environments or workloads.

Recommendations

The following recommendations apply to most scenarios. Follow these recommendations unless you have specific requirements that override them.

Resource groups, subscriptions, and regions

This example solution uses a single Azure resource group. You can also implement the hub and each spoke in different resource groups and subscriptions.

When you peer virtual networks in different subscriptions, you can associate the subscriptions to the same or different Microsoft Entra tenants. This flexibility allows for decentralized management of each workload while maintaining shared services in the hub. See Create a virtual network peering - Resource Manager, different subscriptions, and Microsoft Entra tenants.

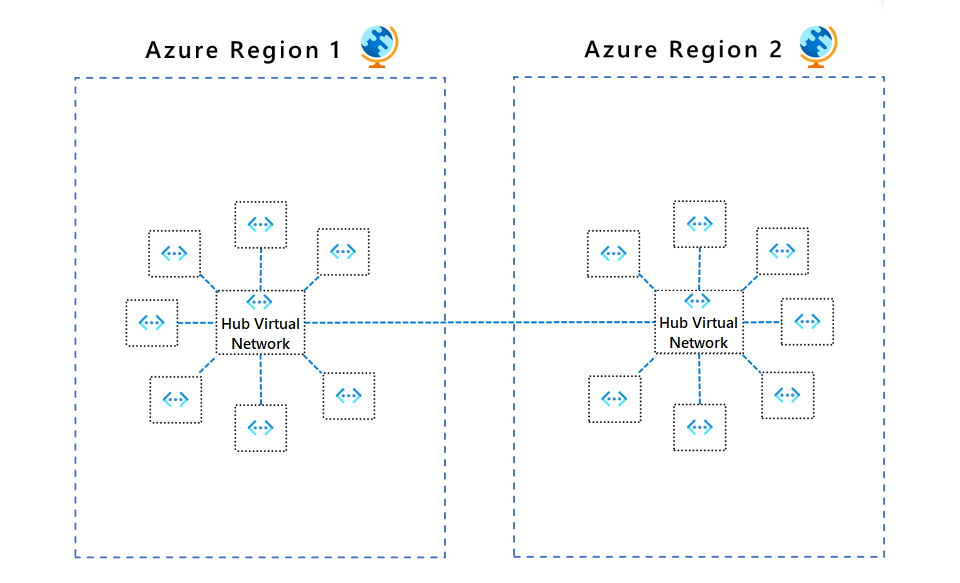

As a general rule, it's best to have at least one hub per region. This configuration helps avoid a single point of failure. For example, it keeps an outage in Region B from affecting Region A resources at the network level.

Virtual network subnets

The following recommendations outline how to configure the subnets on the virtual network.

GatewaySubnet

The virtual network gateway requires this subnet. You can also use a hub-spoke topology without a gateway if you don't need cross-premises network connectivity.

Create a subnet named GatewaySubnet with an address range of at least /27. A /27 address range leaves enough room to scale the gateway configuration later without reaching the gateway size limitations. For more information about setting up the gateway, see the reference architecture for your connection type.

For higher availability, you can use ExpressRoute plus a VPN for failover. See Connect an on-premises network to Azure using ExpressRoute with VPN failover.

AzureFirewallSubnet

Create a subnet named AzureFirewallSubnet with an address range of at least /26. Regardless of scale, the /26 address range is the recommended size and covers any future size limitations. This subnet doesn't support network security groups (NSGs).

Azure Firewall requires this subnet. If you use a partner network virtual appliance (NVA), follow its network requirements.
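The two minimum sizes above can be checked before deployment. The following is a minimal sketch that uses only the Python standard library. The hub address space and subnet prefixes are hypothetical, and the function name is illustrative and not part of any Azure SDK.

```python
import ipaddress

# Minimum sizes from the recommendations above. A larger prefix length
# means a smaller subnet, so these values are upper bounds on prefixlen.
MAX_PREFIX_LENGTH = {"GatewaySubnet": 27, "AzureFirewallSubnet": 26}


def check_hub_subnets(hub_space: str, subnets: dict[str, str]) -> list[str]:
    """Return the problems found in a hub subnet plan (empty if none)."""
    hub = ipaddress.ip_network(hub_space)
    problems = []
    for name, prefix in subnets.items():
        net = ipaddress.ip_network(prefix)
        if not net.subnet_of(hub):
            problems.append(f"{name} {net} is outside hub address space {hub}")
        limit = MAX_PREFIX_LENGTH.get(name)
        if limit is not None and net.prefixlen > limit:
            problems.append(f"{name} {net} is smaller than /{limit}")
    return problems


# Hypothetical plan: the 10.0.0.0/22 hub range and both prefixes are made up.
plan = {"GatewaySubnet": "10.0.0.0/27", "AzureFirewallSubnet": "10.0.1.0/26"}
print(check_hub_subnets("10.0.0.0/22", plan))
```

A check like this could run in a pipeline before the template is deployed, so that an undersized subnet is caught while it's still easy to change.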

Spoke network connectivity

Virtual network peering or connected groups are non-transitive relationships between virtual networks. If you need spoke virtual networks to connect to each other, add a peering connection between those spokes or place them in the same network group.
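To make the non-transitive behavior concrete, here is a small illustrative model in Python. It isn't an Azure API: the virtual network names are hypothetical, and the model deliberately ignores routing through a firewall or NVA in the hub.

```python
def peer(a: str, b: str) -> frozenset[str]:
    """Represent one peering relationship between two virtual networks."""
    return frozenset({a, b})


def can_reach(peerings: set[frozenset[str]], source: str, target: str) -> bool:
    """Peering is non-transitive: traffic flows only over a direct peering."""
    return peer(source, target) in peerings


# Hypothetical topology: two spokes, each peered only with the hub.
peerings = {peer("hub", "spoke1"), peer("hub", "spoke2")}

print(can_reach(peerings, "spoke1", "hub"))     # True
print(can_reach(peerings, "spoke1", "spoke2"))  # False: no direct peering

# Adding a direct peering between the spokes makes them reachable.
peerings.add(peer("spoke1", "spoke2"))
print(can_reach(peerings, "spoke1", "spoke2"))  # True
```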

Spoke connections through Azure Firewall or NVA

The number of virtual network peerings per virtual network is limited. If you have many spokes that need to connect with each other, you could run out of peering connections. Connected groups also have limitations. For more information, see Networking limits and Connected groups limits.
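A rough count shows why direct spoke-to-spoke peering grows quickly. The sketch below is plain arithmetic in Python. The per-virtual-network limit is passed in as a parameter and the value used in the example is a placeholder, so check Networking limits for the current figure.

```python
def peerings_needed(spoke_count: int, full_mesh: bool) -> dict[str, int]:
    """Count peerings for one hub with `spoke_count` spokes.

    With full_mesh=True, every spoke also peers directly with every other spoke.
    """
    if spoke_count < 1:
        raise ValueError("spoke_count must be at least 1")
    mesh_links_per_spoke = spoke_count - 1 if full_mesh else 0
    return {
        "per_hub": spoke_count,                  # one peering to each spoke
        "per_spoke": 1 + mesh_links_per_spoke,   # hub link plus mesh links
        "total_links": spoke_count + spoke_count * mesh_links_per_spoke // 2,
    }


def exceeds_limit(spoke_count: int, full_mesh: bool, per_vnet_limit: int) -> bool:
    """True if any virtual network would need more peerings than the limit."""
    counts = peerings_needed(spoke_count, full_mesh)
    return max(counts["per_hub"], counts["per_spoke"]) > per_vnet_limit


print(peerings_needed(40, full_mesh=False))
print(peerings_needed(40, full_mesh=True))
# The limit of 25 is a placeholder for illustration, not the Azure limit.
print(exceeds_limit(40, full_mesh=True, per_vnet_limit=25))
```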

In this scenario, consider using user-defined routes (UDRs) to force spoke traffic to be sent to Azure Firewall or another NVA that acts as a router at the hub. This change allows the spokes to connect to each other. To support this configuration, you must implement Azure Firewall with forced tunnel configuration enabled. For more information, see Azure Firewall forced tunneling.
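The effect of a UDR can be sketched with a simplified route-selection model. This Python example assumes the usual behavior that the longest matching prefix wins and that a user-defined route is preferred over a system route for the same prefix. The address ranges, the firewall IP address 10.0.1.4, and the class and function names are all hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop_type: str
    next_hop_ip: Optional[str] = None
    user_defined: bool = False


def select_route(routes: list[Route], destination: str) -> Route:
    """Pick the longest matching prefix; a UDR wins a tie with a system route."""
    dest = ipaddress.ip_address(destination)
    matches = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not matches:
        raise LookupError(f"no route matches {destination}")
    return max(
        matches,
        key=lambda r: (ipaddress.ip_network(r.prefix).prefixlen, r.user_defined),
    )


# Simplified system routes as seen from spoke1 (10.1.0.0/16), peered with the hub.
system_routes = [
    Route("10.1.0.0/16", "VnetLocal"),
    Route("10.0.0.0/16", "VNetPeering"),
    Route("0.0.0.0/0", "Internet"),
]
# UDR that sends traffic for spoke2 (10.2.0.0/16) to the firewall in the hub.
user_routes = [Route("10.2.0.0/16", "VirtualAppliance", "10.0.1.4", user_defined=True)]

print(select_route(system_routes, "10.2.0.5").next_hop_type)                # Internet
print(select_route(system_routes + user_routes, "10.2.0.5").next_hop_type)  # VirtualAppliance
```

Without the UDR, the only route that matches the second spoke's address is the default route, so the spokes can't reach each other. With the UDR, the traffic is handed to the appliance in the hub, which can then forward it.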

The topology in this architectural design facilitates egress flows. While Azure Firewall is primarily for egress security, it can also be an ingress point. For more considerations about hub NVA ingress routing, see Firewall and Application Gateway for virtual networks.

Spoke connections to remote networks through a hub gateway

To configure spokes to communicate with remote networks through a hub gateway, you can use virtual network peerings or connected network groups.

To use virtual network peerings, in the virtual network Peering setup:

- Configure the peering connection in the hub to Allow gateway transit.

- Configure the peering connection in each spoke to Use the remote virtual network's gateway.

- Configure all peering connections to Allow forwarded traffic.

For more information, see Create a virtual network peering.
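The three peering settings above can be expressed as a simple checklist. The following Python sketch models each side of a peering as a small record and reports which settings are missing. The field names loosely follow the portal option names, and the peering names are hypothetical. It doesn't call Azure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Peering:
    name: str
    local_role: str  # "hub" or "spoke": the virtual network that owns this side
    allow_forwarded_traffic: bool = False
    allow_gateway_transit: bool = False
    use_remote_gateways: bool = False


def gateway_transit_problems(peerings: list[Peering]) -> list[str]:
    """List the settings that still need to be enabled for gateway transit."""
    problems = []
    for p in peerings:
        if not p.allow_forwarded_traffic:
            problems.append(f"{p.name}: enable Allow forwarded traffic")
        if p.local_role == "hub" and not p.allow_gateway_transit:
            problems.append(f"{p.name}: enable Allow gateway transit")
        if p.local_role == "spoke" and not p.use_remote_gateways:
            problems.append(f"{p.name}: enable Use the remote virtual network's gateway")
    return problems


# Hypothetical peering pair: the spoke side is missing one setting.
pair = [
    Peering("hub-to-spoke1", "hub", allow_forwarded_traffic=True, allow_gateway_transit=True),
    Peering("spoke1-to-hub", "spoke", allow_forwarded_traffic=True),
]
for problem in gateway_transit_problems(pair):
    print(problem)
```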

To use connected network groups:

- In Virtual Network Manager, create a network group and add member virtual networks.

- Create a hub and spoke connectivity configuration.

- For the Spoke network groups, select Hub as gateway.

For more information, see Create a hub and spoke topology with Azure Virtual Network Manager.

Spoke network communications

There are two main ways to allow spoke virtual networks to communicate with each other:

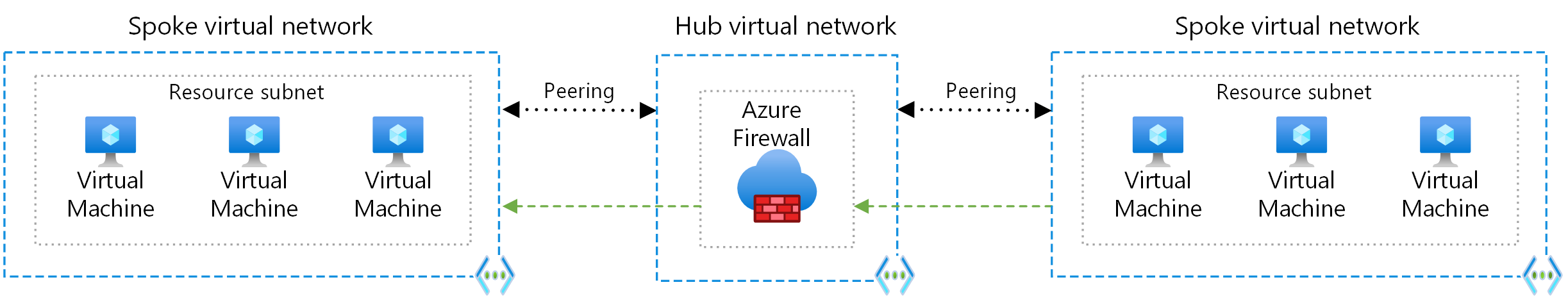

- Communication via an NVA, such as a firewall or router. This method incurs a hop between the two spokes.

- Communication by using virtual network peering or Virtual Network Manager direct connectivity between spokes. This approach doesn't cause a hop between the two spokes and is recommended for minimizing latency.

Communication through an NVA

If you need connectivity between spokes, consider deploying Azure Firewall or another NVA in the hub. Then create routes to forward traffic from a spoke to the firewall or NVA, which can then route to the second spoke. In this scenario, you must configure the peering connections to allow forwarded traffic.

You can also use a VPN gateway to route traffic between spokes, although this choice affects latency and throughput. For configuration details, see Configure VPN gateway transit for virtual network peering.

Evaluate the services you share in the hub to ensure that the hub scales for a larger number of spokes. For instance, if your hub provides firewall services, consider your firewall solution's bandwidth limits when you add multiple spokes. You can move some of these shared services to a second level of hubs.

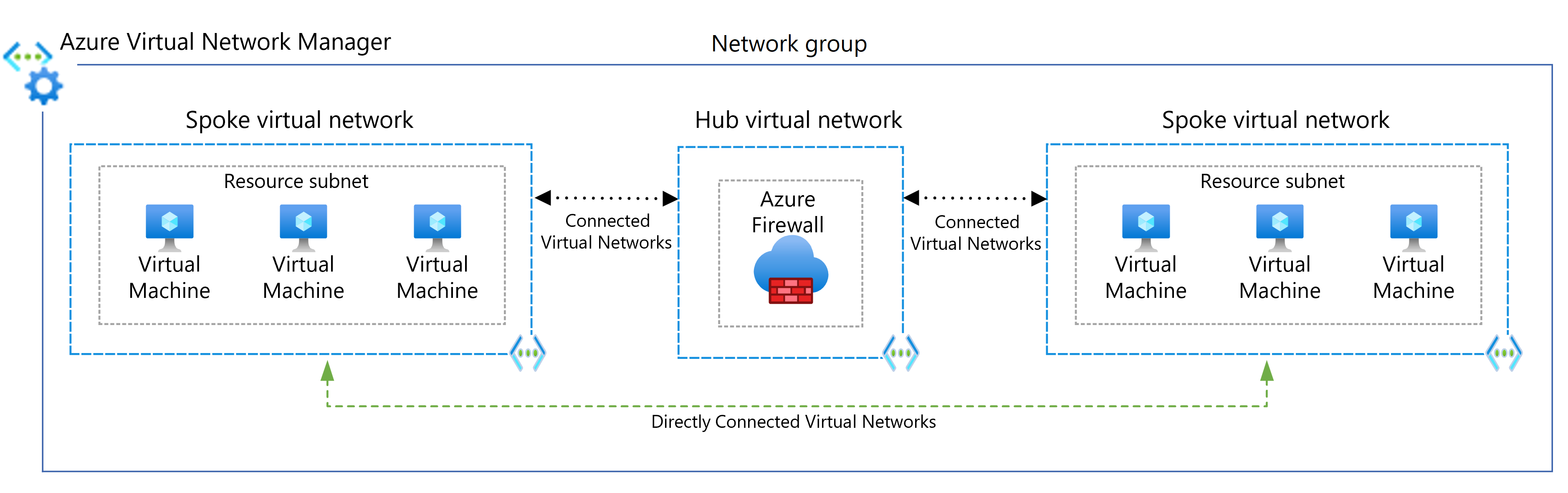

Direct communication between spoke networks

To connect directly between spoke virtual networks without traversing the hub virtual network, you can create peering connections between spokes or enable direct connectivity for the network group. It's best to limit peering or direct connectivity to spoke virtual networks that are part of the same environment and workload.

When you use Virtual Network Manager, you can add spoke virtual networks to network groups manually, or add networks automatically based on conditions you define. For more information, see Spoke-to-spoke networking.

The following diagram illustrates using Virtual Network Manager for direct connectivity between spokes.

Management recommendations

To centrally manage connectivity and security controls, use Virtual Network Manager to create new hub and spoke virtual network topologies or onboard existing topologies. Using Virtual Network Manager ensures that your hub and spoke network topologies are prepared for large-scale future growth across multiple subscriptions, management groups, and regions.

Example Virtual Network Manager use case scenarios include:

- Democratization of spoke virtual network management to groups such as business units or application teams. Democratization can result in a large number of requirements for virtual network-to-virtual network connectivity and network security rules.

- Standardization of multiple replica architectures in multiple Azure regions to ensure a global footprint for applications.

To ensure uniform connectivity and network security rules, you can use network groups to group virtual networks in any subscription, management group, or region under the same Microsoft Entra tenant. You can automatically or manually onboard virtual networks to network groups through dynamic or static membership assignments.

You define discoverability of the virtual networks that Virtual Network Manager manages by using Scopes. This feature provides flexibility for a desired number of network manager instances, which allows further management democratization for virtual network groups.

To connect spoke virtual networks in the same network group to each other, use Virtual Network Manager to implement virtual network peering or direct connectivity. Use the global mesh option to extend mesh direct connectivity to spoke networks in different regions. The following diagram shows global mesh connectivity between regions.

You can associate virtual networks within a network group to a baseline set of security admin rules. Network group security admin rules prevent spoke virtual network owners from overwriting baseline security rules, while letting them independently add their own sets of security rules and NSGs. For an example of using security admin rules in hub and spoke topologies, see Tutorial: Create a secured hub and spoke network.

To facilitate a controlled rollout of network groups, connectivity, and security rules, Virtual Network Manager configuration deployments help you safely release potentially breaking configuration changes to hub and spoke environments. For more information, see Configuration deployments in Azure Virtual Network Manager.

To get started with Virtual Network Manager, see Create a hub and spoke topology with Azure Virtual Network Manager.

Considerations

These considerations implement the pillars of the Azure Well-Architected Framework, which is a set of guiding tenets that can be used to improve the quality of a workload. For more information, see Microsoft Azure Well-Architected Framework.

Security

Security provides assurances against deliberate attacks and the abuse of your valuable data and systems. For more information, see Overview of the security pillar.

To ensure a baseline set of security rules, make sure to associate security admin rules with virtual networks in network groups. Security admin rules take precedence over and are evaluated before NSG rules. Like NSG rules, security admin rules support prioritization, service tags, and L3-L4 protocols. For more information, see Security admin rules in Virtual Network Manager.
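The evaluation order can be illustrated with a deliberately simplified model. This Python sketch matches rules on destination port only. It assumes three security admin rule actions (Allow, AlwaysAllow, and Deny), where Allow hands the decision on to the NSG, and it assumes an NSG default of Deny when nothing matches. Treat both assumptions as simplifications and confirm the details in Security admin rules in Virtual Network Manager.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    priority: int  # a lower number is evaluated first
    port: int
    action: str


def first_match(rules: list[Rule], port: int) -> Optional[Rule]:
    """Return the highest-priority rule that matches the port, if any."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.port == port:
            return rule
    return None


def evaluate(admin_rules: list[Rule], nsg_rules: list[Rule], port: int,
             nsg_default: str = "Deny") -> str:
    """Evaluate security admin rules first, then NSG rules."""
    admin = first_match(admin_rules, port)
    if admin is not None:
        if admin.action == "Deny":
            return "Deny"    # NSG rules are never consulted
        if admin.action == "AlwaysAllow":
            return "Allow"   # NSG rules can't override this
        # A plain "Allow" falls through to NSG evaluation.
    nsg = first_match(nsg_rules, port)
    return nsg.action if nsg is not None else nsg_default


# Hypothetical baseline owned by the central team.
admin_rules = [Rule(10, 3389, "Deny"), Rule(20, 443, "AlwaysAllow")]
# Hypothetical NSG rules owned by the spoke team.
nsg_rules = [Rule(100, 3389, "Allow"), Rule(110, 443, "Deny"), Rule(120, 8080, "Allow")]

for port in (3389, 443, 8080, 22):
    print(port, evaluate(admin_rules, nsg_rules, port))
```

In this model, the spoke team's NSG rule that allows port 3389 has no effect, because the baseline Deny rule is evaluated first.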

Use Virtual Network Manager deployments to facilitate controlled rollout of potentially breaking changes to network group security rules.

Azure DDoS Protection, combined with application-design best practices, provides enhanced mitigation features that strengthen your defense against DDoS attacks. You should enable Azure DDoS Protection on any perimeter virtual network.

Cost optimization

Cost optimization is about ways to reduce unnecessary expenses and improve operational efficiencies. For more information, see Overview of the cost optimization pillar.

Consider the following cost-related factors when you deploy and manage hub and spoke networks. For more information, see Virtual network pricing.

Azure Firewall costs

This architecture deploys an Azure Firewall instance in the hub network. Using an Azure Firewall deployment as a shared solution consumed by multiple workloads can significantly reduce cloud costs compared to other NVAs. For more information, see Azure Firewall vs. network virtual appliances.

To use all deployed resources effectively, choose the right Azure Firewall size. Decide what features you need and which tier best suits your current set of workloads. To learn about the available Azure Firewall SKUs, see What is Azure Firewall?

Private IP address costs

You can use private IP addresses to route traffic between peered virtual networks or between networks in connected groups. The following cost considerations apply:

- Ingress and egress traffic is charged at both ends of the peered or connected networks. For instance, data transfer from a virtual network in zone 1 to another virtual network in zone 2 incurs an outbound transfer rate for zone 1 and an inbound rate for zone 2.

- Different zones have different transfer rates.
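As a worked example of charging at both ends, the following Python sketch adds the outbound and inbound rates for a one-way transfer. The rates are placeholders chosen for easy arithmetic, not Azure prices. See Virtual network pricing for actual figures.

```python
from decimal import Decimal


def peering_transfer_cost(gigabytes: str, outbound_rate: str, inbound_rate: str) -> Decimal:
    """Cost of moving data one way across a peering, charged at both ends.

    Rates are per gigabyte. Values are passed as strings so that Decimal
    keeps them exact.
    """
    return Decimal(gigabytes) * (Decimal(outbound_rate) + Decimal(inbound_rate))


# Placeholder rates: 0.02 per GB outbound from zone 1, 0.03 per GB inbound to zone 2.
cost = peering_transfer_cost("500", "0.02", "0.03")
print(cost)
```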

Plan for IP addressing based on your peering requirements, and make sure the address space doesn't overlap across cross-premises locations and Azure locations.
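An overlap check is straightforward to automate during planning. This minimal Python sketch compares every pair of address spaces by using the standard ipaddress module. The network names and ranges are hypothetical.

```python
import ipaddress
from itertools import combinations


def find_overlaps(spaces: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named address spaces that overlap."""
    nets = {name: ipaddress.ip_network(prefix) for name, prefix in spaces.items()}
    return [
        (a, b)
        for (a, net_a), (b, net_b) in combinations(nets.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical plan: the on-premises range collides with spoke1.
address_plan = {
    "hub": "10.0.0.0/16",
    "spoke1": "10.1.0.0/16",
    "spoke2": "10.2.0.0/16",
    "on-premises": "10.1.128.0/17",
}
print(find_overlaps(address_plan))  # [('spoke1', 'on-premises')]
```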

Operational excellence

Operational excellence covers the operations processes that deploy an application and keep it running in production. For more information, see Overview of the operational excellence pillar.

Use Azure Network Watcher to monitor and troubleshoot network components with the following tools:

- Traffic Analytics shows you the systems in your virtual networks that generate the most traffic. You can visually identify bottlenecks before they become problems.

- Network Performance Monitor monitors information about ExpressRoute circuits.

- VPN diagnostics helps troubleshoot site-to-site VPN connections that connect your applications to on-premises users.

Also consider enabling Azure Firewall diagnostic logging to get better insights into the DNS requests and the allow/deny results in the logs.

Deploy this scenario

This deployment includes one hub virtual network and two connected spokes, and also deploys an Azure Firewall instance and Azure Bastion host. Optionally, the deployment can include VMs in the first spoke network and a VPN gateway.

You can choose between virtual network peering or Virtual Network Manager connected groups to create the network connections. Each method has several deployment options.

Use virtual network peering

Run the following command to create a resource group named hub-spoke in the eastus region for the deployment.

    az group create --name hub-spoke --location eastus

Run the following command to download the Bicep template.

    curl https://raw.githubusercontent.com/mspnp/samples/main/solutions/azure-hub-spoke/bicep/main.bicep > main.bicep

Run the following command to deploy the hub and spoke network configuration, virtual network peerings between the hub and spokes, and an Azure Bastion host. When prompted, enter a user name and password. You can use this user name and password to access VMs in the spoke networks.

    az deployment group create --resource-group hub-spoke --template-file main.bicep

For detailed information and extra deployment options, see the Hub and Spoke ARM and Bicep templates that deploy this solution.

Use Virtual Network Manager connected groups

Run the following command to create a resource group for the deployment.

    az group create --name hub-spoke --location eastus

Run the following command to download the Bicep template.

    curl https://raw.githubusercontent.com/mspnp/samples/main/solutions/azure-hub-spoke-connected-group/bicep/main.bicep > main.bicep

Run the following commands to download all the needed modules to a new directory.

    mkdir modules
    curl https://raw.githubusercontent.com/mspnp/samples/main/solutions/azure-hub-spoke-connected-group/bicep/modules/avnm.bicep > modules/avnm.bicep
    curl https://raw.githubusercontent.com/mspnp/samples/main/solutions/azure-hub-spoke-connected-group/bicep/modules/avnmDeploymentScript.bicep > modules/avnmDeploymentScript.bicep
    curl https://raw.githubusercontent.com/mspnp/samples/main/solutions/azure-hub-spoke-connected-group/bicep/modules/hub.bicep > modules/hub.bicep
    curl https://raw.githubusercontent.com/mspnp/samples/main/solutions/azure-hub-spoke-connected-group/bicep/modules/spoke.bicep > modules/spoke.bicep

Run the following command to deploy the hub and spoke network configuration, virtual network connections between the hub and spokes, and a Bastion host. When prompted, enter a user name and password. You can use this user name and password to access VMs in the spoke networks.

    az deployment group create --resource-group hub-spoke --template-file main.bicep

For detailed information and extra deployment options, see the Hub and Spoke ARM and Bicep templates that deploy this solution.

Contributors

This article is maintained by Microsoft. It was originally written by the following contributors.

Principal author:

Alejandra Palacios | Senior Customer Engineer

Other contributors:

- Matthew Bratschun | Customer Engineer

- Jay Li | Senior Product Manager

- Telmo Sampaio | Principal Service Engineering Manager


Next steps

To learn about secured virtual hubs and the associated security and routing policies that Azure Firewall Manager configures, see What is a secured virtual hub?

The hub in a hub-spoke network topology is the main component of a connectivity subscription in an Azure landing zone. For more information about building large-scale networks in Azure with routing and security managed by the customer or by Microsoft, see Define an Azure network topology.

Related resources

Explore the following related architectures:

- Azure firewall architecture guide

- Firewall and Application Gateway for virtual networks

- Troubleshoot a hybrid VPN connection

- Spoke-to-spoke networking

- Hybrid connection

- Connect standalone servers by using Azure Network Adapter

- Secure and govern workloads with network level segmentation

- Baseline architecture for an Azure Kubernetes Service (AKS) cluster