What is Azure Content Moderator?

Important

Azure Content Moderator is being deprecated in February 2024 and will be retired by February 2027. It is being replaced by Azure AI Content Safety, which offers advanced AI features and enhanced performance.

Azure AI Content Safety is a comprehensive solution designed to detect harmful user-generated and AI-generated content in applications and services. Azure AI Content Safety is suitable for many scenarios such as online marketplaces, gaming companies, social messaging platforms, enterprise media companies, and K-12 education solution providers. Here's an overview of its features and capabilities:

- Text and Image Detection APIs: Scan text and images for sexual content, violence, hate, and self-harm with multiple severity levels.

- Content Safety Studio: An online tool designed to handle potentially offensive, risky, or undesirable content using our latest content moderation ML models. It provides templates and customized workflows that enable users to build their own content moderation systems.

- Language support: Azure AI Content Safety supports more than 100 languages and is specifically trained on English, German, Japanese, Spanish, French, Italian, Portuguese, and Chinese.
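The detection APIs return a severity level for each harm category, and an application usually compares those levels against limits it chooses itself. The following is a minimal Python sketch that assumes the response shape of the Content Safety text and image analyze REST operations: a `categoriesAnalysis` array of `category`/`severity` pairs with category names `Hate`, `SelfHarm`, `Sexual`, and `Violence`. The `flagged_categories` helper and the threshold values are hypothetical and only illustrative, so confirm the field names against the current API reference.

```python
from typing import Dict, List

# Hypothetical per-category limits (illustrative only); tune them to your own policy.
DEFAULT_THRESHOLDS: Dict[str, int] = {
    "Hate": 2,
    "SelfHarm": 2,
    "Sexual": 2,
    "Violence": 4,
}


def flagged_categories(result: dict,
                       thresholds: Dict[str, int] = DEFAULT_THRESHOLDS) -> List[str]:
    """Return the categories whose severity meets or exceeds its threshold.

    Assumes `result` is the parsed JSON body of an analyze call containing a
    `categoriesAnalysis` list of {"category": ..., "severity": ...} items.
    """
    flagged = []
    for item in result.get("categoriesAnalysis", []):
        category = item.get("category")
        severity = item.get("severity") or 0
        limit = thresholds.get(category)
        # Categories without a configured limit are ignored rather than blocked.
        if limit is not None and severity >= limit:
            flagged.append(category)
    return flagged
```

Lowering a threshold blocks more content at the cost of more false positives, which is why the limits are kept per category instead of as one global value.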

Azure AI Content Safety provides a robust and flexible solution for your content moderation needs. By switching from Content Moderator to Azure AI Content Safety, you can take advantage of the latest tools and technologies and moderate content according to your own policies.

Learn more about Azure AI Content Safety and explore how it can elevate your content moderation strategy.

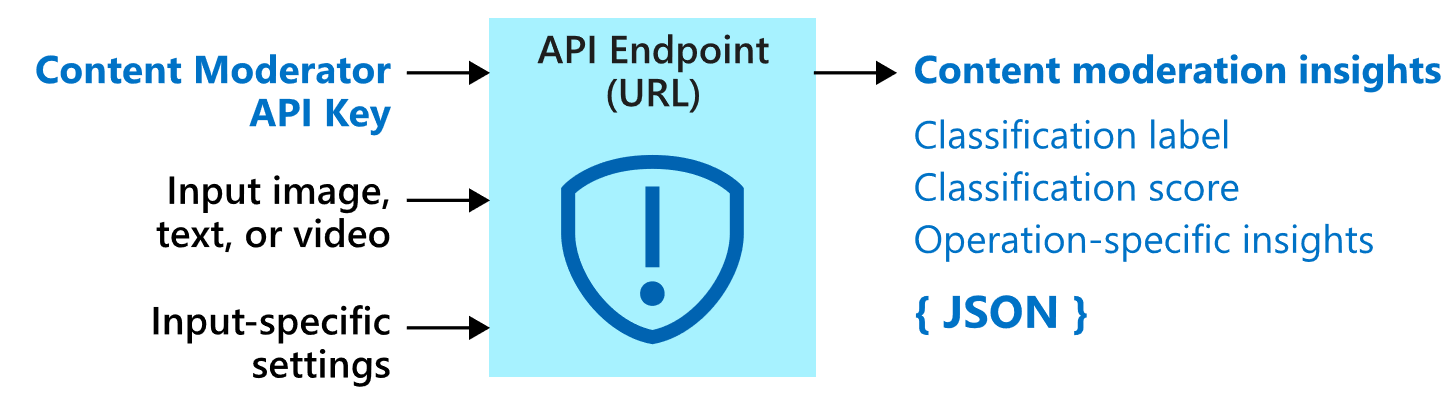

Azure Content Moderator is an AI service that lets you handle content that is potentially offensive, risky, or otherwise undesirable. It includes an AI-powered content moderation service that scans text, images, and videos and applies content flags automatically.

You may want to build content filtering software into your app to comply with regulations or maintain the intended environment for your users.

This documentation contains the following article types:

- Quickstarts are getting-started instructions to guide you through making requests to the service.

- How-to guides contain instructions for using the service in more specific or customized ways.

- Concepts provide in-depth explanations of the service functionality and features.

For a more structured approach, follow a training module for Content Moderator.

Where it's used

The following are a few scenarios in which a software developer or team would require a content moderation service:

- Online marketplaces that moderate product catalogs and other user-generated content.

- Gaming companies that moderate user-generated game artifacts and chat rooms.

- Social messaging platforms that moderate images, text, and videos added by their users.

- Enterprise media companies that implement centralized moderation for their content.

- K-12 education solution providers that filter out content that is inappropriate for students and educators.

Important

You cannot use Content Moderator to detect illegal child exploitation images. However, qualified organizations can use the PhotoDNA Cloud Service to screen for this type of content.

What it includes

The Content Moderator service consists of several web service APIs available through both REST calls and a .NET SDK.
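Because the APIs are plain REST calls, any HTTP client can use them. The sketch below uses only the Python standard library to build (but not send) a request to the text Screen operation. The URL path, the `language`, `classify`, and `PII` query parameters, and the `Ocp-Apim-Subscription-Key` header are assumed from the v1.0 REST reference and should be checked before use; the `build_text_screen_request` helper and the placeholder endpoint are hypothetical.

```python
import urllib.parse
import urllib.request


def build_text_screen_request(endpoint: str, key: str, text: str,
                              language: str = "eng", classify: bool = True,
                              pii: bool = True) -> urllib.request.Request:
    """Build a POST request for the (assumed) v1.0 text Screen operation."""
    # Query parameters ask the service to classify the text and detect personal data.
    query = urllib.parse.urlencode({
        "language": language,
        "classify": str(classify),
        "PII": str(pii),
    })
    url = (f"{endpoint.rstrip('/')}"
           f"/contentmoderator/moderate/v1.0/ProcessText/Screen?{query}")
    return urllib.request.Request(
        url,
        data=text.encode("utf-8"),  # the raw text is sent as the request body
        headers={
            "Content-Type": "text/plain",
            "Ocp-Apim-Subscription-Key": key,  # resource key from the Azure portal
        },
        method="POST",
    )
```

To send the request, you would pass it to `urllib.request.urlopen` and decode the JSON body with `json.loads`, substituting your own resource endpoint (for example, `https://<your-resource>.cognitiveservices.azure.com`) and key.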

Moderation APIs

The Content Moderator service includes Moderation APIs, which check content for material that is potentially inappropriate or objectionable.

The following table describes the different types of moderation APIs.

| API group | Description |
|---|---|
| Text moderation | Scans text for offensive content, sexually explicit or suggestive content, profanity, and personal data. |
| Custom term lists | Scans text against a custom list of terms along with the built-in terms. Use custom lists to block or allow content according to your own content policies. |
| Image moderation | Scans images for adult or racy content, detects text in images with the Optical Character Recognition (OCR) capability, and detects faces. |
| Custom image lists | Scans images against a custom list of images. Use custom image lists to filter out instances of commonly recurring content that you don't want to classify again. |
| Video moderation | Scans videos for adult or racy content and returns time markers for that content. |
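A text moderation response combines several of the signals in the table: a classification with a review recommendation, matched profanity terms (including matches from custom term lists), and detected personal data. The following Python sketch reduces such a response to the fields a moderation workflow typically acts on. It assumes the JSON shape of the v1.0 text Screen operation (`Classification.ReviewRecommended`, a `Terms` array whose items carry `Term` and `ListId`, and a `PII` object keyed by data type), and it assumes built-in matches report a `ListId` of 0 or none; the `summarize_screen_result` helper is hypothetical.

```python
def summarize_screen_result(result: dict) -> dict:
    """Collect the moderation signals from an (assumed) text Screen response."""
    # Fields can be missing or null when nothing was detected, so default them.
    classification = result.get("Classification") or {}
    terms = result.get("Terms") or []
    pii = result.get("PII") or {}
    return {
        "review_recommended": bool(classification.get("ReviewRecommended", False)),
        "matched_terms": [t.get("Term") for t in terms],
        # Assumption: only matches from a custom term list carry a nonzero ListId.
        "custom_list_hits": [t.get("Term") for t in terms if t.get("ListId")],
        # Report only the personal-data types that actually had matches.
        "pii_types": sorted(k for k, v in pii.items() if v),
    }
```

Keeping custom-list hits separate from built-in matches lets an application apply its own block or allow policy without reclassifying content the service already flagged.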

Data privacy and security

As with all of the Azure AI services, developers using the Content Moderator service should be aware of Microsoft's policies on customer data. See the Azure AI services page on the Microsoft Trust Center to learn more.

Next steps

- Complete a client library or REST API quickstart to implement the basic scenarios in code.