Quickstart: Azure Blob Storage client library for Node.js

Note

The Build from scratch option walks you step by step through the process of creating a new project, installing packages, writing the code, and running a basic console app. This approach is recommended if you want to understand all the details involved in creating an app that connects to Azure Blob Storage. If you prefer to automate deployment tasks and start with a completed project, choose Start with a template.

Note

The Start with a template option uses the Azure Developer CLI to automate deployment tasks and starts you off with a completed project. This approach is recommended if you want to explore the code as quickly as possible without going through the setup tasks. If you prefer step by step instructions to build the app, choose Build from scratch.

Get started with the Azure Blob Storage client library for Node.js to manage blobs and containers.

In this article, you follow steps to install the package and try out example code for basic tasks.

In this article, you use the Azure Developer CLI to deploy Azure resources and run a completed console app with just a few commands.

API reference | Library source code | Package (npm) | Samples

Prerequisites

- Azure account with an active subscription - create an account for free

- Azure Storage account - Create a storage account

- Node.js LTS

- Azure subscription - create one for free

- Node.js LTS

- Azure Developer CLI

Setting up

This section walks you through preparing a project to work with the Azure Blob Storage client library for Node.js.

Create the Node.js project

Create a JavaScript application named blob-quickstart.

In a console window (such as cmd, PowerShell, or Bash), create a new directory for the project:

mkdir blob-quickstart

Switch to the newly created blob-quickstart directory:

cd blob-quickstart

Create a package.json file:

npm init -y

Open the project in Visual Studio Code:

code .

Install the packages

From the project directory, install the following packages using the npm install command.

Install the Azure Storage npm package:

npm install @azure/storage-blob

Install the Azure Identity npm package for a passwordless connection:

npm install @azure/identity

Install other dependencies used in this quickstart:

npm install uuid dotenv

Set up the app framework

From the project directory:

Create a new file named index.js.

Copy the following code into the file:

const { BlobServiceClient } = require("@azure/storage-blob");
const { v1: uuidv1 } = require("uuid");
require("dotenv").config();

async function main() {
  try {
    console.log("Azure Blob storage v12 - JavaScript quickstart sample");

    // Quick start code goes here
  } catch (err) {
    console.error(`Error: ${err.message}`);
  }
}

main()
  .then(() => console.log("Done"))
  .catch((ex) => console.log(ex.message));

With Azure Developer CLI installed, you can create a storage account and run the sample code with just a few commands. You can run the project in your local development environment, or in a DevContainer.

Initialize the Azure Developer CLI template and deploy resources

From an empty directory, follow these steps to initialize the azd template, create Azure resources, and get started with the code:

Clone the quickstart repository assets from GitHub and initialize the template locally:

azd init --template blob-storage-quickstart-nodejs

You'll be prompted for the following information:

- Environment name: This value is used as a prefix for all Azure resources created by Azure Developer CLI. The name must be unique across all Azure subscriptions and must be between 3 and 24 characters long. The name can contain numbers and lowercase letters only.

Sign in to Azure:

azd auth login

Provision and deploy the resources to Azure:

azd up

You'll be prompted for the following information:

- Subscription: The Azure subscription that your resources are deployed to.

- Location: The Azure region where your resources are deployed.

The deployment might take a few minutes to complete. The output from the azd up command includes the name of the newly created storage account, which you'll need later to run the code.

Run the sample code

At this point, the resources are deployed to Azure and the code is almost ready to run. Follow these steps to install packages, update the name of the storage account in the code, and run the sample console app:

- Install packages: Navigate to the local blob-quickstart directory. Install packages for the Azure Blob Storage and Azure Identity client libraries, along with other packages used in the quickstart, using the following command:

npm install @azure/storage-blob @azure/identity uuid dotenv

- Update the storage account name: In the local blob-quickstart directory, edit the file named index.js. Find the <storage-account-name> placeholder and replace it with the actual name of the storage account created by the azd up command. Save the changes.

- Run the project: Execute the following command to run the app:

node index.js

- Observe the output: This app creates a container and uploads a text string as a blob to the container. The example then lists the blobs in the container, downloads the blob, and displays the blob contents. The app then deletes the container and all its blobs.

To learn more about how the sample code works, see Code examples.

When you're finished testing the code, see the Clean up resources section to delete the resources created by the azd up command.

Object model

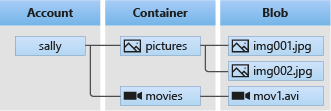

Azure Blob storage is optimized for storing massive amounts of unstructured data. Unstructured data is data that doesn't adhere to a particular data model or definition, such as text or binary data. Blob storage offers three types of resources:

- The storage account

- A container in the storage account

- A blob in the container

The following diagram shows the relationship between these resources.

Use the following JavaScript classes to interact with these resources:

- BlobServiceClient: The BlobServiceClient class allows you to manipulate Azure Storage resources and blob containers.

- ContainerClient: The ContainerClient class allows you to manipulate Azure Storage containers and their blobs.

- BlobClient: The BlobClient class allows you to manipulate Azure Storage blobs.
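The three resource levels also appear as nested segments of a blob's URL, which can help when reading the url values that the clients expose. The following is a minimal sketch rather than part of the client library: buildBlobUrls is a hypothetical helper, the account, container, and blob names are placeholders, and it assumes the public endpoint suffix blob.core.windows.net used elsewhere in this quickstart.

```javascript
// Hypothetical helper (not part of @azure/storage-blob): shows how the
// account, container, and blob levels nest inside a blob URL.
function buildBlobUrls(accountName, containerName, blobName) {
  const accountUrl = `https://${accountName}.blob.core.windows.net`;
  const containerUrl = `${accountUrl}/${containerName}`;
  // Escape each path segment, keeping "/" so virtual directories stay readable.
  const encodedBlobName = blobName.split("/").map(encodeURIComponent).join("/");
  const blobUrl = `${containerUrl}/${encodedBlobName}`;
  return { accountUrl, containerUrl, blobUrl };
}

// Placeholder names for illustration only.
const urls = buildBlobUrls("mystorageaccount", "quickstart-container", "hello world.txt");
console.log(urls.accountUrl);
console.log(urls.containerUrl);
console.log(urls.blobUrl);
```

In application code you would normally read these values from the url property of each client instead of building them by hand.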

Code examples

These example code snippets show you how to do the following tasks with the Azure Blob Storage client library for JavaScript:

- Authenticate to Azure and authorize access to blob data

- Create a container

- Upload blobs to a container

- List the blobs in a container

- Download blobs

- Delete a container

Sample code is also available on GitHub.

Note

The Azure Developer CLI template includes a file with sample code already in place. The following examples provide detail for each part of the sample code. The template implements the recommended passwordless authentication method, as described in the Authenticate to Azure section. The connection string method is shown as an alternative, but isn't used in the template and isn't recommended for production code.

Authenticate to Azure and authorize access to blob data

Application requests to Azure Blob Storage must be authorized. Using the DefaultAzureCredential class provided by the Azure Identity client library is the recommended approach for implementing passwordless connections to Azure services in your code, including Blob Storage.

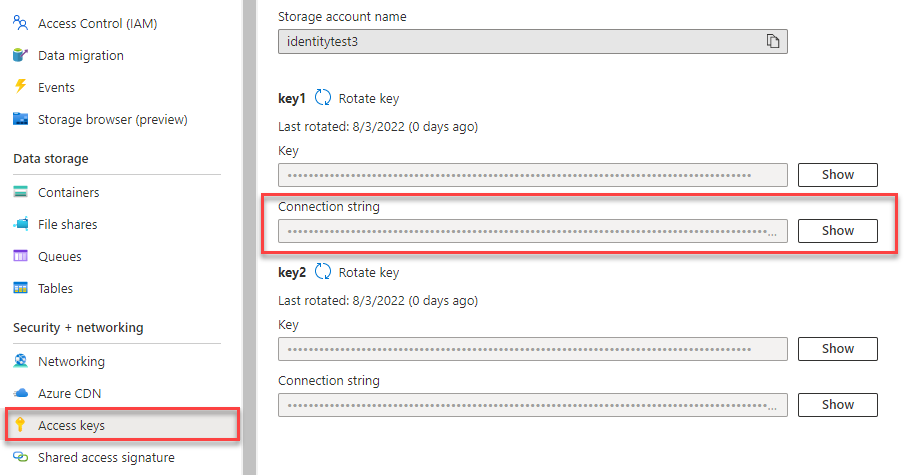

You can also authorize requests to Azure Blob Storage by using the account access key. However, this approach should be used with caution. Developers must be diligent to never expose the access key in an unsecure location. Anyone who has the access key is able to authorize requests against the storage account, and effectively has access to all the data. DefaultAzureCredential offers improved management and security benefits over the account key to allow passwordless authentication. Both options are demonstrated in the following example.

DefaultAzureCredential supports multiple authentication methods and determines which method should be used at runtime. This approach enables your app to use different authentication methods in different environments (local vs. production) without implementing environment-specific code.

The order and locations in which DefaultAzureCredential looks for credentials can be found in the Azure Identity library overview.

For example, your app can authenticate using your Azure CLI sign-in credentials when developing locally. Your app can then use a managed identity once it's deployed to Azure. No code changes are required for this transition.
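To make the idea of choosing an authentication method at runtime more concrete, the following sketch tries a list of credential sources in order and uses the first one that succeeds. This is an illustration of the general pattern only. It is not the Azure Identity implementation, and getTokenFromChain, managedIdentity, and developerCli are hypothetical stand-ins that never contact Azure.

```javascript
// Illustrative only: NOT the Azure Identity implementation.
// Each stand-in "credential" either resolves to a token string or throws.
async function getTokenFromChain(credentials) {
  const failures = [];
  for (const credential of credentials) {
    try {
      const token = await credential.getToken();
      return { source: credential.name, token };
    } catch (err) {
      // Remember why this source was skipped, then try the next one.
      failures.push(`${credential.name}: ${err.message}`);
    }
  }
  throw new Error(`No credential succeeded:\n${failures.join("\n")}`);
}

// Hypothetical stand-ins for a production source and a local source.
const managedIdentity = {
  name: "managed identity",
  getToken: async () => {
    throw new Error("not running in Azure");
  },
};
const developerCli = {
  name: "developer CLI sign-in",
  getToken: async () => "fake-token-for-illustration",
};

getTokenFromChain([managedIdentity, developerCli]).then((result) => {
  console.log(`Authenticated using: ${result.source}`);
});
```

On a local workstation the first stand-in fails and the second succeeds, which mirrors how the same app code can pick up different credentials in different environments.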

Assign roles to your Microsoft Entra user account

When developing locally, make sure that the user account that is accessing blob data has the correct permissions. You'll need Storage Blob Data Contributor to read and write blob data. To assign yourself this role, you'll need to be assigned the User Access Administrator role, or another role that includes the Microsoft.Authorization/roleAssignments/write action. You can assign Azure RBAC roles to a user using the Azure portal, Azure CLI, or Azure PowerShell. You can learn more about the available scopes for role assignments on the scope overview page.

In this scenario, you'll assign permissions to your user account, scoped to the storage account, to follow the Principle of Least Privilege. This practice gives users only the minimum permissions needed and creates more secure production environments.

The following example will assign the Storage Blob Data Contributor role to your user account, which provides both read and write access to blob data in your storage account.

Important

In most cases it will take a minute or two for the role assignment to propagate in Azure, but in rare cases it may take up to eight minutes. If you receive authentication errors when you first run your code, wait a few moments and try again.
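If you'd rather not rerun the app by hand while the role assignment propagates, one option is to wrap the first storage call in a small retry loop. The following is a minimal sketch under stated assumptions: retryWithDelay is a hypothetical helper, and the attempt count, delay, and shouldRetry predicate are illustrative defaults rather than values recommended by Azure.

```javascript
// Minimal retry sketch. `operation` is any async function. The defaults
// below are illustrative assumptions, not Azure recommendations.
async function retryWithDelay(
  operation,
  { attempts = 5, delayMs = 30000, shouldRetry = () => true } = {}
) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await operation();
    } catch (err) {
      lastError = err;
      // Stop early on the last attempt or when the error is not retryable.
      if (attempt === attempts || !shouldRetry(err)) break;
      console.log(`Attempt ${attempt} failed (${err.message}); retrying...`);
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw lastError;
}
```

Inside main, a call such as retryWithDelay(() => containerClient.create()) would retry container creation. In real code you would likely pass a shouldRetry function that returns true only for authorization failures, so that unrelated errors surface immediately.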

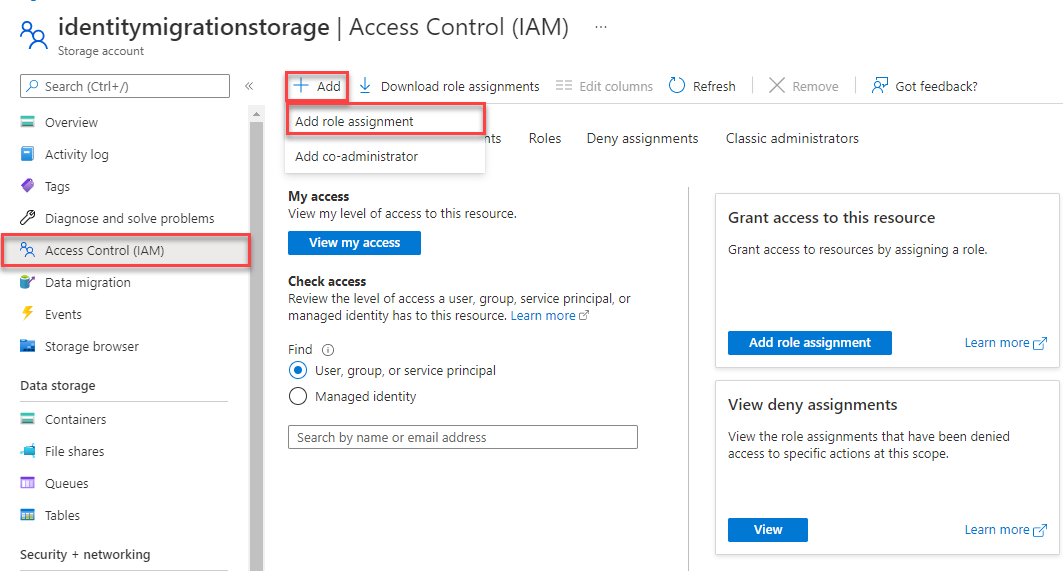

In the Azure portal, locate your storage account using the main search bar or left navigation.

On the storage account overview page, select Access control (IAM) from the left-hand menu.

On the Access control (IAM) page, select the Role assignments tab.

Select + Add from the top menu and then Add role assignment from the resulting drop-down menu.

Use the search box to filter the results to the desired role. For this example, search for Storage Blob Data Contributor and select the matching result and then choose Next.

Under Assign access to, select User, group, or service principal, and then choose + Select members.

In the dialog, search for your Microsoft Entra username (usually your user@domain email address) and then choose Select at the bottom of the dialog.

Select Review + assign to go to the final page, and then Review + assign again to complete the process.

Sign in and connect your app code to Azure using DefaultAzureCredential

You can authorize access to data in your storage account using the following steps:

Make sure you're authenticated with the same Microsoft Entra account you assigned the role to on your storage account. You can authenticate via the Azure CLI, Visual Studio Code, or Azure PowerShell.

Sign-in to Azure through the Azure CLI using the following command:

az loginTo use

DefaultAzureCredential, make sure that the @azure\identity package is installed, and the class is imported:const { DefaultAzureCredential } = require('@azure/identity');Add this code inside the

tryblock. When the code runs on your local workstation,DefaultAzureCredentialuses the developer credentials of the prioritized tool you're logged into to authenticate to Azure. Examples of these tools include Azure CLI or Visual Studio Code.const accountName = process.env.AZURE_STORAGE_ACCOUNT_NAME; if (!accountName) throw Error('Azure Storage accountName not found'); const blobServiceClient = new BlobServiceClient( `https://${accountName}.blob.core.windows.net`, new DefaultAzureCredential() );Make sure to update the storage account name,

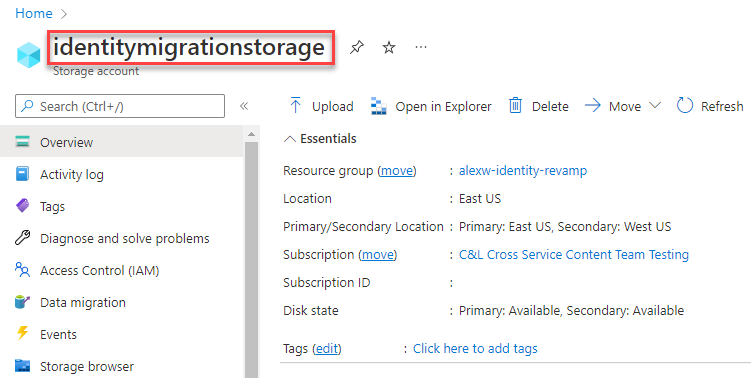

AZURE_STORAGE_ACCOUNT_NAME, in the.envfile or your environment's variables. The storage account name can be found on the overview page of the Azure portal.
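A common source of confusing connection errors is an account name setting that is empty, still contains a placeholder, or holds a full URL instead of just the name. The following sketch validates the value before it is interpolated into the service URL. The helper name resolveAccountName is hypothetical. The pattern reflects the storage account naming rule of 3 to 24 lowercase letters and numbers.

```javascript
// Hypothetical helper: reads and validates the storage account name from an
// environment-like object (defaults to process.env).
function resolveAccountName(env = process.env) {
  const accountName = (env.AZURE_STORAGE_ACCOUNT_NAME || "").trim();
  if (!accountName) {
    throw new Error("AZURE_STORAGE_ACCOUNT_NAME is not set");
  }
  // Storage account names are 3 to 24 characters: lowercase letters and digits.
  if (!/^[a-z0-9]{3,24}$/.test(accountName)) {
    throw new Error(
      `"${accountName}" is not a valid storage account name; use the name only, not a URL or placeholder`
    );
  }
  return accountName;
}

// Placeholder value for illustration only.
console.log(resolveAccountName({ AZURE_STORAGE_ACCOUNT_NAME: "mystorageaccount" }));
```

Failing early with a specific message is usually easier to diagnose than a DNS or authorization error raised later by the first storage request.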

Note

When deployed to Azure, this same code can be used to authorize requests to Azure Storage from an application running in Azure. However, you'll need to enable managed identity on your app in Azure. Then configure your storage account to allow that managed identity to connect. For detailed instructions on configuring this connection between Azure services, see the Auth from Azure-hosted apps tutorial.

Create a container

Create a new container in the storage account. The following code example takes a BlobServiceClient object and calls the getContainerClient method to get a reference to a container. Then, the code calls the create method to actually create the container in your storage account.

Add this code to the end of the try block:

// Create a unique name for the container
const containerName = 'quickstart' + uuidv1();

console.log('\nCreating container...');
console.log('\t', containerName);

// Get a reference to a container
const containerClient = blobServiceClient.getContainerClient(containerName);

// Create the container
const createContainerResponse = await containerClient.create();
console.log(
  `Container was created successfully.\n\trequestId:${createContainerResponse.requestId}\n\tURL: ${containerClient.url}`
);

To learn more about creating a container, and to explore more code samples, see Create a blob container with JavaScript.

Important

Container names must be lowercase. For more information about naming containers and blobs, see Naming and Referencing Containers, Blobs, and Metadata.
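If you generate container names from user input or other dynamic values, it can help to check them before calling create. The following sketch encodes the commonly documented container naming rules: 3 to 63 characters, lowercase letters, numbers, and hyphens only, starting and ending with a letter or number, with no consecutive hyphens. The function name isValidContainerName is hypothetical, and the sketch does not account for reserved system container names such as $root.

```javascript
// Hypothetical helper: checks a candidate container name against the
// documented naming rules before any request is sent.
function isValidContainerName(name) {
  if (typeof name !== "string") return false;
  if (name.length < 3 || name.length > 63) return false;
  // One or more alphanumeric groups separated by single hyphens. This rules
  // out uppercase letters, leading or trailing hyphens, and "--".
  return /^[a-z0-9]+(-[a-z0-9]+)*$/.test(name);
}

console.log(isValidContainerName("quickstart4a0780c0-fb72-11e9-b7b9-b387d3c488da")); // true
console.log(isValidContainerName("Quickstart"));    // false: uppercase letter
console.log(isValidContainerName("my--container")); // false: consecutive hyphens
```

The name used in this quickstart, 'quickstart' followed by a version 1 UUID, is 46 characters long and passes this check.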

Upload blobs to a container

Upload a blob to the container. The following code gets a reference to a BlockBlobClient object by calling the getBlockBlobClient method on the ContainerClient from the Create a container section.

The code uploads the text string data to the blob by calling the upload method.

Add this code to the end of the try block:

// Create a unique name for the blob
const blobName = 'quickstart' + uuidv1() + '.txt';

// Get a block blob client
const blockBlobClient = containerClient.getBlockBlobClient(blobName);

// Display blob name and url
console.log(
  `\nUploading to Azure storage as blob\n\tname: ${blobName}:\n\tURL: ${blockBlobClient.url}`
);

// Upload data to the blob
// The second argument is the content length in bytes, so measure bytes rather than characters
const data = 'Hello, World!';
const uploadBlobResponse = await blockBlobClient.upload(data, Buffer.byteLength(data));
console.log(
  `Blob was uploaded successfully. requestId: ${uploadBlobResponse.requestId}`
);

To learn more about uploading blobs, and to explore more code samples, see Upload a blob with JavaScript.
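The second argument to the upload method is the length of the content in bytes. For ASCII text such as 'Hello, World!' the character count and the byte count are the same, but they differ as soon as the text contains multi-byte characters. The following sketch shows the difference. The helper name utf8ContentLength is hypothetical, and the sample strings are illustrative.

```javascript
// Hypothetical helper: returns the number of bytes a string occupies when
// encoded as UTF-8, which is what a byte-based content length expects.
function utf8ContentLength(text) {
  return Buffer.byteLength(text, "utf8");
}

const ascii = "Hello, World!";
// Escapes are used so the example doesn't depend on the file's encoding.
const accented = "H\u00e9llo, W\u00f6rld!";

console.log(ascii.length, utf8ContentLength(ascii));       // 13 13
console.log(accented.length, utf8ContentLength(accented)); // 13 15
```

When uploading strings that might contain non-ASCII text, passing the byte length avoids sending a content length that is shorter than the actual body.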

List the blobs in a container

List the blobs in the container. The following code calls the listBlobsFlat method. In this case, only one blob is in the container, so the listing operation returns just that one blob.

Add this code to the end of the try block:

console.log('\nListing blobs...');

// List the blob(s) in the container.
for await (const blob of containerClient.listBlobsFlat()) {
  // Get Blob Client from name, to get the URL
  const tempBlockBlobClient = containerClient.getBlockBlobClient(blob.name);

  // Display blob name and URL
  console.log(
    `\n\tname: ${blob.name}\n\tURL: ${tempBlockBlobClient.url}\n`
  );
}

To learn more about listing blobs, and to explore more code samples, see List blobs with JavaScript.
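The listing code relies on for await, which consumes an async iterable one item at a time. If you want the names as an array, for example to sort or filter them, you can collect them with a small helper. In the following sketch, collectBlobNames is a hypothetical helper and fakeBlobListing is a stand-in generator that yields objects with only a name property, so the example runs without a storage account.

```javascript
// Stand-in async generator (hypothetical): yields items shaped like the ones
// the listing loop reads, which only needs a `name` property here.
async function* fakeBlobListing() {
  yield { name: "quickstart-a.txt" };
  yield { name: "quickstart-b.txt" };
}

// Collects the `name` of every item produced by an async iterable.
async function collectBlobNames(blobIterator) {
  const names = [];
  for await (const blob of blobIterator) {
    names.push(blob.name);
  }
  return names;
}

collectBlobNames(fakeBlobListing()).then((names) => {
  console.log(`Found ${names.length} blobs:`, names.join(", "));
});
```

In the quickstart, the same helper could be called as collectBlobNames(containerClient.listBlobsFlat()). Collecting everything into memory is reasonable for small containers. For large ones, processing items inside the loop is usually the better choice.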

Download blobs

Download the blob and display the contents. The following code calls the download method to download the blob.

Add this code to the end of the try block:

// Get blob content from position 0 to the end
// In Node.js, get downloaded data by accessing downloadBlockBlobResponse.readableStreamBody
// In browsers, get downloaded data by accessing downloadBlockBlobResponse.blobBody
const downloadBlockBlobResponse = await blockBlobClient.download(0);
console.log('\nDownloaded blob content...');
console.log(
  '\t',
  await streamToText(downloadBlockBlobResponse.readableStreamBody)
);

The following code converts a stream back into a string to display the contents.

Add this code after the main function:

// Convert stream to text
async function streamToText(readable) {
  readable.setEncoding('utf8');
  let data = '';
  for await (const chunk of readable) {
    data += chunk;
  }
  return data;
}

To learn more about downloading blobs, and to explore more code samples, see Download a blob with JavaScript.
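The streamToText approach suits text blobs. For binary content such as images, you would typically collect the raw bytes instead of decoding them as UTF-8. The following sketch shows one way to do that with Node.js streams. The helper name streamToBuffer is hypothetical, and the demo stream built with Readable.from stands in for the readableStreamBody of a download response.

```javascript
const { Readable } = require("stream");

// Hypothetical helper: gathers every chunk of a readable stream into a
// single Buffer without applying any text decoding.
async function streamToBuffer(readable) {
  const chunks = [];
  for await (const chunk of readable) {
    // Chunks are normally Buffers; convert strings defensively.
    chunks.push(Buffer.isBuffer(chunk) ? chunk : Buffer.from(chunk));
  }
  return Buffer.concat(chunks);
}

// Demo stream standing in for a downloaded blob body.
const demo = Readable.from([Buffer.from("Hello, "), Buffer.from("World!")]);
streamToBuffer(demo).then((buffer) => {
  console.log(buffer.length);           // 13
  console.log(buffer.toString("utf8")); // Hello, World!
});
```

Because the whole blob is held in memory, this pattern is best kept for blobs of modest size.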

Delete a container

Delete the container and all blobs within the container. The following code cleans up the resources created by the app by removing the entire container using the delete method.

Add this code to the end of the try block:

// Delete container
console.log('\nDeleting container...');

const deleteContainerResponse = await containerClient.delete();
console.log(
  'Container was deleted successfully. requestId: ',
  deleteContainerResponse.requestId
);

To learn more about deleting a container, and to explore more code samples, see Delete and restore a blob container with JavaScript.
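Because the delete call sits at the end of the try block, it doesn't run if an earlier step throws, which can leave a test container behind. One way to guarantee cleanup is a try...finally wrapper. The following is a sketch under stated assumptions: withTemporaryContainer is a hypothetical helper, and createFakeContainerClient is a stand-in that only records calls, so the control flow can be observed without contacting Azure.

```javascript
// Hypothetical stand-in for a container client: records which methods were
// called instead of sending requests.
function createFakeContainerClient(log) {
  return {
    create: async () => {
      log.push("create");
    },
    delete: async () => {
      log.push("delete");
    },
  };
}

// Creates the container, runs `work`, and deletes the container afterwards,
// whether or not `work` throws.
async function withTemporaryContainer(containerClient, work) {
  await containerClient.create();
  try {
    return await work(containerClient);
  } finally {
    await containerClient.delete();
  }
}

const recordedCalls = [];
withTemporaryContainer(createFakeContainerClient(recordedCalls), async () => {
  throw new Error("upload failed");
}).catch((err) => {
  console.log(err.message);              // upload failed
  console.log(recordedCalls.join(", ")); // create, delete
});
```

The error from the failed step still reaches the caller, and the container is removed first.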

Run the code

From a Visual Studio Code terminal, run the app.

node index.js

The output of the app is similar to the following example:

Azure Blob storage - JavaScript quickstart sample

Creating container...

quickstart4a0780c0-fb72-11e9-b7b9-b387d3c488da

Uploading to Azure Storage as blob:

quickstart4a3128d0-fb72-11e9-b7b9-b387d3c488da.txt

Listing blobs...

quickstart4a3128d0-fb72-11e9-b7b9-b387d3c488da.txt

Downloaded blob content...

Hello, World!

Deleting container...

Done

Step through the code in your debugger and check your Azure portal throughout the process. Check to see that the container is being created. You can open the blob inside the container and view the contents.

Clean up resources

- When you're done with this quickstart, delete the blob-quickstart directory.

- If you're done using your Azure Storage resource, use the Azure CLI to remove the Storage resource.

When you're done with the quickstart, you can clean up the resources you created by running the following command:

azd down

You'll be prompted to confirm the deletion of the resources. Enter y to confirm.

Next steps

In this quickstart, you learned how to upload, download, and list blobs using JavaScript.

To see Blob storage sample apps, continue to:

- To learn more, see the Azure Blob Storage client libraries for JavaScript.

- For tutorials, samples, quickstarts, and other documentation, visit Azure for JavaScript and Node.js developers.
