Quickstart: Create a Stream Analytics job by using the Azure portal

This quickstart shows you how to create a Stream Analytics job in the Azure portal. You define a job that reads real-time streaming data and keeps only the messages whose temperature is greater than 27. The Stream Analytics job reads data from IoT Hub, transforms the data, and writes the output to a container in Azure Blob Storage. The input data used in this quickstart is generated by a Raspberry Pi online simulator.

Before you begin

If you don't have an Azure subscription, create a free account.

Prepare the input data

Before defining the Stream Analytics job, you should prepare the input data. The real-time sensor data is ingested into IoT Hub, which is later configured as the job input. To prepare the input data required by the job, complete the following steps:

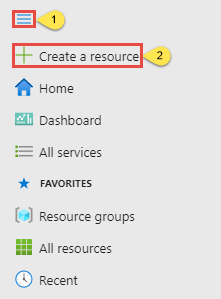

Sign in to the Azure portal.

Select Create a resource.

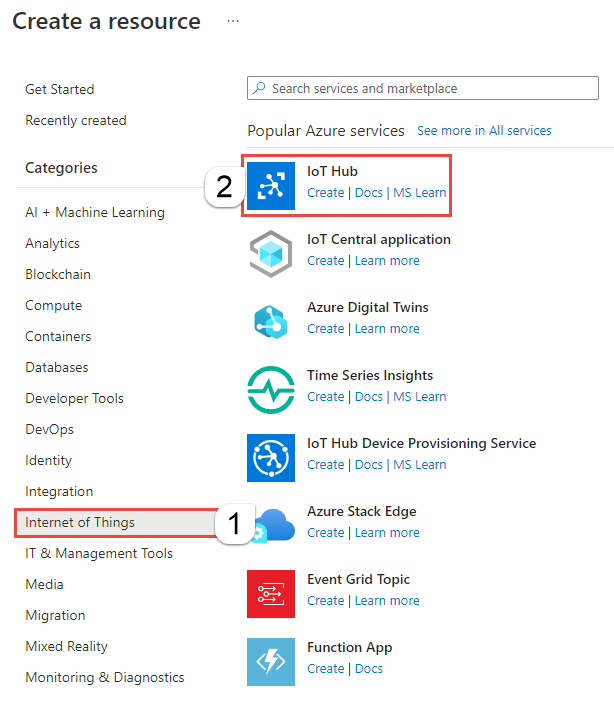

On the Create a resource page, select Internet of Things > IoT Hub.

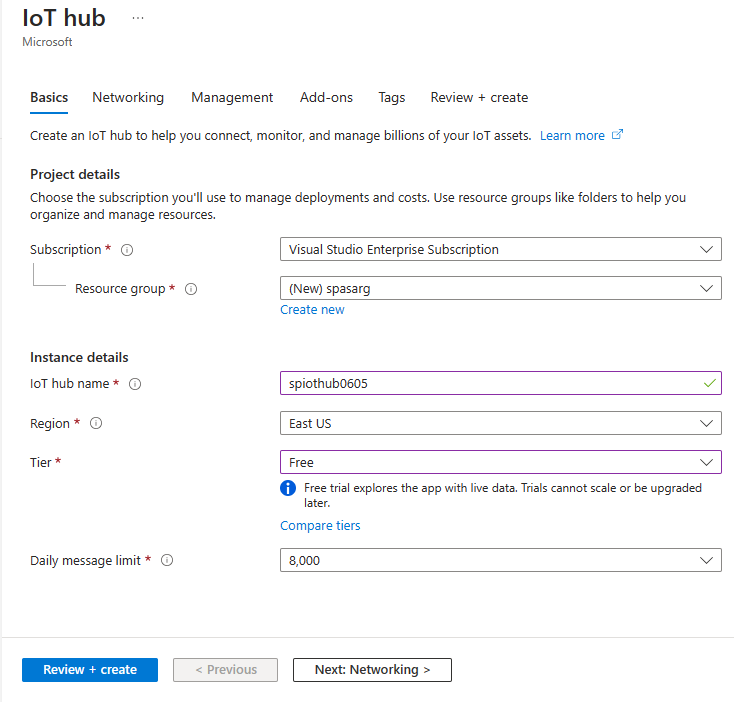

On the IoT Hub page, follow these steps:

- For Subscription, select your Azure subscription.

- For Resource group, select an existing resource group or create a new resource group.

- For IoT hub name, enter a name for your IoT hub.

- For Region, select the region that's closest to you.

- For Tier, select Free if it's still available on your subscription. For more information, see IoT Hub pricing.

- For Daily message limit, keep the default value.

- Select Next: Networking at the bottom of the page.

Select Review + create. Review your IoT Hub information and select Create. Your IoT Hub might take a few minutes to create. You can monitor the progress in the Notifications pane.

After the resource (IoT hub) is created, select Go to resource to navigate to the IoT Hub page.

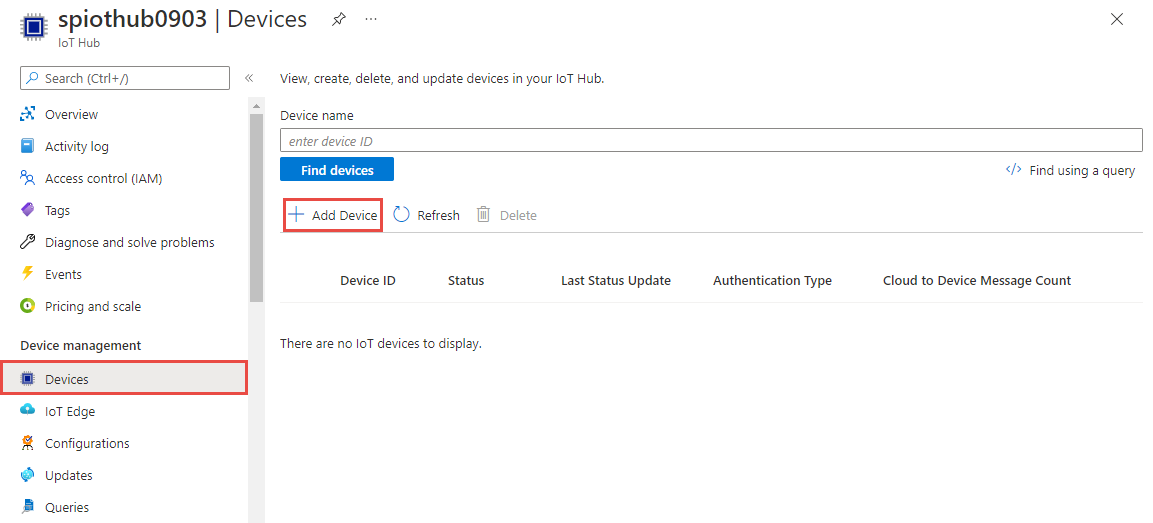

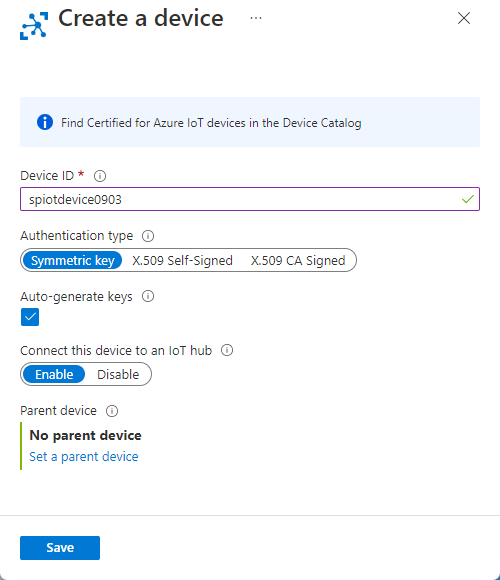

On the IoT Hub page, select Devices on the left menu, and then select + Add device.

Enter a Device ID and select Save.

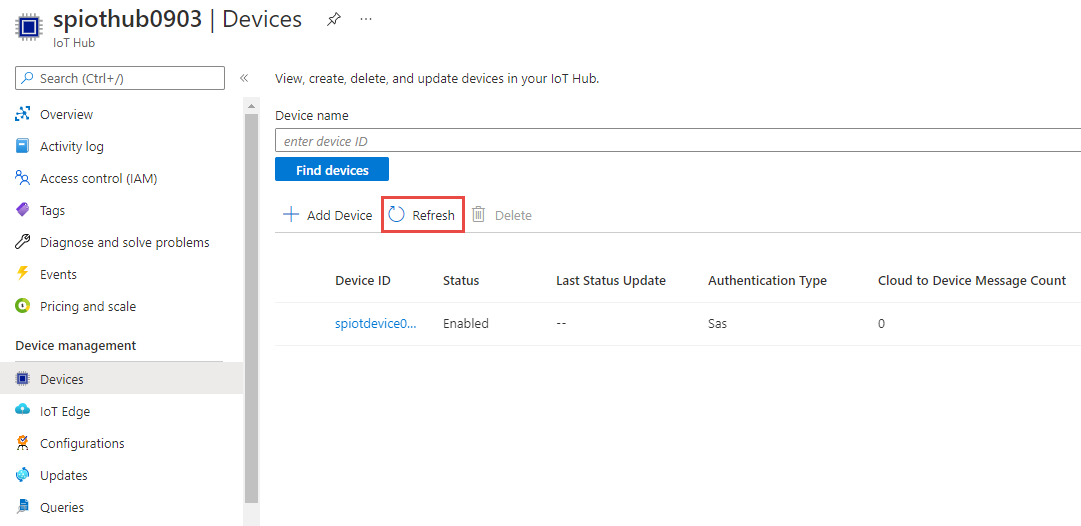

Once the device is created, you should see it in the IoT devices list. If you don't see it, select the Refresh button on the page.

Select your device from the list.

On the device page, select the copy button next to Primary Connection String, and save it to a notepad to use later.
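
Before pasting the copied value into the simulator later, it can help to confirm that it is a device connection string and not some other key. The following is a minimal sketch, assuming the usual `HostName=...;DeviceId=...;SharedAccessKey=...` layout of a device connection string. The function names and the example values are hypothetical and used only for illustration.

```python
REQUIRED_KEYS = {"HostName", "DeviceId", "SharedAccessKey"}


def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split 'Key=Value;Key=Value' pairs into a dictionary."""
    parts: dict[str, str] = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first '=' only: base64 keys often end with '=' padding.
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"Malformed segment: {segment!r}")
        parts[key] = value
    return parts


def missing_keys(conn_str: str) -> set[str]:
    """Return the expected keys that are absent from the connection string."""
    return REQUIRED_KEYS.difference(parse_connection_string(conn_str))


# Placeholder values for illustration only; not a real hub, device, or key.
example = "HostName=my-hub.azure-devices.net;DeviceId=my-device;SharedAccessKey=AAAAexample="

print(parse_connection_string(example)["DeviceId"])
print(missing_keys(example))
```

An empty result from `missing_keys` suggests that the string has the expected parts. It doesn't prove that the key itself is valid.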

Create blob storage

From the upper left-hand corner of the Azure portal, select Create a resource > Storage > Storage account.

In the Create storage account pane, enter a storage account name, location, and resource group. Choose the same location and resource group as the IoT Hub you created. Then select Review at the bottom of the page.

On the Review page, review your settings, and select Create to create the account.

After the resource is created, select Go to resource to navigate to the Storage account page.

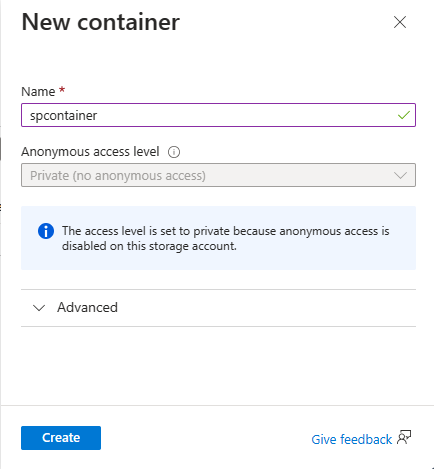

On the Storage account page, select Containers on the left menu, and then select + Container.

On the New container page, provide a name for your container, such as container1, and select Create.

Create a Stream Analytics job

- On a separate tab of the same browser window or in a separate browser window, sign in to the Azure portal.

- Select Create a resource in the upper left-hand corner of the Azure portal.

- Select Analytics > Stream Analytics job from the results list. If you don't see Stream Analytics job in the list, search for Stream Analytics job by using the search box at the top, and select it from the search results.

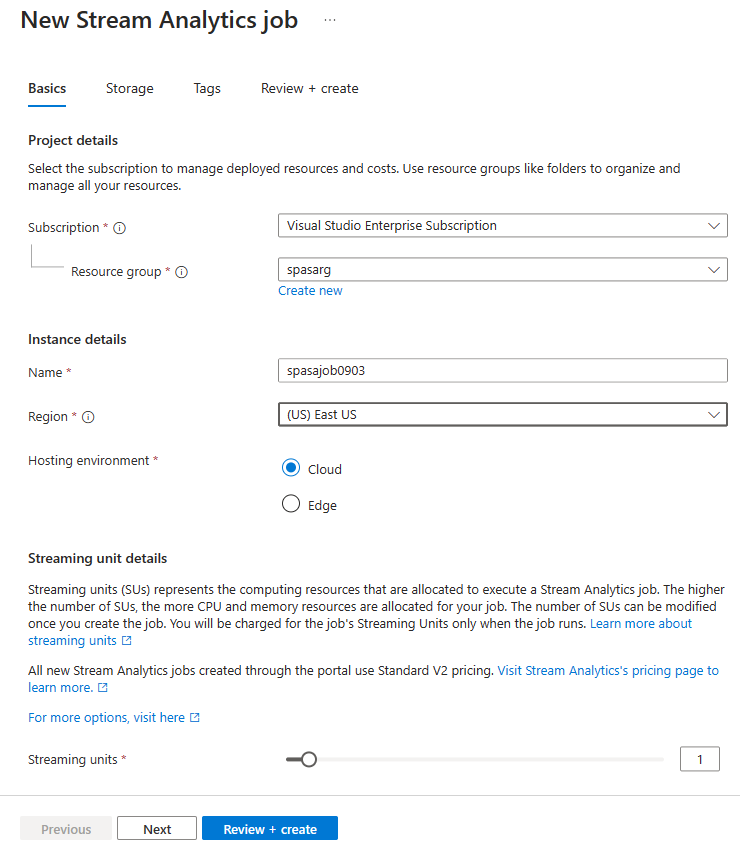

- On the New Stream Analytics job page, follow these steps:

For Subscription, select your Azure subscription.

For Resource group, select the same resource group that you used earlier in this quickstart.

For Name, enter a name for the job. A Stream Analytics job name can contain only alphanumeric characters, hyphens, and underscores, and it must be between 3 and 63 characters long.

For Hosting environment, confirm that Cloud is selected. Stream Analytics jobs can be deployed to cloud or edge. Cloud allows you to deploy to Azure cloud, and the Edge option allows you to deploy to an IoT Edge device.

For Streaming units, select 1. Streaming units represent the computing resources that are required to execute a job. To learn about scaling streaming units, see the article on understanding and adjusting streaming units.

Select Review + create at the bottom of the page.

- On the Review + create page, review the settings, and select Create to create the Stream Analytics job.

- On the deployment page, select Go to resource to navigate to the Stream Analytics job page.
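
The naming rule given for the job in the steps above can be checked before you type the name into the portal. The following is a minimal sketch, assuming that "alphanumeric" means ASCII letters and digits. The function name `is_valid_job_name` is hypothetical, and the portal's own validation remains the authority.

```python
import re

# Rule as stated in this quickstart: letters, digits, hyphens, and underscores,
# with a total length between 3 and 63 characters.
# Assumption: "alphanumeric" is limited to ASCII letters and digits.
JOB_NAME_PATTERN = re.compile(r"[A-Za-z0-9_-]{3,63}")


def is_valid_job_name(name: str) -> bool:
    """Return True if the whole name matches the stated naming rule."""
    return JOB_NAME_PATTERN.fullmatch(name) is not None


for candidate in ["my-asa-job_01", "ab", "bad name", "a" * 64]:
    print(repr(candidate[:20]), is_valid_job_name(candidate))
```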

Configure job input

In this section, you configure an IoT Hub device input to the Stream Analytics job. Use the IoT Hub you created in the previous section of the quickstart.

On the Stream Analytics job page, select Inputs under Job topology on the left menu.

On the Inputs page, select Add input > IoT Hub.

On the IoT Hub page, follow these steps:

For Input alias, enter IoTHubInput.

For Subscription, select the subscription that has the IoT hub you created earlier. This quickstart assumes that you've created the IoT hub in the same subscription.

For IoT Hub, select your IoT hub.

Select Save to save the input settings for the Stream Analytics job.

Configure job output

Now, select Outputs under Job topology on the left menu.

On the Outputs page, select Add output > Blob storage/ADLS Gen2.

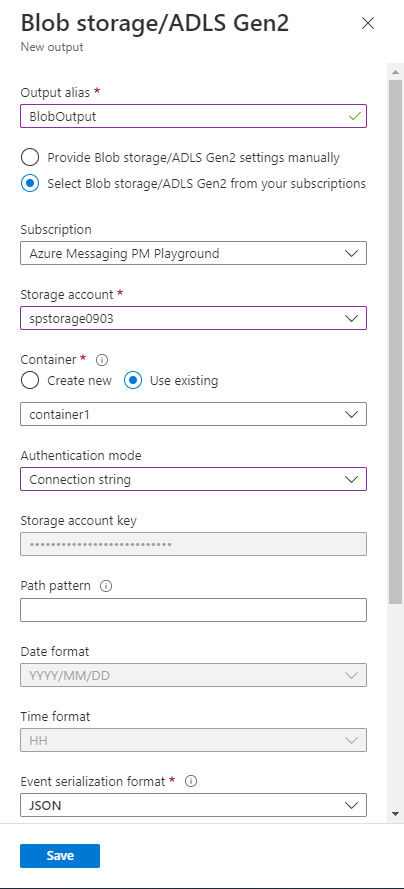

On the New output page for Blob storage/ADLS Gen2, follow these steps:

For Output alias, enter BlobOutput.

For Subscription, select the subscription that has the Azure storage account you created earlier. This quickstart assumes that you've created the Storage account in the same subscription.

For Storage account, select your Storage account.

For Container, select your blob container if it isn't already selected.

For Authentication mode, select Connection string.

Select Save at the bottom of the page to save the output settings.

Define the transformation query

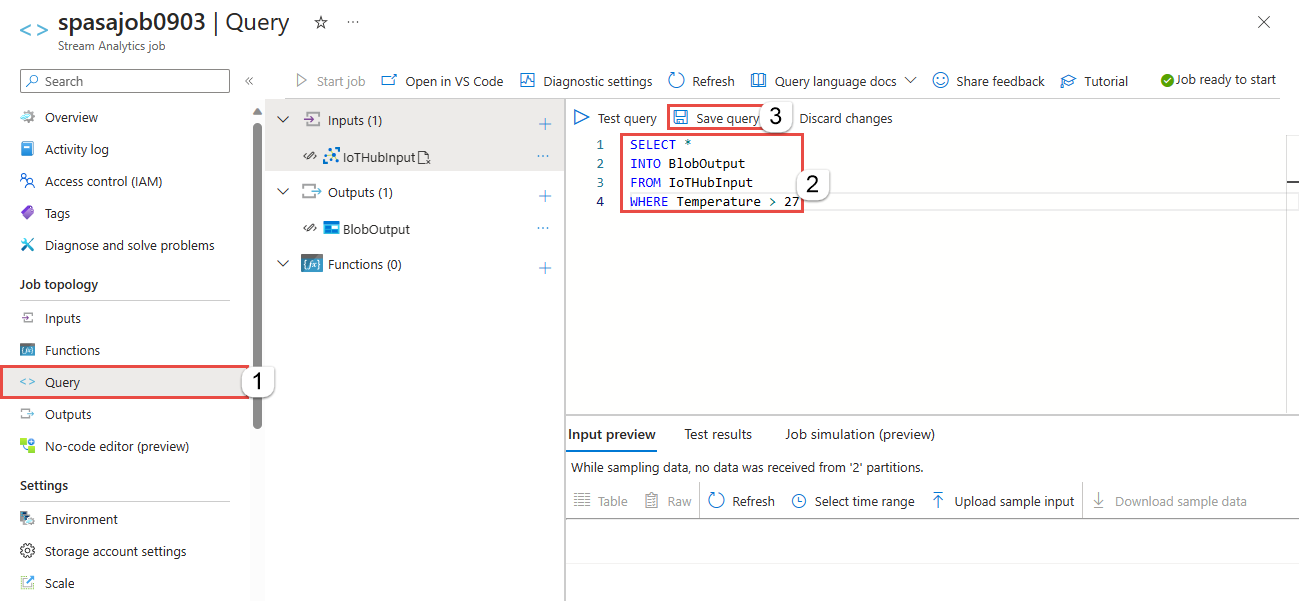

Now, select Query under Job topology on the left menu.

Enter the following query into the query window. In this example, the query reads the data from IoT Hub, keeps only the events whose temperature is greater than 27, and writes them to a new file in the blob container.

SELECT * INTO BlobOutput FROM IoTHubInput WHERE Temperature > 27

Select Save query on the toolbar.
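
To make the effect of the `WHERE` clause concrete, the following sketch applies the same condition to a few events locally. It is only an emulation, not how Stream Analytics evaluates the query. The sample events and their field names (`messageId`, `deviceId`, `temperature`, `humidity`) are assumptions about what the simulator sends, and the case-insensitive field lookup is an assumption about how the query matches `Temperature` to the payload.

```python
import json

THRESHOLD = 27  # same constant as in the query's WHERE clause


def passes_filter(event: dict) -> bool:
    """Emulate 'WHERE Temperature > 27' for a single event."""
    for key, value in event.items():
        # Assumption: the field name is matched without regard to case.
        if key.lower() == "temperature":
            return isinstance(value, (int, float)) and value > THRESHOLD
    return False  # an event without a temperature never matches


# Hypothetical events, shaped like the payload the simulator is assumed to send.
sample_events = [
    {"messageId": 1, "deviceId": "Raspberry Pi Web Client", "temperature": 25.4, "humidity": 61.2},
    {"messageId": 2, "deviceId": "Raspberry Pi Web Client", "temperature": 27.0, "humidity": 64.9},
    {"messageId": 3, "deviceId": "Raspberry Pi Web Client", "temperature": 29.8, "humidity": 70.1},
]

selected = [event for event in sample_events if passes_filter(event)]
for event in selected:
    print(json.dumps(event))
```

Because the comparison is strict, an event with a temperature of exactly 27 is not written to the output.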

Run the IoT simulator

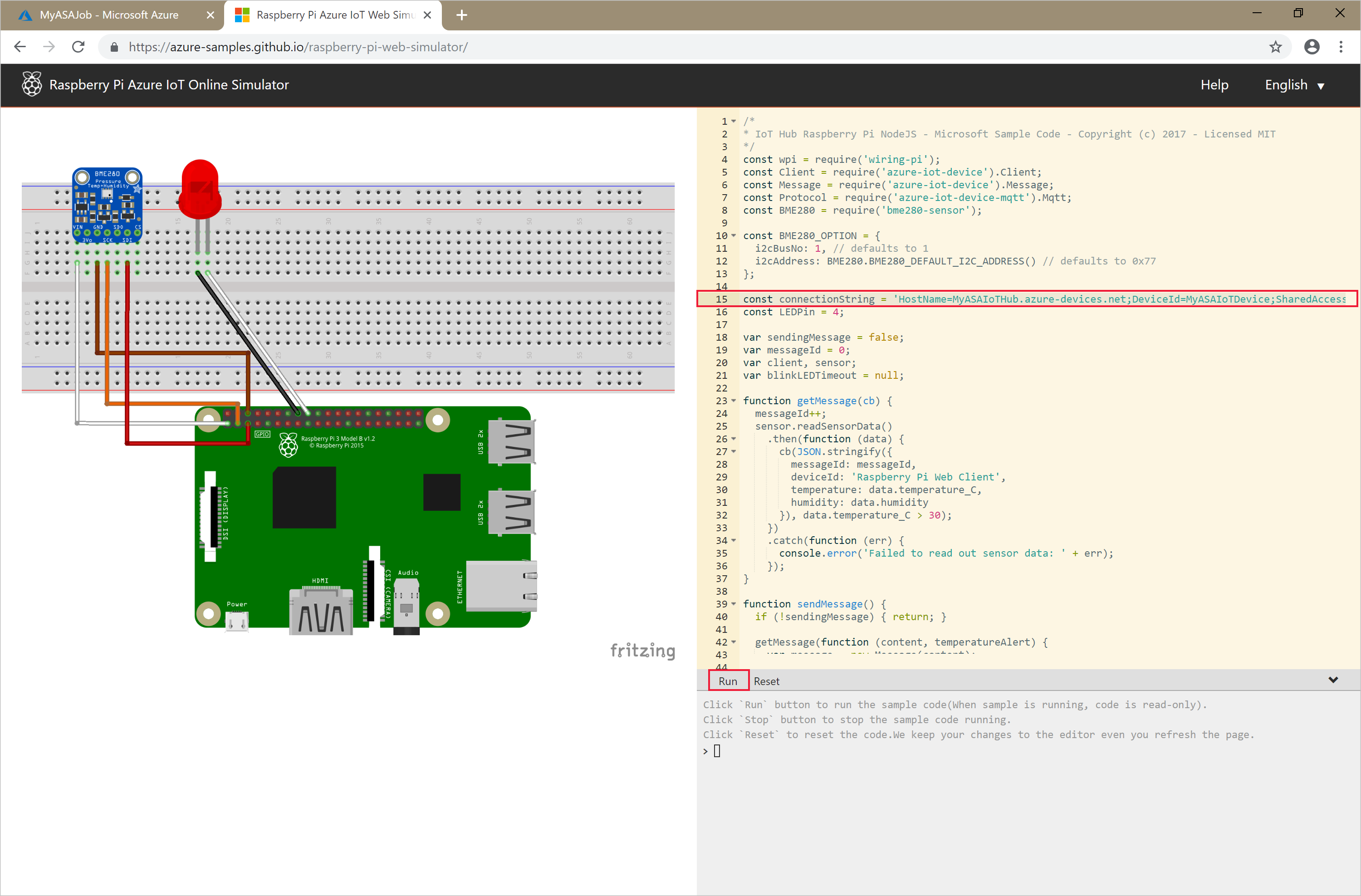

Open the Raspberry Pi Azure IoT Online Simulator.

Replace the placeholder in Line 15 with the Azure IoT Hub device connection string you saved in a previous section.

Select Run. The output should show the sensor data and messages that are being sent to your IoT Hub.

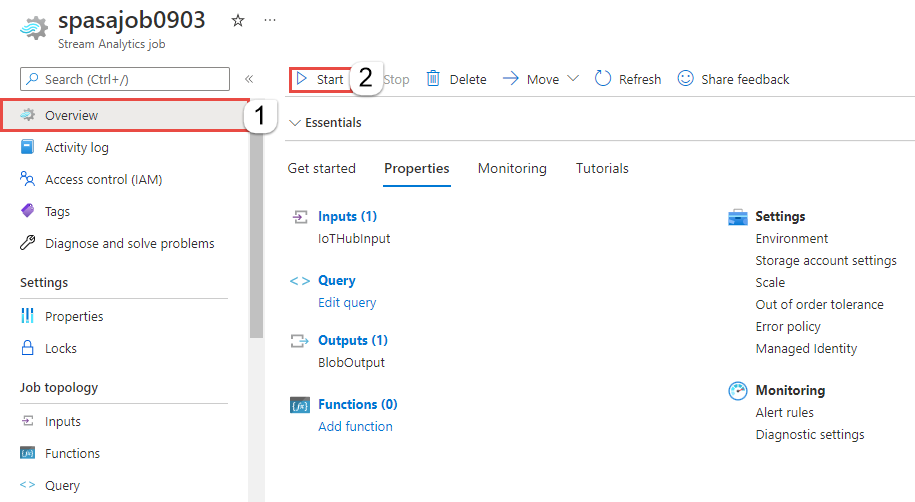

Start the Stream Analytics job and check the output

Return to the job overview page in the Azure portal, and select Start job.

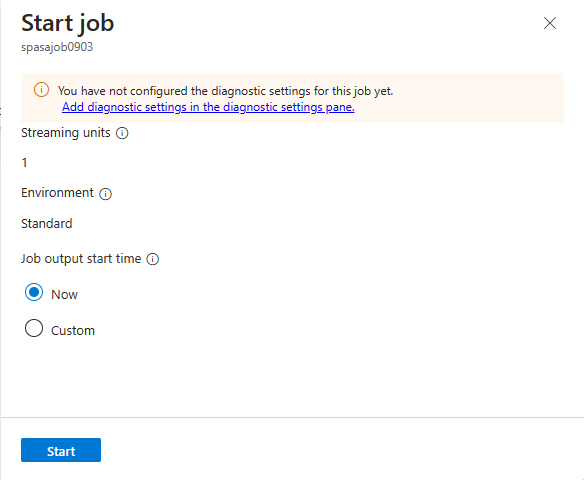

On the Start job page, confirm that Now is selected for Job output start time, and then select Start at the bottom of the page.

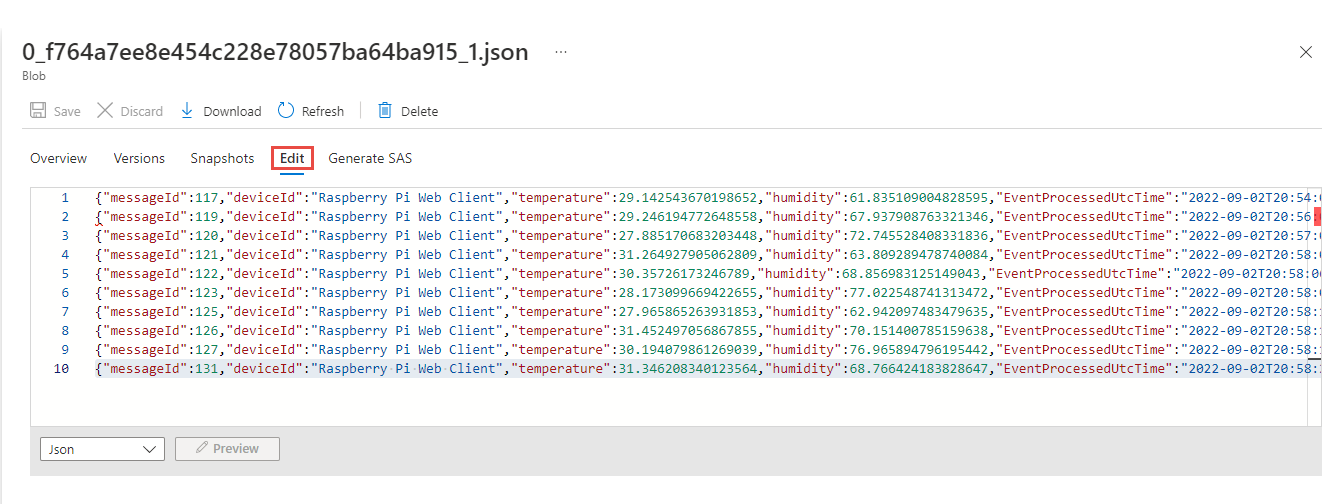

After a few minutes, in the portal, find the storage account and the container that you configured as output for the job. You can now see the output file in the container. The job takes a few minutes to start the first time. After it starts, it continues to run as data arrives.

Select the file, and then on the Blob page, select Edit to view the contents of the file.
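
If you download the output file instead of viewing it in the portal, you can check its contents with a short script. The following is a minimal sketch, assuming the output is serialized as line-separated JSON (one event per line). That depends on the output's serialization settings, which this quickstart doesn't specify. The sample text and the function name `read_events` are hypothetical, and a real output file typically contains more fields per event.

```python
import json


def read_events(blob_text: str) -> list[dict]:
    """Parse line-separated JSON: one event object per non-empty line."""
    return [json.loads(line) for line in blob_text.splitlines() if line.strip()]


# Hypothetical file content for illustration; not captured from a real job.
sample_blob_text = """\
{"messageId": 3, "temperature": 29.8, "humidity": 70.1}
{"messageId": 7, "temperature": 28.3, "humidity": 66.4}
"""

events = read_events(sample_blob_text)

# Every event in the output should satisfy the query's condition.
all_above_threshold = all(event["temperature"] > 27 for event in events)
print(len(events), all_above_threshold)
```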

Clean up resources

When no longer needed, delete the resource group, the Stream Analytics job, and all related resources. Deleting the job avoids charges for the streaming units that the job consumes. If you plan to use the job in the future, you can stop it and restart it later when you need it. If you aren't going to continue to use this job, delete all resources created by this quickstart by using the following steps:

From the left-hand menu in the Azure portal, select Resource groups, and then select the name of the resource group you created.

On your resource group page, select Delete, type the name of the resource group to delete in the text box, and then select Delete.

Next steps

In this quickstart, you deployed a simple Stream Analytics job by using the Azure portal. You can also deploy Stream Analytics jobs by using PowerShell, Visual Studio, and Visual Studio Code.

To learn about configuring other input sources and performing real-time detection, continue to the following article:
