Create a Spark cluster

You can create one or more clusters in your Azure Databricks workspace by using the Azure Databricks portal.

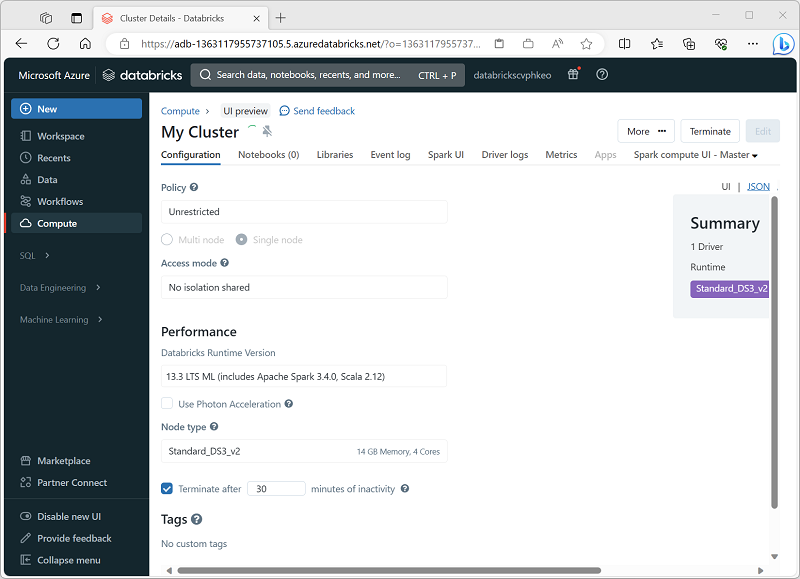

When creating the cluster, you can specify configuration settings, including:

- A name for the cluster.

- A cluster mode, which can be:

- Standard: Suitable for single-user workloads that require multiple worker nodes.

- High Concurrency: Suitable for workloads where multiple users will be using the cluster concurrently.

- Single Node: Suitable for small workloads or testing, where only a single worker node is required.

- The version of the Databricks Runtime to be used in the cluster; which dictates the version of Spark and individual components such as Python, Scala, and others that get installed.

- The type of virtual machine (VM) used for the worker nodes in the cluster.

- The minimum and maximum number of worker nodes in the cluster.

- The type of VM used for the driver node in the cluster.

- Whether the cluster supports autoscaling to dynamically resize the cluster.

- How long the cluster can remain idle before being shut down automatically.

How Azure manages cluster resources

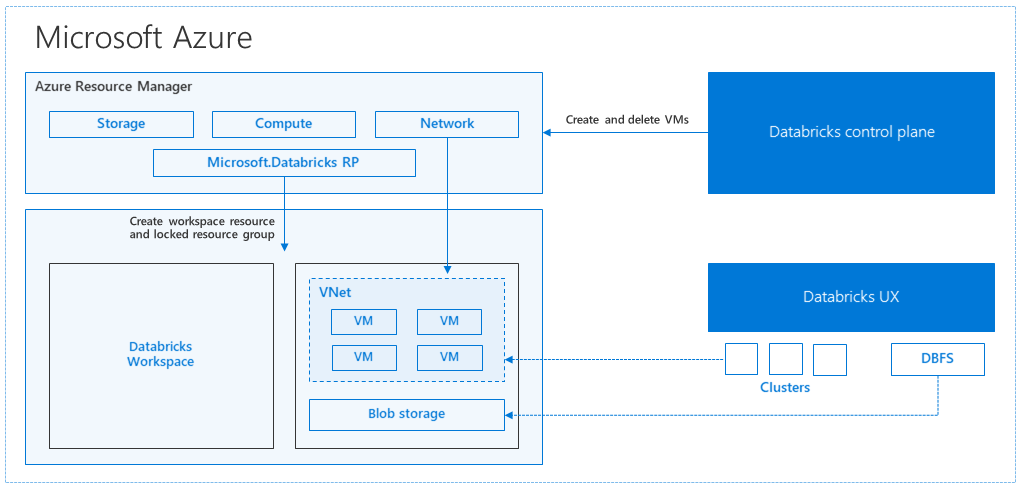

When you create an Azure Databricks workspace, a Databricks appliance is deployed as an Azure resource in your subscription. When you create a cluster in the workspace, you specify the types and sizes of the virtual machines (VMs) to use for both the driver and worker nodes, and some other configuration options, but Azure Databricks manages all other aspects of the cluster.

The Databricks appliance is deployed into Azure as a managed resource group within your subscription. This resource group contains the driver and worker VMs for your clusters, along with other required resources, including a virtual network, a security group, and a storage account. All metadata for your cluster, such as scheduled jobs, is stored in an Azure Database with geo-replication for fault tolerance.

Internally, Azure Kubernetes Service (AKS) is used to run the Azure Databricks control-plane and data-planes via containers running on the latest generation of Azure hardware (Dv3 VMs), with NvMe SSDs capable of blazing 100us latency on high-performance Azure virtual machines with accelerated networking. Azure Databricks utilizes these features of Azure to further improve Spark performance. After the services within your managed resource group are ready, you can manage the Databricks cluster through the Azure Databricks UI and through features such as auto-scaling and auto-termination.

Note

You also have the option of attaching your cluster to a pool of idle nodes to reduce cluster startup time. For more information, see Pools in the Azure Databricks documentation.