你当前正在访问 Microsoft Azure Global Edition 技术文档网站。 如果需要访问由世纪互联运营的 Microsoft Azure 中国技术文档网站,请访问 https://docs.azure.cn。

快速入门:使用 Web 工具在 Azure Synapse Analytics 中创建无服务器 Apache Spark 池

本快速入门介绍如何使用 Web 工具在 Azure Synapse 中创建无服务器 Apache Spark 池。 然后,介绍如何连接到 Apache Spark 池并针对文件和表运行 Spark SQL 查询。 通过 Apache Spark 可以使用内存处理进行快速数据分析和群集计算。 有关 Azure Synapse 中 Spark 的信息,请参阅概述:Azure Synapse 上的 Apache Spark。

重要

不管是否正在使用 Spark 实例,它们都会按分钟按比例计费。 请务必在用完 Spark 实例后将其关闭,或设置较短的超时。 有关详细信息,请参阅本文的清理资源部分。

如果没有 Azure 订阅,请在开始之前创建一个免费帐户。

先决条件

- 将需要 Azure 订阅。 根据需要创建免费 Azure 帐户

- Synapse Analytics 工作区

- 无服务器 Apache Spark 池

登录到 Azure 门户

登录到 Azure 门户。

如果还没有 Azure 订阅,可以在开始前创建一个免费 Azure 帐户。

创建笔记本

笔记本是支持各种编程语言的交互式环境。 使用笔记本可与数据交互,将代码和 Markdown、文本相结合,以及执行简单的可视化操作。

在要使用的 Azure Synapse 工作区的 Azure 门户视图中,选择“启动 Synapse Studio”。

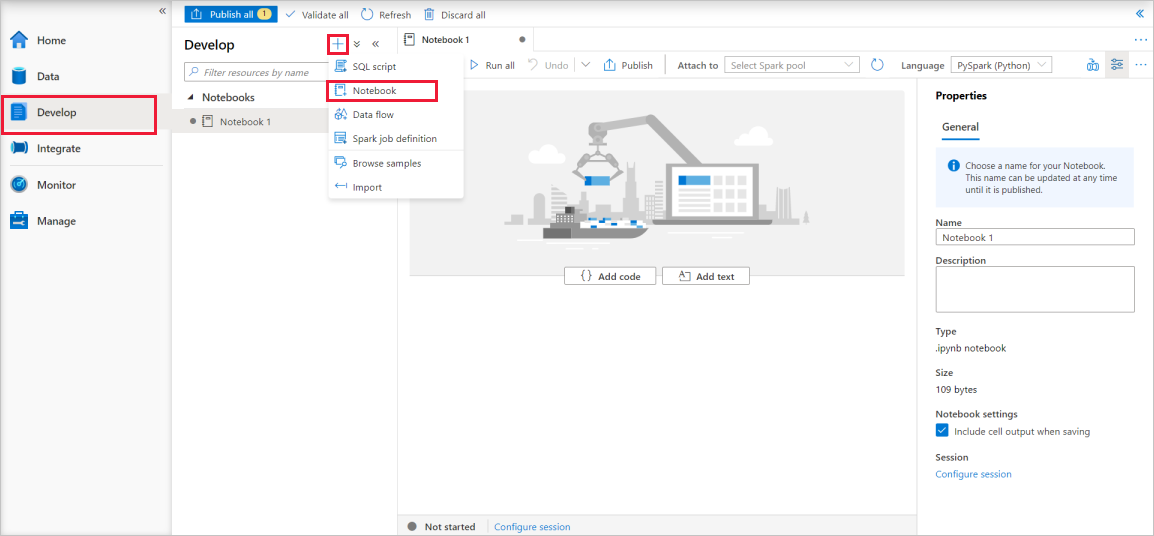

启动 Synapse Studio 后,选择“开发”。 然后,选择“+”图标以新增资源。

然后选择“笔记本”。 随即会创建并打开一个具有自动生成的名称的新笔记本。

在“属性”窗口中提供笔记本的名称。

在工具栏上单击“发布”。

如果工作区中只有一个 Apache Spark 池,则默认选择该池。 如果未选择任何池,请使用下拉箭头选择合适的 Apache Spark 池。

单击“添加代码”。 默认语言为

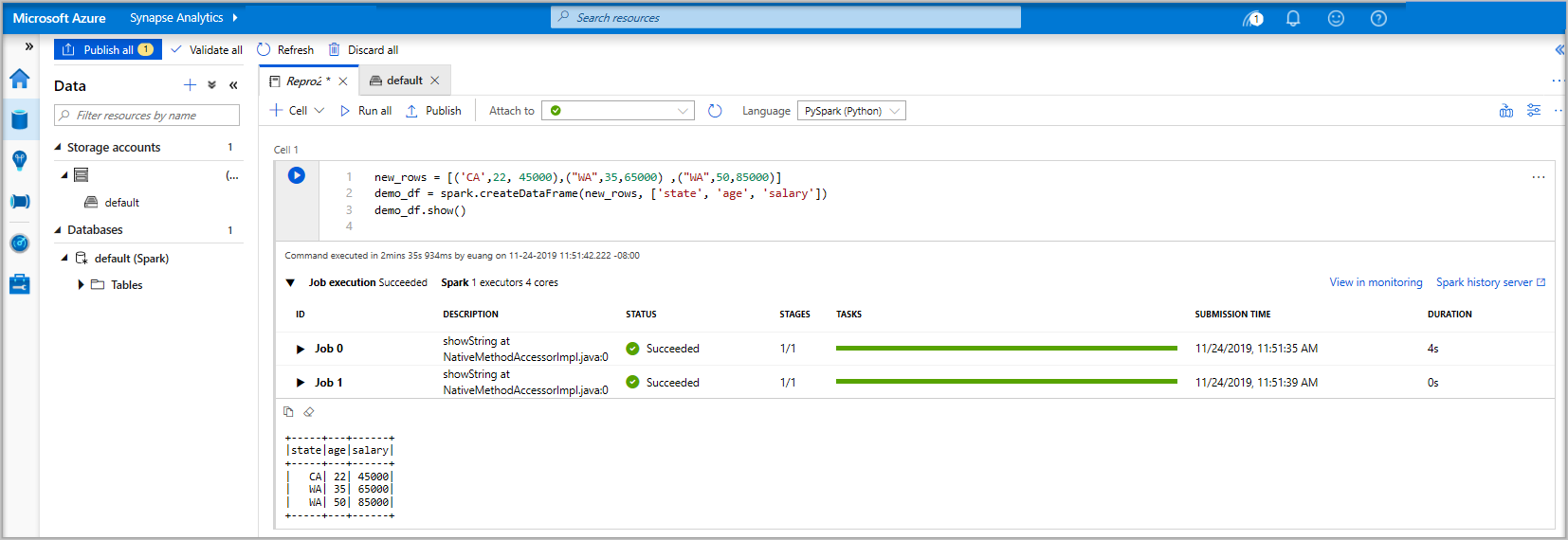

Pyspark。 你将混合使用 Pyspark 和 Spark SQL,因此默认选择是适当的。 其他支持的语言是适用于 Spark 的 Scala 和 .NET。接下来,创建一个用于操作的简单 Spark 数据帧对象。 在本例中,你将在代码中创建该对象。 有三行和三列:

new_rows = [('CA',22, 45000),("WA",35,65000) ,("WA",50,85000)] demo_df = spark.createDataFrame(new_rows, ['state', 'age', 'salary']) demo_df.show()现在,使用以下方法之一运行代码单元:

按 Shift + Enter。

选择单元左侧的蓝色播放图标。

选择工具栏上的“全部运行”按钮。

如果 Apache Spark 池实例尚未运行,它会自动启动。 在运行的单元下面,以及在笔记本底部的状态面板上,都可以看到 Apache Spark 池实例的状态。 启动池需要 2-5 分钟时间,具体取决于池的大小。 代码运行完成后,单元下面会显示有关运行该代码花费了多长时间及其执行情况的信息。 在输出单元中可以看到输出。

现在,数据会存在于一个数据帧中,从该数据帧中可以通过多种不同的方式使用这些数据。 在本快速入门的余下部分,需要以不同的格式使用这些数据。

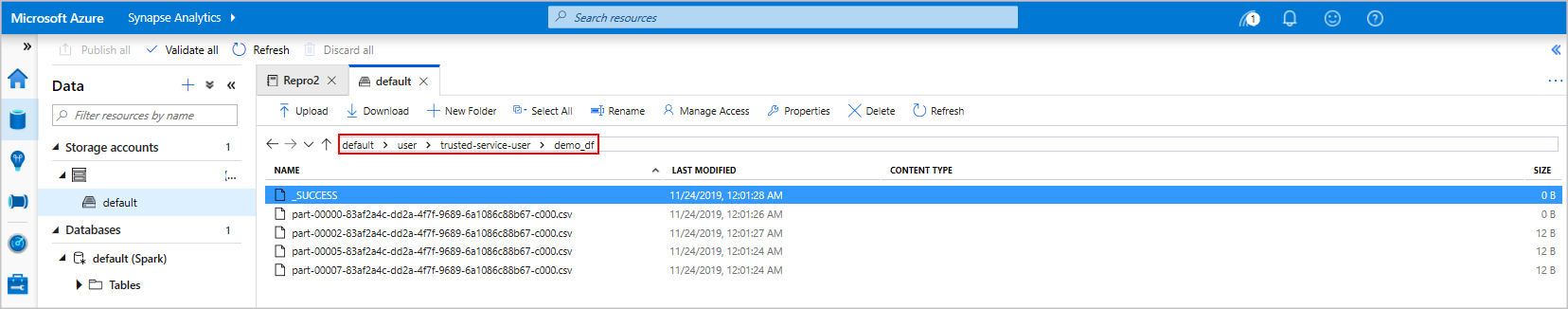

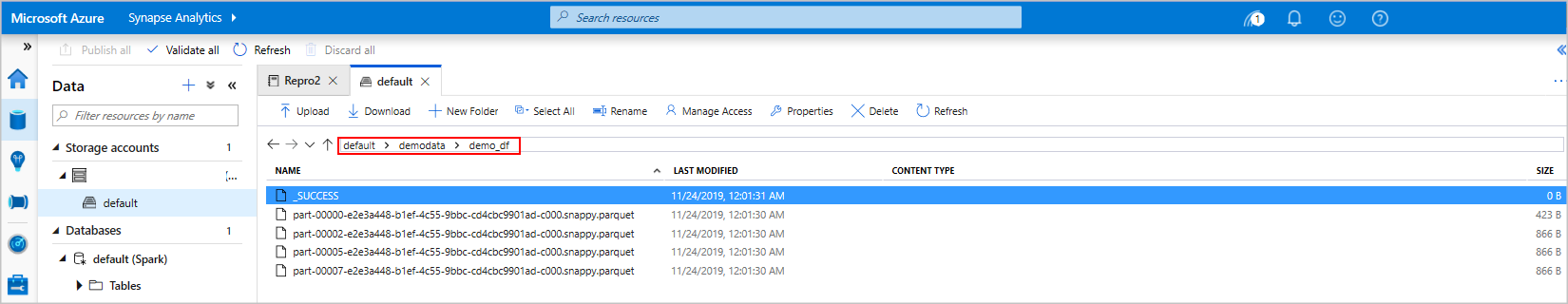

在另一个单元中输入并运行以下代码,以创建一个 Spark 表、一个 CSV 文件和一个 Parquet 文件,它们都包含数据的副本:

demo_df.createOrReplaceTempView('demo_df') demo_df.write.csv('demo_df', mode='overwrite') demo_df.write.parquet('abfss://<<TheNameOfAStorageAccountFileSystem>>@<<TheNameOfAStorageAccount>>.dfs.core.windows.net/demodata/demo_df', mode='overwrite')如果使用存储资源管理器,可以查看上述两种不同的文件编写方式的影响。 如果未指定文件系统,则会使用默认文件系统,在本例中为

default>user>trusted-service-user>demo_df。 数据将保存到指定的文件系统的位置。请注意,在使用“csv”和“parquet”格式的情况下,写入操作创建了一个包含许多已分区文件的目录。

运行 Spark SQL 语句

结构化查询语言 (SQL) 是用于查询和定义数据的最常见且最广泛使用的语言。 Spark SQL 作为 Apache Spark 的扩展使用,可使用熟悉的 SQL 语法处理结构化数据。

将以下代码粘贴到空单元中,然后运行代码。 该命令将列出池中的表。

%%sql SHOW TABLES将 Notebook 与 Azure Synapse Apache Spark 池配合使用时,将获得预设

sqlContext,可以使用该预设通过 Spark SQL 运行查询。%%sql告知笔记本要使用预设sqlContext来运行查询。 默认情况下,该查询检索所有 Azure Synapse Apache Spark 池包含的系统表中的前 10 行。运行另一个查询,请查看

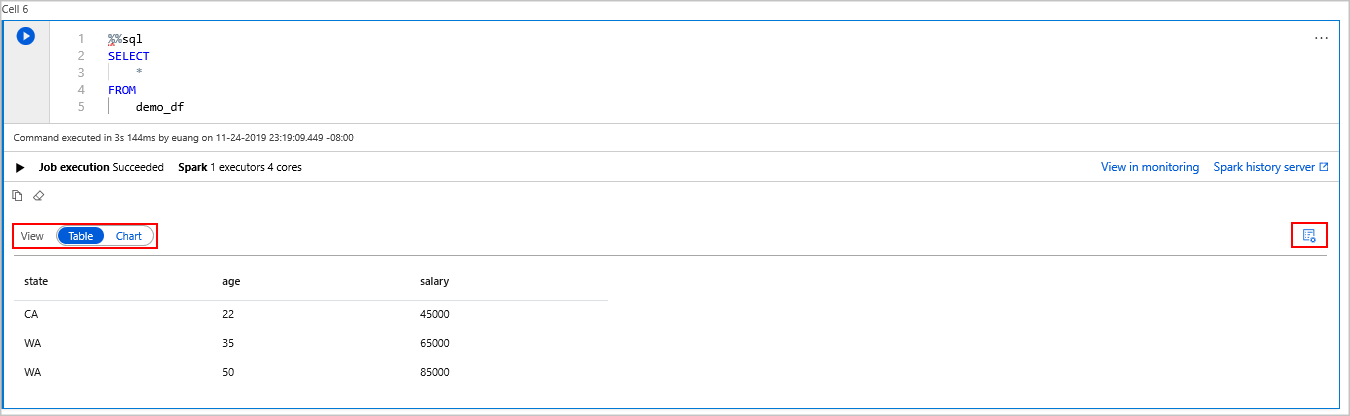

demo_df中的数据。%%sql SELECT * FROM demo_df该代码生成两个输出单元,其中一个包含数据结果,另一个显示作业视图。

默认情况下,结果视图会显示一个网格。 但是,网格下面会提供一个视图切换器,用于在网格视图与图形视图之间进行切换。

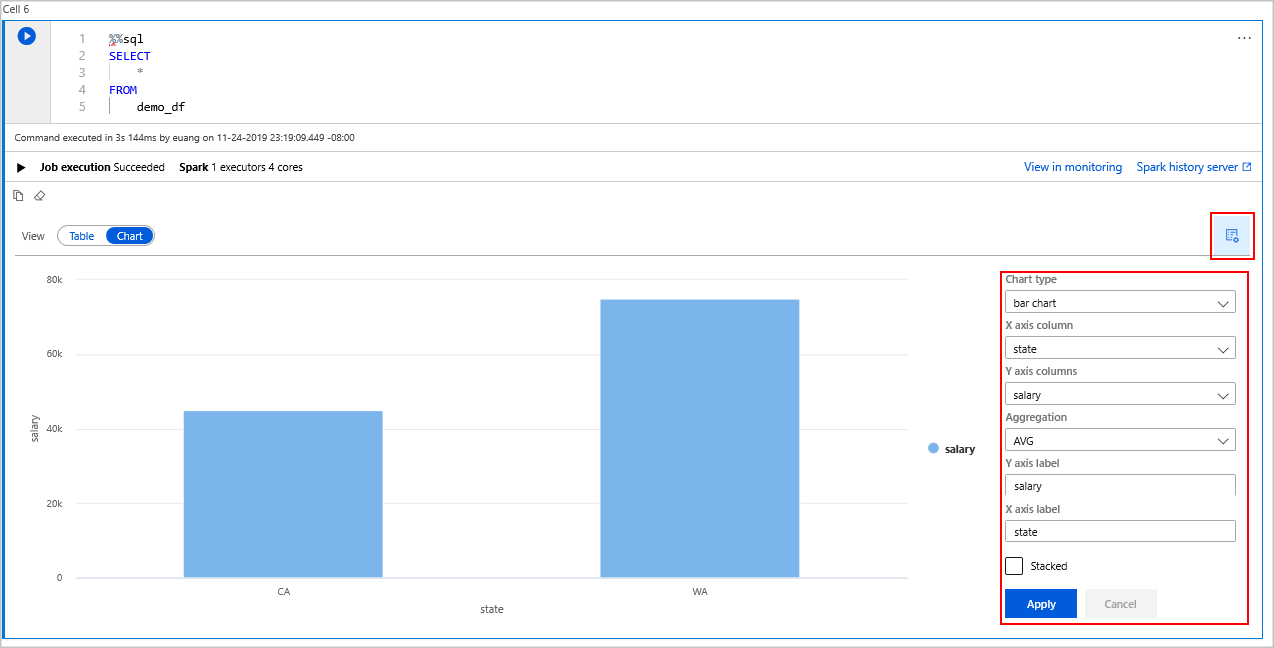

在“视图”切换器中,选择“图表”。

选择最右侧的“视图选项”图标。

在“图表类型”字段中选择“条形图”。

在“X 轴列”字段中选择“省/市/自治区”。

在“Y 轴列”字段中选择“工资”。

在“聚合”字段中,选择“平均”。

选择“应用”。

运行 SQL 时可以获得相同的体验,但不需要切换语言。 为此,可将上面的 SQL 单元替换为以下 PySpark 单元,其输出体验是相同的,因为使用了 display 命令:

display(spark.sql('SELECT * FROM demo_df'))对于前面执行的每个单元,可以选择转到“History Server”和“监视”。 单击相应的链接会转到用户体验的不同组成部分。

注意

某些 Apache Spark 官方文档依赖于使用 Spark 控制台,但该控制台在 Synapse Spark 中不可用。 请改用笔记本或 IntelliJ 体验。

清理资源

Azure Synapse 在 Azure Data Lake Storage 中保存数据。 可以安全关闭未在使用的 Spark 实例。 只要无服务器 Apache Spark 池正在运行,即使不使用它,也会产生费用。

由于池的费用是存储费用的许多倍,关闭未在使用的 Spark 实例可以节省费用。

为了确保关闭 Spark 实例,请结束任何已连接的会话(笔记本)。 达到 Apache Spark 池中指定的空闲时间时,池将会关闭。 也可以在笔记本底部的状态栏中选择“结束会话”。

后续步骤

本快速入门介绍了如何创建无服务器 Apache Spark 池和运行基本的 Spark SQL 查询。