Gestures in Unity

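There are two key ways to take action on your gaze in Unity: hand gestures and motion controllers, on HoloLens and immersive HMDs. You access the data for both sources of spatial input through the same APIs in Unity.

Unity provides two primary ways to access spatial input data for Windows Mixed Reality. The common Input.GetButton/Input.GetAxis APIs work across multiple Unity XR SDKs, while the InteractionManager/GestureRecognizer API, which is specific to Windows Mixed Reality, exposes the full set of spatial input data.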

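High-level composite gesture APIs (GestureRecognizer)

Namespace: UnityEngine.XR.WSA.Input

Types: GestureRecognizer, GestureSettings, InteractionSourceKind

Your app can also recognize higher-level composite gestures for spatial input sources: Tap, Hold, Manipulation, and Navigation gestures. You can recognize these composite gestures across both hands and motion controllers by using the GestureRecognizer.

Each gesture event on the GestureRecognizer provides the SourceKind for the input as well as the targeting head ray at the time of the event. Some events provide additional context-specific information.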

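Only a few steps are required to capture gestures with a GestureRecognizer:

- Create a new gesture recognizer

- Specify which gestures to watch for

- Subscribe to events for those gestures

- Start capturing gestures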

Create a new gesture recognizer

To use the GestureRecognizer, you must first create an instance:

GestureRecognizer recognizer = new GestureRecognizer();

Specify which gestures to watch for

Specify the gestures you're interested in via SetRecognizableGestures():

recognizer.SetRecognizableGestures(GestureSettings.Tap | GestureSettings.Hold);

Subscribe to events for those gestures

Subscribe to the events for the gestures you're interested in.

void Start()
{
    recognizer.Tapped += GestureRecognizer_Tapped;
    recognizer.HoldStarted += GestureRecognizer_HoldStarted;
    recognizer.HoldCompleted += GestureRecognizer_HoldCompleted;
    recognizer.HoldCanceled += GestureRecognizer_HoldCanceled;
}

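Note

Navigation and Manipulation gestures are mutually exclusive on an instance of a GestureRecognizer.

Start capturing gestures

By default, a GestureRecognizer doesn't monitor input until StartCapturingGestures() is called. A gesture event may still be generated after StopCapturingGestures() is called if the input was performed before the frame in which StopCapturingGestures() was processed. The GestureRecognizer remembers whether it was on or off during the previous frame in which the gesture actually occurred, so it's reliable to start and stop gesture monitoring based on this frame's gaze targeting.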

recognizer.StartCapturingGestures();

Stop capturing gestures

To stop gesture recognition:

recognizer.StopCapturingGestures();

Removing a gesture recognizer

Remember to unsubscribe from subscribed events before destroying a GestureRecognizer object.

void OnDestroy()
{
    recognizer.Tapped -= GestureRecognizer_Tapped;
    recognizer.HoldStarted -= GestureRecognizer_HoldStarted;
    recognizer.HoldCompleted -= GestureRecognizer_HoldCompleted;
    recognizer.HoldCanceled -= GestureRecognizer_HoldCanceled;
}
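The steps above (create, specify gestures, subscribe, start capturing, then stop and unsubscribe) can be combined into one component. The following is a minimal sketch, not the article's own sample: the class name TapAndHoldListener and the handler names are hypothetical, and it assumes a Unity version that still ships the legacy UnityEngine.XR.WSA.Input namespace.

```csharp
using UnityEngine;
using UnityEngine.XR.WSA.Input; // legacy Windows Mixed Reality input namespace (assumed available)

// Hypothetical component name; attach it to any GameObject in the scene.
public class TapAndHoldListener : MonoBehaviour
{
    private GestureRecognizer recognizer;

    void Start()
    {
        // 1. Create the recognizer.
        recognizer = new GestureRecognizer();

        // 2. Specify which gestures to watch for.
        recognizer.SetRecognizableGestures(GestureSettings.Tap | GestureSettings.Hold);

        // 3. Subscribe to the events for those gestures.
        recognizer.Tapped += OnTapped;
        recognizer.HoldStarted += OnHoldStarted;
        recognizer.HoldCompleted += OnHoldCompleted;
        recognizer.HoldCanceled += OnHoldCanceled;

        // 4. Start capturing; no events are raised before this call.
        recognizer.StartCapturingGestures();
    }

    private void OnTapped(TappedEventArgs args)
    {
        // args.source.kind reports whether a hand or a controller produced the tap.
        Debug.Log("Tapped by " + args.source.kind + ", tap count " + args.tapCount);
    }

    private void OnHoldStarted(HoldStartedEventArgs args)
    {
        Debug.Log("Hold started by " + args.source.kind);
    }

    private void OnHoldCompleted(HoldCompletedEventArgs args)
    {
        Debug.Log("Hold completed by " + args.source.kind);
    }

    private void OnHoldCanceled(HoldCanceledEventArgs args)
    {
        Debug.Log("Hold canceled by " + args.source.kind);
    }

    void OnDestroy()
    {
        if (recognizer == null) return; // Start() may never have run

        // Stop capturing and unsubscribe before the recognizer goes away.
        recognizer.StopCapturingGestures();
        recognizer.Tapped -= OnTapped;
        recognizer.HoldStarted -= OnHoldStarted;
        recognizer.HoldCompleted -= OnHoldCompleted;
        recognizer.HoldCanceled -= OnHoldCanceled;
        recognizer.Dispose();
    }
}
```

Keeping the recognizer in a private field lets Start() and OnDestroy() share the same instance, which is what the subscribe and unsubscribe snippets above implicitly rely on.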

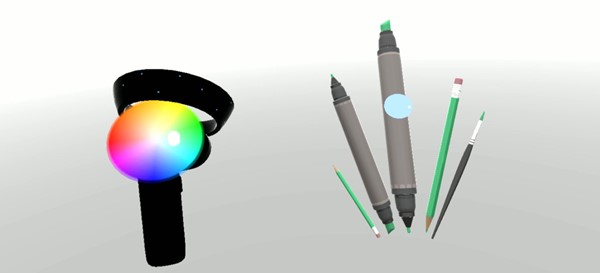

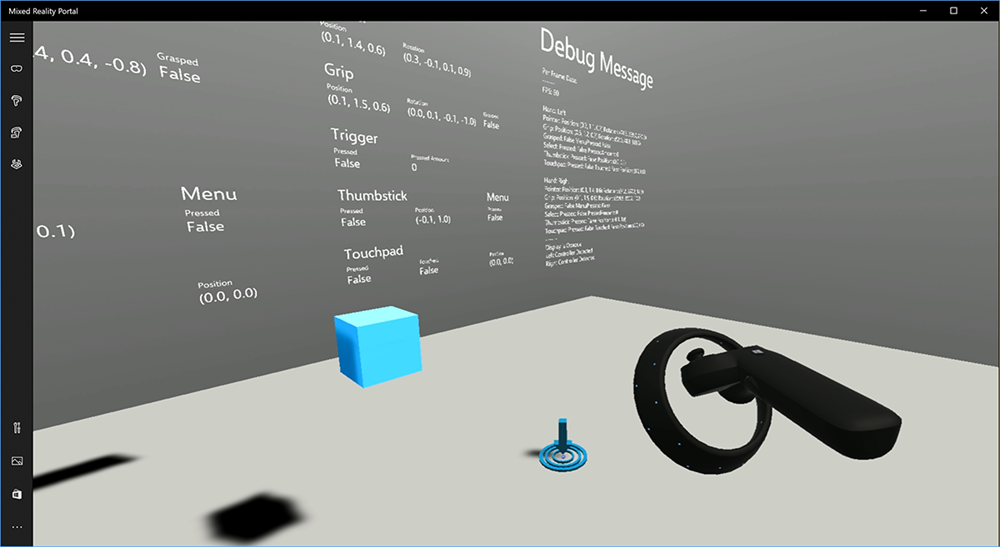

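Rendering the motion controller model in Unity

Motion controller model and teleportation

To render motion controllers in your app that match the physical controllers your users are holding, and that articulate as the various buttons are pressed, you can use the MotionController prefab in the Mixed Reality Toolkit. This prefab dynamically loads the correct glTF model at runtime from the system's installed motion controller driver. It's important to load these models dynamically rather than importing them manually in the editor, so that your app shows physically accurate 3D models for any current and future controllers your users may have.

- Follow the Getting Started instructions to download the Mixed Reality Toolkit and add it to your Unity project.

- If you replaced your camera with the MixedRealityCameraParent prefab as part of the Getting Started steps, you're good to go. That prefab includes motion controller rendering. Otherwise, add Assets/HoloToolkit/Input/Prefabs/MotionControllers.prefab to your scene from the Project pane. If the user teleports within your scene, add that prefab as a child of whatever parent object you use to move the camera around, so that the controllers move along with the user. If your app doesn't involve teleporting, just add the prefab at the root of your scene.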

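Throwing objects

Throwing objects in virtual reality is a harder problem than it may seem at first. As with most physically based interactions, when an in-game throw behaves in an unexpected way, it's immediately obvious and breaks immersion. We've spent some time thinking deeply about how to represent physically correct throwing behavior, and have come up with a few guidelines, enabled through updates to our platform, that we would like to share with you.

You can find an example of how we recommend implementing throwing here. This sample follows these four guidelines:

Use the controller's velocity instead of its position. In the November update to Windows, we introduced a change in behavior for the "Approximate" positional tracking state. In this state, velocity information about the controller continues to be reported for as long as we believe its accuracy is high, which is often longer than the position remains highly accurate.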

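Incorporate the controller's angular velocity. This logic is all contained in the GetThrownObjectVelAngVel static method in the throwing.cs file, within the package linked above:

- Because angular velocity is conserved, the thrown object must keep the same angular velocity it had at the moment of the throw:

objectAngularVelocity = throwingControllerAngularVelocity;

- Because the center of mass of the thrown object is likely not at the origin of the grip pose, its velocity in the user's frame of reference likely differs from the controller's velocity. The portion of the object's velocity contributed in this way is the instantaneous tangential velocity of the thrown object's center of mass around the controller origin. This tangential velocity is the cross product of the controller's angular velocity with the vector from the controller origin to the center of mass of the thrown object.

Vector3 radialVec = thrownObjectCenterOfMass - throwingControllerPos;
Vector3 tangentialVelocity = Vector3.Cross(throwingControllerAngularVelocity, radialVec);

- The total velocity of the thrown object is the sum of the controller's velocity and this tangential velocity:

objectVelocity = throwingControllerVelocity + tangentialVelocity;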
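The three steps above can be gathered into a single function. The following is a minimal sketch rather than the GetThrownObjectVelAngVel method from the sample: the class name ThrowMath and the method name GetThrownObjectVelocities are hypothetical, and it uses System.Numerics.Vector3 so that it compiles outside Unity (inside Unity, UnityEngine.Vector3 offers the same subtraction, addition, and Vector3.Cross operations).

```csharp
using System.Numerics;

// Hypothetical helper; names are illustrative and not taken from the sample package.
public static class ThrowMath
{
    // Returns the linear and angular velocity a thrown object should receive,
    // given the controller's state at the moment of release.
    public static (Vector3 velocity, Vector3 angularVelocity) GetThrownObjectVelocities(
        Vector3 throwingControllerPos,
        Vector3 throwingControllerVelocity,
        Vector3 throwingControllerAngularVelocity,
        Vector3 thrownObjectCenterOfMass)
    {
        // Angular velocity is conserved, so the object keeps the controller's value.
        Vector3 objectAngularVelocity = throwingControllerAngularVelocity;

        // Vector from the controller origin to the object's center of mass.
        Vector3 radialVec = thrownObjectCenterOfMass - throwingControllerPos;

        // Instantaneous tangential velocity of the center of mass around the controller.
        Vector3 tangentialVelocity = Vector3.Cross(throwingControllerAngularVelocity, radialVec);

        // Total velocity is the controller velocity plus the tangential contribution.
        Vector3 objectVelocity = throwingControllerVelocity + tangentialVelocity;

        return (objectVelocity, objectAngularVelocity);
    }
}
```

As a worked case: a controller at the origin moving at (0, 0, 2) and rotating at (0, 1, 0), holding an object whose center of mass is at (0, 0, 1), gives a tangential velocity of (1, 0, 0) and a total object velocity of (1, 0, 2).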

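Pay close attention to the time at which you apply the velocity. When a button is pressed, it can take up to 20 ms for that event to bubble up through Bluetooth to the operating system. This means that if you poll for a controller state change from pressed to not pressed, or the other way around, the controller pose information you get with it will actually be ahead of that change in state. Further, the controller pose presented by the polling API is forward-predicted to reflect a likely pose at the time the frame will be displayed, which could be more than 20 ms in the future. This is good for rendering held objects, but it compounds the timing problem for targeting the object when calculating the trajectory for the moment the user released the throw. Fortunately, with the November update, when a Unity event like InteractionSourcePressed or InteractionSourceReleased is sent, the state includes the historical pose data from the time the button was pressed or released. To get the most accurate controller rendering and controller targeting during throws, you must correctly use polling and eventing, as appropriate:

- For controller rendering each frame, your app should position the controller's GameObject at the forward-predicted controller pose for the current frame's photon time. You get this data from Unity polling APIs like XR.InputTracking.GetLocalPosition or XR.WSA.Input.InteractionManager.GetCurrentReading.

- For controller targeting upon a press or release, your app should raycast and calculate trajectories based on the historical controller pose for that press or release event. You get this data from Unity eventing APIs like InteractionManager.InteractionSourcePressed.

Use the grip pose. Angular velocity and velocity are reported relative to the grip pose, not the pointer pose.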
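To illustrate the eventing side of these guidelines, the following sketch reads the historical grip pose, velocity, and angular velocity from an InteractionSourceReleased event and applies them to a held Rigidbody. It is an assumption-laden illustration, not the sample's code: the class name ThrowOnRelease and the heldObject field are hypothetical, it assumes the legacy UnityEngine.XR.WSA.Input namespace is available, and it assumes the tracking-space origin coincides with the world origin (that is, no offset camera rig), so the event's grip position can be compared directly with a world-space center of mass.

```csharp
using UnityEngine;
using UnityEngine.XR.WSA.Input; // legacy Windows Mixed Reality input namespace (assumed available)

// Hypothetical component; the grabbing logic that assigns heldObject is not shown.
public class ThrowOnRelease : MonoBehaviour
{
    // Assumed to be set by your own grab code while the user holds an object.
    public Rigidbody heldObject;

    void OnEnable()
    {
        InteractionManager.InteractionSourceReleased += OnSourceReleased;
    }

    void OnDisable()
    {
        InteractionManager.InteractionSourceReleased -= OnSourceReleased;
    }

    private void OnSourceReleased(InteractionSourceReleasedEventArgs args)
    {
        // Only react to the grasp button, and only while something is held.
        if (heldObject == null || args.pressType != InteractionSourcePressType.Grasp)
        {
            return;
        }

        // The event state carries the pose from the moment of release,
        // not the forward-predicted pose returned by polling.
        InteractionSourcePose pose = args.state.sourcePose;

        Vector3 gripPosition, velocity, angularVelocity;
        if (pose.TryGetPosition(out gripPosition, InteractionSourceNode.Grip) &&
            pose.TryGetVelocity(out velocity) &&
            pose.TryGetAngularVelocity(out angularVelocity))
        {
            // Assumption: tracking space and world space share an origin.
            Vector3 radialVec = heldObject.worldCenterOfMass - gripPosition;

            heldObject.isKinematic = false;
            heldObject.angularVelocity = angularVelocity;
            heldObject.velocity = velocity + Vector3.Cross(angularVelocity, radialVec);
            heldObject = null;
        }
    }
}
```

If your camera sits under a parent object that moves when the user teleports, the pose values would first need to be transformed by that parent before being combined with world-space data.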

Throwing will continue to improve with future Windows updates, and you can expect to find more information about it here.

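Gestures and motion controllers in MRTK

You can access gestures and motion controllers from the input manager.

Follow along with tutorials

Step-by-step tutorials, with more detailed customization examples, are available in the Mixed Reality Academy:

Next development checkpoint

If you're following the Unity development journey we've laid out, you're in the midst of exploring the MRTK core building blocks. From here, you can continue to the next building block:

Or jump to Mixed Reality platform capabilities and APIs:

You can go back to the Unity development checkpoints at any time.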