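HoloLens (1st gen) and Azure 302: Computer vision

Note

The Mixed Reality Academy tutorials were designed with HoloLens (1st gen) and Mixed Reality immersive headsets in mind. We are keeping them in place for developers who still need guidance on developing for those devices. These tutorials will not be updated with the latest toolsets or interactions used for HoloLens 2. They will be maintained so that they keep working on the supported devices. A new series of tutorials that demonstrates how to develop for HoloLens 2 will be published in the future. This notice will be updated with a link to those tutorials when they are posted.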

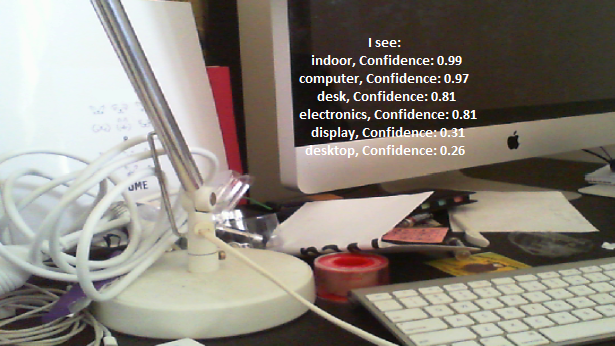

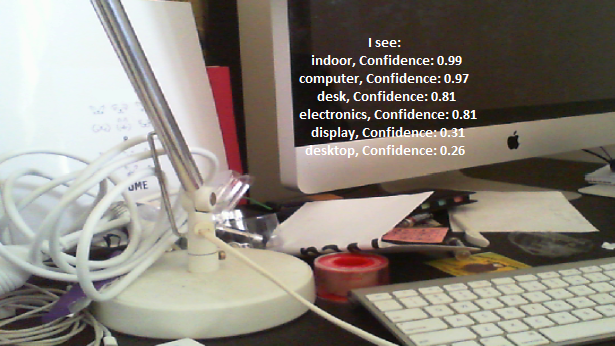

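In this course, you will learn how to recognize visual content within a provided image, using Azure Computer Vision capabilities in a mixed reality application.

Recognition results are displayed as descriptive tags. You can use this service without training a machine learning model. If your implementation requires training a machine learning model, see MR and Azure 302b.

Microsoft Computer Vision is a set of APIs that gives developers cloud-based image processing and analysis (with return information) built on advanced algorithms. Developers upload an image or an image URL, and the Microsoft Computer Vision API algorithms analyze the visual content based on the inputs the user chose. The returned information can include the type and quality of the image, detected human faces (with their coordinates), and tags or categories for the image. For more information, visit the Azure Computer Vision API page.

Having completed this course, you will have a mixed reality HoloLens application that can do the following:

- Using the Tap gesture, the camera of the HoloLens captures an image.

- The image is sent to the Azure Computer Vision API service.

- The recognized objects are listed in a simple UI group positioned in the Unity scene.

In your application, it is up to you how you integrate the results with your design. This course is designed to teach you how to integrate an Azure service with your Unity project. It is your job to use the knowledge you gain from this course to enhance your mixed reality application.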

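Device support

| Course | HoloLens | Immersive headsets |
|---|---|---|
| MR and Azure 302: Computer vision | ✔ | ✔ |

Note

While this course primarily focuses on HoloLens, you can also apply what you learn here to Windows Mixed Reality immersive (VR) headsets. Because immersive (VR) headsets do not have accessible cameras, you will need an external camera connected to your PC. As you follow the course, you will see notes on any changes you might need to make to support immersive (VR) headsets.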

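Prerequisites

Note

This tutorial is designed for developers who have basic experience with Unity and C#. Please also be aware that the prerequisites and written instructions in this document represent what had been tested and verified at the time of writing (May 2018). You are free to use the latest software, such as the versions listed in the Install the tools article, but do not assume that the information in this course will exactly match what you find in software newer than the versions listed below.

We recommend the following hardware and software for this course:

- A development PC, compatible with Windows Mixed Reality for immersive (VR) headset development

- Windows 10 Fall Creators Update (or later) with Developer mode enabled

- The latest Windows 10 SDK

- Unity 2017.4

- Visual Studio 2017

- A Windows Mixed Reality immersive (VR) headset or Microsoft HoloLens with Developer mode enabled

- A camera connected to your PC (for immersive headset development)

- Internet access for Azure setup and Computer Vision API retrieval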

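Before you start

- To avoid problems when building this project, it is strongly suggested that you create the project mentioned in this tutorial in a root or near-root folder (long folder paths can cause issues at build time).

- Set up and test your HoloLens. If you need support setting up your HoloLens, see the HoloLens setup article.

- It is a good idea to perform Calibration and Sensor Tuning when you begin developing a new HoloLens app (sometimes it can help to perform those tasks for each user).

For help with Calibration, see the HoloLens Calibration article.

For help with Sensor Tuning, see the HoloLens Sensor Tuning article.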

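Chapter 1 - The Azure Portal

To use the Computer Vision API service in Azure, you need to configure an instance of the service that is made available to your application.

First, log in to the Azure Portal.

Note

If you do not already have an Azure account, you will need to create one. If you are following this tutorial in a classroom or lab situation, ask your instructor or one of the proctors for help setting up your new account.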

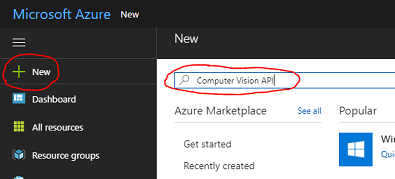

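Once you are logged in, click New in the top left corner, search for Computer Vision API, and press Enter.

Note

The word New may have been replaced with Create a resource in newer portals.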

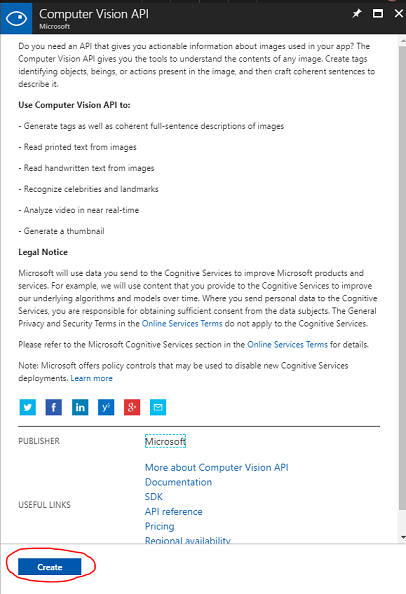

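The new page provides a description of the Computer Vision API service. At the bottom left of this page, select the Create button to create an association with this service.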

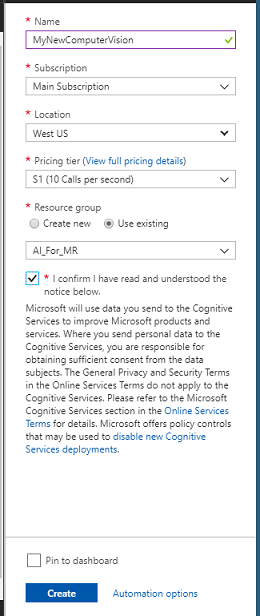

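Once you have clicked Create:

Insert your desired name for this service instance.

Select a Subscription.

Select the Pricing Tier appropriate for you. If this is the first time you are creating a Computer Vision API service, a free tier (named F0) should be available to you.

Choose a Resource Group or create a new one. A resource group provides a way to monitor, control access to, provision, and manage billing for a collection of Azure assets. It is recommended to keep all the Azure services associated with a single project (such as these labs) under a common resource group.

If you wish to read more about Azure Resource Groups, visit the resource group article.

Determine the Location for your resource group (if you are creating a new resource group). The location would ideally be in the region where the application will run. Some Azure assets are only available in certain regions.

You will also need to confirm that you have understood the Terms and Conditions applied to this service.

Click Create.

Once you have clicked Create, you will have to wait for the service to be created, which might take a minute.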

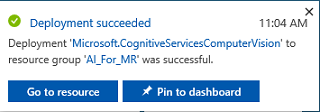

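A notification appears in the portal once the service instance is created.

Click the notification to explore your new service instance.

Click the Go to resource button in the notification to explore your new service instance. You will be taken to your new Computer Vision API service instance.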

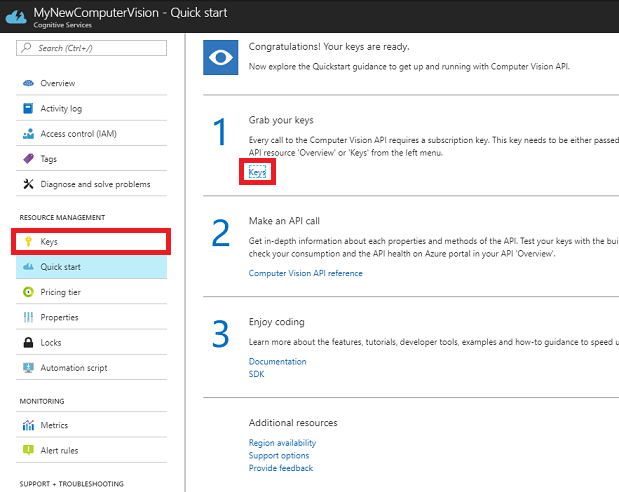

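Within this tutorial, your application will need to make calls to your service, which is done by using your service's subscription key.

On the Quick start page of your Computer Vision API service, navigate to the first step, Grab your keys, and click Keys (you can also do this by clicking the blue Keys hyperlink, denoted by the key icon, in the service navigation menu). This reveals your service keys.

Take a copy of one of the displayed keys, as you will need it later in your project.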

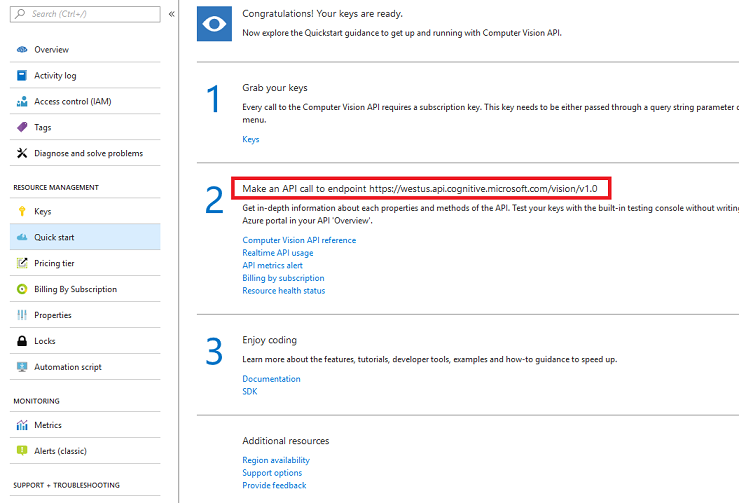

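Go back to the Quick start page and fetch your endpoint from there. Be aware that yours may differ from the one used in this course, depending on your region (if it does, you will need to change your code later). Take a copy of this endpoint for use later.

Tip

You can check the various regional endpoints in the Azure documentation.

Chapter 2 - Set up the Unity project

The following is a typical setup for developing with mixed reality, and as such is a good template for other projects.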

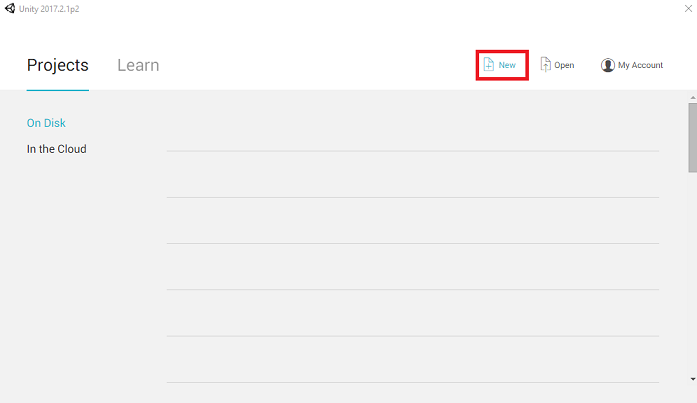

Open Unity and click New.

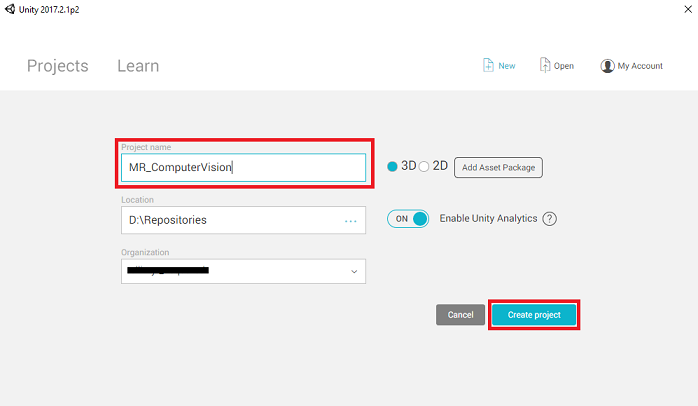

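You now need to provide a Unity project name. Insert MR_ComputerVision. Make sure the project type is set to 3D. Set the Location to somewhere appropriate for you (remember, closer to root directories is better). Then click Create project.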

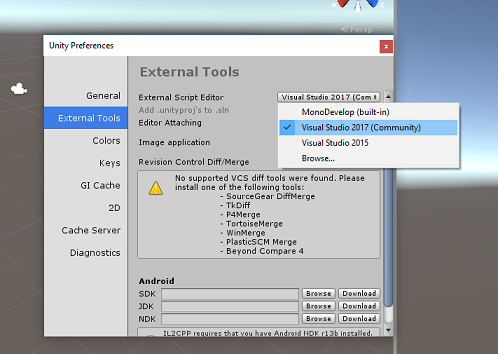

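With Unity open, it is worth checking that the default Script Editor is set to Visual Studio. Go to Edit > Preferences, and then in the new window navigate to External Tools. Change External Script Editor to Visual Studio 2017. Close the Preferences window.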

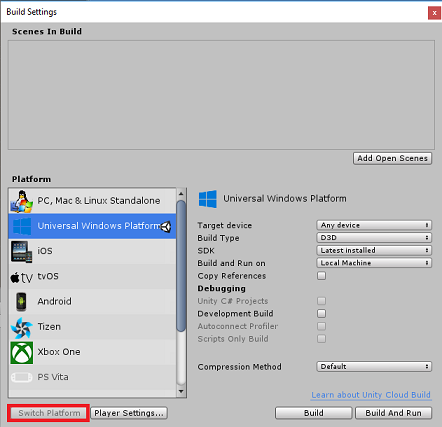

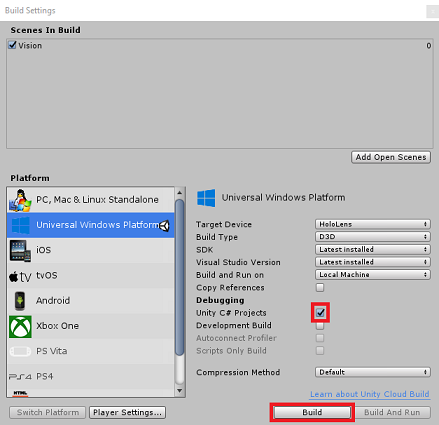

Next, go to File > Build Settings, select Universal Windows Platform, and then click the Switch Platform button to apply your selection.

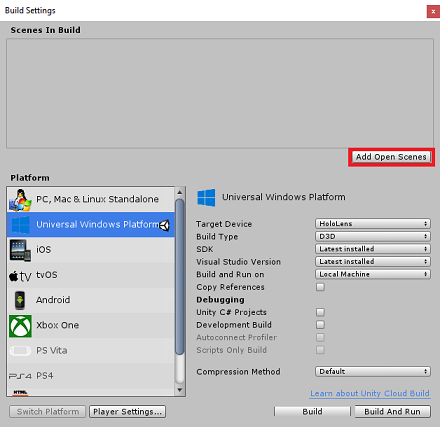

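While still in File > Build Settings, make sure that:

Target Device is set to HoloLens

For immersive headsets, set Target Device to Any Device.

Build Type is set to D3D

SDK is set to Latest installed

Visual Studio Version is set to Latest installed

Build and Run is set to Local Machine

Save the scene and add it to the build.

Do this by selecting Add Open Scenes. A save window will appear.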

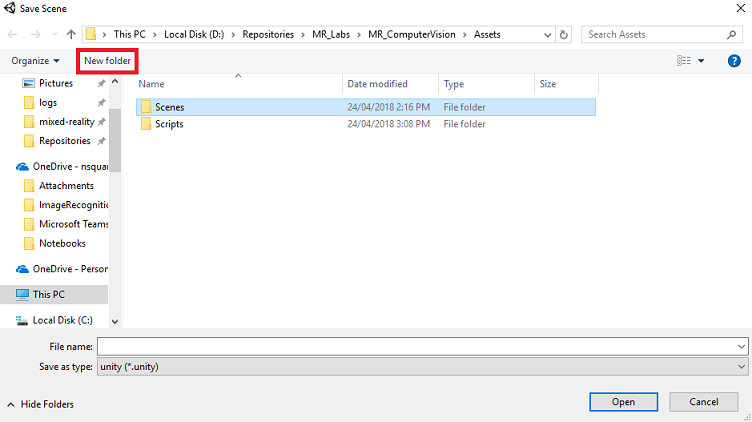

Create a new folder for this and any future scenes: select the New folder button and name the new folder Scenes.

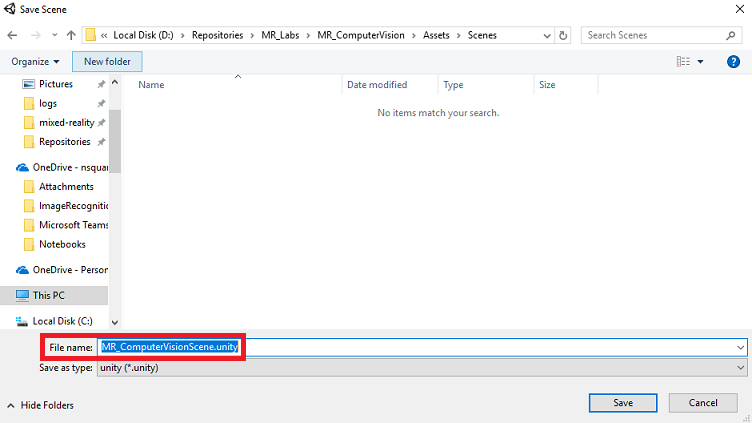

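Open your newly created Scenes folder, type MR_ComputerVisionScene in the File name: text field, and then click Save.

Be aware that you must save your Unity scenes within the Assets folder, as they must be associated with the Unity project. Creating the Scenes folder (and other similar folders) is a typical way of structuring a Unity project.

The remaining settings in Build Settings should be left as default for now.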

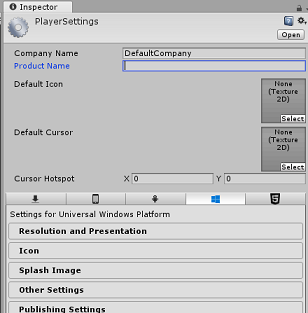

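In the Build Settings window, click the Player Settings button. This opens the related panel in the space where the Inspector is located.

In this panel, a few settings need to be verified: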

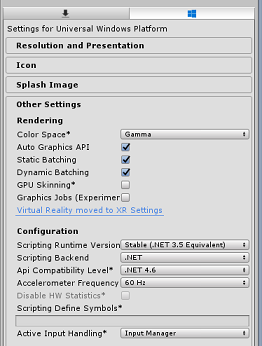

In the Other Settings tab:

Scripting Runtime Version should be Stable (.NET 3.5 Equivalent).

Scripting Backend should be .NET

API Compatibility Level should be .NET 4.6

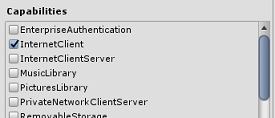

Within the Publishing Settings tab, under Capabilities, check:

InternetClient

Webcam

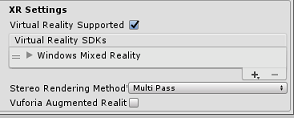

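Further down the panel, in XR Settings (found below Publish Settings), tick Virtual Reality Supported, and make sure the Windows Mixed Reality SDK is added.

Back in Build Settings, Unity C# Projects is no longer greyed out; tick the checkbox next to it.

Close the Build Settings window.

Save your scene and project (File > Save Scene / File > Save Project).

Chapter 3 - Main Camera setup

Important

If you wish to skip the Unity setup part of this course and continue straight into code, you can download the course's .unitypackage, import it into your project as a Custom Package, and then continue from Chapter 5.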

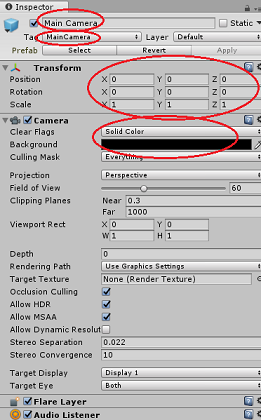

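In the Hierarchy Panel, select the Main Camera.

Once selected, you will be able to see all the components of the Main Camera in the Inspector Panel.

The Camera object must be named Main Camera (note the spelling!)

The Main Camera Tag must be set to MainCamera (note the spelling!)

Make sure the Transform Position is set to 0, 0, 0

Set Clear Flags to Solid Color (ignore this for immersive headsets).

Set the Background Color of the Camera component to black, Alpha 0 (hex code: #00000000) (ignore this for immersive headsets).

Next, you will have to create a simple Cursor object attached to the Main Camera, which will help you position the image analysis output when the application is running. This Cursor determines the center point of the camera focus.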

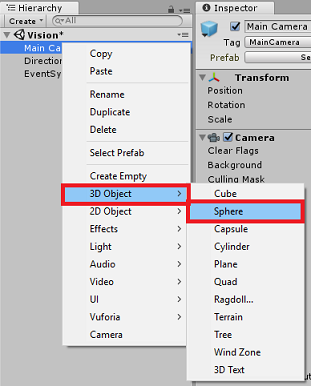

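To create the Cursor:

In the Hierarchy Panel, right-click the Main Camera. Under 3D Object, click Sphere.

Rename the Sphere to Cursor (double-click the object, or press the F2 key with the object selected), and make sure it is located as a child of the Main Camera.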

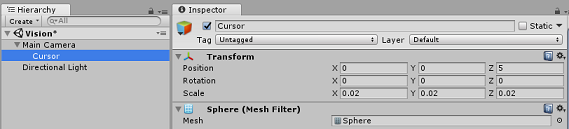

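In the Hierarchy Panel, click the Cursor. With the Cursor selected, adjust the following variables in the Inspector Panel:

Set the Transform Position to 0, 0, 5

Set the Scale to 0.02, 0.02, 0.02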
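Because the Cursor is a child of the Main Camera with a local position of 0, 0, 5, it always sits five units straight ahead of the camera. The sketch below illustrates that relationship in plain C# using System.Numerics rather than Unity's own types, so it can run outside the editor. The class and method names are hypothetical, and it assumes the camera has a scale of 1; inside Unity the engine performs this calculation for you.

```csharp
using System.Numerics;

// Hypothetical illustration only: Unity computes child positions itself.
public static class CursorPlacementSketch
{
    // Local offset of the Cursor under the Main Camera, as set in this chapter.
    public static readonly Vector3 CursorLocalOffset = new Vector3(0f, 0f, 5f);

    // World position = camera position + camera rotation applied to the local offset.
    // Assumes the camera's scale is 1, so no scaling term is needed.
    public static Vector3 CursorWorldPosition(Vector3 cameraPosition, Quaternion cameraRotation)
    {
        return cameraPosition + Vector3.Transform(CursorLocalOffset, cameraRotation);
    }
}
```

With an unrotated camera at 1, 2, 3, this places the cursor at 1, 2, 8; turning the camera swings the cursor around it at the same distance.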

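Chapter 4 - Set up the label system

Once you have captured an image with the HoloLens camera, that image is sent to your Azure Computer Vision API service instance for analysis.

The result of that analysis is a list of recognized objects called Tags.

You will use Labels (as 3D text in world space) to display these Tags at the location where the photo was taken.

The following steps show how to set up the Label object.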

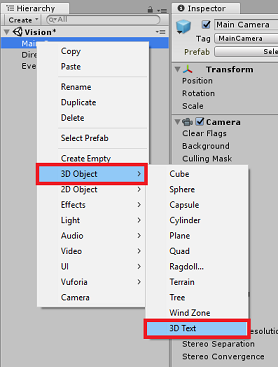

Right-click anywhere in the Hierarchy Panel (the location does not matter at this point) and, under 3D Object, add a 3D Text. Name it LabelText.

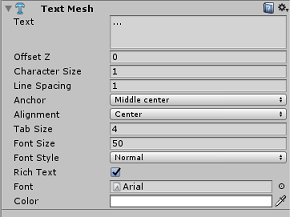

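In the Hierarchy Panel, click LabelText. With LabelText selected, adjust the following variables in the Inspector Panel:

- Set the Position to 0, 0, 0

- Set the Scale to 0.01, 0.01, 0.01

- In the Text Mesh component:

    - Replace all the text within Text with "..."

    - Set the Anchor to Middle Center

    - Set the Alignment to Center

    - Set the Tab Size to 4

    - Set the Font Size to 50

    - Set the Color to #FFFFFFFF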

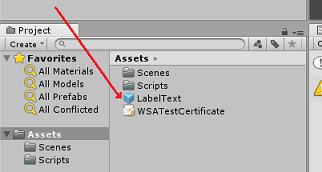

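Drag LabelText from the Hierarchy Panel into the Assets folder in the Project Panel. This turns LabelText into a Prefab so that it can be instantiated in code.

Delete LabelText from the Hierarchy Panel so that it is not displayed in the opening scene. Because it is now a prefab, which you will instantiate from your Assets folder whenever a label is needed, there is no need to keep it in the scene.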

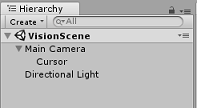

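The final object structure in the Hierarchy Panel should look like the one shown in the image.

Chapter 5 - Create the ResultsLabel class

The first script you need to create is the ResultsLabel class, which is responsible for the following:

- Creating the Labels in the appropriate world space, relative to the position of the Camera.

- Displaying the Tags returned from the image analysis.

To create this class: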

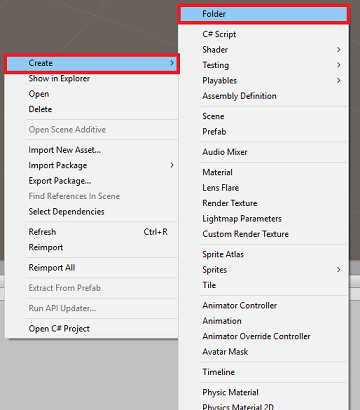

Right-click in the Project Panel, then click Create > Folder. Name the folder Scripts.

With the Scripts folder created, double-click it to open it. Then, within that folder, right-click and select Create > C# Script. Name the script ResultsLabel.

Double-click the new ResultsLabel script to open it in Visual Studio.

Insert the following code in the ResultsLabel class:

```csharp
using System.Collections.Generic;
using UnityEngine;

public class ResultsLabel : MonoBehaviour
{
    public static ResultsLabel instance;

    public GameObject cursor;

    public Transform labelPrefab;

    [HideInInspector]
    public Transform lastLabelPlaced;

    [HideInInspector]
    public TextMesh lastLabelPlacedText;

    private void Awake()
    {
        // allows this instance to behave like a singleton
        instance = this;
    }

    /// <summary>
    /// Instantiate a Label in the appropriate location relative to the Main Camera.
    /// </summary>
    public void CreateLabel()
    {
        lastLabelPlaced = Instantiate(labelPrefab, cursor.transform.position, transform.rotation);

        lastLabelPlacedText = lastLabelPlaced.GetComponent<TextMesh>();

        // Change the text of the label to show that has been placed
        // The final text will be set at a later stage
        lastLabelPlacedText.text = "Analysing...";
    }

    /// <summary>
    /// Set the Tags as Text of the last Label created.
    /// </summary>
    public void SetTagsToLastLabel(Dictionary<string, float> tagsDictionary)
    {
        lastLabelPlacedText = lastLabelPlaced.GetComponent<TextMesh>();

        // At this point we go through all the tags received and set them as text of the label
        lastLabelPlacedText.text = "I see: \n";

        foreach (KeyValuePair<string, float> tag in tagsDictionary)
        {
            lastLabelPlacedText.text += tag.Key + ", Confidence: " + tag.Value.ToString("0.00 \n");
        }
    }
}
```

Be sure to save your changes in Visual Studio before returning to Unity.

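Back in the Unity Editor, click the ResultsLabel class in the Scripts folder and drag it onto the Main Camera object in the Hierarchy Panel.

Click the Main Camera and look at the Inspector Panel.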

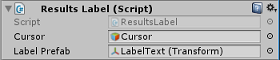

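You will notice that the script you just dragged onto the Camera has two fields: Cursor and Label Prefab.

Drag the object called Cursor from the Hierarchy Panel to the slot named Cursor, as shown in the image.

Drag the object called LabelText from the Assets folder in the Project Panel to the slot named Label Prefab, as shown in the image.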
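To see what text SetTagsToLastLabel ends up putting on a label, the following plain C# sketch builds the same kind of string outside Unity. It is an illustration, not the course's code: the class and method names are hypothetical, it uses a StringBuilder instead of repeated string concatenation, and it formats with the invariant culture and without the trailing space that the course's "0.00 \n" format string produces.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Text;

// Hypothetical helper that mirrors the label text built by ResultsLabel.
public static class LabelTextSketch
{
    public static string BuildLabelText(IDictionary<string, float> tags)
    {
        var builder = new StringBuilder("I see: \n");

        foreach (KeyValuePair<string, float> tag in tags)
        {
            // One line per tag: name, then confidence rounded to two decimals.
            builder.Append(tag.Key)
                   .Append(", Confidence: ")
                   .Append(tag.Value.ToString("0.00", CultureInfo.InvariantCulture))
                   .Append('\n');
        }

        return builder.ToString();
    }
}
```

Keeping the formatting in a small function like this makes it easy to change the wording of the label later, for example when attempting the bonus exercises.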

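Chapter 6 - Create the ImageCapture class

The next class you are going to create is the ImageCapture class. This class is responsible for the following:

- Capturing an image with the HoloLens camera and storing it in the App folder.

- Capturing Tap gestures from the user.

To create this class:

Go to the Scripts folder you created previously.

Right-click inside the folder and select Create > C# Script. Name the script ImageCapture.

Double-click the new ImageCapture script to open it in Visual Studio.

Add the following namespaces to the top of the file:

```csharp
using System.IO;
using System.Linq;
using UnityEngine;
using UnityEngine.XR.WSA.Input;
using UnityEngine.XR.WSA.WebCam;
```

Then add the following variables inside the ImageCapture class, above the Start() method:

```csharp
    public static ImageCapture instance;
    public int tapsCount;
    private PhotoCapture photoCaptureObject = null;
    private GestureRecognizer recognizer;
    private bool currentlyCapturing = false;
```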

The tapsCount variable stores the number of tap gestures captured from the user. This number is used in the naming of the captured images.
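As a small illustration of that naming scheme, the sketch below builds a capture path from a folder and a tap count in plain C#. The class and method names are hypothetical; in the course's code the folder is Unity's Application.persistentDataPath, which is passed in here as an ordinary string so that the sketch runs outside Unity.

```csharp
using System.IO;

// Hypothetical helper showing how a tap count maps to an image file path.
public static class CaptureFileNameSketch
{
    public static string BuildCapturePath(string folder, int tapsCount)
    {
        // Produces names such as CapturedImage1.jpg, CapturedImage2.jpg, ...
        string fileName = string.Format("CapturedImage{0}.jpg", tapsCount);

        // Path.Combine inserts the platform's directory separator.
        return Path.Combine(folder, fileName);
    }
}
```

Because the counter only ever increases while the app is running, each capture in a session gets its own file rather than overwriting the previous one.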

Code for the Awake() and Start() methods now needs to be added. These methods are called when the class initializes:

```csharp
    private void Awake()
    {
        // Allows this instance to behave like a singleton
        instance = this;
    }

    void Start()
    {
        // subscribing to the HoloLens API gesture recognizer to track user gestures
        recognizer = new GestureRecognizer();
        recognizer.SetRecognizableGestures(GestureSettings.Tap);
        recognizer.Tapped += TapHandler;
        recognizer.StartCapturingGestures();
    }
```

Implement a handler that will be called when a Tap gesture occurs.

```csharp
    /// <summary>
    /// Respond to Tap Input.
    /// </summary>
    private void TapHandler(TappedEventArgs obj)
    {
        // Only allow capturing, if not currently processing a request.
        if(currentlyCapturing == false)
        {
            currentlyCapturing = true;

            // increment taps count, used to name images when saving
            tapsCount++;

            // Create a label in world space using the ResultsLabel class
            ResultsLabel.instance.CreateLabel();

            // Begins the image capture and analysis procedure
            ExecuteImageCaptureAndAnalysis();
        }
    }
```

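The TapHandler() method increments the number of taps captured from the user and uses the current Cursor position to determine where to place a new Label.

This method then calls the ExecuteImageCaptureAndAnalysis() method to begin the core functionality of this application.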
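The guard at the top of TapHandler() can be viewed in isolation as a simple gate: a tap starts a capture only when no capture is already running. The following plain C# sketch models that idea without any Unity types. The class and member names are hypothetical, and it is a simplification of the course's code rather than a replacement for it.

```csharp
// Hypothetical, Unity-free model of the currentlyCapturing / tapsCount logic.
public sealed class CaptureGateSketch
{
    private bool currentlyCapturing;

    // Number of taps that were allowed to start a capture.
    public int TapsCount { get; private set; }

    // Returns true when the tap may start a capture,
    // false when a capture is already in progress.
    public bool TryBeginCapture()
    {
        if (currentlyCapturing)
        {
            return false;
        }

        currentlyCapturing = true;
        TapsCount++;
        return true;
    }

    // Call when the capture has been handed off, so the next tap is accepted.
    public void EndCapture()
    {
        currentlyCapturing = false;
    }
}
```

Taps that arrive while a capture is running are ignored rather than queued, which is why the count reflects accepted taps only.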

Once the image has been captured and stored, the following handlers are called. If the process is successful, the result is passed to the VisionManager (which you have yet to create) for analysis.

```csharp
    /// <summary>
    /// Register the full execution of the Photo Capture. If successful, it will begin
    /// the Image Analysis process.
    /// </summary>
    void OnCapturedPhotoToDisk(PhotoCapture.PhotoCaptureResult result)
    {
        // Call StopPhotoMode once the image has successfully captured
        photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);
    }

    void OnStoppedPhotoMode(PhotoCapture.PhotoCaptureResult result)
    {
        // Dispose from the object in memory and request the image analysis
        // to the VisionManager class
        photoCaptureObject.Dispose();
        photoCaptureObject = null;
        StartCoroutine(VisionManager.instance.AnalyseLastImageCaptured());
    }
```

Then add the method that the application uses to start the image capture process and store the image.

```csharp
    /// <summary>
    /// Begin process of Image Capturing and send To Azure
    /// Computer Vision service.
    /// </summary>
    private void ExecuteImageCaptureAndAnalysis()
    {
        // Set the camera resolution to be the highest possible
        Resolution cameraResolution = PhotoCapture.SupportedResolutions.OrderByDescending((res) => res.width * res.height).First();

        Texture2D targetTexture = new Texture2D(cameraResolution.width, cameraResolution.height);

        // Begin capture process, set the image format
        PhotoCapture.CreateAsync(false, delegate (PhotoCapture captureObject)
        {
            photoCaptureObject = captureObject;
            CameraParameters camParameters = new CameraParameters();
            camParameters.hologramOpacity = 0.0f;
            camParameters.cameraResolutionWidth = targetTexture.width;
            camParameters.cameraResolutionHeight = targetTexture.height;
            camParameters.pixelFormat = CapturePixelFormat.BGRA32;

            // Capture the image from the camera and save it in the App internal folder
            captureObject.StartPhotoModeAsync(camParameters, delegate (PhotoCapture.PhotoCaptureResult result)
            {
                string filename = string.Format(@"CapturedImage{0}.jpg", tapsCount);

                string filePath = Path.Combine(Application.persistentDataPath, filename);

                VisionManager.instance.imagePath = filePath;

                photoCaptureObject.TakePhotoAsync(filePath, PhotoCaptureFileOutputFormat.JPG, OnCapturedPhotoToDisk);

                currentlyCapturing = false;
            });
        });
    }
```

Warning

At this point you will notice an error in the Unity Editor Console Panel. This is because the code references the VisionManager class, which you will create in the next chapter.

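Chapter 7 - Call to Azure and image analysis

The last script you need to create is the VisionManager class.

This class is responsible for the following:

- Loading the latest captured image as an array of bytes.

- Sending the byte array to your Azure Computer Vision API service instance for analysis.

- Receiving the response as a JSON string.

- Deserializing the response and passing the resulting Tags to the ResultsLabel class.

To create this class:

Double-click the Scripts folder to open it.

Right-click inside the Scripts folder, then click Create > C# Script. Name the script VisionManager.

Double-click the new script to open it in Visual Studio.

Update the namespaces at the top of the VisionManager class so that they match the following:

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.IO;
using UnityEngine;
using UnityEngine.Networking;
```

At the top of your script, inside the VisionManager class (above the Start() method), you now need to create two classes that represent the deserialized JSON response from Azure:

```csharp
    [System.Serializable]
    public class TagData
    {
        public string name;
        public float confidence;
    }

    [System.Serializable]
    public class AnalysedObject
    {
        public TagData[] tags;
        public string requestId;
        public object metadata;
    }
```

Note

The TagData and AnalysedObject classes need the [System.Serializable] attribute added before their declarations so that they can be deserialized with the Unity libraries.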
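To make the shape of that response more concrete, here is a minimal sketch of the deserialization step in plain C#, outside Unity. It is an assumption-laden illustration: it uses System.Text.Json (available in modern .NET, not in the Unity version this course targets, where JsonUtility is used instead), the class names are hypothetical stand-ins for TagData and AnalysedObject, and any JSON you feed it should follow the tags / name / confidence layout that the course's classes imply.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical stand-ins for the course's TagData and AnalysedObject classes.
public class TagDataSketch
{
    public string Name { get; set; }
    public float Confidence { get; set; }
}

public class AnalysedObjectSketch
{
    public List<TagDataSketch> Tags { get; set; }
    public string RequestId { get; set; }
}

public static class ResponseParsingSketch
{
    // Turns a JSON response into the name -> confidence dictionary
    // that ResultsLabel.SetTagsToLastLabel expects.
    public static Dictionary<string, float> ParseTags(string json)
    {
        // Case-insensitive matching lets "tags" map onto the Tags property.
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };
        AnalysedObjectSketch parsed = JsonSerializer.Deserialize<AnalysedObjectSketch>(json, options);

        var tags = new Dictionary<string, float>();
        if (parsed == null || parsed.Tags == null)
        {
            return tags; // no tags in the response
        }

        foreach (TagDataSketch tag in parsed.Tags)
        {
            // The indexer avoids an exception if a tag name repeats.
            tags[tag.Name] = tag.Confidence;
        }

        return tags;
    }
}
```

Properties the sketch does not declare, such as metadata, are simply skipped by the serializer, so the dictionary contains only the tag names and their confidence values.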

Add the following variables to the VisionManager class:

```csharp
    public static VisionManager instance;

    // you must insert your service key here!
    private string authorizationKey = "- Insert your key here -";
    private const string ocpApimSubscriptionKeyHeader = "Ocp-Apim-Subscription-Key";
    private string visionAnalysisEndpoint = "https://westus.api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Tags";   // This is where you need to update your endpoint, if you set your location to something other than west-us.

    internal byte[] imageBytes;

    internal string imagePath;
```

Warning

Make sure you insert your authentication key into the authorizationKey variable. You noted your authentication key in Chapter 1 of this course.

Warning

The visionAnalysisEndpoint variable might differ from the one specified in this example. The west-us value strictly refers to service instances created for the West US region. Update this variable with your own endpoint URL; here are some examples of what that might look like:

- West Europe: https://westeurope.api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Tags

- Southeast Asia: https://southeastasia.api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Tags

- Australia East: https://australiaeast.api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Tags
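The regional endpoints above all follow the same pattern, with only the region name changing. As a rough sketch, that pattern can be captured in a small helper so the region is set in one place. The class and method names are hypothetical, and the URL template is inferred solely from the examples in this course (the v1.0 analyze endpoint requesting Tags); check the endpoint shown in your own Azure portal rather than relying on it.

```csharp
using System;

// Hypothetical helper; the URL pattern is inferred from the course's examples.
public static class EndpointSketch
{
    public static string BuildAnalyzeEndpoint(string region)
    {
        if (string.IsNullOrWhiteSpace(region))
        {
            throw new ArgumentException("A region such as \"westus\" is required.", nameof(region));
        }

        // Region names in the examples are lowercase with no spaces.
        string normalizedRegion = region.Trim().ToLowerInvariant();

        return "https://" + normalizedRegion
             + ".api.cognitive.microsoft.com/vision/v1.0/analyze?visualFeatures=Tags";
    }
}
```

For example, passing westeurope yields the West Europe URL listed above, which could then be assigned to visionAnalysisEndpoint.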

Code for the Awake() method now needs to be added.

```csharp
    private void Awake()
    {
        // allows this instance to behave like a singleton
        instance = this;
    }
```

Next, add the coroutine (with the static stream method below it) that obtains the results of the analysis of the image captured by the ImageCapture class.

```csharp
    /// <summary>
    /// Call the Computer Vision Service to submit the image.
    /// </summary>
    public IEnumerator AnalyseLastImageCaptured()
    {
        WWWForm webForm = new WWWForm();
        using (UnityWebRequest unityWebRequest = UnityWebRequest.Post(visionAnalysisEndpoint, webForm))
        {
            // gets a byte array out of the saved image
            imageBytes = GetImageAsByteArray(imagePath);
            unityWebRequest.SetRequestHeader("Content-Type", "application/octet-stream");
            unityWebRequest.SetRequestHeader(ocpApimSubscriptionKeyHeader, authorizationKey);

            // the download handler will help receiving the analysis from Azure
            unityWebRequest.downloadHandler = new DownloadHandlerBuffer();

            // the upload handler will help uploading the byte array with the request
            unityWebRequest.uploadHandler = new UploadHandlerRaw(imageBytes);
            unityWebRequest.uploadHandler.contentType = "application/octet-stream";

            yield return unityWebRequest.SendWebRequest();

            long responseCode = unityWebRequest.responseCode;

            try
            {
                string jsonResponse = null;
                jsonResponse = unityWebRequest.downloadHandler.text;

                // The response will be in Json format
                // therefore it needs to be deserialized into the classes AnalysedObject and TagData
                AnalysedObject analysedObject = new AnalysedObject();
                analysedObject = JsonUtility.FromJson<AnalysedObject>(jsonResponse);

                if (analysedObject.tags == null)
                {
                    Debug.Log("analysedObject.tagData is null");
                }
                else
                {
                    Dictionary<string, float> tagsDictionary = new Dictionary<string, float>();

                    foreach (TagData td in analysedObject.tags)
                    {
                        TagData tag = td as TagData;
                        tagsDictionary.Add(tag.name, tag.confidence);
                    }

                    ResultsLabel.instance.SetTagsToLastLabel(tagsDictionary);
                }
            }
            catch (Exception exception)
            {
                Debug.Log("Json exception.Message: " + exception.Message);
            }

            yield return null;
        }
    }

    /// <summary>
    /// Returns the contents of the specified file as a byte array.
    /// </summary>
    private static byte[] GetImageAsByteArray(string imageFilePath)
    {
        // The using blocks close the file handle once the bytes have been read
        using (FileStream fileStream = new FileStream(imageFilePath, FileMode.Open, FileAccess.Read))
        using (BinaryReader binaryReader = new BinaryReader(fileStream))
        {
            return binaryReader.ReadBytes((int)fileStream.Length);
        }
    }
```

Be sure to save your changes in Visual Studio before returning to Unity.

Back in the Unity Editor, click the VisionManager and ImageCapture classes in the Scripts folder and drag them onto the Main Camera object in the Hierarchy Panel.

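Chapter 8 - Before building

To perform a thorough test of your application, you will need to sideload it onto your HoloLens. Before you do, make sure that:

- All the settings mentioned in Chapter 2 are set correctly.

- All the scripts are attached to the Main Camera object.

- All the fields in the Main Camera Inspector Panel are assigned properly.

- Your authentication key is inserted into the authorizationKey variable.

- The endpoint in your VisionManager script matches your region (this document uses west-us by default).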
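The last two items on that checklist are easy to forget, so a small sanity check can help. The sketch below is a hypothetical, Unity-free helper that reports obvious problems with the key and endpoint strings before you build. The class and method names are assumptions, and passing this check does not prove that the key or endpoint is valid; only a real request to your Azure service can confirm that.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical pre-build sanity check for the two VisionManager strings.
public static class PreBuildCheckSketch
{
    // The placeholder text used in the course's VisionManager code.
    public const string KeyPlaceholder = "- Insert your key here -";

    public static List<string> FindProblems(string authorizationKey, string endpoint)
    {
        var problems = new List<string>();

        if (string.IsNullOrWhiteSpace(authorizationKey) || authorizationKey == KeyPlaceholder)
        {
            problems.Add("authorizationKey still holds the placeholder or is empty.");
        }

        Uri uri;
        bool isHttps = Uri.TryCreate(endpoint, UriKind.Absolute, out uri)
                       && uri.Scheme == Uri.UriSchemeHttps;

        if (!isHttps)
        {
            problems.Add("visionAnalysisEndpoint is not an absolute https URL.");
        }
        else if (uri.Query.IndexOf("visualFeatures=", StringComparison.OrdinalIgnoreCase) < 0)
        {
            problems.Add("visionAnalysisEndpoint has no visualFeatures query parameter.");
        }

        return problems;
    }
}
```

An empty list means nothing obviously wrong was found; any returned messages point at the checklist item to revisit.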

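Chapter 9 - Build the UWP solution and sideload the application

Everything needed for the Unity section of this project has now been completed, so it is time to build it from Unity.

Navigate to Build Settings (File > Build Settings…).

In the Build Settings window, tick Unity C# Projects if it is not already ticked.

Click Build. Unity launches a File Explorer window, where you need to create and then select a folder to build the app into. Create that folder now and name it App. With the App folder selected, press Select Folder.

Unity begins building your project to the App folder.

Once Unity has finished building (it might take some time), it opens a File Explorer window at the location of your build. Check your task bar, as the window may not always appear above your other windows, but the task bar will show that a new window has been added.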

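Chapter 10 - Deploy to HoloLens

To deploy on HoloLens:

You will need the IP address of your HoloLens (for remote deployment), and you need to make sure your HoloLens is in Developer Mode. To do this:

- While wearing your HoloLens, open Settings.

- Go to Network & Internet > Wi-Fi > Advanced Options

- Note the IPv4 address.

- Next, navigate back to Settings, and then go to Update & Security > For Developers

- Set Developer Mode to On.

Navigate to your new Unity build (the App folder) and open the solution file with Visual Studio.

In the Solution Configuration, select Debug.

In the Solution Platform, select x86, Remote Machine.

Go to the Build menu and click Deploy Solution to sideload the application to your HoloLens.

Your app should now appear in the list of installed apps on your HoloLens, ready to be launched!

Note

To deploy to an immersive headset, set the Solution Platform to Local Machine, and set the Configuration to Debug, with x86 as the Platform. Then, using the Build menu, select Deploy Solution to deploy to the local machine.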

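Your finished Computer Vision API application

Congratulations, you have built a mixed reality app that uses the Azure Computer Vision API to recognize real-world objects and display the confidence of what has been seen.

Bonus exercises

Exercise 1

Just as you used the Tags parameter (as evidenced in the endpoint used in the VisionManager), extend the app to detect other information; look up the other parameters you have access to in the Computer Vision API documentation.

Exercise 2

Display the returned Azure data in a more conversational and readable way, perhaps hiding the numbers, as though a bot were speaking to the user.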