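HoloLens Photo/Video camera in Unreal

The HoloLens has a Photo/Video (PV) camera on the visor. It can be used both for Mixed Reality Capture (MRC) and for locating objects in Unreal world space from pixel coordinates in the camera frame.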

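Important

The PV camera isn't supported with Holographic Remoting, but you can use a webcam attached to your PC to simulate the HoloLens PV camera functionality.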

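PV camera feed setup

Important

The PV camera is implemented in both the Windows Mixed Reality and OpenXR plugins. However, OpenXR requires the Microsoft OpenXR plugin to be installed. In addition, OpenXR for Unreal 4.26 currently has a limitation: the camera works only with the DirectX 11 RHI. This limitation is fixed in Unreal 4.27.1 and later.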

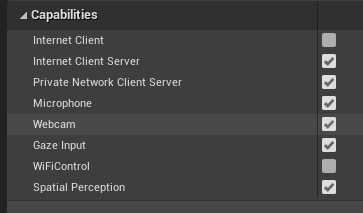

- In Project Settings > HoloLens, enable the Webcam capability:

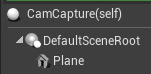

- Create a new actor called "CamCapture" and add a plane to render the camera feed:

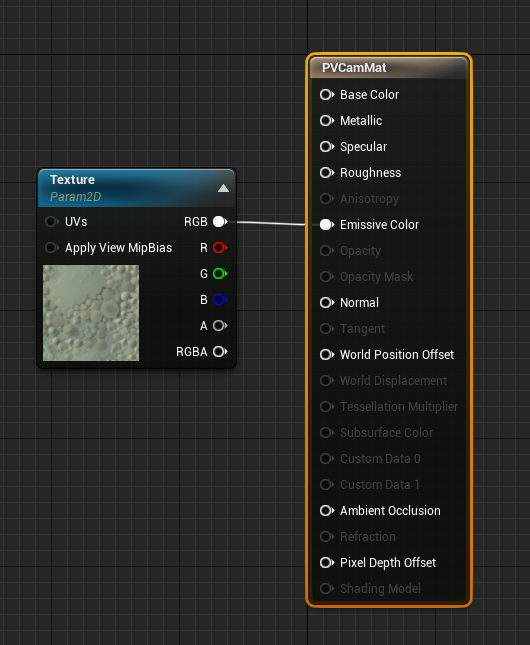

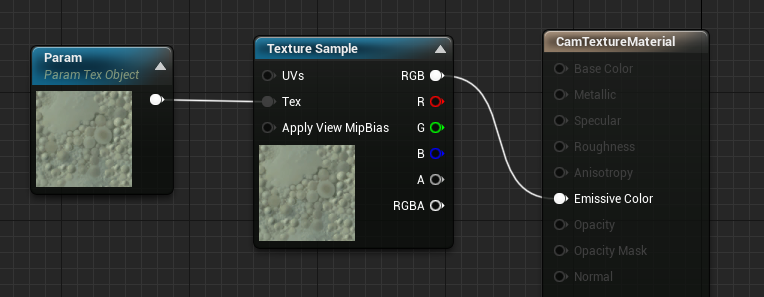

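- Add the actor to your scene, then create a new material called CamTextureMaterial with a Texture Object Parameter and a texture sample. Send the texture's RGB data to the output emissive color:

Rendering the PV camera feed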

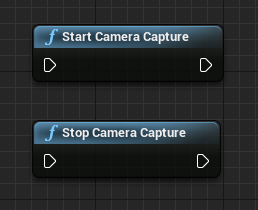

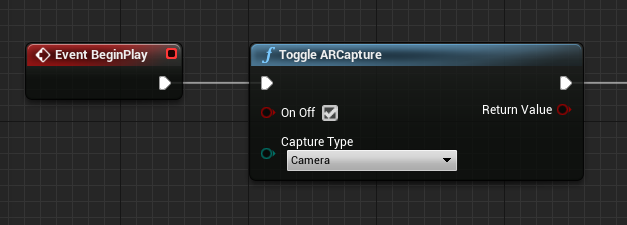

- In the CamCapture blueprint, turn on the PV camera:

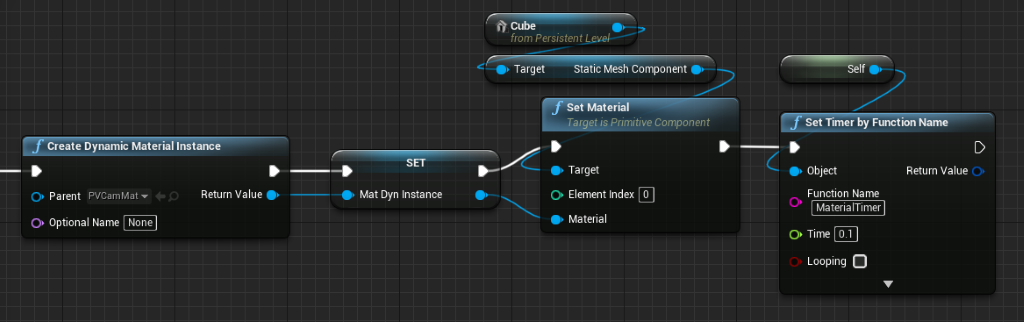

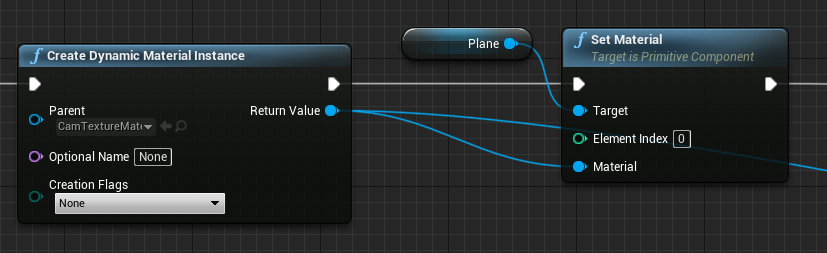

- Create a dynamic material instance from CamTextureMaterial and assign this material to the actor's plane:

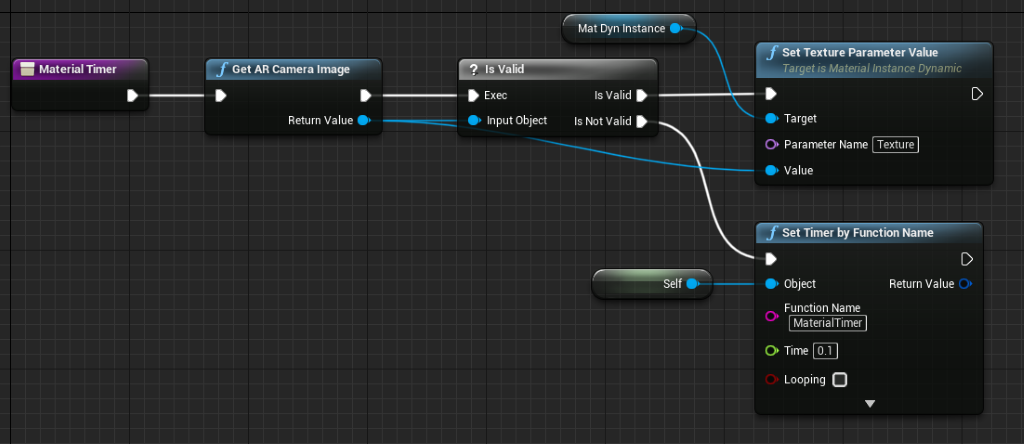

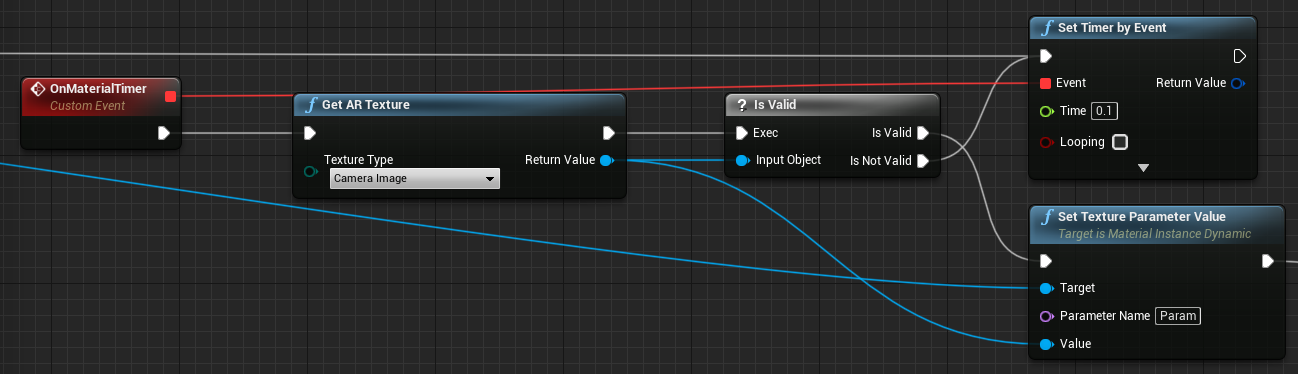

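- Get the texture from the camera feed and assign it to the dynamic material if it's valid. If the texture isn't valid, start a timer and try again after the timeout: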

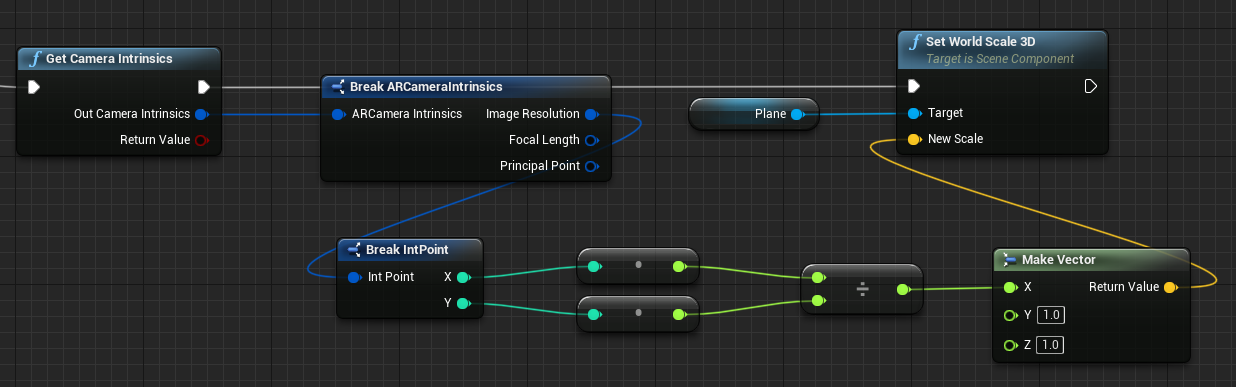

- Finally, scale the plane by the camera image's aspect ratio:
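The "try again after the timeout" step above can be sketched outside the engine as a small tick-driven poller. This is a minimal illustration in plain C++, not the blueprint's implementation: `RetryPoller`, `TryOnce`, and `RetrySeconds` are hypothetical names, and in a real project the attempt callback would be the camera texture query and the timing would come from an Unreal timer or the actor's Tick.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Hypothetical helper: repeats an attempt at a fixed interval until it succeeds.
class RetryPoller
{
public:
    RetryPoller(std::function<bool()> InTryOnce, float InRetrySeconds)
        : TryOnce(std::move(InTryOnce)), RetrySeconds(InRetrySeconds)
    {
        assert(RetrySeconds > 0.0f);
    }

    // Call once per frame with the elapsed time. Returns true once the
    // attempt has succeeded; after that the callback is never invoked again.
    bool Tick(float DeltaSeconds)
    {
        if (bDone)
        {
            return true;
        }
        TimeUntilNextTry -= DeltaSeconds;
        if (TimeUntilNextTry <= 0.0f)
        {
            bDone = TryOnce();
            TimeUntilNextTry = RetrySeconds; // wait a full interval before retrying
        }
        return bDone;
    }

private:
    std::function<bool()> TryOnce;
    float RetrySeconds;
    float TimeUntilNextTry = 0.0f; // zero so the first attempt happens immediately
    bool bDone = false;
};
```

The first attempt runs on the first tick, and each failure schedules exactly one later attempt, which mirrors the "if invalid, start a timer and retry" flow without polling every frame.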

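Find camera positions in world space

The camera on the HoloLens 2 is offset vertically from the device's head tracking. A few functions are available to locate the camera in world space and account for that offset.

GetPVCameraToWorldTransform gets the PV camera's transform in world space, positioned at the camera lens: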

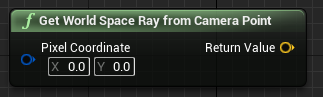

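GetWorldSpaceRayFromCameraPoint casts a ray from the camera lens into the scene in Unreal world space to find what a pixel in the camera frame shows:

GetPVCameraIntrinsics returns the camera intrinsic values, which you can use when doing computer vision processing on a camera frame: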

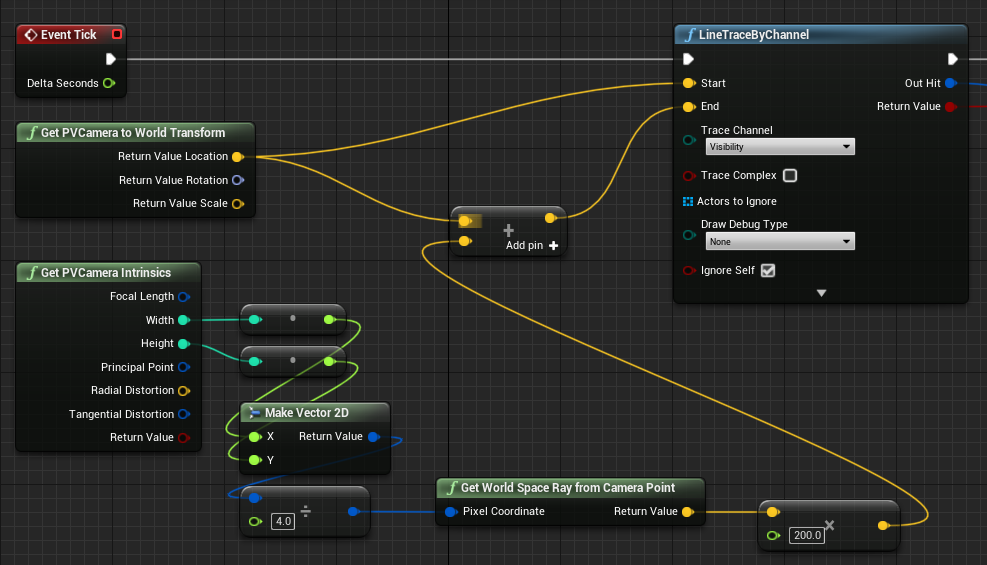
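To illustrate how intrinsic values are typically used in computer vision processing, the sketch below back-projects a pixel to a ray direction with the standard pinhole camera model. It is plain C++ rather than engine code. The `PinholeIntrinsics` struct, its field names, and the axis convention are assumptions made for this example and are not taken from the Unreal API.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for the values a camera intrinsics query provides.
struct PinholeIntrinsics
{
    double FocalLengthX;    // focal length in pixels, horizontal
    double FocalLengthY;    // focal length in pixels, vertical
    double PrincipalPointX; // optical center in pixels, horizontal
    double PrincipalPointY; // optical center in pixels, vertical
};

struct Vec3
{
    double X, Y, Z;
};

// Back-project a pixel to a unit direction in camera space.
// Assumed convention: +Z points out of the lens, +X to the right of the
// image, +Y toward the bottom of the image.
Vec3 PixelToCameraRay(const PinholeIntrinsics& K, double PixelX, double PixelY)
{
    assert(K.FocalLengthX > 0.0 && K.FocalLengthY > 0.0);
    const double X = (PixelX - K.PrincipalPointX) / K.FocalLengthX;
    const double Y = (PixelY - K.PrincipalPointY) / K.FocalLengthY;
    const double Length = std::sqrt(X * X + Y * Y + 1.0);
    return { X / Length, Y / Length, 1.0 / Length };
}
```

A pixel at the principal point maps to the optical axis. Turning this camera-space direction into a world-space ray still requires the camera-to-world transform, which is what the functions described above provide.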

To find what exists in world space at a particular pixel coordinate, use a line trace with the world space ray:

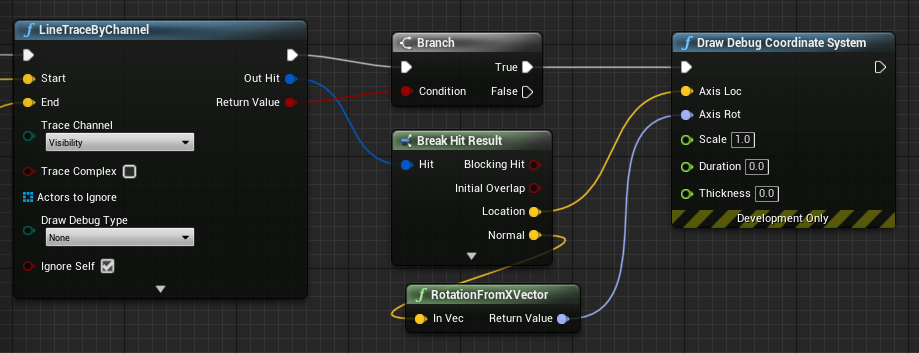

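Here we cast a 2-meter ray from the camera lens through the camera-space position ¼ of the way from the top left of the frame. The hit result is then used to render something where the object exists in world space:

When using spatial mapping, this hit position matches the surface that the camera is currently seeing.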
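The arithmetic behind that line trace can be sketched in plain C++. Two assumptions are made here: "¼ from the top left" is read as a quarter of the image width and height, and the ray origin and direction are already known in world space. `QuarterFromTopLeft` and `RayEndPoint` are hypothetical helper names. The conversion of 2 meters to 200 units relies on Unreal's world unit being one centimeter.

```cpp
#include <cassert>

struct Vec3
{
    double X, Y, Z;
};

struct PixelCoord
{
    int X, Y;
};

// Unreal world units are centimeters, so one meter is 100 units.
constexpr double UnrealUnitsPerMeter = 100.0;

// Pixel a quarter of the way across and down from the top-left corner.
PixelCoord QuarterFromTopLeft(int ImageWidth, int ImageHeight)
{
    return { ImageWidth / 4, ImageHeight / 4 };
}

// End point of a line trace that starts at Origin and follows a unit-length
// world-space direction for the given distance in meters.
Vec3 RayEndPoint(const Vec3& Origin, const Vec3& UnitDirection, double LengthMeters)
{
    assert(LengthMeters >= 0.0);
    const double Length = LengthMeters * UnrealUnitsPerMeter;
    return { Origin.X + UnitDirection.X * Length,
             Origin.Y + UnitDirection.Y * Length,
             Origin.Z + UnitDirection.Z * Length };
}
```

The origin and the computed end point are the two positions a line trace needs. The trace's hit location, if any, lies somewhere between them.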

Rendering the PV camera feed in C++

- Create a new C++ actor called CamCapture

- In your project's build.cs, add "AugmentedReality" to the PublicDependencyModuleNames list:

```cs
PublicDependencyModuleNames.AddRange(
    new string[] {
        "Core",
        "CoreUObject",
        "Engine",
        "InputCore",
        "AugmentedReality"
    });
```

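- In CamCapture.h, include ARBlueprintLibrary.h:

```cpp
#include "ARBlueprintLibrary.h"
```

- You also need to add member variables for the mesh and material:

```cpp
private:
    // UPROPERTY() makes these references visible to Unreal's garbage collector.
    UPROPERTY()
    UStaticMesh* StaticMesh = nullptr;

    UPROPERTY()
    UStaticMeshComponent* StaticMeshComponent = nullptr;

    UPROPERTY()
    UMaterialInstanceDynamic* DynamicMaterial = nullptr;

    // Set once the camera texture has been assigned to the material.
    bool IsTextureParamSet = false;
```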

- In CamCapture.cpp, update the constructor to add a static mesh to the scene:

```cpp
ACamCapture::ACamCapture()
{
    PrimaryActorTick.bCanEverTick = true;

    // Load a mesh from the engine to render the camera feed to.
    StaticMesh = LoadObject<UStaticMesh>(nullptr, TEXT("/Engine/EngineMeshes/Cube.Cube"), nullptr, LOAD_None, nullptr);

    // Create a static mesh component to render the static mesh.
    StaticMeshComponent = CreateDefaultSubobject<UStaticMeshComponent>(TEXT("CameraPlane"));
    StaticMeshComponent->SetStaticMesh(StaticMesh);

    // Flatten the cube into a thin plane and make it the root of the actor.
    StaticMeshComponent->SetWorldScale3D(FVector(0.1f, 1.0f, 1.0f));
    SetRootComponent(StaticMeshComponent);
}
```

In BeginPlay, create a dynamic material instance from the project's camera material, apply it to the static mesh component, and start the HoloLens camera.

In the editor, right-click CamTextureMaterial in the content browser and select "Copy Reference" to get the string for CameraMatPath.

```cpp
void ACamCapture::BeginPlay()
{
    Super::BeginPlay();

    // Create a dynamic material instance from the game's camera material.
    // Right-click on a material in the project and select "Copy Reference" to get this string.
    FString CameraMatPath("Material'/Game/Materials/CamTextureMaterial.CamTextureMaterial'");
    // Cast<> returns nullptr if the loaded object is not a UMaterial.
    UMaterial* BaseMaterial = Cast<UMaterial>(StaticLoadObject(UMaterial::StaticClass(), nullptr, *CameraMatPath, nullptr, LOAD_None, nullptr));
    DynamicMaterial = UMaterialInstanceDynamic::Create(BaseMaterial, this);

    // Use the dynamic material instance when rendering the camera mesh.
    StaticMeshComponent->SetMaterial(0, DynamicMaterial);

    // Start the webcam.
    UARBlueprintLibrary::ToggleARCapture(true, EARCaptureType::Camera);
}
```

In Tick, get the texture from the camera, set it as the texture parameter in the CamTextureMaterial material, and scale the static mesh component by the camera frame's aspect ratio:

```cpp
void ACamCapture::Tick(float DeltaTime)
{
    Super::Tick(DeltaTime);

    // Dynamic material instance only needs to be set once.
    if (IsTextureParamSet)
    {
        return;
    }

    // Get the texture from the camera. It can be null until the feed starts.
    UARTexture* ARTexture = UARBlueprintLibrary::GetARTexture(EARTextureType::CameraImage);
    if (ARTexture != nullptr && DynamicMaterial != nullptr)
    {
        // Set the shader's texture parameter (named "Param") to the camera image.
        DynamicMaterial->SetTextureParameterValue("Param", ARTexture);
        IsTextureParamSet = true;

        // Get the camera intrinsics.
        FARCameraIntrinsics Intrinsics;
        if (UARBlueprintLibrary::GetCameraIntrinsics(Intrinsics) && Intrinsics.ImageResolution.Y > 0)
        {
            // Scale the camera mesh by the aspect ratio.
            float R = (float)Intrinsics.ImageResolution.X / (float)Intrinsics.ImageResolution.Y;
            StaticMeshComponent->SetWorldScale3D(FVector(0.1f, R, 1.0f));
        }
    }
}
```

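Next development checkpoint

If you're following the Unreal development journey we've laid out, you're in the middle of exploring the Mixed Reality platform capabilities and APIs. From here, you can continue to the next topic:

Or jump directly to deploying your app on a device or emulator:

You can always go back to the Unreal development checkpoints at any time.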