Manage Azure Data Lake Analytics using the Azure portal

Important

New Azure Data Lake Analytics accounts can no longer be created unless your subscription has been enabled. If you need your subscription to be enabled, contact support and provide your business scenario.

If you are already using Azure Data Lake Analytics, you'll need to create a migration plan to Azure Synapse Analytics for your organization by February 29th, 2024.

This article describes how to manage Azure Data Lake Analytics accounts, data sources, users, and jobs by using the Azure portal.

Manage Data Lake Analytics accounts

Create an account

- Sign in to the Azure portal.

- Select Create a resource and search for Data Lake Analytics.

- Select values for the following items:

- Name: The name of the Data Lake Analytics account.

- Subscription: The Azure subscription used for the account.

- Resource Group: The Azure resource group in which to create the account.

- Location: The Azure datacenter for the Data Lake Analytics account.

- Data Lake Store: The default store to be used for the Data Lake Analytics account. The Azure Data Lake Store account and the Data Lake Analytics account must be in the same location.

- Select Create.
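The location rule above is easy to miss. The following Python sketch checks a set of form values before you select Create. The `AccountSettings` class and the `preflight_errors` and `normalize_location` functions are hypothetical helpers, not part of any Azure SDK. Location names are compared after removing spaces and ignoring case, which is an assumption made so that "East US 2" matches "eastus2".

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical model of the values entered on the Create form.
# Not an Azure SDK type; field names are illustrative.
@dataclass
class AccountSettings:
    name: str
    subscription: str
    resource_group: str
    location: str
    default_store: str
    default_store_location: str


def normalize_location(location: str) -> str:
    # Assumption: "East US 2" and "eastus2" refer to the same region.
    return "".join(location.split()).lower()


def preflight_errors(settings: AccountSettings) -> list[str]:
    """Return problems that would block account creation; empty means OK."""
    errors = []
    # Every item on the form needs a value.
    for field in ("name", "subscription", "resource_group", "location", "default_store"):
        if not getattr(settings, field).strip():
            errors.append(f"{field} is required")
    # The default Data Lake Store must be in the same location as the account.
    if normalize_location(settings.location) != normalize_location(settings.default_store_location):
        errors.append("default Data Lake Store must be in the same location as the account")
    return errors


ok = AccountSettings("myadla", "my-subscription", "rg-analytics", "East US 2", "myadls", "eastus2")
bad = AccountSettings("myadla", "my-subscription", "rg-analytics", "East US 2", "myadls", "westeurope")
print(preflight_errors(ok))   # []
print(preflight_errors(bad))  # one location error
```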

Delete a Data Lake Analytics account

Before you delete a Data Lake Analytics account, delete its default Data Lake Store account.

- In the Azure portal, go to your Data Lake Analytics account.

- Select Delete.

- Type the account name.

- Select Delete.

Manage data sources

Data Lake Analytics supports the following data sources:

- Data Lake Store

- Azure Storage

You can use Data Explorer to browse data sources and perform basic file management operations.

Add a data source

In the Azure portal, go to your Data Lake Analytics account.

Select Data explorer.

Select Add Data Source.

- To add a Data Lake Store account, you need the account name and access to that account so that you can query it.

- To add Azure Blob storage, you need the storage account name and an account key. To find them, go to the storage account in the portal and select Access keys.
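The two source types need different inputs. As a minimal sketch, assuming you track data sources yourself before adding them in the portal, the hypothetical `DataSource` record and `validate` function below encode which values each type requires. The kind strings are illustrative labels, not identifiers used by the service.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class DataSource:
    kind: str                          # illustrative label: "DataLakeStore" or "AzureBlobStorage"
    account_name: str
    account_key: Optional[str] = None  # only Azure Blob storage needs a key (from Access keys)


# Values each source type needs before it can be added.
REQUIRED_FIELDS = {
    "DataLakeStore": ("account_name",),
    "AzureBlobStorage": ("account_name", "account_key"),
}


def validate(source: DataSource) -> list[str]:
    """Return missing-value messages; an empty list means the source is complete."""
    if source.kind not in REQUIRED_FIELDS:
        return [f"unsupported data source kind: {source.kind}"]
    return [
        f"{field} is required for {source.kind}"
        for field in REQUIRED_FIELDS[source.kind]
        if not getattr(source, field)
    ]


print(validate(DataSource("DataLakeStore", "myadls")))        # []
print(validate(DataSource("AzureBlobStorage", "mystorage")))  # ['account_key is required for AzureBlobStorage']
```

Access rights on a Data Lake Store account can't be captured as a form value, so a complete record here doesn't guarantee that you can query the account.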

Set up firewall rules

You can further lock down access to your Data Lake Analytics account at the network level. You can enable a firewall and then specify an IP address or define an IP address range for your trusted clients. After you enable these measures, only clients whose IP addresses fall within the defined range can connect to the account.

If other Azure services, like Azure Data Factory or VMs, connect to the Data Lake Analytics account, make sure that Allow Azure Services is turned On.
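The firewall decision can be modeled in a few lines. The following Python sketch is a simplified, hypothetical model, not the service's implementation: a client is allowed when the firewall is off, when its IP address falls in a trusted range, or when the request comes from an Azure service and Allow Azure Services is On. The `is_allowed` function and the example ranges (reserved documentation addresses) are placeholders.

```python
import ipaddress

# Hypothetical trusted ranges; 203.0.113.0/24 and 198.51.100.0/24 are reserved
# documentation ranges used here only as placeholders.
TRUSTED_RANGES = [
    ipaddress.ip_network("203.0.113.0/24"),   # an IP address range
    ipaddress.ip_network("198.51.100.7/32"),  # a single IP address
]


def is_allowed(
    client_ip: str,
    *,
    firewall_enabled: bool = True,
    allow_azure_services: bool = False,
    from_azure_service: bool = False,
) -> bool:
    """Return True if the client could connect under this simplified model."""
    if not firewall_enabled:
        return True  # no network-level restriction
    if from_azure_service and allow_azure_services:
        return True  # e.g. Azure Data Factory, when Allow Azure Services is On
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in TRUSTED_RANGES)


print(is_allowed("203.0.113.25"))  # True: inside the trusted range
print(is_allowed("192.0.2.10"))    # False: outside every trusted range
```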

Set up a firewall rule

- In the Azure portal, go to your Data Lake Analytics account.

- On the menu on the left, select Firewall.

Add a new user

You can use the Add User Wizard to easily provision new Data Lake users.

- In the Azure portal, go to your Data Lake Analytics account.

- On the left, under Getting Started, select Add User Wizard.

- Select a user, and then select Select.

- Select a role, and then select Select. To set up a new developer to use Azure Data Lake, select the Data Lake Analytics Developer role.

- Select the access control lists (ACLs) for the U-SQL databases. When you're satisfied with your choices, select Select.

- Select the ACLs for files. For the default store, don't change the ACLs for the root folder "/" or for the /system folder. Select Select.

- Review all your selected changes, and then select Run.

- When the wizard is finished, select Done.

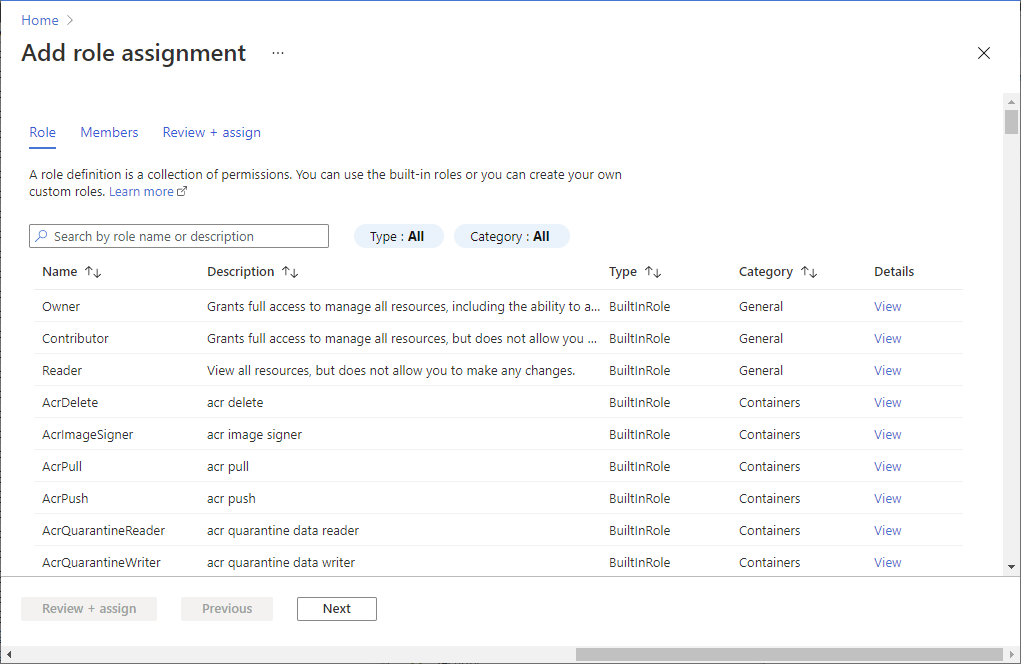
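The wizard's guidance on file ACLs amounts to a guard: leave the root folder "/" and the /system folder of the default store unchanged. A minimal sketch, using a hypothetical `split_acl_changes` helper that separates requested ACL changes into ones to apply and ones to skip:

```python
from __future__ import annotations

from pathlib import PurePosixPath

# Folders in the default store whose ACLs should not be changed.
PROTECTED_FOLDERS = {PurePosixPath("/"), PurePosixPath("/system")}


def split_acl_changes(paths: list[str]) -> tuple[list[str], list[str]]:
    """Split requested ACL changes into (apply, skip) lists."""
    apply, skip = [], []
    for path in paths:
        # PurePosixPath normalizes forms such as "/system/" to "/system".
        target = skip if PurePosixPath(path) in PROTECTED_FOLDERS else apply
        target.append(path)
    return apply, skip


apply, skip = split_acl_changes(["/", "/system", "/data/sales"])
print(apply)  # ['/data/sales']
print(skip)   # ['/', '/system']
```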

Manage Azure role-based access control

As with other Azure services, you can use Azure role-based access control (Azure RBAC) to control how users interact with the service.

The standard Azure roles have the following capabilities:

- Owner: Can submit jobs, monitor jobs, cancel jobs from any user, configure the account, and manage access to the account by assigning roles.

- Contributor: Can submit jobs, monitor jobs, cancel jobs from any user, and configure the account.

- Reader: Can monitor jobs.

Use the Data Lake Analytics Developer role to enable U-SQL developers to use the Data Lake Analytics service. You can use the Data Lake Analytics Developer role to:

- Submit jobs.

- Monitor job status and the progress of jobs submitted by any user.

- See the U-SQL scripts from jobs submitted by any user.

- Cancel only your own jobs.
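The role capabilities listed above can be captured in a small lookup table. The Python sketch below is hypothetical: the permission names and the `can_cancel` function are labels invented for illustration, the table covers only the job capabilities this article lists (role assignment is not modeled), and it illustrates the job-cancellation rule, where the Developer role differs from Owner and Contributor.

```python
from __future__ import annotations

# Capabilities as listed in this article; the permission names are illustrative labels.
ROLE_PERMISSIONS = {
    "Owner": {"submit", "monitor", "cancel_any", "configure"},
    "Contributor": {"submit", "monitor", "cancel_any", "configure"},
    "Reader": {"monitor"},
    "Data Lake Analytics Developer": {"submit", "monitor", "view_scripts", "cancel_own"},
}


def can_cancel(role: str, user: str, job_author: str) -> bool:
    """Return True if `user` holding `role` may cancel a job submitted by `job_author`."""
    permissions = ROLE_PERMISSIONS.get(role, set())
    if "cancel_any" in permissions:
        return True
    return "cancel_own" in permissions and user == job_author


print(can_cancel("Data Lake Analytics Developer", "alice", "alice"))  # True
print(can_cancel("Data Lake Analytics Developer", "alice", "bob"))    # False
print(can_cancel("Contributor", "alice", "bob"))                      # True
```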

Add users or security groups to a Data Lake Analytics account

In the Azure portal, go to your Data Lake Analytics account.

Select Access control (IAM).

Select Add > Add role assignment to open the Add role assignment page.

Assign a role to a user. For detailed steps, see Assign Azure roles using the Azure portal.

Note

If a user or a security group needs to submit jobs, they also need permission on the store account. For more information, see Secure data stored in Data Lake Store.

Manage jobs

Submit a job

In the Azure portal, go to your Data Lake Analytics account.

Select New Job. For each job, configure:

- Job Name: The name of the job.

- Priority: Found under More options. Lower numbers have higher priority: if two jobs are queued, the one with the lower priority value runs first.

- AUs: The maximum number of Analytics Units (AUs), or compute processes, to reserve for this job.

- Runtime: Also under More options. Select the Default runtime unless you've received a custom runtime.

Add your script.

Select Submit Job.
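The priority rule behaves like a min-heap keyed on the priority value. The following Python sketch is a hypothetical local model of that ordering, not the service's scheduler; the `JobQueue` class is illustrative, and breaking ties by submission order is an assumption.

```python
import heapq
import itertools


class JobQueue:
    """Hypothetical queue: the job with the lowest priority value runs first."""

    def __init__(self) -> None:
        self._heap = []
        self._sequence = itertools.count()  # assumption: ties run in submission order

    def submit(self, name: str, priority: int) -> None:
        heapq.heappush(self._heap, (priority, next(self._sequence), name))

    def next_job(self) -> str:
        _, _, name = heapq.heappop(self._heap)
        return name


queue = JobQueue()
queue.submit("daily-aggregate", priority=1000)
queue.submit("urgent-fix", priority=100)
queue.submit("ad-hoc-report", priority=1000)
print([queue.next_job() for _ in range(3)])
# ['urgent-fix', 'daily-aggregate', 'ad-hoc-report']
```

Including a sequence number in each heap entry keeps the comparison well defined when two jobs share a priority value.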

Monitor jobs

- In the Azure portal, go to your Data Lake Analytics account.

- Select View All Jobs at the top of the page. A list of all the active and recently finished jobs in the account is shown.

- Optionally, select Filter to help you find the jobs by Time Range, Status, Job Name, Job ID, Pipeline name or Pipeline ID, Recurrence name or Recurrence ID, and Author values.
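If you export job details for your own reporting, the same filters are straightforward to reproduce. The Python sketch below assumes a hypothetical `JobInfo` record with fields that mirror the filter names above and a hypothetical `filter_jobs` function. It treats the job name filter as a case-insensitive substring match and every other filter as an exact match, which is an assumption rather than documented portal behavior.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class JobInfo:  # hypothetical record; not an Azure SDK type
    job_id: str
    name: str
    author: str
    status: str
    submitted: datetime
    pipeline: Optional[str] = None
    recurrence: Optional[str] = None


def filter_jobs(
    jobs: list[JobInfo],
    *,
    start: Optional[datetime] = None,
    end: Optional[datetime] = None,
    name_contains: Optional[str] = None,
    job_id: Optional[str] = None,
    status: Optional[str] = None,
    author: Optional[str] = None,
    pipeline: Optional[str] = None,
    recurrence: Optional[str] = None,
) -> list[JobInfo]:
    """Return the jobs that satisfy every filter that was supplied."""

    def keep(job: JobInfo) -> bool:
        if start is not None and job.submitted < start:
            return False
        if end is not None and job.submitted > end:
            return False
        if name_contains is not None and name_contains.lower() not in job.name.lower():
            return False
        exact = {"job_id": job_id, "status": status, "author": author,
                 "pipeline": pipeline, "recurrence": recurrence}
        return all(value is None or getattr(job, field) == value for field, value in exact.items())

    return [job for job in jobs if keep(job)]


jobs = [
    JobInfo("1", "Clean usage", "alice", "Succeeded", datetime(2023, 5, 1, 9), pipeline="insights"),
    JobInfo("2", "Aggregate usage", "bob", "Failed", datetime(2023, 5, 1, 10), pipeline="insights"),
    JobInfo("3", "Ad hoc query", "alice", "Succeeded", datetime(2023, 5, 2, 8)),
]
print([j.job_id for j in filter_jobs(jobs, author="alice", status="Succeeded")])  # ['1', '3']
```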

Monitoring pipeline jobs

Jobs that are part of a pipeline work together, usually sequentially, to accomplish a specific scenario. For example, you can have a pipeline that cleans, extracts, transforms, and aggregates usage data for customer insights. Pipeline jobs are identified by the "Pipeline" property set when the job is submitted. Jobs scheduled by using Azure Data Factory (ADF) v2 have this property populated automatically.

To view a list of U-SQL jobs that are part of pipelines:

- In the Azure portal, go to your Data Lake Analytics account.

- Select Job Insights. The All Jobs tab opens by default and shows a list of running, queued, and ended jobs.

- Select the Pipeline Jobs tab. A list of pipeline jobs is shown, along with aggregated statistics for each pipeline.
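The aggregated statistics amount to grouping jobs by their Pipeline property. The following Python sketch reproduces that idea over hypothetical (pipeline, status, minutes) tuples with a hypothetical `pipeline_stats` function; the statistics it computes (job count, successes, and average duration) are illustrative choices and may not match the columns the portal shows.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean
from typing import Optional


def pipeline_stats(jobs: list[tuple[Optional[str], str, float]]) -> dict[str, dict[str, float]]:
    """Aggregate (pipeline, status, minutes) records per pipeline."""
    runs_by_pipeline = defaultdict(list)
    for pipeline, status, minutes in jobs:
        if pipeline is not None:  # only jobs submitted with a Pipeline property
            runs_by_pipeline[pipeline].append((status, minutes))

    return {
        pipeline: {
            "jobs": len(runs),
            "succeeded": sum(1 for status, _ in runs if status == "Succeeded"),
            "avg_minutes": mean(minutes for _, minutes in runs),
        }
        for pipeline, runs in runs_by_pipeline.items()
    }


jobs = [
    ("customer-insights", "Succeeded", 12.0),
    ("customer-insights", "Failed", 20.0),
    ("customer-insights", "Succeeded", 16.0),
    (None, "Succeeded", 5.0),  # not part of any pipeline; ignored
]
print(pipeline_stats(jobs))
# {'customer-insights': {'jobs': 3, 'succeeded': 2, 'avg_minutes': 16.0}}
```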

Monitoring recurring jobs

A recurring job is one that has the same business logic but uses different input data every time it runs. Ideally, recurring jobs always succeed and have relatively stable execution times; monitoring these behaviors helps ensure that the job is healthy. Recurring jobs are identified by the "Recurrence" property. Jobs scheduled by using ADF v2 have this property populated automatically.

To view a list of U-SQL jobs that are recurring:

- In the Azure portal, go to your Data Lake Analytics account.

- Select Job Insights. The All Jobs tab opens by default and shows a list of running, queued, and ended jobs.

- Select the Recurring Jobs tab. A list of recurring jobs is shown, along with aggregated statistics for each recurring job.
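Because a healthy recurring job should always succeed and run for roughly the same time, both checks can be expressed numerically. The Python sketch below is one hypothetical way to do that with an illustrative `recurring_job_health` function: it computes the success rate and the coefficient of variation (standard deviation divided by mean) of successful run durations. The 0.25 threshold is an arbitrary example, not a service default.

```python
from __future__ import annotations

from statistics import mean, pstdev


def recurring_job_health(runs: list[tuple[bool, float]], max_cv: float = 0.25) -> dict:
    """Summarize (succeeded, minutes) runs of one recurring job."""
    if not runs:
        raise ValueError("need at least one run")
    success_rate = sum(1 for succeeded, _ in runs if succeeded) / len(runs)
    durations = [minutes for succeeded, minutes in runs if succeeded]
    # Coefficient of variation: relative spread of execution times.
    runtime_cv = pstdev(durations) / mean(durations) if durations else float("inf")
    return {
        "success_rate": success_rate,
        "runtime_cv": runtime_cv,
        "healthy": success_rate == 1.0 and runtime_cv <= max_cv,
    }


stable = [(True, 30.0), (True, 31.0), (True, 29.0)]
erratic = [(True, 10.0), (True, 40.0), (False, 2.0)]
print(recurring_job_health(stable)["healthy"])   # True
print(recurring_job_health(erratic)["healthy"])  # False
```

A relative measure such as the coefficient of variation lets the same threshold apply to short and long recurring jobs alike.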