Social media analysis with Azure Stream Analytics

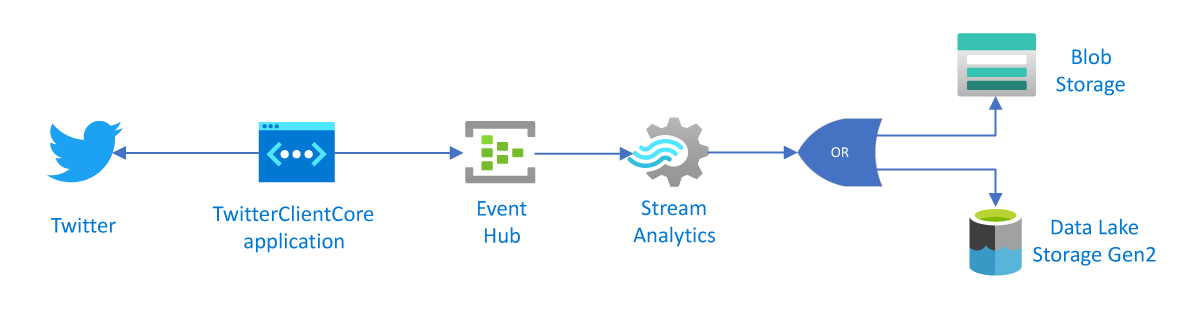

This article teaches you how to build a social media sentiment analysis solution by bringing real-time Twitter events into Azure Event Hubs and then analyzing them using Stream Analytics. You write an Azure Stream Analytics query to analyze the data and then either store the results for later use or create a Power BI dashboard that provides insights in real time.

Social media analytics tools help organizations understand trending topics. Trending topics are subjects and attitudes that have a high volume of posts on social media. Sentiment analysis, which is also called opinion mining, uses social media analytics tools to determine attitudes toward a product or idea.

Real-time Twitter trend analysis is a good example of such a tool. The hashtag subscription model lets you listen to specific keywords (hashtags) and run sentiment analysis on the resulting feed.
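To make "listening to hashtags" concrete, the following Python sketch checks whether a tweet mentions any hashtag from a subscribed set. It is only a local illustration and is not how TwitterClientCore is implemented. The function names and the simple `#word` pattern are assumptions.

```python
import re

# Hypothetical rule: a hashtag is '#' followed by one or more word characters.
HASHTAG_PATTERN = re.compile(r"#(\w+)")


def extract_hashtags(text):
    """Return the set of hashtags in a tweet, lower-cased, without the '#'."""
    return {tag.lower() for tag in HASHTAG_PATTERN.findall(text)}


def matches_subscription(text, subscribed):
    """True if the tweet contains at least one subscribed hashtag.

    Matching is case-insensitive, so '#Azure' and '#azure' are treated alike.
    """
    wanted = {tag.lstrip("#").lower() for tag in subscribed}
    return not extract_hashtags(text).isdisjoint(wanted)


if __name__ == "__main__":
    topics = {"#Azure", "#MachineLearning"}
    tweets = [
        "Loving the new #Azure features",
        "Nothing to see here",
        "Reading about #machinelearning today",
    ]
    for tweet in tweets:
        print(matches_subscription(tweet, topics), tweet)
```

Lower-casing both sides means that differently capitalized hashtags count as the same topic. That is usually what you want when counting trend volume.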

Scenario: Social media sentiment analysis in real time

A company that has a news media website is interested in gaining an advantage over its competitors by featuring site content that's immediately relevant to its readers. The company uses social media analysis on topics that are relevant to readers by doing real-time sentiment analysis of Twitter data.

To identify trending topics in real time on Twitter, the company needs real-time analytics about the tweet volume and sentiment for key topics.

Prerequisites

In this how-to guide, you use a client application that connects to Twitter and looks for tweets that have certain hashtags (which you can set). The following list gives the prerequisites for running the application and analyzing the tweets using Azure Stream Analytics.

If you don't have an Azure subscription, create a free account.

A Twitter account.

The TwitterClientCore application, which reads the Twitter feed. To get this application, download TwitterClientCore.

Install the .NET Core CLI version 2.1.0.

Here's the solution architecture you're going to implement.

Create an event hub for streaming input

The sample application generates events and pushes them to an event hub. Azure Event Hubs is the preferred method of event ingestion for Stream Analytics. For more information, see the Azure Event Hubs documentation.

Create an Event Hubs namespace and event hub

Follow instructions from Quickstart: Create an event hub using Azure portal to create an Event Hubs namespace and an event hub named socialtwitter-eh. You can use a different name. If you do, make a note of it, because you need the name later. You don't need to set any other options for the event hub.

Grant access to the event hub

Before a process can send data to an event hub, the event hub needs a policy that allows access. The access policy produces a connection string that includes authorization information.
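Clients typically don't send the policy key itself. Instead, they use the key to sign a short-lived Shared Access Signature (SAS) token. The Python sketch below follows the token format documented for Event Hubs SAS authentication: an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry timestamp. The helper name and its parameters are hypothetical. In practice, the Event Hubs client libraries build these tokens for you from the connection string.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import time
import urllib.parse


def generate_sas_token(namespace: str, event_hub: str, key_name: str, key: str,
                       ttl_seconds: int = 3600, now: float | None = None) -> str:
    """Build a SAS token for an event hub (hypothetical helper, not an SDK call).

    key_name and key correspond to SharedAccessKeyName and SharedAccessKey
    from the policy's connection string.
    """
    resource_uri = f"https://{namespace}.servicebus.windows.net/{event_hub}"
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # Expiry is a Unix timestamp (seconds) after which the token is rejected.
    issued_at = time.time() if now is None else now
    expiry = str(int(issued_at + ttl_seconds))
    # Sign "<url-encoded resource URI>\n<expiry>" with the policy key.
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote(base64.b64encode(digest).decode("ascii"), safe="")
    return (f"SharedAccessSignature sr={encoded_uri}&sig={signature}"
            f"&se={expiry}&skn={key_name}")


if __name__ == "__main__":
    # Placeholder values only; never hard-code a real key.
    print(generate_sas_token("EVENTHUBS-NAMESPACE", "socialtwitter-eh",
                             "socialtwitter-access", "XXXXXXXXXXXXXXX"))
```

A token like this stops working once its expiry passes. The key itself stays valid until you regenerate it on the policy.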

In the navigation bar on the left side of your Event Hubs namespace, select Event Hubs, which is located in the Entities section. Then, select the event hub you just created.

In the navigation bar on the left side, select Shared access policies located under Settings.

Note

There's a Shared access policies option for both the namespace and the event hub. Make sure you're working in the context of your event hub, not the namespace.

On the Shared access policies page, select + Add on the command bar. Then enter socialtwitter-access for the Policy name and check the Manage checkbox.

Select Create.

After the policy has been deployed, select the policy from the list of shared access policies.

Find the box labeled Connection string primary-key and select the copy button next to the connection string.

Paste the connection string into a text editor. You need this connection string for the next section after you make some small edits.

The connection string looks like this:

Endpoint=sb://EVENTHUBS-NAMESPACE.servicebus.windows.net/;SharedAccessKeyName=socialtwitter-access;SharedAccessKey=XXXXXXXXXXXXXXX;EntityPath=socialtwitter-eh

Notice that the connection string contains multiple key-value pairs, separated with semicolons: Endpoint, SharedAccessKeyName, SharedAccessKey, and EntityPath.

Note

For security, parts of the connection string in the example have been removed.
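The connection string is just semicolon-separated Key=Value pairs, so it's easy to split apart. One use is checking that EntityPath matches your event hub name. The following minimal Python sketch does this with a hypothetical function that isn't part of any SDK. It splits each pair on the first = only, because a base64 SharedAccessKey can itself end in = padding.

```python
from __future__ import annotations


def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split 'Key=Value;Key=Value;...' into a dictionary."""
    pairs: dict[str, str] = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first '=' only; base64 keys may end with '=' padding.
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"Malformed segment: {segment!r}")
        pairs[key] = value
    return pairs


if __name__ == "__main__":
    example = ("Endpoint=sb://EVENTHUBS-NAMESPACE.servicebus.windows.net/;"
               "SharedAccessKeyName=socialtwitter-access;"
               "SharedAccessKey=XXXXXXXXXXXXXXX;EntityPath=socialtwitter-eh")
    for key, value in parse_connection_string(example).items():
        print(f"{key}: {value}")
```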

Configure and start the Twitter client application

The client application gets tweet events directly from Twitter. In order to do so, it needs permission to call the Twitter Streaming APIs. To configure that permission, you create an application in Twitter, which generates unique credentials (such as an OAuth token). You can then configure the client application to use these credentials when it makes API calls.

Create a Twitter application

If you don't already have a Twitter application that you can use for this how-to guide, you can create one. You must already have a Twitter account.

Note

The exact process in Twitter for creating an application and getting the keys, secrets, and token might change. If these instructions don't match what you see on the Twitter site, refer to the Twitter developer documentation.

From a web browser, go to Twitter For Developers, create a developer account, and select Create an app. If you see a message saying that you need to apply for a Twitter developer account, submit the application. After it's approved, you receive a confirmation email. Approval for a developer account can take several days.

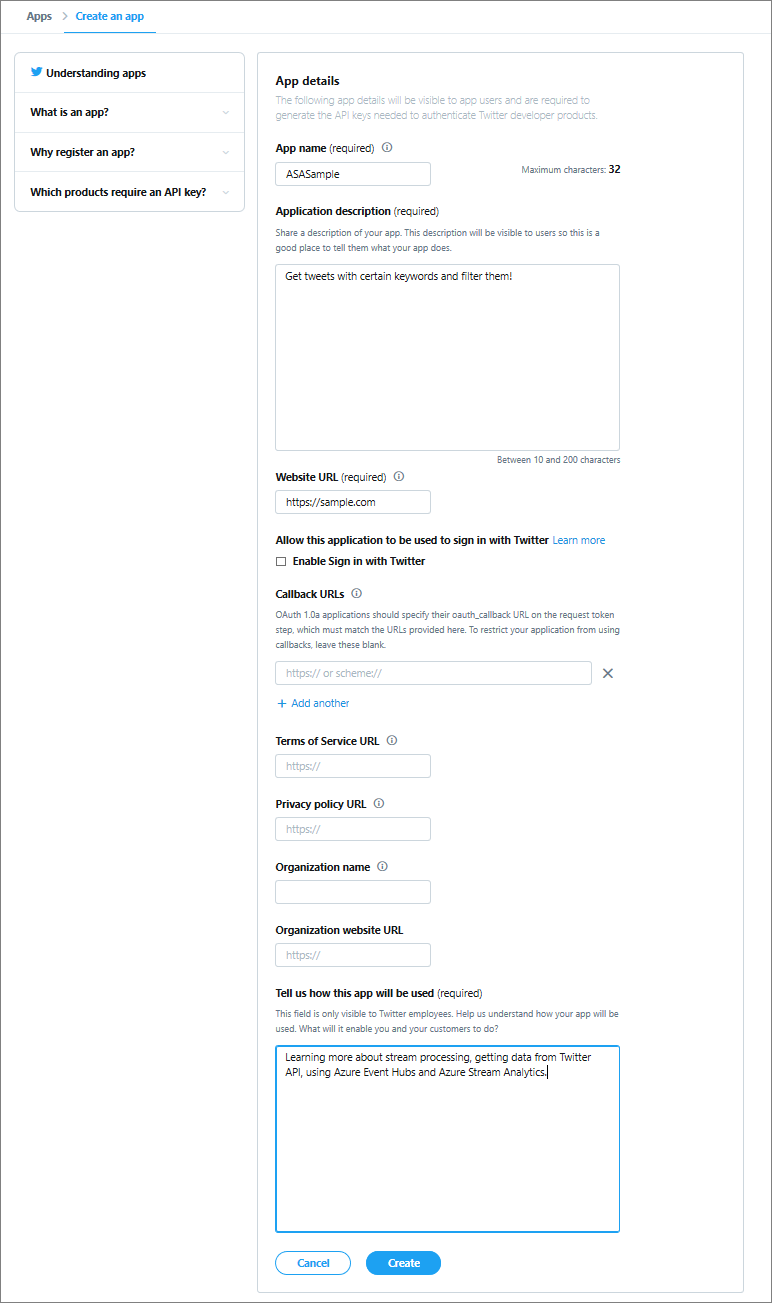

On the Create an application page, provide the details for the new app, and then select Create your Twitter application.

On the application page, select the Keys and Tokens tab and copy the values for Consumer API Key and Consumer API Secret Key. Also, select Create under Access Token and Access Token Secret to generate the access tokens. Copy the values for Access Token and Access Token Secret.

Save the values that you retrieved for the Twitter application. You need the values later.

Note

The keys and secrets for the Twitter application provide access to your Twitter account. Treat this information as sensitive, the same as you do your Twitter password. For example, don't embed this information in an application that you give to others.

Configure the client application

We've created a client application that connects to Twitter data using Twitter Streaming APIs to collect tweet events about a specific set of topics.

Before the application runs, it requires certain information from you, like the Twitter keys and the event hub connection string.

Make sure you've downloaded the TwitterClientCore application, as listed in the prerequisites.

Use a text editor to open the App.config file. Make the following changes to the <appSettings> element:

- Set oauth_consumer_key to the Twitter Consumer Key (API key).
- Set oauth_consumer_secret to the Twitter Consumer Secret (API secret key).
- Set oauth_token to the Twitter Access token.
- Set oauth_token_secret to the Twitter Access token secret.
- Set EventHubNameConnectionString to the connection string.
- Set EventHubName to the event hub name (that is, the value of the entity path).

Open a command prompt and navigate to the directory where your TwitterClientCore app is located. Build the project with the following command:

dotnet build

Then run the app:

dotnet run

The app sends tweets to your event hub.
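If the app fails to connect, a quick check of the App.config edits can help. The Python sketch below does two things: it reports which of the six settings are missing or empty, and it checks whether EventHubName matches the EntityPath inside the connection string. It assumes App.config uses the standard .NET layout (<configuration><appSettings><add key="..." value="..."/>). The helper names are hypothetical and aren't part of TwitterClientCore.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

REQUIRED_KEYS = (
    "oauth_consumer_key",
    "oauth_consumer_secret",
    "oauth_token",
    "oauth_token_secret",
    "EventHubNameConnectionString",
    "EventHubName",
)


def read_app_settings(xml_text: str) -> dict[str, str]:
    """Collect <add key="..." value="..."/> entries under <appSettings>."""
    root = ET.fromstring(xml_text)  # root is assumed to be <configuration>
    return {
        add.get("key", ""): add.get("value", "")
        for add in root.findall("./appSettings/add")
    }


def check_settings(settings: dict[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means the settings look OK."""
    problems = [f"{key} is missing or empty"
                for key in REQUIRED_KEYS if not settings.get(key, "").strip()]
    # EventHubName should equal the EntityPath value inside the connection string.
    entity_path = None
    for segment in settings.get("EventHubNameConnectionString", "").split(";"):
        name, _, value = segment.partition("=")
        if name == "EntityPath":
            entity_path = value
    if entity_path is not None and entity_path != settings.get("EventHubName"):
        problems.append(f"EventHubName does not match EntityPath ({entity_path})")
    return problems


if __name__ == "__main__":
    # Hypothetical sample; for a real file, use ET.parse("App.config") instead.
    sample = """<configuration><appSettings>
      <add key="oauth_consumer_key" value="abc" />
      <add key="oauth_consumer_secret" value="" />
      <add key="EventHubNameConnectionString"
           value="Endpoint=sb://ns.servicebus.windows.net/;EntityPath=socialtwitter-eh" />
      <add key="EventHubName" value="socialtwitter-eh" />
    </appSettings></configuration>"""
    for problem in check_settings(read_app_settings(sample)) or ["All settings OK"]:
        print(problem)
```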

Create a Stream Analytics job

Now that tweet events are streaming in real time from Twitter, you can set up a Stream Analytics job to analyze these events in real time.

In the Azure portal, navigate to your resource group and select + Add. Then search for Stream Analytics job and select Create.

Name the job socialtwitter-sa-job and specify a subscription, resource group, and location. It's a good idea to place the job and the event hub in the same region, both for best performance and so that you don't pay to transfer data between regions.

Select Create. Then navigate to your job when the deployment is finished.

Specify the job input

In your Stream Analytics job, select Inputs from the left menu under Job Topology.

Select + Add stream input > Event Hub. Fill out the New input form with the following information:

- Input alias: TwitterStream. Enter an alias for the input.
- Subscription: <Your subscription>. Select the Azure subscription that you want to use.
- Event Hubs namespace: asa-twitter-eventhub (the namespace you created).
- Event hub name: socialtwitter-eh. Choose Use existing. Then select the event hub you created.
- Event compression type: Gzip. The data compression type.

Leave the remaining default values and select Save.

Specify the job query

Stream Analytics supports a simple, declarative query model that describes transformations. To learn more about the language, see the Azure Stream Analytics Query Language Reference. This how-to guide helps you author and test several queries over Twitter data.

To compare the number of mentions among topics, you can use a Tumbling window to get the count of mentions by topic every five seconds.
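A tumbling window splits the stream into fixed-size, non-overlapping, contiguous time intervals, so each event is counted exactly once. The Python sketch below imitates that grouping offline for (timestamp, topic) pairs with a five-second window. It labels each window by its end time, which is what System.Timestamp reports for windowed aggregates in Stream Analytics. The sketch only illustrates the semantics, and the input shape and function name are assumptions. It assigns an event that lands exactly on a boundary to the window that starts there, which may differ from how Stream Analytics treats window edges.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def tumbling_window_counts(events, window_seconds: int = 5) -> dict[int, Counter]:
    """Count mentions per topic in fixed, non-overlapping windows.

    events: iterable of (timestamp_in_whole_seconds, topic) pairs.
    Returns {window_end: Counter(topic -> count)}, ordered by window end.
    """
    windows: dict[int, Counter] = defaultdict(Counter)
    for timestamp, topic in events:
        # Align the event to the start of its window; each event lands in one window.
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start + window_seconds][topic] += 1
    return dict(sorted(windows.items()))


if __name__ == "__main__":
    stream = [(0, "Azure"), (1, "AI"), (4, "Azure"), (5, "Azure"), (11, "AI")]
    for window_end, counts in tumbling_window_counts(stream).items():
        print(window_end, dict(counts))
```

Windows with no events don't appear in the result. That mirrors the fact that a grouped query emits rows only for groups that have data.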

In your job Overview, select Edit query near the top right of the Query box. Azure lists the inputs and outputs that are configured for the job and lets you create a query to transform the input stream as it is sent to the output.

Change the query in the query editor to the following:

SELECT * FROM TwitterStream

Event data from the messages should appear in the Input preview window below your query. Make sure that View is set to JSON. If you don't see any data, check that your data generator is sending events to your event hub and that you've selected Gzip as the compression type for the input.

Select Test query and notice the results in the Test results window below your query.

Change the query in the code editor to the following and select Test query:

SELECT System.Timestamp AS Time, text
FROM TwitterStream
WHERE text LIKE '%Azure%'

This query returns all tweets that include the keyword Azure.
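If the Input preview stays empty while the client is running, check whether the input's compression setting matches the payload. The Python sketch below round-trips a JSON event through gzip. It also checks for the two-byte gzip header (0x1f 0x8b), which is a quick way to tell whether a captured message body is compressed. The event's field name is hypothetical. The guide's Gzip setting implies, but doesn't state outright, that the client compresses each event this way.

```python
import gzip
import json

GZIP_MAGIC = b"\x1f\x8b"  # every gzip stream starts with these two bytes


def compress_event(event: dict) -> bytes:
    """Serialize an event as UTF-8 JSON and gzip it."""
    return gzip.compress(json.dumps(event).encode("utf-8"))


def decompress_event(payload: bytes) -> dict:
    """Reverse of compress_event: gunzip and parse the JSON body."""
    return json.loads(gzip.decompress(payload).decode("utf-8"))


def looks_gzipped(payload: bytes) -> bool:
    """Cheap check for the gzip header, e.g. when debugging an empty preview."""
    return payload[:2] == GZIP_MAGIC


if __name__ == "__main__":
    event = {"text": "Trying out #Azure Stream Analytics"}  # hypothetical event shape
    body = compress_event(event)
    print(looks_gzipped(body), decompress_event(body) == event)
```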

Create an output sink

You've now defined an event stream, an event hub input to ingest events, and a query to perform a transformation over the stream. The last step is to define an output sink for the job.

In this how-to guide, you write the output of the job query to Azure Blob storage. You can also push your results to Azure SQL Database, Azure Table storage, Event Hubs, or Power BI, depending on your application needs.

Specify the job output

Under the Job Topology section on the left navigation menu, select Outputs.

On the Outputs page, select + Add and then Blob storage/Data Lake Storage Gen2. Fill out the form as follows:

- Output alias: Use the name TwitterStream-Output.
- Import options: Choose Select storage from your subscriptions.
- Storage account: Select your storage account.
- Container: Select Create new and enter socialtwitter.

Select Save.

Start the job

The job input, query, and output are now specified, so you're ready to start the Stream Analytics job.

Make sure the TwitterClientCore application is running.

In the job overview, select Start.

On the Start job page, for Job output start time, select Now and then select Start.

Get support

For further assistance, try our Microsoft Q&A question page for Azure Stream Analytics.

