Training

Module

Explore Azure Event Hubs - Training

Learn how Azure Event Hubs captures events and how to scale your processing application.

Azure Event Hubs is a native data-streaming service in the cloud that can stream millions of events per second, with low latency, from any source to any destination. Event Hubs is compatible with Apache Kafka. It enables you to run existing Kafka workloads without any code changes.

Businesses can use Event Hubs to ingest and store streaming data. By using streaming data, businesses can gain valuable insights, drive real-time analytics, and respond to events as they happen. They can use this data to enhance their overall efficiency and customer experience.

Event Hubs is the preferred event ingestion layer of any event streaming solution that you build on top of Azure. It integrates with data and analytics services inside and outside Azure to build a complete data streaming pipeline to serve the following use cases:

Learn about the key capabilities of Azure Event Hubs in the following sections.

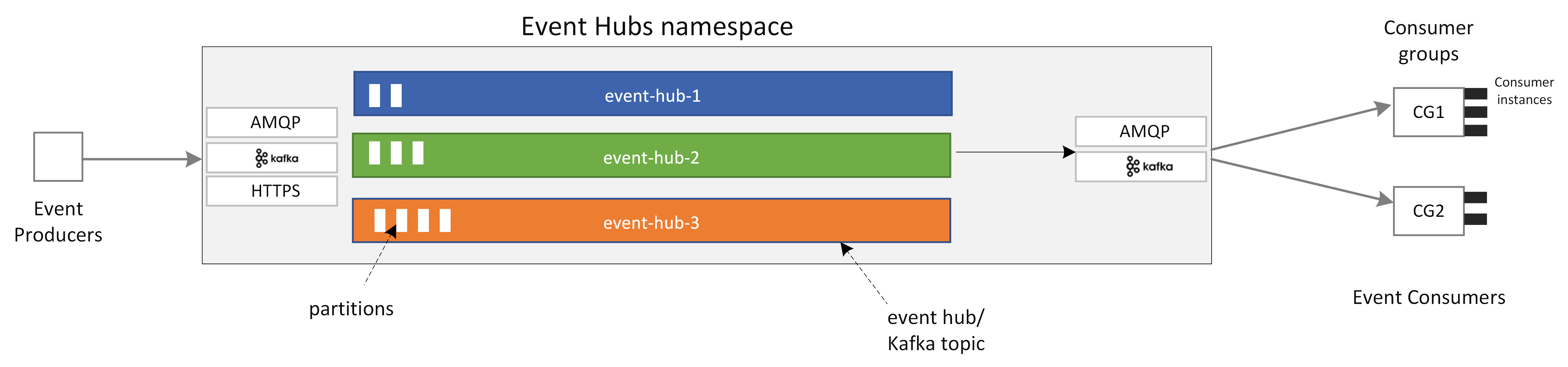

Event Hubs is a multi-protocol event streaming engine that natively supports Advanced Message Queuing Protocol (AMQP), Apache Kafka, and HTTPS protocols. Because it supports Apache Kafka, you can bring Kafka workloads to Event Hubs without making any code changes. You don't need to set up, configure, or manage your own Kafka clusters or use a Kafka-as-a-service offering that's not native to Azure.
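As a rough illustration of what "no code changes" can look like in practice, the sketch below builds the client settings that a librdkafka-style Kafka client (for example, one configured with a plain dictionary) would typically need to reach the Kafka endpoint of an Event Hubs namespace. The function name `kafka_config_for_event_hubs` and the sample namespace are hypothetical; the endpoint port and SASL settings reflect the commonly documented connection-string approach and should be checked against the current Event Hubs for Apache Kafka documentation.

```python
def kafka_config_for_event_hubs(namespace: str, connection_string: str) -> dict:
    """Return librdkafka-style settings for an Event Hubs Kafka endpoint.

    Hypothetical helper for illustration; only the configuration changes,
    the producer/consumer code of an existing Kafka application stays as-is.
    """
    return {
        # The Kafka endpoint of a namespace is exposed on port 9093.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        # Traffic is encrypted with TLS and authenticated with SASL PLAIN.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # With connection-string authentication, the username is the
        # literal text "$ConnectionString" and the password is the
        # namespace connection string itself.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


if __name__ == "__main__":
    # Placeholder values; never hard-code a real connection string.
    settings = kafka_config_for_event_hubs("contoso", "<connection-string>")
    for key, value in settings.items():
        print(f"{key} = {value}")
```

An existing application would pass these settings to its Kafka client in place of the settings for its current cluster.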

Event Hubs is built as a cloud-native broker engine. For this reason, you can run Kafka workloads with better performance, better cost efficiency, and no operational overhead.

For more information, see Azure Event Hubs for Apache Kafka.

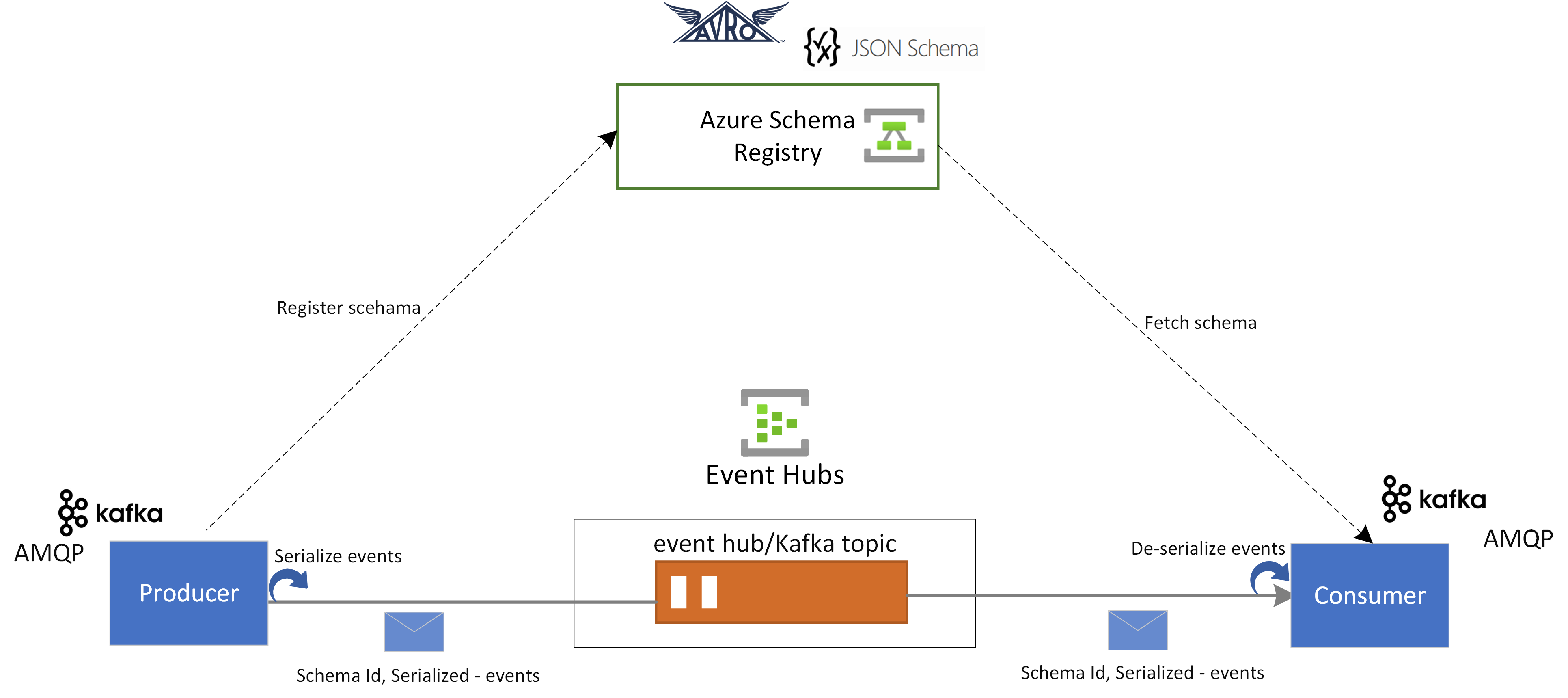

Azure Schema Registry in Event Hubs provides a centralized repository for managing schemas of event streaming applications. Schema Registry comes free with every Event Hubs namespace. It integrates with your Kafka applications or Event Hubs SDK-based applications.

Schema Registry ensures data compatibility and consistency across event producers and consumers. It enables schema evolution, validation, and governance and promotes efficient data exchange and interoperability.

Schema Registry supports multiple schema formats, including Avro and JSON Schema.

For more information, see Azure Schema Registry in Event Hubs.

Event Hubs integrates with Azure Stream Analytics to enable real-time stream processing. With the built-in no-code editor, you can develop a Stream Analytics job by using drag-and-drop functionality, without writing any code.

Alternatively, developers can use the SQL-based Stream Analytics query language to perform real-time stream processing and take advantage of a wide range of functions for analyzing streaming data.

For more information, see articles in the Azure Stream Analytics integration section of the table of contents.

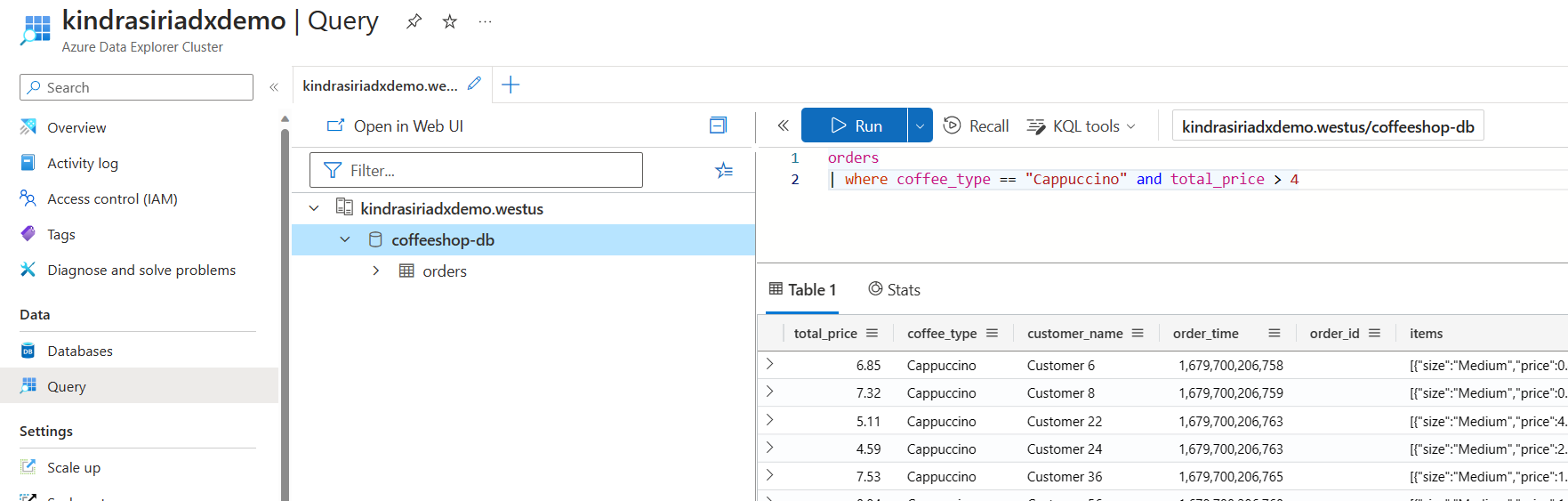

Azure Data Explorer is a fully managed platform for big data analytics that delivers high performance and allows for the analysis of large volumes of data in near real time. By integrating Event Hubs with Azure Data Explorer, you can perform near real-time analytics and exploration of streaming data.

For more information, see Ingest data from an event hub into Azure Data Explorer.

With Event Hubs, you can ingest, buffer, store, and process your stream in real time to get actionable insights. Event Hubs uses a partitioned consumer model. It enables multiple applications to process the stream concurrently and lets you control the speed of processing. Event Hubs also integrates with Azure Functions for serverless architectures.
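The partitioned consumer model can be pictured with a small in-memory simulation. This is a conceptual sketch only, not the Event Hubs SDK: the class names `PartitionedLog` and `ConsumerGroup` are hypothetical, and the SHA-256 hash stands in for whatever partition-key hashing the service actually uses. It shows two ideas from the paragraph above: events with the same partition key land in the same partition, and each consumer group keeps its own position, so several applications can read the same stream at their own pace.

```python
import hashlib
from collections import defaultdict


class PartitionedLog:
    """Toy stand-in for an event hub: a fixed set of append-only partitions."""

    def __init__(self, partition_count: int) -> None:
        self.partitions = [[] for _ in range(partition_count)]

    def send(self, partition_key: str, body: str) -> int:
        # Assumption: a stable hash of the key picks the partition.
        digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % len(self.partitions)
        self.partitions[index].append(body)
        return index


class ConsumerGroup:
    """Independent view of the log with one offset per partition."""

    def __init__(self, log: PartitionedLog) -> None:
        self.log = log
        self.offsets = defaultdict(int)

    def receive(self, partition: int, max_events: int) -> list:
        # Read from this group's own offset, then advance it.
        start = self.offsets[partition]
        batch = self.log.partitions[partition][start:start + max_events]
        self.offsets[partition] = start + len(batch)
        return batch


if __name__ == "__main__":
    log = PartitionedLog(partition_count=4)
    p = log.send("device-1", "temp=20")
    log.send("device-1", "temp=21")

    analytics = ConsumerGroup(log)   # reads slowly, one event at a time
    archiver = ConsumerGroup(log)    # reads everything available
    print(analytics.receive(p, max_events=1))
    print(archiver.receive(p, max_events=10))
```

Because offsets live in the consumer group rather than in the log, a slow reader never blocks a fast one, which is the sense in which each application controls its own processing speed.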

A broad ecosystem is available for the industry-standard AMQP 1.0 protocol. SDKs are available in languages like .NET, Java, Python, and JavaScript, so you can start processing your streams from Event Hubs. All supported client languages provide low-level integration.

The ecosystem also allows you to integrate with Azure Functions, Azure Spring Apps, Kafka Connectors, and other data analytics platforms and technologies, such as Apache Spark and Apache Flink.

The Azure Event Hubs emulator offers a local development experience for Event Hubs. You can use the emulator to develop and test code against the service in isolation, free from cloud interference. For more information, see Event Hubs emulator.

Event Hubs offers flexible and cost-efficient event streaming through its Standard, Premium, and Dedicated tiers. These options cater to data streaming needs that range from a few MB/sec to several GB/sec, so you can choose the tier that matches your requirements.

With Event Hubs, you can start with data streams in megabytes and grow to gigabytes or terabytes. The auto-inflate feature is one of the options available to scale the number of throughput units or processing units to meet your usage needs.
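To make the sizing arithmetic concrete, the sketch below estimates how many throughput units a Standard-tier workload might need. It assumes the commonly published per-unit limits (ingress of 1 MB/sec or 1,000 events/sec, egress of 2 MB/sec or 4,096 events/sec, whichever is reached first); these figures and the helper name `required_throughput_units` are assumptions for illustration, so verify them against the current quotas before relying on the result.

```python
import math

# Assumed Standard-tier limits per throughput unit (verify against current quotas).
INGRESS_MB_PER_TU = 1.0
INGRESS_EVENTS_PER_TU = 1000
EGRESS_MB_PER_TU = 2.0
EGRESS_EVENTS_PER_TU = 4096


def required_throughput_units(
    ingress_mb_s: float,
    ingress_events_s: float,
    egress_mb_s: float = 0.0,
    egress_events_s: float = 0.0,
) -> int:
    """Estimate throughput units from peak ingress and egress rates."""
    # Each limit is enforced independently, so the tightest one decides.
    needed = max(
        ingress_mb_s / INGRESS_MB_PER_TU,
        ingress_events_s / INGRESS_EVENTS_PER_TU,
        egress_mb_s / EGRESS_MB_PER_TU,
        egress_events_s / EGRESS_EVENTS_PER_TU,
    )
    # A namespace always has at least one unit; round partial units up.
    return max(1, math.ceil(needed))


if __name__ == "__main__":
    # 3.5 MB/sec of ingress dominates 2,000 events/sec here.
    print(required_throughput_units(ingress_mb_s=3.5, ingress_events_s=2000))
```

With auto-inflate enabled, an estimate like this is most useful for choosing the upper limit that the namespace is allowed to scale to.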

In most streaming scenarios, events are lightweight, typically smaller than 1 MB, and arrive at high throughput. In some cases, however, messages can't be divided into smaller segments. Event Hubs can accommodate events up to 20 MB with self-serve scalable dedicated clusters at no extra charge. This capability allows Event Hubs to handle a wide range of message sizes to ensure uninterrupted business operations. For more information, see Send and receive large messages with Azure Event Hubs.

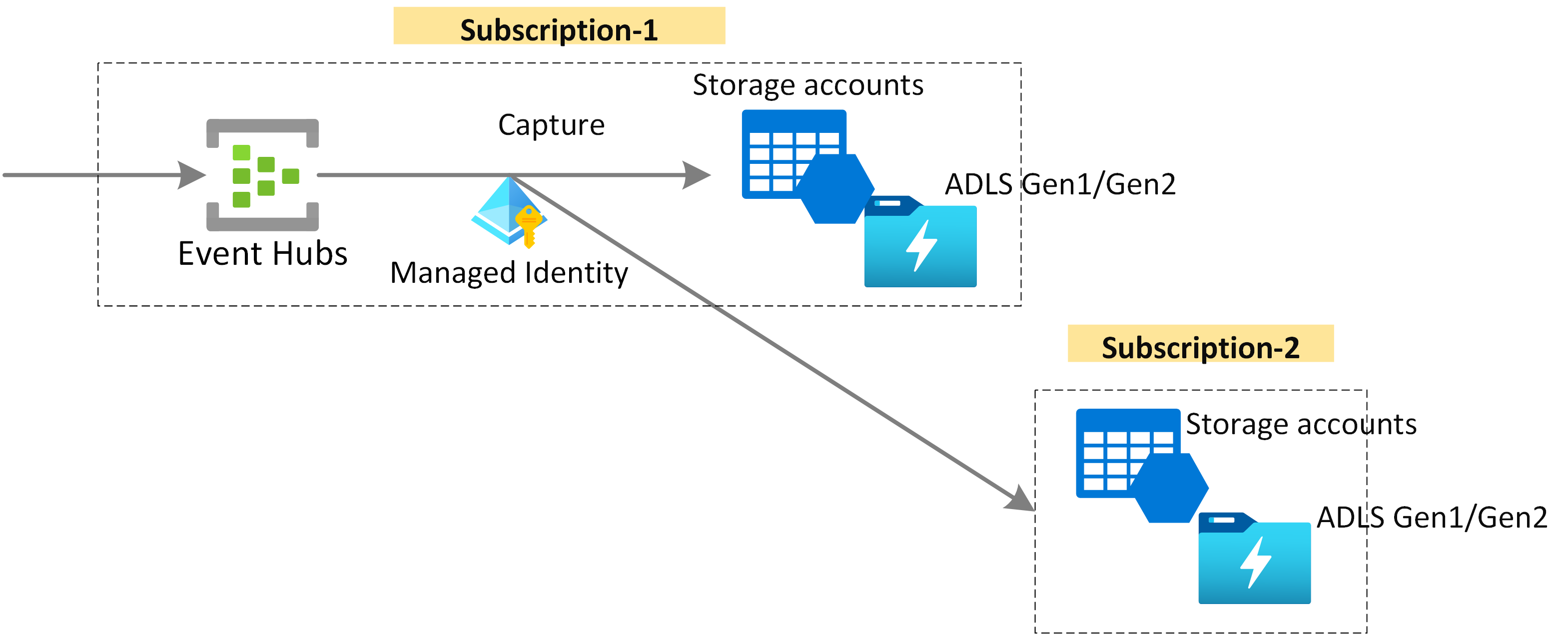

Capture your data in near real time in Azure Blob Storage or Azure Data Lake Storage for long-term retention or micro-batch processing. You can achieve this behavior on the same stream that you use for deriving real-time analytics. Setting up capture of event data is fast.
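For downstream micro-batch jobs, it helps to know where captured files land. The sketch below formats a blob path using what is, to the best of our understanding, the default Capture naming convention (`{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}` with an `.avro` extension). The helper `capture_blob_path` is hypothetical, the convention is configurable, and the exact meaning of the timestamp should be confirmed in the Capture documentation.

```python
from datetime import datetime, timezone


def capture_blob_path(
    namespace: str,
    event_hub: str,
    partition_id: int,
    timestamp: datetime,
) -> str:
    """Format a blob path following the assumed default Capture layout."""
    # Normalize to UTC so the path does not depend on the local time zone.
    ts = timestamp.astimezone(timezone.utc)
    return f"{namespace}/{event_hub}/{partition_id}/{ts:%Y/%m/%d/%H/%M/%S}.avro"


if __name__ == "__main__":
    when = datetime(2017, 12, 8, 3, 3, 17, tzinfo=timezone.utc)
    print(capture_blob_path("mynamespace", "myeventhub", 0, when))
```

A batch job could use the same layout to list only the blobs for a given partition and hour rather than scanning the whole container.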

Event Hubs provides a unified event streaming platform with a time-retention buffer, decoupling event producers from event consumers. The producer and consumer applications can perform large-scale data ingestion through multiple protocols.

The following diagram shows the main components of Event Hubs architecture.

The key functional components of Event Hubs include:

To get started using Event Hubs, see the following quickstarts.

You can use any of the following samples to stream data to Event Hubs by using SDKs.

You can use the following samples to stream data from your Kafka applications to Event Hubs.

You can use Event Hubs Schema Registry to perform schema validation for your event streaming applications.
