Note

The Time Series Insights service will be retired on 7 July 2024. Consider migrating existing environments to alternative solutions as soon as possible. For more information on the deprecation and migration, visit our documentation.

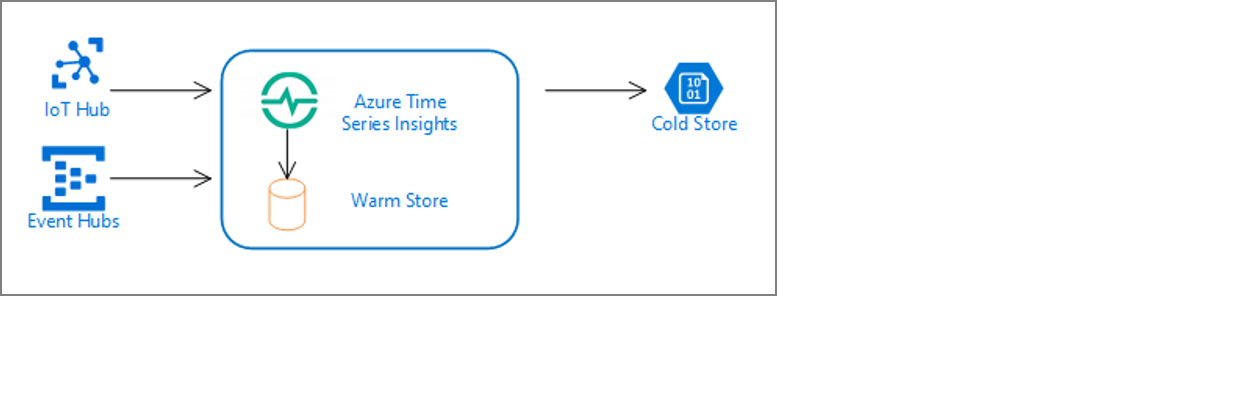

Your Azure Time Series Insights Gen2 environment contains an ingestion engine that collects, processes, and stores streaming time series data. As data arrives at your event sources, Azure Time Series Insights Gen2 consumes and stores it in near real time.

The following articles cover data processing in depth, including best practices to follow:

- Read about event sources and guidance on selecting an event source timestamp.
- Review the supported data types.
- Understand how the ingestion engine applies a set of rules to your JSON properties to create your storage account columns.
- Review your environment's throughput limitations to plan for your scale needs.

- Continue on to learn more about event sources for your Azure Time Series Insights Gen2 environment.