Motion controllers in Unity

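There are two key ways to take action on your gaze in Unity: hand gestures and motion controllers, on HoloLens and on immersive HMDs. You access the data for both sources of spatial input through the same APIs in Unity.

Unity provides two primary ways to access spatial input data for Windows Mixed Reality. The common Input.GetButton/Input.GetAxis APIs work across multiple Unity XR SDKs, while the InteractionManager/GestureRecognizer API, which is specific to Windows Mixed Reality, exposes the full set of spatial input data.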

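Unity XR input APIs

For new projects, we recommend using the new XR input APIs from the beginning.

You can find more information about the XR APIs in the Unity manual's XR Input documentation.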
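As a minimal sketch of that API (assuming Unity 2019.3 or later, where `InputDevices.GetDevicesWithCharacteristics` is available, with an XR plug-in enabled; the `ControllerLister` class name is illustrative), the following component lists the hand-held controllers that are connected when it starts:

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR;

// Illustrative component: logs every connected hand-held controller at startup.
public class ControllerLister : MonoBehaviour
{
    private readonly List<InputDevice> devices = new List<InputDevice>();

    void Start()
    {
        // Returns only devices that report ALL of the requested characteristics.
        InputDevices.GetDevicesWithCharacteristics(
            InputDeviceCharacteristics.HeldInHand | InputDeviceCharacteristics.Controller,
            devices);

        foreach (InputDevice device in devices)
        {
            Debug.Log($"Found {device.name} ({device.characteristics})");
        }
    }
}
```

Controllers that connect after Start won't appear in this list; subscribing to the InputDevices.deviceConnected event is one way to pick them up.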

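Unity button/axis mapping table

The Unity Input Manager for Windows Mixed Reality motion controllers supports the button and axis IDs listed below through the Input.GetButton/GetAxis APIs. The "Windows MR-specific" column refers to properties available on the InteractionSourceState type. Each of these APIs is described in detail in the sections below.

The button/axis ID mappings for Windows Mixed Reality generally match the Oculus button/axis IDs.

The button/axis ID mappings for Windows Mixed Reality differ from OpenVR's mappings in two ways:

- The mapping uses touchpad IDs that are distinct from the thumbstick IDs, to support controllers that have both thumbsticks and touchpads.
- The mapping avoids overloading the A and X button IDs for the Menu buttons, leaving them available for physical ABXY buttons.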

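| Input | Input.GetButton/GetAxis (left hand) | Input.GetButton/GetAxis (right hand) | Windows MR-specific input API (XR.WSA.Input) |
|---|---|---|---|
| Select trigger pressed | Axis 9 = 1.0 | Axis 10 = 1.0 | selectPressed |
| Select trigger analog value | Axis 9 | Axis 10 | selectPressedAmount |
| Select trigger partially pressed | Button 14 (gamepad compat) | Button 15 (gamepad compat) | selectPressedAmount > 0.0 |
| Menu button pressed | Button 6* | Button 7* | menuPressed |
| Grip button pressed | Axis 11 = 1.0 (no analog values), Button 4 (gamepad compat) | Axis 12 = 1.0 (no analog values), Button 5 (gamepad compat) | grasped |
| Thumbstick X (left: -1.0, right: 1.0) | Axis 1 | Axis 4 | thumbstickPosition.x |
| Thumbstick Y (top: -1.0, bottom: 1.0) | Axis 2 | Axis 5 | thumbstickPosition.y |
| Thumbstick pressed | Button 8 | Button 9 | thumbstickPressed |
| Touchpad X (left: -1.0, right: 1.0) | Axis 17* | Axis 19* | touchpadPosition.x |
| Touchpad Y (top: -1.0, bottom: 1.0) | Axis 18* | Axis 20* | touchpadPosition.y |
| Touchpad touched | Button 18* | Button 19* | touchpadTouched |
| Touchpad pressed | Button 16* | Button 17* | touchpadPressed |
| 6DoF grip pose or pointer pose | Grip pose only: XR.InputTracking.GetLocalPosition, XR.InputTracking.GetLocalRotation | Grip pose only: XR.InputTracking.GetLocalPosition, XR.InputTracking.GetLocalRotation | Pass Grip or Pointer as an argument: sourceState.sourcePose.TryGetPosition, sourceState.sourcePose.TryGetRotation |
| Tracking state | Not available (position accuracy and source loss risk are exposed only by the MR-specific API) | Not available (same as left hand) | sourceState.sourcePose.positionAccuracy, sourceState.properties.sourceLossRisk |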

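Note

The button/axis IDs marked with * in the table differ from the IDs that Unity uses for OpenVR, because of collisions between the mappings used by gamepads, Oculus Touch, and OpenVR.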
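The button columns of the table are easy to mistype when building Input.GetKey names by hand. As a sketch, the hypothetical `WmrButtonMap` helper below (plain C# with no Unity dependency; the row keys such as "menu" and the `ControllerHand` enum are invented labels) encodes the button IDs from the table and returns names in the "joystick button N" form that Input.GetKey accepts:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical labels for the two hand columns of the mapping table.
public enum ControllerHand { Left, Right }

// Hypothetical helper: button IDs copied from the "Input.GetButton/GetAxis" columns.
public static class WmrButtonMap
{
    private static readonly Dictionary<(string control, ControllerHand hand), int> ButtonIds =
        new Dictionary<(string control, ControllerHand hand), int>
        {
            { ("selectPartial", ControllerHand.Left), 14 },   { ("selectPartial", ControllerHand.Right), 15 },
            { ("menu", ControllerHand.Left), 6 },             { ("menu", ControllerHand.Right), 7 },
            { ("grip", ControllerHand.Left), 4 },             { ("grip", ControllerHand.Right), 5 },
            { ("thumbstickPress", ControllerHand.Left), 8 },  { ("thumbstickPress", ControllerHand.Right), 9 },
            { ("touchpadPress", ControllerHand.Left), 16 },   { ("touchpadPress", ControllerHand.Right), 17 },
            { ("touchpadTouch", ControllerHand.Left), 18 },   { ("touchpadTouch", ControllerHand.Right), 19 },
        };

    // Returns a name such as "joystick button 8", the string form Input.GetKey(string) accepts.
    public static string KeyName(string control, ControllerHand hand)
    {
        if (!ButtonIds.TryGetValue((control, hand), out int id))
        {
            throw new ArgumentException($"No button mapping for {control} ({hand})");
        }
        return "joystick button " + id;
    }
}
```

In a Unity script you could then write `if (Input.GetKey(WmrButtonMap.KeyName("thumbstickPress", ControllerHand.Left))) { ... }`, which checks the same button as `Input.GetKey("joystick button 8")`.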

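OpenXR

To learn the basics of mixed reality interactions in Unity, visit Unity XR Input in the Unity manual. This Unity documentation covers the mapping from controller-specific inputs to more generalizable InputFeatureUsages, how to identify and categorize available XR inputs, how to read data from these inputs, and more.

The Mixed Reality OpenXR Plugin provides additional input interaction profiles, mapped to standard InputFeatureUsages as detailed below: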

| InputFeatureUsage | HP Reverb G2 Controller (OpenXR) | HoloLens hand (OpenXR) |
|---|---|---|
| primary2DAxis | Joystick | |
| primary2DAxisClick | Joystick - Click | |
| trigger | Trigger | |
| grip | Grip | Air tap or squeeze |
| primaryButton | [X/A] - Press | Air tap |
| secondaryButton | [Y/B] - Press | |
| gripButton | Grip - Press | |
| triggerButton | Trigger - Press | |
| menuButton | Menu | |
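The following sketch polls a few of the usages from this table on whichever device is at the right-hand node. It assumes the Mixed Reality OpenXR Plugin is active so that these usages are populated, and the `OpenXRUsageReader` class name is illustrative. It logs every frame a control is held, which is fine for exploration but not for shipping code:

```csharp
using UnityEngine;
using UnityEngine.XR;

// Illustrative component: reads standard InputFeatureUsages from one XR node.
public class OpenXRUsageReader : MonoBehaviour
{
    public XRNode node = XRNode.RightHand;

    void Update()
    {
        InputDevice device = InputDevices.GetDeviceAtXRNode(node);
        if (!device.isValid)
        {
            return; // No device at this node this frame.
        }

        // primary2DAxis: the joystick on HP Reverb G2 controllers.
        if (device.TryGetFeatureValue(CommonUsages.primary2DAxis, out Vector2 stick) && stick.sqrMagnitude > 0.01f)
        {
            Debug.Log($"Joystick: {stick}");
        }

        // trigger: analog value in [0, 1].
        if (device.TryGetFeatureValue(CommonUsages.trigger, out float trigger) && trigger > 0f)
        {
            Debug.Log($"Trigger: {trigger:F2}");
        }

        // primaryButton: X/A on the G2, air tap for HoloLens hands.
        if (device.TryGetFeatureValue(CommonUsages.primaryButton, out bool primary) && primary)
        {
            Debug.Log("Primary button / air tap");
        }

        if (device.TryGetFeatureValue(CommonUsages.menuButton, out bool menu) && menu)
        {
            Debug.Log("Menu");
        }
    }
}
```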

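Grip pose vs. pointer pose

Windows Mixed Reality supports motion controllers in a variety of form factors. Each controller's design differs in the relationship between the user's hand position and the natural "forward" direction that apps should use for pointing when rendering the controller.

To better represent these controllers, there are two kinds of poses you can investigate for each interaction source: the grip pose and the pointer pose. Both the grip pose and the pointer pose coordinates are expressed by all Unity APIs in global Unity world coordinates.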

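Grip pose

The grip pose represents the location of the user's palm, either detected by a HoloLens or holding a motion controller.

On immersive headsets, the grip pose is best used to render the user's hand or an object held in the user's hand. The grip pose is also used when visualizing a motion controller. The renderable model provided by Windows for a motion controller uses the grip pose as its origin and center of rotation.

The grip pose is defined specifically as follows:

- The grip position: The palm centroid when holding the controller naturally, adjusted left or right to center the position within the grip. On the Windows Mixed Reality motion controller, this position generally aligns with the Grasp button.
- The grip orientation's Right axis: When you completely open your hand to form a flat 5-finger pose, the ray that is normal to your palm (forward from the left palm, backward from the right palm).
- The grip orientation's Forward axis: When you close your hand partially (as if holding the controller), the ray that points "forward" through the tube formed by your non-thumb fingers.
- The grip orientation's Up axis: The Up axis implied by the Right and Forward definitions.

You can access the grip pose through either Unity's cross-vendor input API (XR.InputTracking.GetLocalPosition/Rotation) or the Windows MR-specific API (sourceState.sourcePose.TryGetPosition/Rotation, requesting pose data for the Grip node).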

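Pointer pose

The pointer pose represents the tip of the controller pointing forward.

The system-provided pointer pose is best used to raycast when you're rendering the controller model itself. If you're rendering some other virtual object in place of the controller, such as a virtual gun, you should point with the ray that's most natural for that virtual object, such as a ray that travels along the barrel of the app-defined gun model. Because users see the virtual object and not the physical controller, pointing with the virtual object will likely feel more natural to those using your app.

Currently, the pointer pose is available in Unity only through the Windows MR-specific API, sourceState.sourcePose.TryGetPosition/Rotation, passing in InteractionSourceNode.Pointer as the argument.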

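OpenXR

You have access to two sets of poses through OpenXR input interactions:

- The grip poses, for rendering objects in the hand
- The aim poses, for pointing into the world

More information on this design and the differences between the two poses can be found in the OpenXR Specification - Input Subpaths.

Poses supplied by the InputFeatureUsages DevicePosition, DeviceRotation, DeviceVelocity, and DeviceAngularVelocity all represent the OpenXR grip pose. InputFeatureUsages related to grip poses are defined in Unity's CommonUsages.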

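Poses supplied by the InputFeatureUsages PointerPosition, PointerRotation, PointerVelocity, and PointerAngularVelocity all represent the OpenXR aim pose. These InputFeatureUsages aren't defined in any included C# files, so you'll need to define your own InputFeatureUsages as follows: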

public static readonly InputFeatureUsage<Vector3> PointerPosition = new InputFeatureUsage<Vector3>("PointerPosition");
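Putting both pose sets together, here's a sketch that reads the grip pose through CommonUsages and the aim pose through self-defined usages. The `OpenXRPoseReader` class name is illustrative, and `PointerRotation` is declared the same way as `PointerPosition`, on the assumption that it carries a Quaternion. Values from `InputDevice` are relative to the XR tracking origin, so the sketch assumes the object is parented under the rig's tracking-space transform:

```csharp
using UnityEngine;
using UnityEngine.XR;

// Illustrative component: follows the right-hand grip pose and draws the aim ray.
// Assumes this object is a child of the XR rig's tracking-space transform.
public class OpenXRPoseReader : MonoBehaviour
{
    // Aim-pose usages are not defined in CommonUsages, so declare them by name.
    static readonly InputFeatureUsage<Vector3> PointerPosition = new InputFeatureUsage<Vector3>("PointerPosition");
    static readonly InputFeatureUsage<Quaternion> PointerRotation = new InputFeatureUsage<Quaternion>("PointerRotation");

    void Update()
    {
        InputDevice device = InputDevices.GetDeviceAtXRNode(XRNode.RightHand);
        if (!device.isValid)
            return;

        // Grip pose (DevicePosition/DeviceRotation): place an object in the hand.
        if (device.TryGetFeatureValue(CommonUsages.devicePosition, out Vector3 gripPosition) &&
            device.TryGetFeatureValue(CommonUsages.deviceRotation, out Quaternion gripRotation))
        {
            transform.localPosition = gripPosition;
            transform.localRotation = gripRotation;
        }

        // Aim pose (PointerPosition/PointerRotation): point into the world.
        if (device.TryGetFeatureValue(PointerPosition, out Vector3 aimPosition) &&
            device.TryGetFeatureValue(PointerRotation, out Quaternion aimRotation))
        {
            // Convert from tracking space to world space before drawing the debug ray.
            Transform space = transform.parent;
            Vector3 origin = space != null ? space.TransformPoint(aimPosition) : aimPosition;
            Vector3 direction = aimRotation * Vector3.forward;
            if (space != null)
                direction = space.TransformDirection(direction);
            Debug.DrawRay(origin, direction, Color.green);
        }
    }
}
```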

Haptics

For information on using haptics in Unity's XR Input system, see the Unity manual's Unity XR Input - Haptics documentation.
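As a minimal sketch of that API (the `HapticsSketch` helper name and its default amplitude and duration are illustrative), you can check a device's haptic capabilities before sending an impulse:

```csharp
using UnityEngine;
using UnityEngine.XR;

// Illustrative helper: sends a short vibration to the controller at a given node.
public static class HapticsSketch
{
    // Returns true only if the device supports impulses and accepted this one.
    public static bool Buzz(XRNode node, float amplitude = 0.5f, float durationSeconds = 0.1f)
    {
        InputDevice device = InputDevices.GetDeviceAtXRNode(node);
        if (device.TryGetHapticCapabilities(out HapticCapabilities caps) && caps.supportsImpulse)
        {
            // Channel 0 is the first haptic channel; amplitude is clamped to [0, 1].
            return device.SendHapticImpulse(0u, Mathf.Clamp01(amplitude), durationSeconds);
        }
        return false;
    }
}
```

For example, calling `HapticsSketch.Buzz(XRNode.RightHand)` from a press handler gives the user tactile confirmation of a selection.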

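Controller tracking state

Like the headsets, the Windows Mixed Reality motion controller requires no setup of external tracking sensors. Instead, the controllers are tracked by sensors in the headset itself.

If the user moves the controllers out of the headset's field of view, Windows continues to infer controller positions in most cases. When the controller has lost visual tracking for long enough, its positions drop to approximate-accuracy positions.

At this point, the system body-locks the controller to the user, tracking the user's position as they move around, while still exposing the controller's true orientation using its internal orientation sensors. Many apps that use controllers to point at and activate UI elements can operate normally at approximate accuracy without the user noticing.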

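Reasoning about tracking state explicitly

Apps that want to treat positions differently based on tracking state may go further and inspect properties on the controller's state, such as SourceLossRisk and PositionAccuracy:

| Tracking state | SourceLossRisk | PositionAccuracy | TryGetPosition |
|---|---|---|---|
| High accuracy | < 1.0 | High | true |
| High accuracy (at risk of losing) | == 1.0 | High | true |
| Approximate accuracy | == 1.0 | Approximate | true |
| No position | == 1.0 | Approximate | false |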

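These motion controller tracking states are defined as follows:

- High accuracy: While the motion controller is within the headset's field of view, it will generally provide high-accuracy positions, based on visual tracking. A moving controller that momentarily leaves the field of view or is momentarily obscured from the headset sensors (for example, by the user's other hand) will continue to return high-accuracy poses for a short time, based on inertial tracking of the controller itself.
- High accuracy (at risk of losing): When the user moves the motion controller past the edge of the headset's field of view, the headset will soon be unable to visually track the controller's position. The app knows that the controller has reached this FOV boundary when SourceLossRisk reaches 1.0. At that point, the app may choose to pause controller gestures that require a steady stream of high-quality poses.
- Approximate accuracy: When the controller has lost visual tracking for long enough, its positions drop to approximate accuracy. The system then body-locks the controller to the user, tracking the user's position as they move around, while still exposing the controller's true orientation using its internal orientation sensors. Many apps that use controllers to point at and activate UI elements can operate normally at this accuracy without the user noticing. Apps with heavier input requirements may choose to sense this drop from high accuracy to approximate accuracy by inspecting the PositionAccuracy property, for example to give the user a more generous hitbox on off-screen targets during this time.
- No position: While the controller can operate at approximate accuracy for a long time, sometimes the system knows that even a body-locked position isn't meaningful at the moment. For example, a controller that was turned on may never have been observed visually, or a user may put down a controller that's then picked up by someone else. At those times, the system won't provide any position to the app, and TryGetPosition will return false.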
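The table and definitions above can be condensed into a small decision function. The sketch below is plain C# so it compiles on its own; the `PositionAccuracy` enum is a stand-in assumed to mirror Unity's `InteractionSourcePositionAccuracy` (None, Approximate, High), and the other names are illustrative:

```csharp
// Stand-in for UnityEngine.XR.WSA.Input.InteractionSourcePositionAccuracy so the
// logic compiles without Unity; in a Unity project, use the real enum instead.
public enum PositionAccuracy { None, Approximate, High }

public enum ControllerTrackingState { HighAccuracy, HighAccuracyAtRisk, ApproximateAccuracy, NoPosition }

public static class TrackingStateClassifier
{
    // hasPosition: what sourcePose.TryGetPosition returned.
    // sourceLossRisk: properties.sourceLossRisk. accuracy: sourcePose.positionAccuracy.
    public static ControllerTrackingState Classify(double sourceLossRisk, PositionAccuracy accuracy, bool hasPosition)
    {
        if (!hasPosition)
        {
            return ControllerTrackingState.NoPosition;
        }

        if (accuracy == PositionAccuracy.High)
        {
            // A risk of 1.0 with high accuracy means the controller is at the FOV edge.
            return sourceLossRisk < 1.0
                ? ControllerTrackingState.HighAccuracy
                : ControllerTrackingState.HighAccuracyAtRisk;
        }

        // Any other accuracy with a valid position is treated as approximate.
        return ControllerTrackingState.ApproximateAccuracy;
    }
}
```

An app could then pause a precision gesture whenever the result is HighAccuracyAtRisk, or enlarge off-screen hit targets while it is ApproximateAccuracy, as the definitions above suggest.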

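Common Unity APIs (Input.GetButton/GetAxis)

Namespace: UnityEngine, UnityEngine.XR

Types: Input, XR.InputTracking

Unity currently uses its general Input.GetButton/Input.GetAxis APIs to expose input for the Oculus SDK, the OpenVR SDK, and Windows Mixed Reality, including hands and motion controllers. If your app uses these APIs for input, it can easily support motion controllers across multiple XR SDKs, including Windows Mixed Reality.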

获取逻辑按钮的按下状态

若要使用常规 Unity 输入 API,通常先在 Unity 输入管理器中将按钮和轴连接到逻辑名称,以将按钮或轴 ID 绑定到每个名称。 然后,可以编写用于引用逻辑按钮/轴名称的代码。

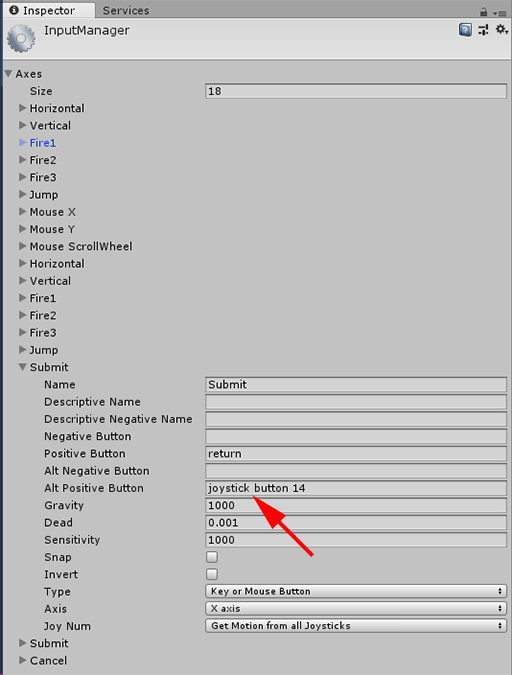

例如,若要将左运动控制器的扳机键按钮映射到“提交”操作,请在 Unity 内转至“编辑”>“项目设置”>“输入”,然后展开“轴”下“提交”部分的属性。 将“正向按钮”或“Alt 正向按钮”属性更改为“游戏杆按钮 14”,如下所示:

Unity InputManager

Your script can then check for the Submit action using Input.GetButton:

if (Input.GetButton("Submit"))
{
    // ...
}

You can add more logical buttons by changing the Size property under Axes.

Getting a physical button's pressed state directly

You can also access buttons manually by their fully qualified name, using Input.GetKey:

if (Input.GetKey("joystick button 8"))
{
    // ...
}

Getting a hand or motion controller's pose

You can access the position and rotation of the controller using XR.InputTracking:

Vector3 leftPosition = InputTracking.GetLocalPosition(XRNode.LeftHand);
Quaternion leftRotation = InputTracking.GetLocalRotation(XRNode.LeftHand);

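Note

The above code represents the controller's grip pose (where the user holds the controller), which is useful for rendering a sword or gun in the user's hand, or a model of the controller itself.

The relationship between this grip pose and the pointer pose (where the tip of the controller is pointing) may differ across controllers. At this time, the controller's pointer pose is accessible only through the MR-specific input API, described in the sections below.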

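Windows-specific APIs (XR.WSA.Input)

Note

If your project uses any of the XR.WSA APIs, be aware that they are being phased out in favor of the XR SDK in future Unity releases. For new projects, we recommend using the XR SDK from the beginning. You can find more information about the XR Input system and APIs in the Unity manual's XR Input documentation.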

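Namespace: UnityEngine.XR.WSA.Input

Types: InteractionManager, InteractionSourceState, InteractionSource, InteractionSourceProperties, InteractionSourceKind, InteractionSourceLocation

To get more detailed information about Windows Mixed Reality hand input (for HoloLens) and motion controllers, you can choose to use the Windows-specific spatial input APIs under the UnityEngine.XR.WSA.Input namespace. These give you access to additional information, such as position accuracy or the source kind, which lets you tell hands and controllers apart.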

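Polling for the state of hands and motion controllers

You can poll for this frame's state of each interaction source (hand or motion controller) using the GetCurrentReading method.

var interactionSourceStates = InteractionManager.GetCurrentReading();
foreach (var interactionSourceState in interactionSourceStates) {
    // ...
}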

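Each InteractionSourceState you get back represents an interaction source at the current moment in time. The InteractionSourceState exposes info such as:

- Which kinds of presses are occurring (Select/Menu/Grasp/Touchpad/Thumbstick):

if (interactionSourceState.selectPressed) {
    // ...
}

- Other data specific to motion controllers, such as the touchpad and/or thumbstick's X/Y coordinates and touched state:

if (interactionSourceState.touchpadTouched && interactionSourceState.touchpadPosition.x > 0.5) {
    // ...
}

- The InteractionSourceKind, to know whether the source is a hand or a motion controller:

if (interactionSourceState.source.kind == InteractionSourceKind.Hand) {
    // ...
}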
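Combining those properties, a minimal per-frame polling sketch might look like this. The `InteractionSourcePoller` class name is illustrative, and the script requires a Unity version that still ships UnityEngine.XR.WSA.Input:

```csharp
using UnityEngine;
using UnityEngine.XR.WSA.Input;

// Illustrative component: polls every interaction source once per frame.
public class InteractionSourcePoller : MonoBehaviour
{
    void Update()
    {
        foreach (InteractionSourceState state in InteractionManager.GetCurrentReading())
        {
            if (state.source.kind == InteractionSourceKind.Controller)
            {
                // selectPressedAmount is the analog trigger value.
                if (state.selectPressed)
                    Debug.Log($"{state.source.handedness} controller: select ({state.selectPressedAmount:F2})");

                if (state.thumbstickPressed)
                    Debug.Log($"{state.source.handedness} thumbstick pressed at {state.thumbstickPosition}");
            }
            else if (state.source.kind == InteractionSourceKind.Hand && state.selectPressed)
            {
                Debug.Log($"Hand {state.source.id}: air tap held");
            }
        }
    }
}
```

GetCurrentReading() allocates a new array on each call. InteractionManager also has an overload that fills a caller-supplied InteractionSourceState[] and returns the number of entries, which avoids per-frame allocations.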

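Polling for forward-predicted rendering poses

When you poll interaction source data from hands and controllers, the poses you get are forward-predicted for the moment when this frame's photons will reach the user's eyes. Forward-predicted poses are best used for rendering the controller or a held object each frame. If you're targeting a given press or release with the controller, you'll get the most accurate result from the historical event APIs described below.

var sourcePose = interactionSourceState.sourcePose;
Vector3 sourceGripPosition;
Quaternion sourceGripRotation;
if ((sourcePose.TryGetPosition(out sourceGripPosition, InteractionSourceNode.Grip)) &&
    (sourcePose.TryGetRotation(out sourceGripRotation, InteractionSourceNode.Grip))) {
    // ...
}

You can also get the forward-predicted head pose for the current frame. As with the source pose, this is useful for rendering a cursor, although targeting a given press or release will be most accurate if you use the historical event APIs described below.

var headPose = interactionSourceState.headPose;
var headRay = new Ray(headPose.position, headPose.forward);
RaycastHit raycastHit;
if (Physics.Raycast(headRay, out raycastHit, 10)) {
    var cursorPos = raycastHit.point;
    // ...
}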
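As a sketch of using the forward-predicted grip pose for rendering, the component below makes its GameObject follow the right motion controller each frame. The `FollowRightGrip` class name is illustrative; it relies on this article's statement that these APIs report poses in Unity world coordinates:

```csharp
using UnityEngine;
using UnityEngine.XR.WSA.Input;

// Illustrative component: follows the right controller's forward-predicted grip pose,
// which is the pose intended for rendering held objects.
public class FollowRightGrip : MonoBehaviour
{
    void Update()
    {
        foreach (InteractionSourceState state in InteractionManager.GetCurrentReading())
        {
            if (state.source.kind != InteractionSourceKind.Controller ||
                state.source.handedness != InteractionSourceHandedness.Right)
            {
                continue;
            }

            // Both calls can fail (for example, when there is no position); keep the last pose then.
            if (state.sourcePose.TryGetPosition(out Vector3 position, InteractionSourceNode.Grip) &&
                state.sourcePose.TryGetRotation(out Quaternion rotation, InteractionSourceNode.Grip))
            {
                transform.SetPositionAndRotation(position, rotation);
            }
        }
    }
}
```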

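Handling interaction source events

To handle input events as they happen, with their accurate historical pose data, you can handle interaction source events instead of polling.

To handle interaction source events:

Register for an InteractionManager input event. For each type of interaction event that you're interested in, you need to subscribe to it.

InteractionManager.InteractionSourcePressed += InteractionManager_InteractionSourcePressed;

Handle the event. Once you've subscribed to an interaction event, you'll get the callback when appropriate. In the SourcePressed example, the callback arrives after the source was detected and before it's released or lost.

void InteractionManager_InteractionSourcePressed(InteractionSourcePressedEventArgs args)
{
    var interactionSourceState = args.state;
    // args.state has information about:
    //   the targeting head ray at the time when the event was triggered
    //   whether the source is pressed or not
    //   properties like position, velocity, source loss risk
    //   source id (which hand id, for example) and source kind like hand, voice, controller or other
}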

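How to stop handling an event

You need to stop handling an event when you're no longer interested in it, or when you're destroying the object that has subscribed to it. To stop handling the event, unsubscribe from it.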

InteractionManager.InteractionSourcePressed -= InteractionManager_InteractionSourcePressed;

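List of interaction source events

The available interaction source events are:

- InteractionSourceDetected (source becomes active)
- InteractionSourceLost (source becomes inactive)
- InteractionSourcePressed (tap, button press, or "Select" uttered)
- InteractionSourceReleased (end of a tap, button released, or end of "Select" uttered)
- InteractionSourceUpdated (source moves or otherwise changes some state)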

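Events for historical targeting poses that most accurately match a press or release

The polling APIs described earlier give your app forward-predicted poses. While those predicted poses are best for rendering the controller or a virtual handheld object, future poses aren't optimal for targeting, for two key reasons:

- When the user presses a button on a controller, there can be about 20 ms of wireless Bluetooth latency before the system receives the press.
- Then, if you're using a forward-predicted pose, another 10-20 ms of forward prediction is applied to target the time when the current frame's photons will reach the user's eyes.

This means that polling gives you a source pose or head pose that is 30-40 ms ahead of where the user's head and hands actually were when the press or release happened. For HoloLens hand input, there's no wireless transmission delay, but there's a similar processing delay to detect the press.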
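Adding the two delays from the list makes the offset explicit. As a rough illustration only (the 1 m/s hand speed is an assumed figure, not a measurement), a controller moving at that speed travels about 3 to 4 cm during this window:

```latex
\Delta t \approx \underbrace{20\,\text{ms}}_{\text{Bluetooth}}
  + \underbrace{(10 \text{ to } 20)\,\text{ms}}_{\text{forward prediction}}
  = (30 \text{ to } 40)\,\text{ms},
\qquad
\Delta x = v\,\Delta t \approx 1\,\tfrac{\text{m}}{\text{s}} \times (0.03 \text{ to } 0.04)\,\text{s}
  = (3 \text{ to } 4)\,\text{cm}
```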

To target accurately based on the user's original intent for a hand or controller press, you should use the historical source pose or head pose from that InteractionSourcePressed or InteractionSourceReleased input event.

You can target a press or release with historical pose data from the user's head or their controller:

The head pose at the moment when a gesture or controller press occurred can be used for targeting, to determine what the user was gazing at:

void InteractionManager_InteractionSourcePressed(InteractionSourcePressedEventArgs args) {
    var interactionSourceState = args.state;
    var headPose = interactionSourceState.headPose;
    RaycastHit raycastHit;
    if (Physics.Raycast(headPose.position, headPose.forward, out raycastHit, 10)) {
        var targetObject = raycastHit.collider.gameObject;
        // ...
    }
}

The source pose at the moment when a motion controller press occurred can be used for targeting, to determine what the user was pointing the controller at. This is the state of the controller that experienced the press. If you're rendering the controller itself, you can request the pointer pose rather than the grip pose, to shoot the targeting ray from what the user will consider the natural tip of that rendered controller:

void InteractionManager_InteractionSourcePressed(InteractionSourcePressedEventArgs args) {
    var interactionSourceState = args.state;
    var sourcePose = interactionSourceState.sourcePose;
    Vector3 sourcePointerPosition;
    Quaternion sourcePointerRotation;
    if ((sourcePose.TryGetPosition(out sourcePointerPosition, InteractionSourceNode.Pointer)) &&
        (sourcePose.TryGetRotation(out sourcePointerRotation, InteractionSourceNode.Pointer))) {
        RaycastHit raycastHit;
        if (Physics.Raycast(sourcePointerPosition, sourcePointerRotation * Vector3.forward, out raycastHit, 10)) {
            var targetObject = raycastHit.collider.gameObject;
            // ...
        }
    }
}

Event handler example

using UnityEngine;
using UnityEngine.XR.WSA.Input;

// Attach to any GameObject; the class name is illustrative.
public class InteractionSourceEventHandler : MonoBehaviour
{
    void Start()
    {
        InteractionManager.InteractionSourceDetected += InteractionManager_InteractionSourceDetected;
        InteractionManager.InteractionSourceLost += InteractionManager_InteractionSourceLost;
        InteractionManager.InteractionSourcePressed += InteractionManager_InteractionSourcePressed;
        InteractionManager.InteractionSourceReleased += InteractionManager_InteractionSourceReleased;
        InteractionManager.InteractionSourceUpdated += InteractionManager_InteractionSourceUpdated;
    }

    void OnDestroy()
    {
        InteractionManager.InteractionSourceDetected -= InteractionManager_InteractionSourceDetected;
        InteractionManager.InteractionSourceLost -= InteractionManager_InteractionSourceLost;
        InteractionManager.InteractionSourcePressed -= InteractionManager_InteractionSourcePressed;
        InteractionManager.InteractionSourceReleased -= InteractionManager_InteractionSourceReleased;
        InteractionManager.InteractionSourceUpdated -= InteractionManager_InteractionSourceUpdated;
    }

    void InteractionManager_InteractionSourceDetected(InteractionSourceDetectedEventArgs args)
    {
        // Source was detected
        // args.state has the current state of the source including id, position, kind, etc.
    }

    void InteractionManager_InteractionSourceLost(InteractionSourceLostEventArgs args)
    {
        // Source was lost. This will be after a SourceDetected event and no other events for this
        // source id will occur until it is Detected again
        // args.state has the current state of the source including id, position, kind, etc.
    }

    void InteractionManager_InteractionSourcePressed(InteractionSourcePressedEventArgs args)
    {
        // Source was pressed. This will be after the source was detected and before it is
        // released or lost
        // args.state has the current state of the source including id, position, kind, etc.
    }

    void InteractionManager_InteractionSourceReleased(InteractionSourceReleasedEventArgs args)
    {
        // Source was released. The source would have been detected and pressed before this point.
        // This event will not fire if the source is lost
        // args.state has the current state of the source including id, position, kind, etc.
    }

    void InteractionManager_InteractionSourceUpdated(InteractionSourceUpdatedEventArgs args)
    {
        // Source was updated. The source would have been detected before this point
        // args.state has the current state of the source including id, position, kind, etc.
    }
}

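Motion controllers in MRTK

You can access gesture and motion controllers from the input manager.

Follow along with tutorials

Step-by-step tutorials, with more detailed customization examples, are available in the Mixed Reality Academy.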
