Troubleshoot Azure Data Factory Studio Issues

APPLIES TO: Azure Data Factory, Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

This article explores common troubleshooting methods for Azure Data Factory Studio, the user interface for the service.

Azure Data Factory Studio fails to load

Note

Azure Data Factory Studio officially supports Microsoft Edge and Google Chrome. Other web browsers might cause unexpected or undocumented behavior.

Third-party cookies blocked

Azure Data Factory Studio uses browser cookies to persist user session state and to enable interactive development and monitoring experiences. Your browser might block third-party cookies, for example because you're using an incognito session or have an ad blocker enabled. Blocked third-party cookies can prevent the portal from loading. You might be redirected to a blank page or to 'https://adf.azure.com/accesstoken.html', or you might see a warning that third-party cookies are blocked. To solve this problem, enable third-party cookies in your browser. The following steps use Microsoft Edge:

Allow all cookies

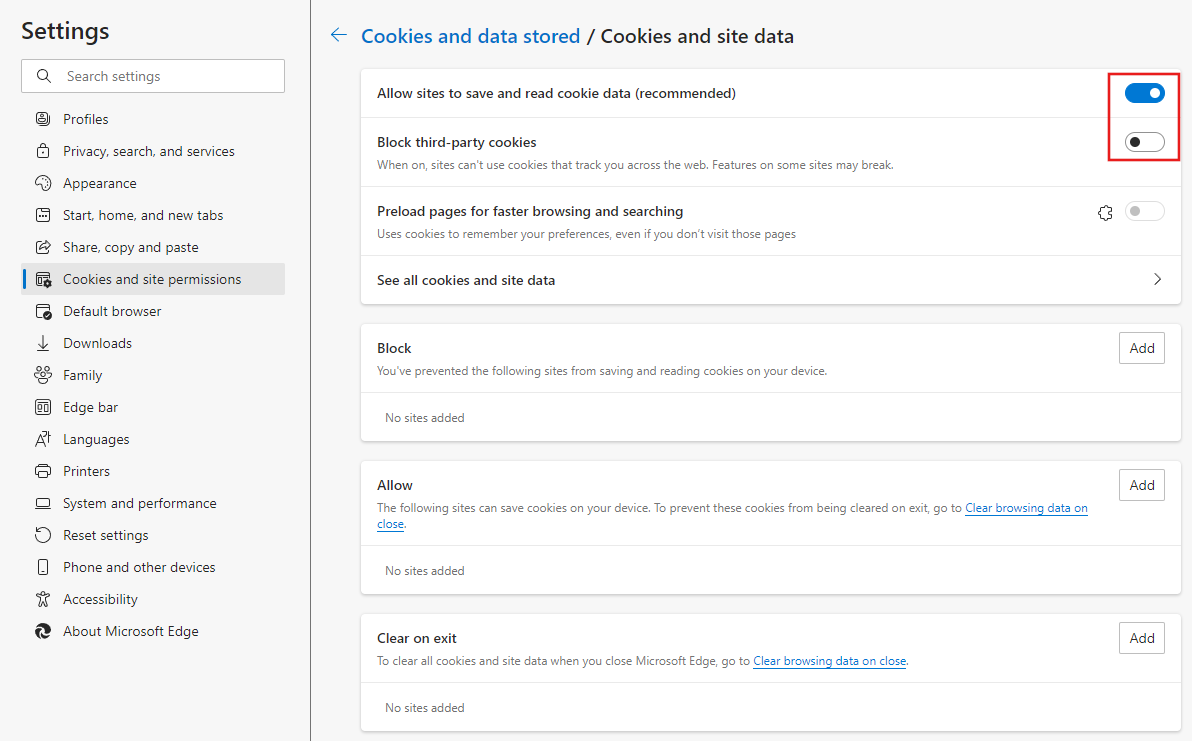

Visit edge://settings/content/cookies in your browser.

Ensure that Allow sites to save and read cookie data is enabled and that the Block third-party cookies option is disabled.

Refresh Azure Data Factory Studio and try again.

Only allow Azure Data Factory Studio to use cookies

If you don't want to allow all cookies, you can allow cookies for ADF Studio only:

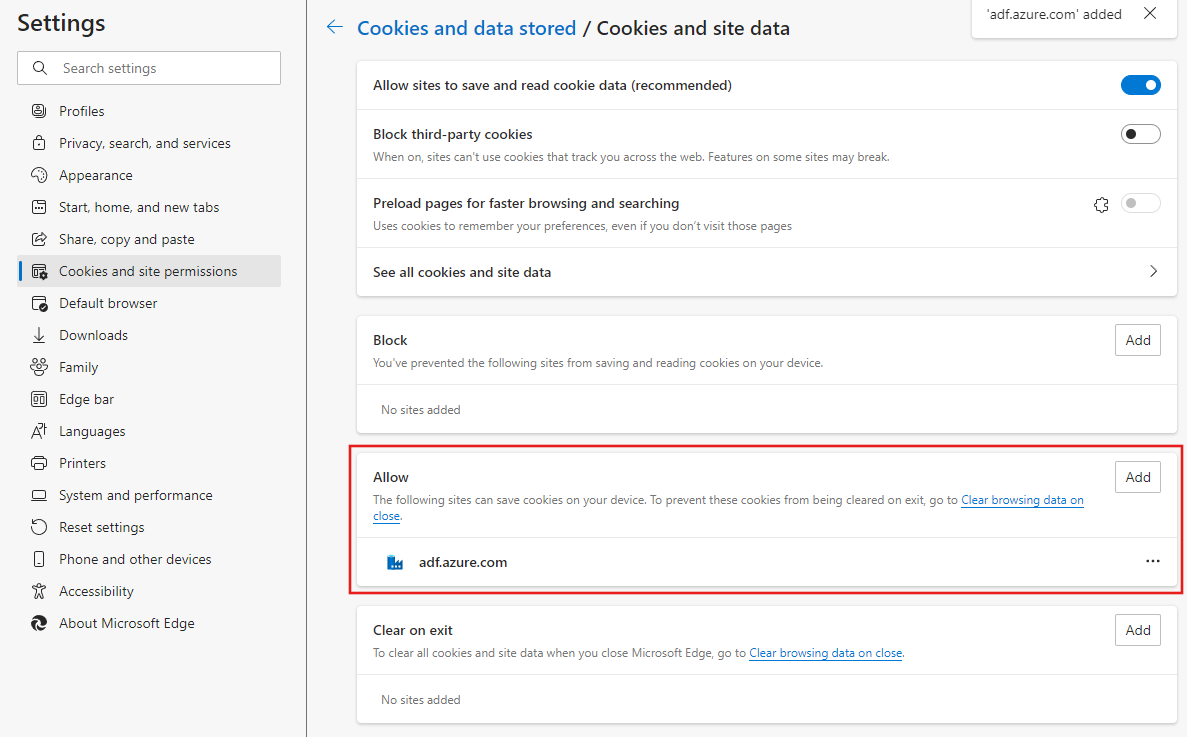

Visit edge://settings/content/cookies.

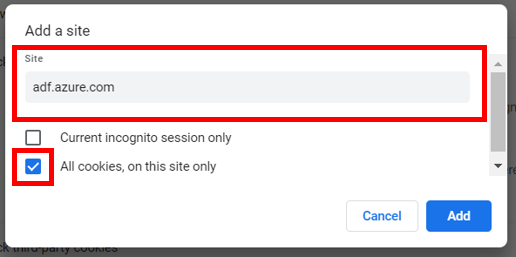

Under the Allow section, select Add and enter the site adf.azure.com.

Refresh Azure Data Factory Studio and try again.

Connection failed error in Azure Data Factory Studio

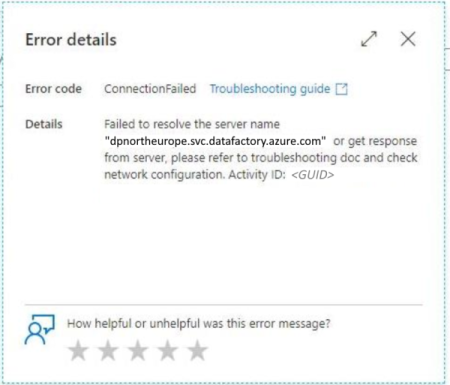

You might see a "Connection failed" error in Azure Data Factory Studio, like the one in the screenshot, for example after you select Test Connection or Preview. This error means the operation failed because your local machine couldn't connect to the ADF service.

To resolve the issue, first retry the same operation in an InPrivate browsing window.

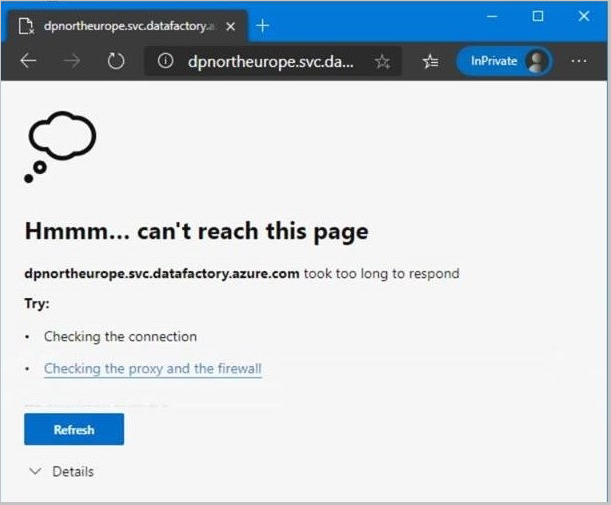

If it still fails, find the server name in the error message (in this example, dpnortheurope.svc.datafactory.azure.com) and enter it directly in your browser's address bar.

If the browser shows a 404 error, your client side is usually fine and the issue is on the ADF service side. File a support ticket that includes the Activity ID from the error message.

If you don't see a 404, or you see an error similar to the following one in the browser, you usually have a client-side issue. Continue with the troubleshooting steps below.

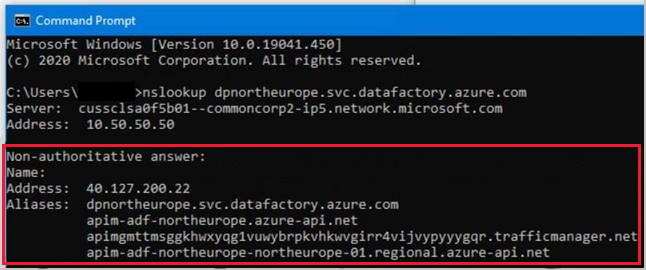
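If you prefer to script the browser check, the following Python sketch requests https://<server name>/ using only the standard library. It applies the same rule as above: a 404 usually means the request reached the ADF service, and anything else points to the client side. The function names interpret and probe are hypothetical. Python's network path isn't guaranteed to match your browser's, because proxy settings and trusted certificates can differ. Treat a client-side verdict as a reason to continue with the next steps, not as proof.

```python
import urllib.error
import urllib.request


def interpret(status: int) -> str:
    """Classify an HTTP status using the 404 rule described in this article."""
    # A 404 usually means the request reached the ADF service, so the client
    # path is fine; any other result points to a client-side problem.
    return "service-side" if status == 404 else "client-side"


def probe(host: str, timeout: float = 10.0) -> str:
    """Request https://<host>/ and return a one-line verdict."""
    try:
        with urllib.request.urlopen(f"https://{host}/", timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        # An HTTP error status (such as 404) still arrived over HTTPS.
        status = err.code
    except OSError as err:
        # URLError (DNS failure, refused connection, TLS or proxy problems) and
        # timeouts are OSError subclasses: the service was never reached.
        reason = getattr(err, "reason", err)
        return f"client-side: could not reach {host} ({reason})"
    return f"{interpret(status)}: HTTP {status} from {host}"


if __name__ == "__main__":
    # Replace with the server name from your own error message.
    print(probe("dpnortheurope.svc.datafactory.azure.com"))
```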

To troubleshoot further, open Command Prompt and run nslookup dpnortheurope.svc.datafactory.azure.com. A normal response resolves the host name to one or more IP addresses.

If you see a normal Domain Name System (DNS) response, contact your local IT support to check the firewall settings, and make sure that HTTPS connections to this host name aren't blocked. If the issue persists, file a support ticket with ADF that includes the Activity ID from the error message.

A DNS response that differs from a normal response might mean that your DNS server has a problem resolving the name. In that case, changing your DNS server (for example, to Google Public DNS at 8.8.8.8) could work around the issue.

If the issue persists, you could further try

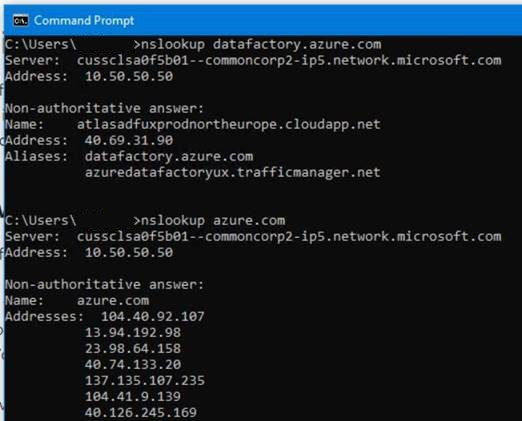

nslookup datafactory.azure.comandnslookup azure.comto see at which level your DNS resolution is failed and submit all information to your local IT support or your ISP for troubleshooting. If they believe the issue is still at Microsoft side, file a support ticket with the Activity ID from the error message.
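The level-by-level nslookup checks can be approximated in Python with socket.getaddrinfo. This function asks the operating system's resolver about each level of the name in turn. The sketch is not a replacement for nslookup. getaddrinfo only reports whether a name has address records, so a parent domain that exists but has no A or AAAA record shows as FAILED even when DNS is healthy. It also can't query a specific DNS server such as 8.8.8.8. The helper names are hypothetical.

```python
import socket


def candidate_names(host: str) -> list[str]:
    """Return host followed by each parent domain, down to two labels."""
    labels = host.rstrip(".").split(".")
    # For a.b.c.com this yields a.b.c.com, b.c.com, c.com (a bare TLD is skipped).
    return [".".join(labels[i:]) for i in range(len(labels) - 1)]


def resolve(name: str) -> list[str]:
    """Return the sorted, de-duplicated addresses for name, or [] on failure."""
    try:
        infos = socket.getaddrinfo(name, 443, proto=socket.IPPROTO_TCP)
    except (socket.gaierror, UnicodeError):
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def check_dns(host: str) -> None:
    """Print each level of host and the addresses it resolves to."""
    for name in candidate_names(host):
        addresses = resolve(name)
        shown = ", ".join(addresses) if addresses else "FAILED"
        print(f"{name:45} {shown}")


if __name__ == "__main__":
    # Replace with the server name from your own error message.
    check_dns("dpnortheurope.svc.datafactory.azure.com")
```

The first level that prints FAILED, while the levels below it resolve, is a reasonable starting point for the information you pass to IT support or your ISP.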

Change linked service type warning message in datasets

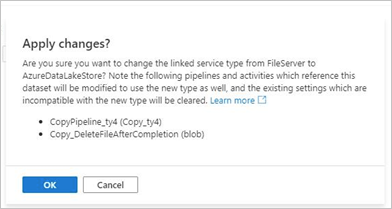

You might see the warning message shown in the screenshot when you use a file format dataset in an activity and later want to point it to a linked service of a different type than the one the activity used before (for example, from File System to Azure Data Lake Storage Gen2).

File format datasets can be used with all file-based connectors. For example, you can configure a Parquet dataset on Azure Blob Storage or Azure Data Lake Storage Gen2. However, each connector supports a different set of data store settings on the activity, with a different app model.

In the ADF authoring UI, this warning appears when a file format dataset is used in an activity (Copy, Lookup, GetMetadata, or Delete) and you change the dataset to point to a linked service of a different type from the one currently used in the activity (for example, from File System to ADLS Gen2). To make the switch clean, if you consent, the pipelines and activities that reference this dataset are also modified to use the new type. Any existing data store settings that are incompatible with the new type are cleared, because they no longer apply.
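Before you consent, it can help to know which pipelines and activities will be modified. If the factory uses Git integration, the following Python sketch searches the pipeline JSON files for references to a given dataset. It only reads files and changes nothing. It assumes the usual repository layout: a pipeline folder with one JSON file per pipeline. It also assumes that activities point to datasets through objects of the form {"referenceName": ..., "type": "DatasetReference"}. The function names are hypothetical.

```python
import json
from pathlib import Path
from typing import Any, Iterator


def dataset_refs(node: Any) -> Iterator[str]:
    """Yield the referenceName of every DatasetReference nested anywhere in node."""
    if isinstance(node, dict):
        if node.get("type") == "DatasetReference" and "referenceName" in node:
            yield node["referenceName"]
        for value in node.values():
            yield from dataset_refs(value)
    elif isinstance(node, list):
        for item in node:
            yield from dataset_refs(item)


def activities_using(pipeline: dict, dataset: str) -> list[str]:
    """Names of top-level activities whose definition references dataset."""
    activities = pipeline.get("properties", {}).get("activities", [])
    # The search is recursive, so a container such as ForEach is reported when
    # one of its inner activities uses the dataset.
    return [a.get("name", "?") for a in activities if dataset in dataset_refs(a)]


def find_usages(repo_root: str, dataset: str) -> dict[str, list[str]]:
    """Map each pipeline name to the activities in it that reference dataset."""
    usages: dict[str, list[str]] = {}
    for path in sorted(Path(repo_root, "pipeline").glob("*.json")):
        # utf-8-sig also accepts files saved with a byte order mark.
        pipeline = json.loads(path.read_text(encoding="utf-8-sig"))
        hits = activities_using(pipeline, dataset)
        if hits:
            usages[pipeline.get("name", path.stem)] = hits
    return usages


if __name__ == "__main__":
    import sys

    # Usage: python find_usages.py <repo root> <dataset name>
    for name, activities in find_usages(sys.argv[1], sys.argv[2]).items():
        print(f"{name}: {', '.join(activities)}")
```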

To learn which data store settings each connector supports, see the Copy activity properties section of the corresponding connector article for the detailed property list: Amazon S3, Azure Blob, Azure Data Lake Storage Gen1, Azure Data Lake Storage Gen2, Azure Files, File System, FTP, Google Cloud Storage, HDFS, HTTP, and SFTP.

Could not load resource while opening pipeline

When you open a pipeline in Azure Data Factory Studio, you might see the following error message: "Could not load resource 'xxxxxx'. Ensure no mistakes in the JSON and that referenced resources exist. Status: TypeError: Cannot read property 'xxxxx' of undefined, Possible reason: TypeError: Cannot read property 'xxxxxxx' of undefined."

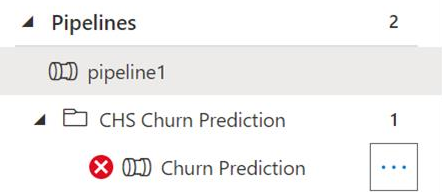

The error originates in the JSON file that describes the pipeline. It happens when you use Git integration and the pipeline JSON files become corrupted. In that case, an error icon (a red dot with an x) appears to the left of the pipeline name, as shown in the screenshot.

To resolve the issue, fix the JSON files first, and then reopen the pipeline in the authoring tool.
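The error message asks you to check both the JSON itself and that referenced resources exist. A quick local check over the Git repository can narrow down which file to fix, and the following Python sketch does this. It parses each pipeline file and reports the line and column of any syntax error. It also flags a missing properties.activities list and checks that every dataset, linked service, pipeline, or data flow reference has a matching file. The folder names in REFERENCE_FOLDERS and the one-file-per-resource naming are assumptions based on the usual ADF Git layout, so adjust them to your repository. The function names are hypothetical. A file can pass these checks and still fail to load in the UI, for example when an activity lacks a property the designer expects.

```python
import json
import sys
from pathlib import Path
from typing import Any, Iterator

# Assumed mapping from reference type to the Git folder holding that resource.
REFERENCE_FOLDERS = {
    "DatasetReference": "dataset",
    "LinkedServiceReference": "linkedService",
    "PipelineReference": "pipeline",
    "DataFlowReference": "dataflow",
}


def references(node: Any) -> Iterator[tuple[str, str]]:
    """Yield (type, referenceName) for every reference object nested in node."""
    if isinstance(node, dict):
        if isinstance(node.get("type"), str) and isinstance(node.get("referenceName"), str):
            yield node["type"], node["referenceName"]
        for value in node.values():
            yield from references(value)
    elif isinstance(node, list):
        for item in node:
            yield from references(item)


def check_pipeline(text: str, existing: set[tuple[str, str]]) -> list[str]:
    """Return the problems found in one pipeline file; [] means none were found."""
    try:
        doc = json.loads(text)
    except json.JSONDecodeError as err:
        return [f"invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"]
    problems = []
    props = doc.get("properties") if isinstance(doc, dict) else None
    if not isinstance(props, dict) or not isinstance(props.get("activities"), list):
        problems.append("missing 'properties.activities' list")
    for ref_type, name in references(doc):
        folder = REFERENCE_FOLDERS.get(ref_type)
        # Only reference types listed above are checked; others are ignored.
        if folder and (folder, name) not in existing:
            problems.append(f"{ref_type} '{name}' has no {folder}/{name}.json")
    return problems


def scan_repo(repo_root: str) -> dict[str, list[str]]:
    """Check every pipeline/*.json file under repo_root."""
    root = Path(repo_root)
    # (folder, file stem) pairs, assuming one file per resource named after it.
    existing = {(p.parent.name, p.stem) for p in root.glob("*/*.json")}
    report = {}
    for path in sorted(root.glob("pipeline/*.json")):
        problems = check_pipeline(path.read_text(encoding="utf-8-sig"), existing)
        if problems:
            report[path.relative_to(root).as_posix()] = problems
    return report


if __name__ == "__main__":
    for file, problems in scan_repo(sys.argv[1] if len(sys.argv) > 1 else ".").items():
        for problem in problems:
            print(f"{file}: {problem}")
```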

