Install NVIDIA GPU drivers on N-series VMs running Windows

Applies to: ✔️ Windows VMs ✔️ Flexible scale sets

To take advantage of the GPU capabilities of Azure N-series VMs backed by NVIDIA GPUs, you must install NVIDIA GPU drivers. The NVIDIA GPU Driver Extension installs appropriate NVIDIA CUDA or GRID drivers on an N-series VM. Install or manage the extension using the Azure portal or tools such as Azure PowerShell or Azure Resource Manager templates. See the NVIDIA GPU Driver Extension documentation for supported operating systems and deployment steps.

If you choose to install NVIDIA GPU drivers manually, this article provides supported operating systems, drivers, and installation and verification steps. Manual driver setup information is also available for Linux VMs.

For basic specs, storage capacities, and disk details, see GPU Windows VM sizes.

Note

The Azure NVads A10 v5 VMs support only vGPU 16.x (536.25) or later driver versions. The vGPU driver for the A10 SKU is a unified driver that supports both graphics and compute workloads.

NVIDIA Tesla (CUDA) drivers for all NC* and ND-series VMs (optional for NV-series) are generic and not Azure specific. For the latest drivers, visit the NVIDIA website.

Tip

As an alternative to manual CUDA driver installation on a Windows Server VM, you can deploy an Azure Data Science Virtual Machine image. The DSVM editions for Windows Server 2016 pre-install NVIDIA CUDA drivers, the CUDA Deep Neural Network Library, and other tools.

Note

For Azure NVads A10 v5 VMs, we recommend always running the latest driver version. The latest NVIDIA major driver branch (n) is backward compatible only with the previous major branch (n-1). For example, vGPU 17.x is backward compatible with vGPU 16.x only. VMs still running branch n-2 or lower may see driver failures when the latest driver branch is rolled out to Azure hosts.
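The compatibility rule above can be expressed as a small check. The following Python sketch is illustrative only: the function names are hypothetical, and it encodes nothing beyond the stated rule that a host on branch n supports guests on branch n or n-1. The note doesn't say what happens when a guest driver is newer than the host branch, so the sketch conservatively treats that case as unsupported.

```python
def major_branch(vgpu_version: str) -> int:
    """Extract the major branch from a vGPU version string such as '17.5'."""
    return int(vgpu_version.split(".")[0])


def is_guest_branch_supported(guest_version: str, host_version: str) -> bool:
    """Return True when the guest driver branch is n or n-1 relative to the host.

    Assumption: a guest branch newer than the host branch is treated as
    unsupported, because the rule above doesn't cover that case.
    """
    guest = major_branch(guest_version)
    host = major_branch(host_version)
    return host - 1 <= guest <= host
```

For example, `is_guest_branch_supported("16.2", "17.5")` evaluates to `True`, while a guest on 15.x against the same host evaluates to `False`.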

NVs_v3 VMs support only vGPU 16 or lower driver versions.

Windows Server 2019 support is available only up to vGPU 16.x.
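The version limits in these notes can be collected into one lookup. The Python sketch below is a hypothetical helper, not part of any Azure or NVIDIA tooling. The dictionary keys are illustrative labels chosen for this example, and the limits are taken only from the notes in this article (NVads A10 v5 requires 16.x or higher, NVs_v3 and Windows Server 2019 are capped at 16.x).

```python
# Limits taken from the notes in this article; the keys are illustrative labels.
MIN_BRANCH_BY_SERIES = {"NVads A10 v5": 16}
MAX_BRANCH_BY_SERIES = {"NVs_v3": 16}
MAX_BRANCH_BY_OS = {"Windows Server 2019": 16}


def vgpu_branch_allowed(major: int, vm_series: str, os_name: str) -> bool:
    """Check a vGPU major branch against the series and OS limits listed above.

    Series or operating systems without an entry are treated as unconstrained,
    which is an assumption of this sketch rather than a documented guarantee.
    """
    if major < MIN_BRANCH_BY_SERIES.get(vm_series, 0):
        return False
    if major > MAX_BRANCH_BY_SERIES.get(vm_series, float("inf")):
        return False
    if major > MAX_BRANCH_BY_OS.get(os_name, float("inf")):
        return False
    return True
```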

Microsoft redistributes NVIDIA GRID driver installers for NV, NVv3, and NVads A10 v5-series VMs used as virtual workstations or for virtual applications. Install only these GRID drivers on Azure NV-series VMs, and only on the operating systems listed below. These drivers include licensing for GRID Virtual GPU Software in Azure. You don't need to set up an NVIDIA vGPU software license server.

The GRID drivers redistributed by Azure don't work on non-NV series VMs like NCv2, NCv3, ND, and NDv2-series VMs. The one exception is the NCas_T4_V3 VM series, where the GRID drivers enable graphics functionality similar to NV-series.

The NVIDIA extension always installs the latest driver.

For Windows 11 up to and including 24H2, Windows 10 up to and including 22H2, Server 2022:

- GRID 17.5 (553.62) (.exe)

The following links to previous versions are provided to support dependencies on older driver versions.

For Windows Server 2016 1607, 1709:

- GRID 14.1 (512.78) (.exe) is the last supported driver from NVIDIA. Versions 15.x and later don't support Windows Server 2016.

For Windows Server 2012 R2:

- GRID 13.1 (472.39) (.exe)

- GRID 13 (471.68) (.exe)

For links to all previous NVIDIA GRID driver versions, visit GitHub.
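When scripting driver selection, the lists above can be captured as a lookup table. The following Python sketch is a hypothetical helper: the table contains only the GRID and driver versions listed in this article, and for Windows Server 2012 R2 it picks the newer of the two listed drivers. Operating systems with no driver listed here, such as Windows Server 2019, raise an error instead of guessing.

```python
# (GRID version, driver version) pairs copied from the lists in this article.
GRID_DRIVER_BY_OS = {
    "Windows 11": ("17.5", "553.62"),
    "Windows 10": ("17.5", "553.62"),
    "Windows Server 2022": ("17.5", "553.62"),
    "Windows Server 2016": ("14.1", "512.78"),
    "Windows Server 2012 R2": ("13.1", "472.39"),
}


def grid_driver_for(os_name: str) -> tuple:
    """Return the (GRID version, driver version) listed for an operating system."""
    try:
        return GRID_DRIVER_BY_OS[os_name]
    except KeyError:
        raise ValueError(f"No GRID driver is listed in this article for {os_name!r}")
```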

Connect by Remote Desktop to each N-series VM.

Download, extract, and install the supported driver for your Windows operating system.

After GRID driver installation on a VM, a restart is required. After CUDA driver installation, a restart is not required.

The NVIDIA Control Panel is accessible only with a GRID driver installation. If you installed CUDA drivers, the NVIDIA Control Panel isn't visible.

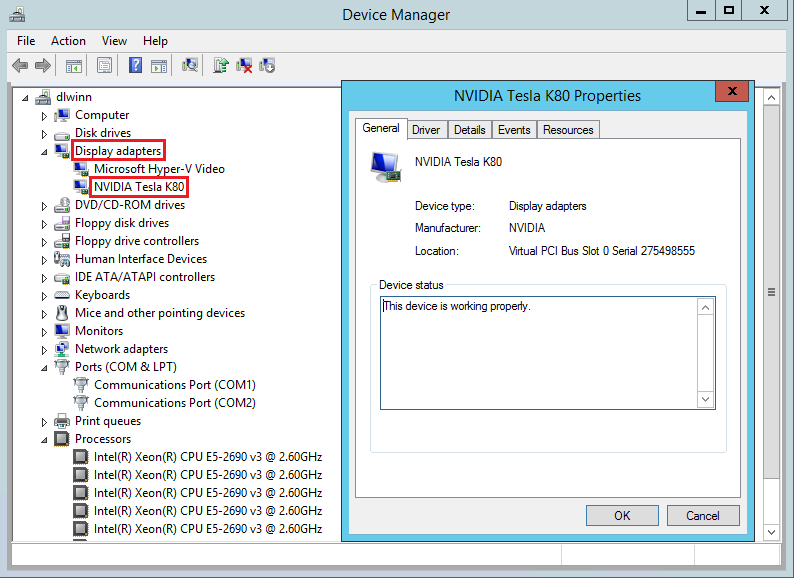

You can verify driver installation in Device Manager. The following example shows successful configuration of the Tesla K80 card on an Azure NC VM.

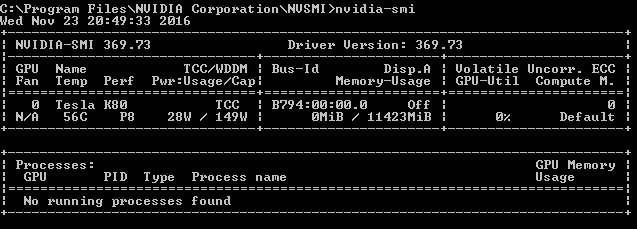

To query the GPU device state, run the nvidia-smi command-line utility installed with the driver.

Open a command prompt and change to the C:\Program Files\NVIDIA Corporation\NVSMI directory.

Run nvidia-smi. If the driver is installed, you will see output similar to the following. The GPU-Util shows 0% unless you are currently running a GPU workload on the VM. Your driver version and GPU details may be different from the ones shown.
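To check the driver from a script instead of reading the console output, nvidia-smi can emit CSV through its `--query-gpu` and `--format` options. The Python sketch below is a minimal example under two assumptions: the executable is named nvidia-smi.exe and sits in the directory mentioned above, and the three queried fields are enough for a basic check. The function names are hypothetical.

```python
import csv
import subprocess

# Assumption: the executable lives in the directory named in this article.
NVSMI_PATH = r"C:\Program Files\NVIDIA Corporation\NVSMI\nvidia-smi.exe"
QUERY_FIELDS = ["name", "driver_version", "utilization.gpu"]


def parse_gpu_csv(text: str) -> list:
    """Turn nvidia-smi CSV output (no header, no units) into one dict per GPU."""
    rows = csv.reader(text.strip().splitlines(), skipinitialspace=True)
    return [
        dict(zip(QUERY_FIELDS, (cell.strip() for cell in row)))
        for row in rows
        if row
    ]


def query_gpus(nvsmi_path: str = NVSMI_PATH) -> list:
    """Run nvidia-smi and return the parsed GPU records.

    Raises CalledProcessError if nvidia-smi exits with an error, and
    FileNotFoundError if the executable isn't at nvsmi_path.
    """
    result = subprocess.run(
        [
            nvsmi_path,
            "--query-gpu=" + ",".join(QUERY_FIELDS),
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)
```

Calling `query_gpus()` on a VM with the driver installed returns one dictionary per GPU. The `utilization.gpu` value corresponds to the GPU-Util column described above.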

RDMA network connectivity can be enabled on RDMA-capable N-series VMs such as NC24r deployed in the same availability set or in a single placement group in a virtual machine scale set. The HpcVmDrivers extension must be added to install Windows network device drivers that enable RDMA connectivity. To add the VM extension to an RDMA-enabled N-series VM, use Azure PowerShell cmdlets for Azure Resource Manager.

To install the latest version (1.1) of the HpcVmDrivers extension on an existing RDMA-capable VM named myVM in the West US region:

Set-AzVMExtension -ResourceGroupName "myResourceGroup" -Location "westus" -VMName "myVM" -ExtensionName "HpcVmDrivers" -Publisher "Microsoft.HpcCompute" -Type "HpcVmDrivers" -TypeHandlerVersion "1.1"

For more information, see Virtual machine extensions and features for Windows.
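Because extensions can also be managed with Azure Resource Manager templates, the same HpcVmDrivers settings can be written as a template resource. The Python sketch below generates such a fragment. It is an illustration, not a verified template: the publisher, type, and handler version come from the PowerShell command above, while the function name and the `apiVersion` value are assumptions that you should check against the current template reference.

```python
import json


def hpc_vm_drivers_resource(vm_name: str, location: str,
                            api_version: str = "2023-03-01") -> dict:
    """Build an ARM template resource for the HpcVmDrivers extension.

    Assumption: api_version is an illustrative default, not a value from
    this article.
    """
    return {
        "type": "Microsoft.Compute/virtualMachines/extensions",
        "apiVersion": api_version,
        "name": f"{vm_name}/HpcVmDrivers",
        "location": location,
        "properties": {
            # Values below mirror the Set-AzVMExtension parameters.
            "publisher": "Microsoft.HpcCompute",
            "type": "HpcVmDrivers",
            "typeHandlerVersion": "1.1",
        },
    }


print(json.dumps(hpc_vm_drivers_resource("myVM", "westus"), indent=2))
```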

The RDMA network supports Message Passing Interface (MPI) traffic for applications running with Microsoft MPI or Intel MPI 5.x.

- Developers building GPU-accelerated applications for the NVIDIA Tesla GPUs can also download and install the latest CUDA Toolkit. For more information, see the CUDA Installation Guide.