Events

May 19, 6 PM - May 23, 12 AM

Calling all developers, creators, and AI innovators to join us in Seattle @Microsoft Build May 19-22.

Register today

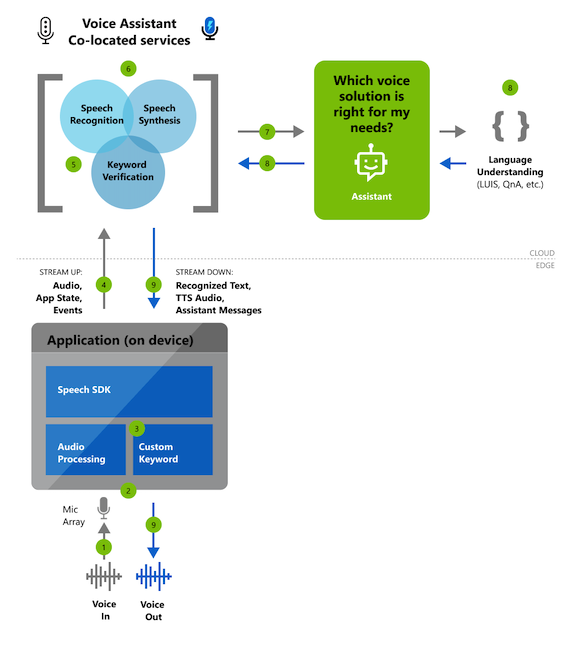

By using voice assistants with the Speech service, developers can create natural, human-like, conversational interfaces for their applications and experiences. The voice assistant service provides fast, reliable interaction between a device and an assistant implementation.

The first step in creating a voice assistant is to decide what you want it to do. Speech service provides multiple, complementary solutions for crafting assistant interactions. You might want your application to support an open-ended conversation with phrases such as "I need to go to Seattle" or "What kind of pizza can I order?"

Whether you choose custom keyword or another solution to create your assistant interactions, a rich set of customization features lets you tailor your assistant to your brand, product, and personality.

| Category | Features |
|---|---|
| Custom keyword | Users can start conversations with assistants by using a custom keyword such as "Hey Contoso." An app does this with a custom keyword engine in the Speech SDK, which you can configure by going to Get started with custom keywords. Voice assistants can use service-side keyword verification to improve the accuracy of the keyword activation (versus using the device alone). |
| Speech to text | Voice assistants convert real-time audio into recognized text by using speech to text from the Speech service. This text is available, as it's transcribed, to both your assistant implementation and your client application. |
| Text to speech | Textual responses from your assistant are synthesized through text to speech from the Speech service. This synthesis is then made available to your client application as an audio stream. Microsoft offers the ability to build your own custom, high-quality Neural Text to speech (Neural TTS) voice that gives a voice to your brand. |
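The three features in the table combine into a single conversational turn: keyword activation, then speech to text, then a synthesized reply. The following is a minimal conceptual sketch of that flow in plain Python. It does not call the Speech SDK. Every name in it (`detect_keyword`, `run_turn`, `echo_assistant`, `fake_tts`, `AssistantTurn`) is a hypothetical stand-in, and text is used in place of audio so that the example stays self-contained.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class AssistantTurn:
    """One completed turn. Hypothetical type, not part of the Speech SDK."""
    recognized_text: str  # what speech to text would hand to the assistant
    reply_text: str       # the assistant's textual response
    reply_audio: bytes    # what text to speech would return as an audio stream


def detect_keyword(transcript: str, keyword: str) -> Optional[str]:
    """Return the utterance after the keyword, or None if the keyword is absent.

    Assumption: a real keyword engine listens to audio on the device.
    Here a text transcript stands in for that audio.
    """
    if not transcript.lower().startswith(keyword.lower()):
        return None
    # Drop the keyword and any punctuation or spaces that follow it.
    return transcript[len(keyword):].lstrip(" ,.!")


def run_turn(
    transcript: str,
    keyword: str,
    assistant: Callable[[str], str],
    synthesize: Callable[[str], bytes],
) -> Optional[AssistantTurn]:
    """Run keyword activation, then the assistant, then synthesis."""
    utterance = detect_keyword(transcript, keyword)
    if utterance is None:
        return None  # no keyword, so the assistant stays idle
    reply = assistant(utterance)
    return AssistantTurn(utterance, reply, synthesize(reply))


def echo_assistant(text: str) -> str:
    """Placeholder assistant implementation that repeats the request."""
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    """Placeholder synthesizer: encodes text instead of producing audio."""
    return text.encode("utf-8")


if __name__ == "__main__":
    turn = run_turn(
        "Hey Contoso, what kind of pizza can I order?",
        "Hey Contoso",
        echo_assistant,
        fake_tts,
    )
    print(turn)
```

Passing the assistant and synthesizer as callables mirrors the separation the table describes between the client application, the assistant implementation, and the speech features. In a real application each placeholder would be replaced by the corresponding service call.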

Sample code for creating a voice assistant is available on GitHub at Azure-Samples/Cognitive-Services-Voice-Assistant.

Voice assistants that you build by using Speech service can use a full range of customization options.

Note

Customization options vary by language and locale. To learn more, see Supported languages.
