Copy data from an SAP table using Azure Data Factory or Azure Synapse Analytics

APPLIES TO:  Azure Data Factory

Azure Data Factory  Azure Synapse Analytics

Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

This article outlines how to use the copy activity in Azure Data Factory and Azure Synapse Analytics pipelines to copy data from an SAP table. For more information, see Copy activity overview.

Tip

To learn the overall support on SAP data integration scenario, see SAP data integration using Azure Data Factory whitepaper with detailed introduction on each SAP connector, comparsion and guidance.

Supported capabilities

This SAP table connector is supported for the following capabilities:

| Supported capabilities | IR |

|---|---|

| Copy activity (source/-) | ② |

| Lookup activity | ② |

① Azure integration runtime ② Self-hosted integration runtime

For a list of the data stores that are supported as sources or sinks by the copy activity, see the Supported data stores table.

Specifically, this SAP table connector supports:

Copying data from an SAP table in:

- SAP ERP Central Component (SAP ECC) version 7.01 or later (in a recent SAP Support Package Stack released after 2015).

- SAP Business Warehouse (SAP BW) version 7.01 or later (in a recent SAP Support Package Stack released after 2015).

- SAP S/4HANA.

- Other products in SAP Business Suite version 7.01 or later (in a recent SAP Support Package Stack released after 2015).

Copying data from both an SAP transparent table, a pooled table, a clustered table, and a view.

Copying data by using basic authentication or Secure Network Communications (SNC), if SNC is configured.

Connecting to an SAP application server or SAP message server.

Retrieving data via default or custom RFC.

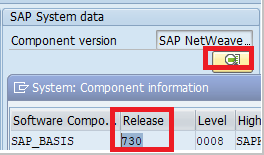

The version 7.01 or later refers to SAP NetWeaver version instead of SAP ECC version. For example, SAP ECC 6.0 EHP 7 in general has NetWeaver version >=7.4. In case you are unsure about your environment, here are the steps to confirm the version from your SAP system:

- Use SAP GUI to connect to the SAP System.

- Go to System -> Status.

- Check the release of the SAP_BASIS, ensure it is equal to or larger than 701.

Prerequisites

To use this SAP table connector, you need to:

Set up a self-hosted integration runtime (version 3.17 or later). For more information, see Create and configure a self-hosted integration runtime.

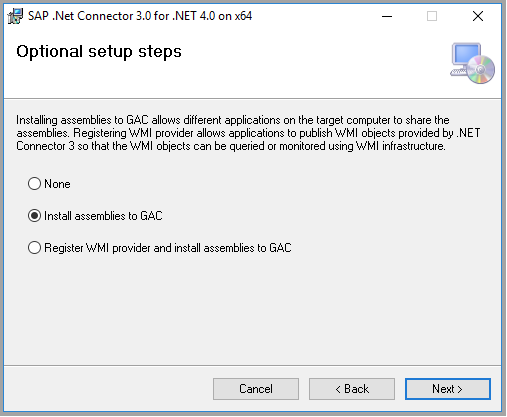

Download the 64-bit SAP Connector for Microsoft .NET 3.0 from SAP's website, and install it on the self-hosted integration runtime machine. During installation, make sure you select the Install Assemblies to GAC option in the Optional setup steps window.

The SAP user who's being used in the SAP table connector must have the following permissions:

- Authorization for using Remote Function Call (RFC) destinations.

- Permissions to the Execute activity of the S_SDSAUTH authorization object. You can refer to SAP Note 460089 on the majority authorization objects. Certain RFCs are required by the underlying NCo connector, for example RFC_FUNCTION_SEARCH.

Get started

To perform the Copy activity with a pipeline, you can use one of the following tools or SDKs:

- The Copy Data tool

- The Azure portal

- The .NET SDK

- The Python SDK

- Azure PowerShell

- The REST API

- The Azure Resource Manager template

Create a linked service to an SAP table using UI

Use the following steps to create a linked service to an SAP table in the Azure portal UI.

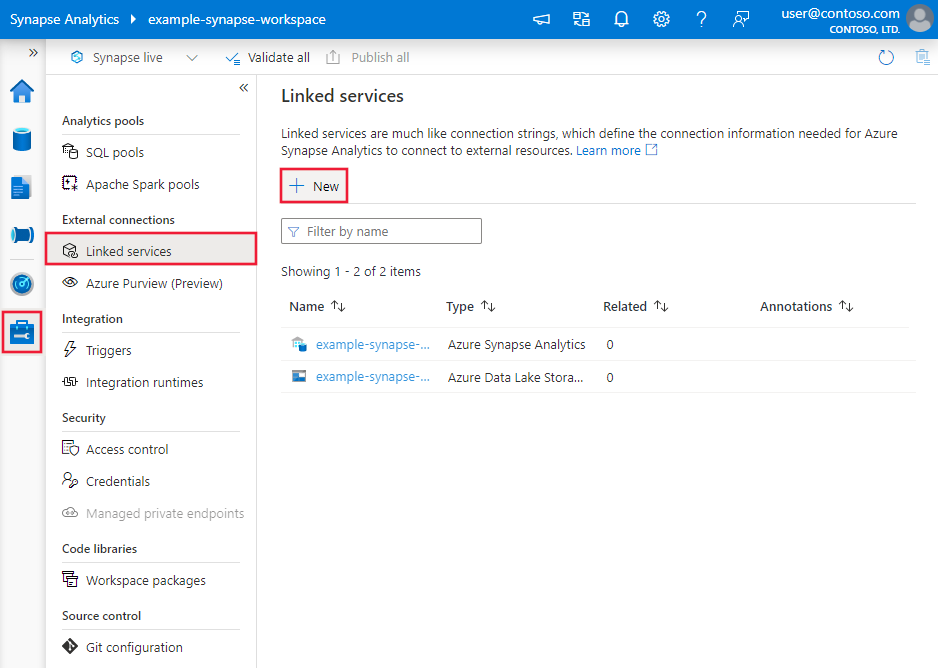

Browse to the Manage tab in your Azure Data Factory or Synapse workspace and select Linked Services, then click New:

Search for SAP and select the SAP table connector.

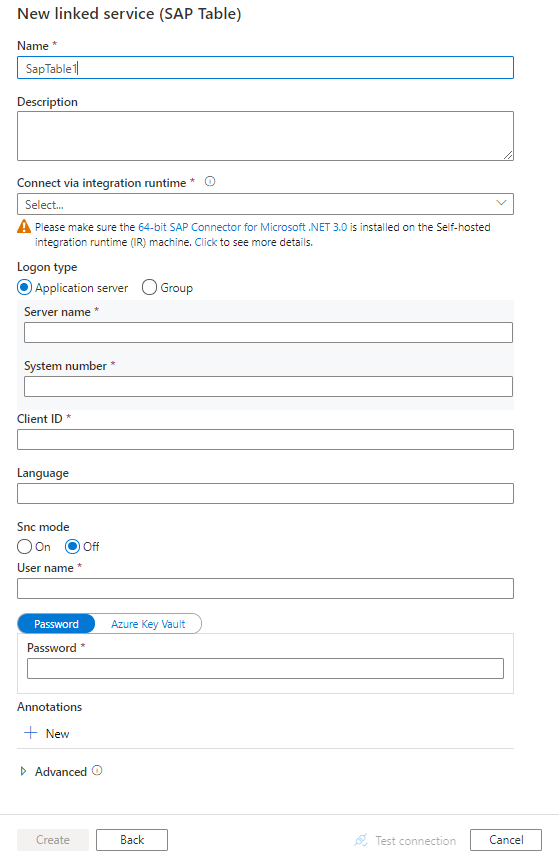

Configure the service details, test the connection, and create the new linked service.

Connector configuration details

The following sections provide details about properties that are used to define the entities specific to the SAP table connector.

Linked service properties

The following properties are supported for the SAP BW Open Hub linked service:

| Property | Description | Required |

|---|---|---|

type |

The type property must be set to SapTable. |

Yes |

server |

The name of the server on which the SAP instance is located. Use to connect to an SAP application server. |

No |

systemNumber |

The system number of the SAP system. Use to connect to an SAP application server. Allowed value: A two-digit decimal number represented as a string. |

No |

messageServer |

The host name of the SAP message server. Use to connect to an SAP message server. |

No |

messageServerService |

The service name or port number of the message server. Use to connect to an SAP message server. |

No |

systemId |

The ID of the SAP system where the table is located. Use to connect to an SAP message server. |

No |

logonGroup |

The logon group for the SAP system. Use to connect to an SAP message server. |

No |

clientId |

The ID of the client in the SAP system. Allowed value: A three-digit decimal number represented as a string. |

Yes |

language |

The language that the SAP system uses. Default value is EN. |

No |

userName |

The name of the user who has access to the SAP server. | Yes |

password |

The password for the user. Mark this field with the SecureString type to store it securely, or reference a secret stored in Azure Key Vault. |

Yes |

sncMode |

The SNC activation indicator to access the SAP server where the table is located. Use if you want to use SNC to connect to the SAP server. Allowed values are 0 (off, the default) or 1 (on). |

No |

sncMyName |

The initiator's SNC name to access the SAP server where the table is located. Applies when sncMode is on. |

No |

sncPartnerName |

The communication partner's SNC name to access the SAP server where the table is located. Applies when sncMode is on. |

No |

sncLibraryPath |

The external security product's library to access the SAP server where the table is located. Applies when sncMode is on. |

No |

sncQop |

The SNC Quality of Protection level to apply. Applies when sncMode is On. Allowed values are 1 (Authentication), 2 (Integrity), 3 (Privacy), 8 (Default), 9 (Maximum). |

No |

connectVia |

The integration runtime to be used to connect to the data store. A self-hosted integration runtime is required, as mentioned earlier in Prerequisites. | Yes |

Example 1: Connect to an SAP application server

{

"name": "SapTableLinkedService",

"properties": {

"type": "SapTable",

"typeProperties": {

"server": "<server name>",

"systemNumber": "<system number>",

"clientId": "<client ID>",

"userName": "<SAP user>",

"password": {

"type": "SecureString",

"value": "<Password for SAP user>"

}

},

"connectVia": {

"referenceName": "<name of Integration Runtime>",

"type": "IntegrationRuntimeReference"

}

}

}

Example 2: Connect to an SAP message server

{

"name": "SapTableLinkedService",

"properties": {

"type": "SapTable",

"typeProperties": {

"messageServer": "<message server name>",

"messageServerService": "<service name or port>",

"systemId": "<system ID>",

"logonGroup": "<logon group>",

"clientId": "<client ID>",

"userName": "<SAP user>",

"password": {

"type": "SecureString",

"value": "<Password for SAP user>"

}

},

"connectVia": {

"referenceName": "<name of integration runtime>",

"type": "IntegrationRuntimeReference"

}

}

}

Example 3: Connect by using SNC

{

"name": "SapTableLinkedService",

"properties": {

"type": "SapTable",

"typeProperties": {

"server": "<server name>",

"systemNumber": "<system number>",

"clientId": "<client ID>",

"userName": "<SAP user>",

"password": {

"type": "SecureString",

"value": "<Password for SAP user>"

},

"sncMode": 1,

"sncMyName": "<SNC myname>",

"sncPartnerName": "<SNC partner name>",

"sncLibraryPath": "<SNC library path>",

"sncQop": "8"

},

"connectVia": {

"referenceName": "<name of integration runtime>",

"type": "IntegrationRuntimeReference"

}

}

}

Dataset properties

For a full list of the sections and properties for defining datasets, see Datasets. The following section provides a list of the properties supported by the SAP table dataset.

To copy data from and to the SAP BW Open Hub linked service, the following properties are supported:

| Property | Description | Required |

|---|---|---|

type |

The type property must be set to SapTableResource. |

Yes |

tableName |

The name of the SAP table to copy data from. | Yes |

Example

{

"name": "SAPTableDataset",

"properties": {

"type": "SapTableResource",

"typeProperties": {

"tableName": "<SAP table name>"

},

"schema": [],

"linkedServiceName": {

"referenceName": "<SAP table linked service name>",

"type": "LinkedServiceReference"

}

}

}

Copy activity properties

For a full list of the sections and properties for defining activities, see Pipelines. The following section provides a list of the properties supported by the SAP table source.

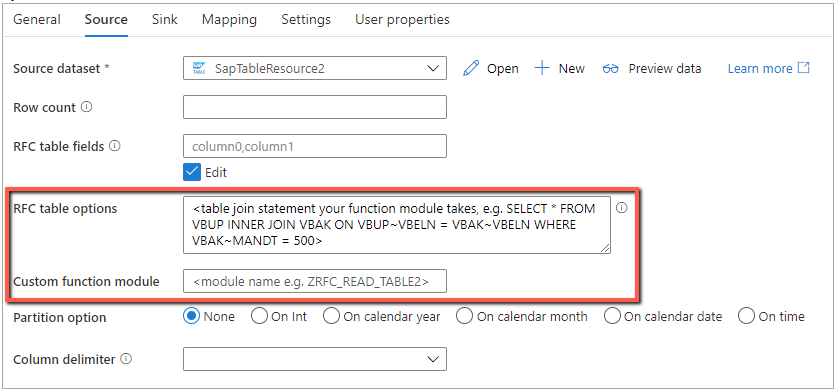

SAP table as source

To copy data from an SAP table, the following properties are supported:

| Property | Description | Required |

|---|---|---|

type |

The type property must be set to SapTableSource. |

Yes |

rowCount |

The number of rows to be retrieved. | No |

rfcTableFields |

The fields (columns) to copy from the SAP table. For example, column0, column1. |

No |

rfcTableOptions |

The options to filter the rows in an SAP table. For example, COLUMN0 EQ 'SOMEVALUE'. See also the SAP query operator table later in this article. |

No |

customRfcReadTableFunctionModule |

A custom RFC function module that can be used to read data from an SAP table. You can use a custom RFC function module to define how the data is retrieved from your SAP system and returned to the service. The custom function module must have an interface implemented (import, export, tables) that's similar to /SAPDS/RFC_READ_TABLE2, which is the default interface used by the service. |

No |

partitionOption |

The partition mechanism to read from an SAP table. The supported options include:

|

No |

partitionColumnName |

The name of the column used to partition the data. | No |

partitionUpperBound |

The maximum value of the column specified in partitionColumnName that will be used to continue with partitioning. |

No |

partitionLowerBound |

The minimum value of the column specified in partitionColumnName that will be used to continue with partitioning. (Note: partitionLowerBound cannot be "0" when partition option is PartitionOnInt) |

No |

maxPartitionsNumber |

The maximum number of partitions to split the data into. The default value is 1. | No |

sapDataColumnDelimiter |

The single character that is used as delimiter passed to SAP RFC to split the output data. | No |

Tip

If your SAP table has a large volume of data, such as several billion rows, use partitionOption and partitionSetting to split the data into smaller partitions. In this case, the data is read per partition, and each data partition is retrieved from your SAP server via a single RFC call.

Taking partitionOption as partitionOnInt as an example, the number of rows in each partition is calculated with this formula: (total rows falling between partitionUpperBound and partitionLowerBound)/maxPartitionsNumber.

To load data partitions in parallel to speed up copy, the parallel degree is controlled by the parallelCopies setting on the copy activity. For example, if you set parallelCopies to four, the service concurrently generates and runs four queries based on your specified partition option and settings, and each query retrieves a portion of data from your SAP table. We strongly recommend making maxPartitionsNumber a multiple of the value of the parallelCopies property. When copying data into file-based data store, it's also recommanded to write to a folder as multiple files (only specify folder name), in which case the performance is better than writing to a single file.

Tip

The BASXML is enabled by default for this SAP Table connector within the service.

In rfcTableOptions, you can use the following common SAP query operators to filter the rows:

| Operator | Description |

|---|---|

EQ |

Equal to |

NE |

Not equal to |

LT |

Less than |

LE |

Less than or equal to |

GT |

Greater than |

GE |

Greater than or equal to |

IN |

As in TABCLASS IN ('TRANSP', 'INTTAB') |

LIKE |

As in LIKE 'Emma%' |

Example

"activities":[

{

"name": "CopyFromSAPTable",

"type": "Copy",

"inputs": [

{

"referenceName": "<SAP table input dataset name>",

"type": "DatasetReference"

}

],

"outputs": [

{

"referenceName": "<output dataset name>",

"type": "DatasetReference"

}

],

"typeProperties": {

"source": {

"type": "SapTableSource",

"partitionOption": "PartitionOnInt",

"partitionSettings": {

"partitionColumnName": "<partition column name>",

"partitionUpperBound": "2000",

"partitionLowerBound": "1",

"maxPartitionsNumber": 500

}

},

"sink": {

"type": "<sink type>"

},

"parallelCopies": 4

}

}

]

Join SAP tables

Currently SAP Table connector only supports one single table with the default function module. To get the joined data of multiple tables, you can leverage the customRfcReadTableFunctionModule property in the SAP Table connector following steps below:

- Write a custom function module, which can take a query as OPTIONS and apply your own logic to retrieve the data.

- For the "Custom function module", enter the name of your custom function module.

- For the "RFC table options", specify the table join statement to feed into your function module as OPTIONS, such as "

<TABLE1>INNER JOIN<TABLE2>ON COLUMN0".

Below is an example:

Tip

You can also consider having the joined data aggregated in the VIEW, which is supported by SAP Table connector. You can also try to extract related tables to get onboard onto Azure (e.g. Azure Storage, Azure SQL Database), then use Data Flow to proceed with further join or filter.

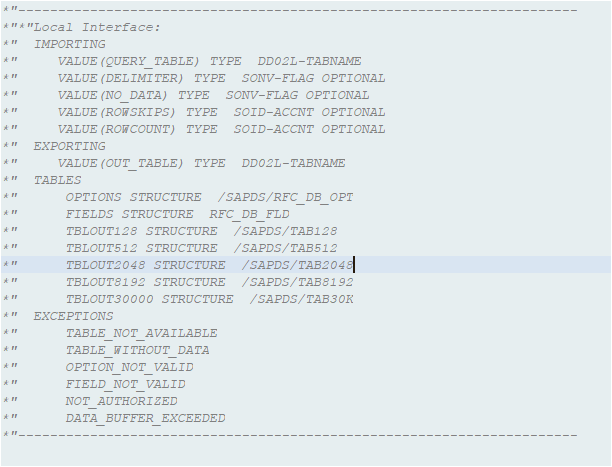

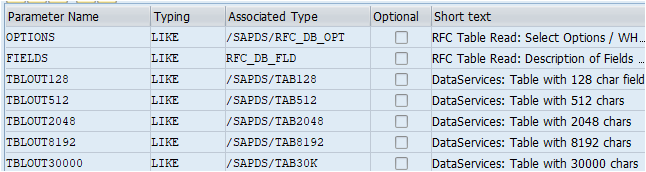

Create custom function module

For SAP table, currently we support customRfcReadTableFunctionModule property in the copy source, which allows you to leverage your own logic and process data.

As a quick guidance, here are some requirements to get started with the "Custom function module":

Definition:

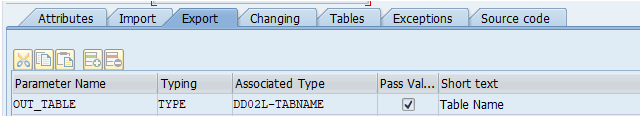

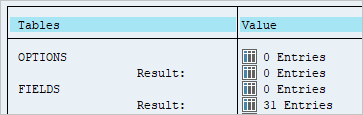

Export data into one of the tables below:

Below are illustrations of how SAP table connector works with custom function module:

Build connection with SAP server via SAP NCO.

Invoke "Custom function module" with the parameters set as below:

- QUERY_TABLE: the table name you set in the SAP Table dataset;

- Delimiter: the delimiter you set in the SAP Table Source;

- ROWCOUNT/Option/Fields: the Rowcount/Aggregated Option/Fields you set in the Table source.

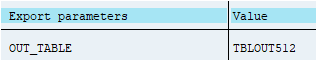

Get the result and parse the data in below ways:

Parse the value in the Fields table to get the schemas.

Get the values of the output table to see which table contains these values.

Get the values in the OUT_TABLE, parse the data and then write it into the sink.

Data type mappings for an SAP table

When you're copying data from an SAP table, the following mappings are used from the SAP table data types to interim data types used within the service. To learn how the copy activity maps the source schema and data type to the sink, see Schema and data type mappings.

| SAP ABAP Type | Service interim data type |

|---|---|

C (String) |

String |

I (Integer) |

Int32 |

F (Float) |

Double |

D (Date) |

String |

T (Time) |

String |

P (BCD Packed, Currency, Decimal, Qty) |

Decimal |

N (Numeric) |

String |

X (Binary and Raw) |

String |

Lookup activity properties

To learn details about the properties, check Lookup activity.

Related content

For a list of the data stores supported as sources and sinks by the copy activity, see Supported data stores.

Feedback

Coming soon: Throughout 2024 we will be phasing out GitHub Issues as the feedback mechanism for content and replacing it with a new feedback system. For more information see: https://aka.ms/ContentUserFeedback.

Submit and view feedback for